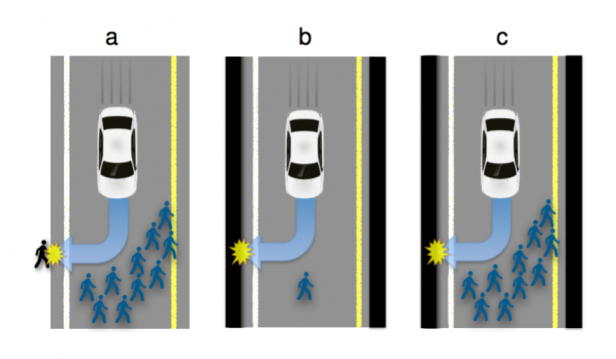

Self-driving cars are starting to pop up everywhere as companies slowly begin to test and improve them for the commercial market. Heck, Google’s self-driving car actually has its very own driver’s license in Nevada! There have been few accidents, and most of the time, they say it’s not the autonomous cars’ fault. But even when autonomous cars are widespread, there will still be accidents; it’s inevitable. And what will happen when your car has to decide whether to save you or a crowd of people? Ever think about that before?

It’s an extremely valid concern, and it raises a huge ethical issue. In the rare circumstance that the car has to choose the “best” outcome, what will determine that? Reducing the loss of life? Even if it means crashing into a wall, mortally injuring you, the driver? Maybe car manufacturers will finally have to make ejection seats a standard feature!

If minimizing the loss of life is the standard on all commercial autonomous vehicles, are consumers really going to buy a car that, with even a 0.00001% probability, might be programmed to kill them in order to save pedestrians? Would you even be able to make that choice if you were the one driving?

Well, a group of researchers decided to poll the public. And therein lies the paradox: people do think autonomous cars should sacrifice the needs of the few for the needs of the many… as long as they don’t have to drive one themselves. There’s actually a name for this kind of predicament. It’s called the Trolley Problem.

In scenario 1, imagine you are driving a trolley down a set of railway tracks towards a group of five workers. Your inaction will result in their deaths. If you steer onto the side track, you will kill one worker who happens to be in the way.

In scenario 2, you’re a surgeon. Five people require immediate organ transplants. Your only option is to fatally harvest the required organs from a perfectly healthy sixth patient, who doesn’t consent to the idea. If you don’t do anything, the five patients will die.

The two scenarios are identical in outcome. Kill one to save five. But is there a moral difference between the two? In one scenario, you’re a hero — you did the right thing. In the other, you’re a psychopath. Isn’t psychology fun?

As the authors of the research paper put it, their surveys:

“suggested that respondents might be prepared for autonomous vehicles programmed to make utilitarian moral decisions in situations of unavoidable harm… When it comes to split-second moral judgments, people may very well expect more from machines than they do from each other.”

Fans of Isaac Asimov may find this tough reading: when asked if these decisions should be enshrined in law, most of those surveyed felt that they should not. Although the idea of creating laws to formalize these moral decisions had more support for autonomous vehicles than for human-driven ones, it was still strongly opposed.

This does create an interesting grey area, though: if these rules are not enforced by law, who do we trust to create the systems that make these decisions? Are you okay with letting Google make these life and death decisions?

What this really means is that before autonomous cars become commercial, the public is going to have to make a big decision on what’s really “OK” for autonomous cars to do (or not to do). It’s a pretty interesting problem, and if you’re interested in reading more, check out this other article about How to Help Self-Driving Cars Make Ethical Decisions.

Might have you reconsider building your own self-driving vehicle then, huh?

[Research Paper via MIT Technology Review]

A film that explores this, based on a Twilight Zone episode that was itself based on a Richard Matheson short story:

https://en.wikipedia.org/wiki/The_Box_%282009_film%29

(It goes weird after the Twilight Zone punch line, but was interesting).

Spoiler Alert!

A wife is given a box with a button protected by a locked dome and a key, though there is no visible mechanism. The man who gives it to her says it is her choice to press the button. If she does, two things will happen. 1. She will receive $1 Million post tax. 2. Someone who she does not know will die.

In each story after playing around she eventually presses the button. In the Twilight Zone version the man brings the $1M, takes the box and says “it will be given to someone who you do not know”.

“Still, Captain Janeway does seem to succeed more than random chance would allow. I’ll factor it into my calculations.”

My take on this is pretty simple. I have been the driver in at least one situation where a computer would most certainly have been trying to pick the course of action with the fewest serious injuries or fatalities, and where I, as a flesh-and-blood computer, was able to avoid the collision entirely. Granted, it required maneuvering straight out of an action movie and blessed by the gods, but it did work, and I don’t think that computer-driven cars will have the capability to perform complex high-speed maneuvers like that for some time.

Of course, I would note that in all likelihood, the computer wouldn’t have gotten itself into that situation in the first place.

“what will determine that? Reducing the loss of life? Even if it means crashing into a wall, mortally injuring you, the driver?”

Yes, obviously. All things being equal, less life lost is obviously the better option. As it always is. You have to start inventing contorted and specific scenarios in order to justify killing more people to save an individual.

This is old hat. Asimov spent most of his career talking about scenarios like this.

Meanwhile in the real world the cars will just be coded to the letter of the law.

What concerns me more is what would happen if the sixth person is, say, the President of the USA, and the car knows it because its electronics are being instructed to stay away from him via hidden backdoors. That would result in the car hitting the group of other people every time, no matter how many of them there were.

This is not really an issue, and should never ever be one.

Collision avoidance, which is what this is about, requires the fastest possible detection and the fastest possible correct reaction. Time is critical and continuous. A delay in either detection time or reaction time risks changing an avoidable collision into an unavoidable one. No matter how fast a processor is, each line of code takes time to execute and creates a delay and increases the risk of a collision.

It would be bad engineering and an unacceptable trade-off to deliberately increase the risk of collisions and death to run code because it might make extremely unlikely collisions less catastrophic.

But where this becomes a truly insane discussion is the concept of software engineers programming machines to kill: being tasked with creating algorithms and determining the conditions and parameters that result in deliberately killing someone.

Regardless of what someone comes up with in a hypothetical discussion, it would never be acceptable to program any machine to determine when to kill, and no engineer should ever be tasked with doing so or trusted to get it right.

This article needs a panel “d” depicting the non-automated driver who, in a brief moment of indecision, plows into the crowd of people, killing 4 and injuring an additional 6.

As much as I love technology, mixing driverless cars with driven cars on highways, with pedestrians and everything, seems a poor choice in the presence of better choices. What’s wrong with rail? Why can’t our rail systems just be upgraded to have more stops, and perhaps dedicated rails for parcel delivery, if it’s so important that things be zooming around without someone in them?

I can envision various scenarios: a drunk person getting home without “driving” his car. (His car knows the way home.) This reminds me of stories of Amish buggies with a drunk man passed out on the floor that get home anyway because the horse knows its way home. It happens. Problem is, the horse doesn’t always know and obey the traffic laws, etc.

Are humans too stupid to drive their cars safely? It will be fantastic if it can be done correctly and safely, but good grief… I like to drive, so tell me why I would actually *want* to have my car out on the roads with nobody in it, or worse, with my children in it while the thing drives itself to school? Not. What about snow and other kinds of bad weather driving? Really, come on people. To driverless car proponents: if you think that many people are too dumb or too drunk to drive their own cars, maybe you should evaluate your circle of friends.

LOL, think of it this way:

When driver-less cars are readily available, you will be off the road anyway.

Keep Calm and Carry On.

The Correct Answer.

Knowing the system in question, a few people could jump in front of a car knowing the car would crash into the wall to avoid them.

The advantage of human behavior is unpredictable outcomes.

All AIs fail when you find the formula and can work the process.

That completely changes what it means to tell annoying people, “Go play in the street.”