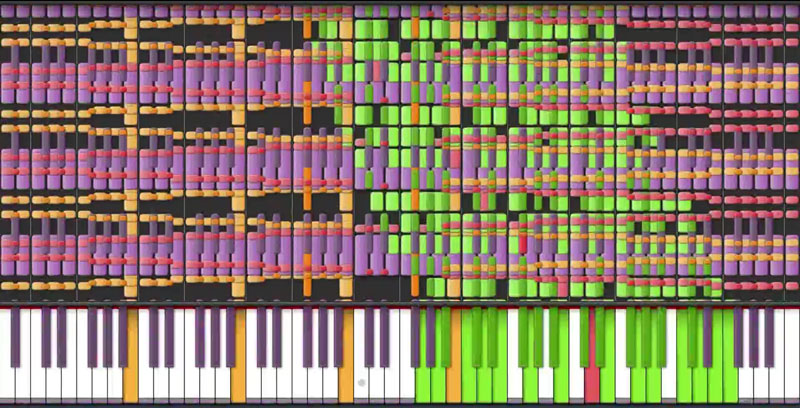

Imagine if you played all the keys on a piano at once. What would it sound like? Now imagine that you’d like to transcribe that music. What would it look like? So many notes that you could hardly see the paper underneath.

Which is why the people making such “impossible music” are calling themselves the Black MIDI Crew: if you wrote the music down, it’d look like a big black blob. Or at least, that’s the joke. Amazingly, though, it doesn’t sound like a big mess. Check out “Pi, The Song With 3.1415 Million Notes” below the break to see what we mean.

In the end, the impossible arpeggios and splatter-chords end up sounding surprisingly like chiptunes. Ironically, chiptunes used these fast-moving arpeggios as a perceptual workaround for the limited number of voices that they could play at a single time — playing the notes of a chord one after another fast enough that your brain almost gets tricked into thinking that they’re simultaneous.

We say “ironic” because in Black MIDI, they’re playing as many notes at a time as possible, and the insane arpeggios seem instead to be a way to put some musical structure into the chaos. Indeed, the Black MIDI pieces play so many notes at once that we can’t really perceive them as individual notes; they blend into a single synthetic timbre that sounds more like a chiptune than a piano. It’s a neat effect.

And in case you think that the effects have something to do with the synthesized “pianos” in use, have a look at this project from way back that used a solenoid-driven real piano as a vocoder. (I demand a mash-up!)

Play enough notes at once on a piano and it becomes hard to hear a “note” — you lose the concept of a fundamental frequency, and you just hear sound.

Thanks much to [jwcrawley] for the tip. Via Rhizome.org

The video has no relationship to Pi and the colored note sequences in the video have no real relationship with the sound that you hear.

Beautiful concept and excellent composition but unfortunately nothing to do with Pi.

Boohoo

It actually has… there are some visual representations of PI in the stream of descending notes, which in itself makes this a PI related music ;)

Even if that were the case … what you hear has very little to do with the descending notes you see.

I love how dismissive you are, but could you be more specific? Just throwing out “this isn’t real” doesn’t give us anything. Tell us why.

Isn’t every sinewave constructed using PI?

I just LOVE this! AMAZING!

no

Yeah, because “Pi” is called that because it comprises 3.14 million notes. And it’s close to pi seconds long.

And a really cool song.

Err… Pi minutes.

The only relation with pi claimed is that the song has 3.1415 million notes. So, well, why not.

And the colored note sequence is the exact representation of the sound. You forget that the visual sequence doesn’t represent the note intensity, it’s made clear at 2:30. The human brain isn’t good at discerning quiet sounds among louder sounds. Just because you can’t hear all the wiggly patterns doesn’t mean they aren’t played.

Seconded!

If a wiggly pattern falls in the forest, and no one hears it, does it make a sound?

Only if bear sh!ts on it.

If my vote counts, you win the internet today.

I think the vast majority of those colored notes are just extremely quiet so they show up as a “note” but they contribute nothing to the sound, only the visualization.

The song has 3.1415 million notes. I think that’s the relation to Pi.

You can download the midi and the tool from the description, why should he fake it?

It has a few relationships to Pi. Here are all of the things I did:

Created the song to be exactly 3:14:1 long

It contains 3,141,592 notes

(unrelated) Contains Morse code of the origin of Pi

One section has “3.1415” written in note form all across the screen

Here’s the real one: https://www.youtube.com/watch?v=BdUy70dh8LY

Amazing.

I bet that song would make a good bus test pattern.

There’s nothing “impossible” about experimental music. It’s actually a common discipline in modern music. There are also notations to represent “untypical” tonal intervals. These notations just don’t use the well-known five-line staff.

It’s MIDI limitations that create the arpeggio-like sound, so one could say that MIDI is responsible for “flaws” in the actual experiment.

Just thought about the microtonal scales, but also remembered how the actual midi is limited.

How long will it take to get a new version of the midi standard?

It already exists, just isn’t terribly popular: https://en.wikipedia.org/wiki/Open_Sound_Control

Open sound control is not the next version of MIDI, it’s an alternative.

The next version of MIDI is so-called “HD MIDI” (ugh), which is supposedly nearing completion, but since it has been so long since they started working on it there is no guarantee that the industry will pick it up.

Many synthesizers can only synthesize a limited number of notes at the same time. They decide which ones are important and ignore the rest. On the C64, the “limited number” was 3. Modern synthesizers will go much higher, but I doubt they can handle the “black blobs” in the video. I suspect that this is a reason you don’t hear just a “hiss” during those passages (which is what you’d hear if all notes are played at once).

64-voice polyphony is not uncommon in the synth world, and even 128 voices, so I think it’s doable.

Generally, rendering of these black MIDIs is not done in real time – it is done with software synthesizers with no limit to how many simultaneous notes they can play.

Reminds me a bit of this: https://www.youtube.com/watch?v=tCRPUv8V22o

“music” generated with very simple C (and JavaScript) one-line functions

This is pushing me towards audio again which is probably a good thing. I watched many of these and they fall into two categories – creators that are very … well .. creative and second creators that have a very ‘sound’ understanding of … well … sound.

I want to demonstrate what people have forgotten about the ‘human’ perception of sound and in the modern world that is something that requires a very mathematical approach.

Lucky me, I’m very good at maths and I can program maths well.

Leading me back to this article’s central point … Pi. Pi is the number of times the diameter goes into ‘one’ circumference. The ONE circumference is significant because with unity the error is zero, i.e. it is ONE circle plus or minus nothing, and yet we choose to express this with a constant that has the greatest error through its inappropriate application in decimal.

Here’s something for you, those who write maths engines: what is Pi to the base Pi? Yeah, error problem solved.

One circle plus or minus a slight difference is what makes phasing and harmonics, and this is all about what is music. No error makes for no music :)

I’m pretty sure the central point of the article isn’t Pi.

Anyway, most of us get along quite well with just 3 1/4. Maybe if I were sending a rocket to mars, it would matter. Now tell us all of your amazing thoughts about floating point. Is it a compression algorithm or no? Discuss.

Try 355/113 for a better but not terribly more complicated approximation :)
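For anyone who wants to see how much each of those fractions actually buys you, here is a minimal sketch that compares the approximations mentioned above against `math.pi`. The helper name `relative_error` is made up for illustration, and `math.pi` is itself only a double-precision value, so treat the figures as approximate.

```python
import math
from fractions import Fraction

def relative_error(approx: Fraction) -> float:
    """Relative error of a rational approximation, measured against math.pi."""
    return abs(float(approx) - math.pi) / math.pi

# Approximations taken from the comments above, plus the classic 22/7.
candidates = {
    "3 1/4": Fraction(13, 4),
    "22/7": Fraction(22, 7),
    "355/113": Fraction(355, 113),
}

for name, value in candidates.items():
    print(f"{name:>8} = {float(value):.8f}  relative error {relative_error(value):.2e}")
```

The ordering is the interesting part: each step down the list costs only a few more digits to remember but shrinks the error by orders of magnitude.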

Floating point, with its mainstream introduction as an 8087 chip, was the death of math for common use.

It’s amazing that even while computers use base two, we still calculate in decimal as though we were counting our fingers. This is really how archaic this is! When I see decimal being used for science, I imagine a monkey counting his fingers.

Floating point is *all* about taking values on a computer with masses of memory and reducing their resolution to save memory. Using floating point on a computer today is akin to taking an abacus when you go shopping to calculate costs.

The first thing floating point killed was error calculation. Is that 5.something volts, is it 5.0something volts, or is it 5.00 volts plus or minus 10%?

These numbers are not the same: 1, 1.0, 1.00, 1.0000. Well, unless you use floating point.

Calculate 22/7 on your floating point computer and then tell me the error margin. That’s right, it takes more to calculate the error than to calculate 22/7 in the first place, so there go your precious saved bytes.

Nothing in this world is restricted to base 10 except the imagination of one species that has 10 digits on it’s hands.

Is it pedantic that my only thought after reading that rant is, “You mean ‘its’, not ‘it’s’”?

1. Floating point numbers are stored in binary, not in base 10, so I don’t understand why you are ranting against base 10. IEEE 754 (the most common floating point format) is really nothing more than a binary version of scientific notation, built to fit within a certain number of bits. You store the sign of the number, a fraction (in binary), and an exponent. The number is simply fraction*2^exponent.

2. Floating point numbers are not intended to save on storage space. They are designed to make the math quick and efficient. It is not about “precious saved bytes”. They are constrained to a certain number of bits because of how processors work. A 32 bit processor, for example, does very fast math on a 32 bit floating point number.

3. 1, 1.0, 1.00, 1.0000 are most certainly the same number. The extra zeroes simply tell you to how many decimal places you can trust your calculations. But it’s not the job of floating point to keep track of that information. It is, however, trivial to track it yourself, just like you would if you did the math by hand.

4. Yup. It is a bit harder to calculate errors. But not that much. And the relative error with floating point numbers is always low. It’s designed that way.

5. There actually aren’t any things in the world that are particularly restricted to any base. And we do use other bases when appropriate, like we do with binary in computers.
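To make point 1 concrete, here is a rough sketch that pulls the sign, exponent and fraction fields out of a 32-bit IEEE 754 value using Python’s `struct` module, then rebuilds the number from them. The function names `decode_float32` and `rebuild` are invented for this example, and `rebuild` only handles normal values (no zero, subnormals, infinities or NaN).

```python
import struct

def decode_float32(x: float):
    """Split a value, stored as IEEE 754 single precision, into its three fields."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                   # 1 bit
    exponent = (bits >> 23) & 0xFF      # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF          # 23 stored fraction bits
    return sign, exponent, fraction

def rebuild(sign: int, exponent: int, fraction: int) -> float:
    """Reassemble a normal value: (-1)^sign * 1.fraction * 2^(exponent - 127)."""
    significand = 1 + fraction / 2**23  # the leading 1 is implicit, not stored
    return (-1) ** sign * significand * 2.0 ** (exponent - 127)

sign, exponent, fraction = decode_float32(0.15625)
print(sign, exponent, fraction)
print(rebuild(sign, exponent, fraction))
```

The value 0.15625 is 1.25 × 2^-3, so it is exactly representable and the round trip is lossless; most decimal fractions would not survive the trip unchanged.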

Are there gotchas to IEEE 754 floating point numbers? Sure! But as long as you’re aware of them, you can work around them easily. And you are free to write your own software that does all the math and storage its own way, to bypass these limitations, but at that point you lose out on all the hardware accelerated math-goodness.

Now the real question is, why on Earth did I bother spending this much time writing this post out? I’m just going to be met with hostility. C’est la vie.

1) For all your ranting about base 2 and scientific notation, you then summarise with fraction*2^exponent, and it seems that you are suggesting this is to the base of 2 (i.e. binary). However, scientific notation is specifically in base 10, just like your calculator, i.e. 1.0000000 E +02 => 100.

2) You say that floating point isn’t intended to save space but is for speed. Well, it isn’t any faster; in fact it’s slower to work with floating point when you need high precision. Give me the first 100-digit prime number with floating point, and no, 1.2387637678 E+99 is not a prime number. As for saving memory: well, you haven’t had to write math routines when precision is required, otherwise you would realise that it’s not unusual for a variable to be over 1 kByte, and even larger in extreme cases.

3) Wrong. 1 is a number that could be anything from 1 to 1.999…, and 1.0 is a number that could be anything between 1.00 and 1.0999…, etc. This is why crystals have all the zeros: they’re specifying an error range.

4) A bit harder!!! Tell me the error of Pi^2/Pi in base 10. Now tell me what the error is in base Pi … um, zero, nothing, zilch.

5) well perhaps you can think that we use different bases where appropriate and then you give examples of only when the base is an integer. Um nature isn’t so ordered.

Now I *do* understand that floating point is useful for the common things you do. I hope you can understand that the things that you commonly do are not common of their own accord – they are common because of what technology can do (floating point) is common and because of the hardware *commonly* available. If someone were to endeavour to do something that is more complex than floating point is good for then they will most probably abandon this endeavour because it is so much harder to code maths routines than it is to choose one of the gazillion pre-written platforms that work with floating point.

There is a whole world of math out there that is completely invisible to people who have been blinkered by floating point. We don’t have to say … the answer is n plus or minus x. With existing technology we can say the answer is precisely n … but instead we sit here like monkeys counting our fingers, so when we are asked what is three times one third, we respond 0.999….
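As one illustration of the “precisely n” idea, exact rational arithmetic is already in most standard libraries. The sketch below uses Python’s `fractions.Fraction`; it is only an example of the general technique, not necessarily what the commenter has in mind, and it does nothing for irrational values like Pi.

```python
from fractions import Fraction

# Three times one third, kept as an exact ratio of integers.
third = Fraction(1, 3)
exact = 3 * third
print(exact)          # the result is stored as numerator/denominator, not rounded
print(exact == 1)

# The same kind of sum in binary floating point picks up a rounding error.
tenth_sum_float = 0.1 + 0.2
tenth_sum_exact = Fraction(1, 10) + Fraction(2, 10)
print(tenth_sum_float == 0.3)
print(tenth_sum_exact == Fraction(3, 10))
```

The trade-off is the one argued over above: the exact version never loses information, but numerators and denominators can grow without bound, so it costs more memory and time than a fixed-width float.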

It’s rather amazing how beautiful things can be created or brought forth with the right combinations of otherwise mundane items.

https://www.youtube.com/watch?v=dsU3B0W3TMs

I like my classical music

https://www.youtube.com/watch?v=cJsyMmC76aM

It’s easy to transcribe all notes being played at once.

It’s called a cluster: https://en.wikipedia.org/wiki/Tone_cluster

It’s not that it can’t be represented, it’s that the result is a solid wall of notes that are completely useless for actually reading by a human, and even if you could read them they couldn’t feasibly be played.

That there was anyone, let alone multiple people, who took “impossible music” literally despite the this-is-figurative quotes is astonishing to me.

Sidenote: this genre reminds me of “Faerie’s Aire and Death Waltz.”

It’s looking a lot like the Rock Band song from hell. It certainly doesn’t sound as chaotic as it looks on screen.

This music pretty much started with touhou.

https://youtu.be/tds0qoxWVss

https://youtu.be/IltRgZW2Jcw

One of the ideas was to make the music similar to the game.

http://img.photobucket.com/albums/v488/the_foreign_guy/danmaku.jpg

https://www.youtube.com/watch?v=YysVxA5rnIY

And closing the art-life-imitation circle;

http://youtu.be/Iankyw47vqY

Even in MY hackaday, Touhou everywhere!

Hey, Touhou is a pretty sweet set of games if you like a challenge.

*points to name* xD

The strongest. =D

There is one automated way to do this sort of thing with any sound source.

Complex waveform to FFT to genetic programming algorithm to midi

You split the sound into bands and then find the midi note, envelope and instrument combination sequence to match a given band, then play all the bands at the same time.

Just imagine if Salvador Dali invented the MP3 algorithm and you’ll get an idea of what the midi file will look like. :-)
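The simplest piece of that pipeline, turning one frequency band into a MIDI note and velocity, can be sketched as below. This covers only the band-to-note mapping; the FFT and the genetic search over envelopes and instruments are left out. The function names are hypothetical, the tuning assumes A4 = 440 Hz, and the linear amplitude-to-velocity mapping is an arbitrary choice for illustration.

```python
import math

A4_FREQ_HZ = 440.0    # assumption: standard concert pitch
A4_MIDI_NOTE = 69     # MIDI note number conventionally assigned to A4

def freq_to_midi(freq_hz: float) -> int:
    """Nearest equal-tempered MIDI note for a frequency, clamped to 0..127."""
    note = round(A4_MIDI_NOTE + 12 * math.log2(freq_hz / A4_FREQ_HZ))
    return max(0, min(127, note))

def magnitude_to_velocity(magnitude: float, peak: float) -> int:
    """Map a band magnitude to a velocity in 1..127 (linear scaling, an assumption)."""
    if peak <= 0:
        return 1
    return max(1, min(127, round(127 * magnitude / peak)))

# Made-up (frequency in Hz, magnitude) pairs standing in for FFT band peaks.
bands = [(261.63, 0.8), (440.0, 1.0), (1318.5, 0.25)]
notes = [(freq_to_midi(f), magnitude_to_velocity(m, 1.0)) for f, m in bands]
print(notes)
```

Because every band snaps to the nearest semitone, anything between two keys is lost unless pitch bend or a finer search is layered on top, which is where the optimisation step would earn its keep.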

This has been done already.

https://www.youtube.com/watch?v=oPG-VhU6Kiw

I wonder if they try to subtract harmonics of piano notes to take that into account in higher bands.

Got a URL or reference to a paper? Not sure what you mean by subtract but the midi notes are additive, then again if you are using the rendered audio file from the midi to teach your genetic algorithm it should take into account the effect of interference between individual waveforms to give you both additive and subtractive synthesis. All it cares about is that it finds the simplest combination of midi parameters that gives the closest output match to the original input signal.

Never mind I found the guy’s site, http://ablinger.mur.at/speaking_piano.html and it is not the same thing, just a similar idea. Perhaps you can see that now from the explanation above. A piano could not render the file produced by the method I described as the method depends a lot on the timbre relationships of all the instruments used by the midi renderer used to train the GA that writes the midi file.

In this video he says he needs a ‘real’ piano to do this so there must be some limitation with Midi that prevents this.

I’m not an expert at MIDI, but I know enough to know there is no limit if your MIDI renderer is a powerful enough instrument to do real-time playback of enough notes across enough channels, that is, if it is both sufficiently polyphonic and polytimbral. All you are doing is finding the set of wavelets existent in a set of voices that is required to generate a waveform that is close enough to the original to be acceptable, with the complexity of the midi file and the fidelity of the imitation being traded off against each other. So the results fall into a 2D space, meaning that a simple “it can’t work” is always false because it is just a question of how well it works.

I’ve just had a go at doing this myself. Have a look at the results. TSAudioToMidi then played back in Reason 5

https://youtu.be/yHztsURc2fQ

Nice, a link back to HAD on the vid page would be sweet.

That chiptune-like “laser blast” sound is what you get when pressing very many adjacent keys at once. Though I don’t know whether it occurs on real pianos. I guess it’s because of perfect tuning and timing. I don’t even know how to test it…

Not just at once, but many times at once (like PWM?). If you notice, some notes are played with solid bars and some are segmented/dashed.

I swear I saw some alpha-numerics there somewhere.

Conlon Nancarrow had some similar approaches to composition… Except he did it without the assistance of computers. Just hot-rodded some old player pianos and built a tool to punch sheets for the pianos to create music that would’ve otherwise taken years to compose by hand

How these songs could be played by people using actual instruments.

First off, build a 1/10th piano that has just 10 keys. Then build a lot more of them so there are multiple coverings of the full scale. If you want to keep it to just 88 notes then some will only have 8 keys.

Next, get some Google Glasses or similar HUD devices.

Third, break up the notes at rates it’s humanly possible to play so that the super fast arpeggios get interleaved across two or more players. Skip the 8 key pianos to give some notes even more spread.

The HUDs keep all the players in sync. It would make for a rather unusual stage show.

They call that an orchestra.

Oh my FSM people, do you get all P-Oed when you order death by chocolate at a restaurant and don’t die immediately after consuming it? Do you shout insults at people who finish marathons and don’t immediately expire?

Does anybody know how many simultaneous notes the physical MIDI protocol can handle?

Yes, you do, tomorrow, after reading the specs, http://www.midi.org/techspecs/

It’s pretty simple: MIDI uses separate commands for “Note On” and “Note Off”. You can send MIDI messages to turn all notes on – they are independent of each other. How many simultaneous notes a particular MIDI device is capable of playing is another thing entirely.
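For the curious, here is roughly what those two commands look like on the wire, as a small sketch that only builds the raw bytes and sends nothing. The helper names `note_on` and `note_off` are made up; the byte layout (status byte 0x9n or 0x8n, then a 7-bit key number and a 7-bit velocity) is the standard MIDI 1.0 channel voice format.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status 0x90 + channel, then key number and velocity (7 bits each)."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])

def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status 0x80 + channel, then key number and release velocity."""
    return bytes([0x80 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])

# Turning on every key of one channel is just 128 independent messages.
all_on = b"".join(note_on(0, n, 100) for n in range(128))
print(len(all_on), "bytes to switch on all 128 notes of channel 1")
print(note_on(0, 60, 100).hex(), note_off(0, 60).hex())
```

Nothing in the message format caps how many notes are sounding; a note simply stays on until the matching Note Off arrives, so the ceiling is set by the receiving instrument.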

Max notes x Max Channels is about 2000, but max messages per sec (old midi hardware limit) is about 2000, so you can have 200 voices updating 10 times a second. That is still a hell of a lot of “sonic brushes” to paint any sound. The trick is to use the GA to extract out all the right parameters to get the closest match possible, rather than just FFT to get bands and simple notes controlled at a high rate, but as people have demonstrated that can be very impressive too.
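A back-of-envelope check of those figures, assuming the classic 5-pin DIN serial link: 31,250 baud with one start bit and one stop bit around each 8-bit byte. The variable names are just for this sketch. With those assumptions the message rate comes out nearer 1000 to 1500 per second than 2000, so the same order of magnitude, but the voices-times-update-rate budget is about half as generous.

```python
BAUD = 31_250          # MIDI 1.0 serial rate in bits per second
BITS_PER_BYTE = 10     # 8 data bits plus one start bit and one stop bit
NOTES = 128            # key numbers 0..127
CHANNELS = 16

bytes_per_second = BAUD / BITS_PER_BYTE

# A full Note On is 3 bytes; with running status the status byte is omitted.
full_msgs_per_second = bytes_per_second / 3
running_status_per_second = bytes_per_second / 2

print("addressable notes:", NOTES * CHANNELS)
print("bytes per second:", bytes_per_second)
print("3-byte messages per second:", round(full_msgs_per_second))
print("2-byte (running status) messages per second:", round(running_status_per_second))
print("voices updatable 10x per second:", int(full_msgs_per_second // 10))
```

None of this applies to a file rendered offline by a software synthesizer, where the serial bottleneck never enters the picture.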

Oh nice, thanks, that’s better than Wikipedia’s explanation

I read these specs and the answer seems to be – Notes: 128, Simultaneous: none

http://hackaday.com/2015/11/12/black-midi-there-is-no-denser-music/#comment-2793895

Pro tip for the makers: MIDI can do more than the default instrument and you can get better sound fonts than the cheapest lousiest synthesized one bundled with stuff.

Traditionally, black midi uses only piano. And some of the many soundfonts I have are up to 300mb – 3gb in size, and those soundfonts tend to glitch out when playing black midis. Most of us choose to use soundfonts right in the 60 – 200mb range. As for my videos, it is rendered audio from FL Studio 11

The only thing I couldn’t do was make the tempo 314BPM. That’s wayyyy too fast haha!