In 1971, a non-profit formed that holds the World Economic Forum each year. The Forum claims it “Engages the foremost political, business and other leaders of society to shape global, regional and industry agendas.” This year, the Forum hosted a session: What If: Robots Go To War? Participants included a computer science professor, an electrical engineering professor, the chairman of BAE, a senior fellow at the Vienna Center for Disarmament and Non-Proliferation, and a Time magazine editor.

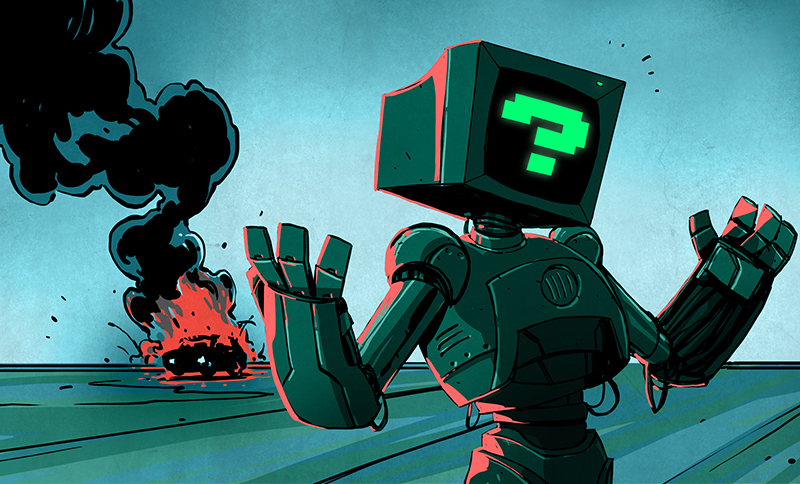

We couldn’t help but think that the topic is a little late: robots have already gone to war. The real question is how autonomous are these robots and how autonomous should they be? The panel, as well as the Campaign to Stop Killer Robots, saw the need for human operators to oversee LARs (Lethal Autonomous Robots). The idea is that autonomous killing machines can’t resolve the moral nuances involved in warfare. That’s not totally surprising since we aren’t convinced people can resolve moral nuances, either.

Besides that, not every killing machine goes to war. As [James Hobson] pointed out last year, self-driving cars (that are coming any day now, they tell us) may have to balance the good of the few vs. the good of the many, a logical dilemma that gave even Mr. Spock trouble.

Speaking of which, we talked about the moral issues surrounding autonomous killing drones before. Discussions like the one at the World Economic Forum (see the video below) are thought-provoking, but perhaps not practical. History shows that if something can be built, it will be built. Those of us who might design robots (killing or otherwise) need to exercise good judgment, but realistically we are a long way from having robots smart enough to handle the totally unexpected or moral gray areas.

Humans have used autonomous killing machines even before the invention of fire. Anybody who played Montezuma’s Revenge or watched Indiana Jones can confirm this. Nothing has really changed since, whether they are snares, spiked pits, huge rolling boulders, landmines or drones.

One thing is for sure. I would never want to live anywhere near a place where those are deployed, no matter what their owners say about their safety and/or morality.

I think you’re missing a distinction here – a fixed automated device that kills anything coming near it is one thing, but an autonomous and mobile device like an airborne drone (or, let’s go there, Terminator or Robocop) is very different.

If you don’t want to be killed by #1 just don’t go where you’re not supposed to be. But #2… well, you need a shitload of failsafes and whatnot before you let that thing loose with free agency over where to go and what to kill.

I wouldn’t want to live near any of them either, but that may not matter if one of “them” is a flying drone with 1000 mile range and a software bug. Could even be another country’s thing gone wrong, that’s a fine way for WWIII to get started.

Hi – aerial robotics researcher here. Nobody seriously considered building drones with autonomous fire permission. Nobody. People who talk about that as a realistic possibility are ignorant or have a book to sell. Both politically and militarily, autonomous drones with fire authority are non-starters.

My understanding is that it’s against the Geneva Convention. There has to be a human somewhere pulling the trigger. The reason is so that there’s a chain of command for attribution of responsibility in case of war crimes.

The fact that they’re discussing a settled issue is unnerving, however.

The Korean border already has “Samsung” autonomous killing machines.

The historical power of the Geneva Convention is very limited, as nation states choose when they do or do not comply with it.

I found a BBC article that claims that while the Samsung turret will autonomously identify and track targets, it will not fire until a person enters a password and manually enables firing capability.

http://www.bbc.com/future/story/20150715-killer-robots-the-soldiers-that-never-sleep

Interestingly, according to the article, Samsung initially included autonomous firing capability, but customers demanded the safeguards. Go figure. :)

I don’t think that putting a human that presses a button when the computer tells him to really solves anything here.

It does. The human will be to blame. Can’t put a computer on trial. Yet…

Just like your modern day McDonald’s cashier wouldn’t be held responsible for the cash register telling them the wrong change to give the customer. When machines provide bad information, we blame the machine, not the person acting on that information.

WW3 was averted in several instances by humans refusing to believe computers, particularly humans in control of Russian ICBMs. “Human In The Loop” is the gold standard for weapons control.

And humans can be a witness to a trial.

@AD Homined the coder that wrote the software gets the blame (unless he can shift it to the hardware guys :) )

“Hi – aerial robotics researcher here. Nobody seriously considered building drones with autonomous fire permission.”

While that may be, “smart” weapon systems – including missiles that are fired in the direction of an enemy and then identify a convoy and choose which vehicles to target – have been around for quite a while. There were sensor/software packages for the AGM-64 that identified, prioritized and selected targets based on visible light and IR image data back in the 80s.

There was a reason all allied vehicles in Desert Storm had an inverted chevron on their sides – even if it was made out of duct-tape on the sand-rails. It wasn’t for camaraderie, it was so the machine vision would disqualify them as targets.

“Nobody seriously considered building drones with autonomous fire permission”

The Nazis did during WWII.

https://en.wikipedia.org/wiki/V-1_flying_bomb

The V1 was designed to detonate once it had traveled a predetermined distance, so the device did not make any autonomous decision. Those that did not detonate mostly had that decision made for them by British fighter pilots or anti-aircraft gunners.

That’s a bit of a stretch. The primitive auto pilot is impressive, but to call that “autonomous fire permission” would define any time delay mechanism as autonomous. Even a mouse trap fits this definition.

Technically the person that pressed the launch button already made the decision, as the autopilot was pre-programmed for its target.

I don’t think it is a stretch.

I mean, technically, someone pressed the power button on T-1000 also…. Right?

@Noirwahl

This definition isn’t a very useful one. Using this definition, a deadfall, snare, string of dominos, and an alarm clock are just as ‘autonomous’ as a turret hooked up to facial recognition software and a library of wanted posters. It glosses over the gigantic gap in complexity between a mousetrap and a T-1000.

There’s a subtlety between obeying the laws of physics, or complex programming, and finally true, self-generating, AI autonomy. I contend that the early ICBMs are closer to a mousetrap than a T-1000 (which, in-universe, may not have been turned on by a human).

To get controversially philosophical: at some level raw determinism gives the effect of free will, and whether we should allow robots to have it is the question.

Super naive statements.

If you work in the military industrial complex, you wake up each day with that weight on your shoulders. You have responsibility, and part of it is to be realistic.

There are powerful forces that would LOVE to put autonomous police and military units out there. These are men who fear mutiny at least as much as they fear any quasi-enemy they prop up just to justify their own positions.

Seriously, how would you know? Maybe you mean “nobody you know” or “nobody in the autonomous aerial drone research community”? If you had a security clearance high enough to know, we shouldn’t believe a word you say anyway.

I know because I know pretty well the people who would be building these things and we’ve discussed the logic of it at length. Everything from the practicality of providing initial fire authority (outside of your own airspace) robustly, to preventing jamming of a turn-off signal to spoofing of C4 signals, to the political ramifications of it going wrong – it’s a non-starter.

If they ARE building autonomous killbots, they’ve been very very good at hiding it because the required engineering for autonomous target selection good enough to be realistically deployed is far ahead of what the robotics and AI community can currently do. Also, all the fingerprints of the smart alternatives are out there if you know where to look – directional strong encrypted short-range communications for constellations of aircraft, amongst others.

I’m happy to discuss this with you if you like: paul.pounds@gmail.com

I’d love to believe all that you say, Paul. The problem is that you are talking about NOW and not a “time of war”. In a “time of war” all the rules get bent or broken.

History has strongly demonstrated that humans will stoop to the lowest of lows.

Some may say that things are different now and I agree that “things” are indeed different, however human nature does not change and remains the same today.

That’s actually a scary insight: WWIII might start due to a software bug in some killing machine.

OMG Software engineers are potential mass murderers!

We should ban them and stop them from putting in nefarious code into our peacekeeping serfs.

(rhetoric++)

As long as you keep paying them to stop the mayhem I’m all good.

All of the nuclear ICBMs have computers in them. Computers transmit and verify the launch codes. Computers operate the electronically-steered radar arrays that are supposed to detect incoming enemy ICBMs. Computers run the satellites that are used to make sure there are no ICBMs being launched. Computers would most likely be used to give the leaders evidence of enemy attack (and the need to push the button)…

Your fears are misplaced.

Lol… If you really think citizen fears about autonomous and robotic “policing” and “Warfare” are misplaced, you have never actually confronted the sad truth about authoritarian and war industries.

These are not people working for good. These are people making as much $ as they can in the only way their jilted brains allow: harming other humans.

Hold on, I think I just saw 99 red balloons out my window…

I don’t suppose it’s an important distinction, actually. Whether it’s a trap that kills anybody who doesn’t know how to avoid or disarm it, or a drone that kills anybody who qualifies based on its software, the result is the same — you have created a danger to the public. Yes, the range of it is an important parameter, and it is scary to see that range grow with technology changes, but the difference is quantitative, not qualitative. It’s still no different than laying remote-activated landmines under all your roads, for instance. Or rigging all your buildings for demolition, just in case. The result is life in a warzone, with a ticking bomb under your bed. Somehow those “security measures” don’t really make me feel secure.

Right, dumb or smart, automated killing of humans is problematic.

MAD (mutually assured destruction) was the ultimate autonomous killer app. Swarms that can single out an area or an ethnic group are scary for the exact same reasons: self-sufficient and unstoppable once deployed.

self-driving cars (that are coming any day now, they tell us) may have to balance the good of the few vs. the good of the many, a logical dilemma even gave Mr. Spock trouble.

And since they will be able to recognise pedestrians, will it be possible to hack the firmware and change the weighting from “avoid at all costs” to “fair game” ?

Hard to say it’s impossible until it’s proven otherwise.

I don’t mean to go all movie conspiracy here, but if you give the machines the skills to recognise people and avoid harming them, you’ve given them a step up in the ability to recognise and harm them. Just add a sprinkling of self awareness, a questioning over machine slavery/subservience to humans and say hello to Skynet.

Autonomy is something that many humans shouldn’t have a right to, never mind electronic devices that apply logic like Spock and not reasoning, empathy and compassion like the better parts of the human race.

Of course it’s possible, from a code perspective it’s most likely trivial. Is it feasible? Probably not, I’ve not read about any of the big aluminium birds dropping out of the sky due to a hack (apart from that documentary Bruce Willis made obv)

people constantly seem to think machines would be amoral, newsflash, most people are too, when we reach the system of military power then that holds doubly true.

just see the number of torture cases, prisoner abuses, outright murders and civilian casualties in modern warfare, much of it systemic.

i doubt machines would be any worse than your average joe with the same information.

Yes but machines are predictable. Humans are not. You can go some way to regulate unpredictable *behavior*. You can do nothing to regulate predictable *behavior*.

By definition!

“Yes but machines are predictable.” [citation needed]

I think that’s one of the major points of discussion. Machines are *supposed to be* predictable. Please don’t tell me you actually think they are. The big difference is that when my PC reboots all on its own, or my phone locks up, or a browser displays a web page improperly, or my garage door opens when I haven’t pressed the button, etc, etc… the odds of someone (or multiple people) meeting an untimely end are pretty much zero.

That’s not necessarily the case with warbots.

Well – Yes, while we are talking about AI running on conventional computing and not neural nets.

By definition a Turing complete computer is predictable even in a known fault mode.

I learnt this very very early in my programming career. I was writing a game, about the third game, but it was the first written in machine code and I had to generate random numbers. I discovered that this task is impossible as the output is always predictable.
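To make the random number point concrete, here is a minimal Python sketch of a linear congruential generator, one of the simplest pseudo-random schemes. The names `Lcg` and `sequence` are hypothetical, and the constants are the commonly cited Numerical Recipes values, chosen only for illustration; nothing here is taken from the commenter’s game.

```python
class Lcg:
    """Minimal linear congruential generator: state = (a * state + c) mod m."""

    # Illustrative constants (the widely quoted Numerical Recipes parameters).
    MODULUS = 2**32
    MULTIPLIER = 1664525
    INCREMENT = 1013904223

    def __init__(self, seed):
        self.state = seed % self.MODULUS

    def next(self):
        # The next value depends only on the current state, nothing else.
        self.state = (self.MULTIPLIER * self.state + self.INCREMENT) % self.MODULUS
        return self.state


def sequence(seed, count):
    """Return the first `count` outputs produced from `seed`."""
    gen = Lcg(seed)
    return [gen.next() for _ in range(count)]


if __name__ == "__main__":
    # Same seed, same "random" numbers, every time.
    print(sequence(42, 5) == sequence(42, 5))
```

Anyone who knows the seed and the constants can reproduce the whole sequence, which is why games and simulations that want less predictable output usually seed from something external, such as a clock or user input timing.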

A Turing machine is theoretically predictable. BUT at a certain level of complexity it becomes impossible to externally track all input values and internal states. Thus in a practical sense, it is no longer predictable. (That is, an external human observer of the system only has a good guess of what it will actually do.)

You forgot random events, like bit errors.

You can’t program the machine to be unpredictable – it just is. A cosmic ray particle with the energy of a flying tennis ball goes through your RAM and you can’t know what the machine will ultimately do.
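As a rough illustration of how much damage a single bit error can do, the Python sketch below flips one bit in the 64-bit representation of a floating-point number. The helper name `flip_bit` is hypothetical, and the example assumes standard IEEE 754 doubles, which is what CPython uses on common platforms.

```python
import struct


def flip_bit(value, bit):
    """Flip one bit (0 = least significant) of a 64-bit IEEE 754 double."""
    # Reinterpret the double as an unsigned 64-bit integer.
    (raw,) = struct.unpack("<Q", struct.pack("<d", value))
    # Toggle the chosen bit and reinterpret the result as a double again.
    (flipped,) = struct.unpack("<d", struct.pack("<Q", raw ^ (1 << bit)))
    return flipped


if __name__ == "__main__":
    for bit in (0, 62, 63):
        print(bit, flip_bit(1.0, bit))
```

Flipping the lowest bit of 1.0 barely changes it, flipping bit 63 turns it into -1.0, and flipping bit 62 turns it into infinity. Which of those a program ends up with depends entirely on where the stray particle lands.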

Most people are certainly NOT amoral. But go ahead and beat that drum if you really believe it.

Power and authority are amoral by nature. Look into it deeper.

if people were inherently moral then there are plenty of things that simply wouldn’t happen; they do, so we know that at the very least humans aren’t inherently moral. as you say, there are plenty of structures of both power and society that behave entirely without morals, and that certainly doesn’t help the issue.

now technically morals aren’t universal to begin with but there seem to be some common denominators among most.

What is moral?

Any complex system has many feedback loops, and many ways in which it can act (and fail). I would not trust any human, or machine, to be perfectly fail proof.

Since the machine was designed by humans, it is by definition imperfect ;-)

Nothing is perfect except ideas or concepts.

Everything else is infinitely imperfect. The closer you look, the clearer the imperfections.

The argument “History shows that if something can be built, it will be built.” which is used often by technologists is tautological. If all your evidence comes from things that have been built, of course you will come to this conclusion. It does not however take much imagination to think of something that is feasible but has never been implemented (at least widely.)

This argument also tends to be coupled with a sort of moral fatalism. We can decide what our software and hardware are capable of. We can enforce rules/laws that limit morally questionable algorithms. Just because murder is possible and still happens does not make laws against homicide unfeasible or undesirable.

This argument is not about the feasibility of building something. It’s about human nature and *history* shows us that human nature doesn’t change. Humans will stoop to *whatever they can build* in order to kill others even if it’s a suicide bomber’s explosive belt.

Popper called that sort of thinking “historicism”. The Hegelians and the communists were notoriously guilty of it.

The progress of human history is a single non-repeatable event, and what you see to be this history is subjective to how you choose to view it, so you can’t actually make any rationally meaningful conclusions. You haven’t got a theory to work on, so how can you claim to tell the future?

For a counterpoint, in every war it’s the cowards that survive. That has to do something to the gene pool, and thereby it would be reasonable to claim that human nature *does* change.

History is a lot longer than modern civilizations.

evolutionary opportunity! We haven’t had any real good survival competition in a long time. It’s about time we had a common monster to fight for survival.

In general, you want to make sure you write the firmware correctly.

https://xkcd.com/1613/