Today’s computers are unimaginably complex, and so complicated it’s nearly impossible for anyone to comprehend everything a CPU can do in excruciating detail. It wasn’t always like this – the early CPUs of the 70s and 80s were relatively simple and can easily be recreated at the individual gate level. CPUs can be even simpler, as [Jack Eisenmann] demonstrates with a single instruction computer, the DUO Compact 2, made entirely out of 74-series logic chips and a bunch of memory.

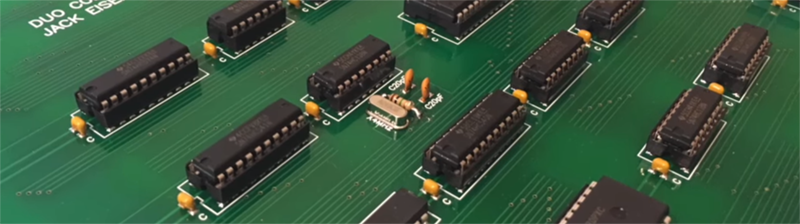

[Jack] has a long history of building strange computers out of individual chips, including a TTL logic CPU and a significantly more complicated single instruction computer. The latest, though, is as simple as it gets. It’s just twenty chips, capable of calculating prime numbers, sorting strings, and everything else a computer is able to do.

With every one-instruction computer, there is the obvious question of what instruction this computer uses. For the DUO Compact 2 it’s a single instruction that accepts three arguments, A, B, and C. The instruction copies a byte from A to B, then jumps to the instruction at C. Is it even possible for a computer to add two numbers with this instruction? Yes, if you have massive look up tables stored in 2 Megabytes of Flash and 512 kB of RAM.

In the video below, [Jack] goes over how his tiny computer works and demonstrates prime number generation (it’s slow), string sorting (also slow), and displaying ’99 bottles of beer on the wall’ on the computer’s LCD. All the files to replicate this computer are available on [Jack]’s webpage, along with an emulator in case you don’t want to break out a breadboard for this one.

An exercise in minimalism. Always interesting, and great work.

XD, “also slow”.

Pff, bubble sort? Sure it’s easy to implement, but it just doesn’t look cool.

The rest of the system is pretty damned cool though. I always love a good Turing tarpit.

How do you calculate, if no logical operation is being done on the numbers? I’ve heard of OISC using, for example, “subtract, branch if negative”, also a three-operand instruction. The Youtube video contains a link to his text files, which would’ve been a better link, but it doesn’t explain the principle it’s based on.

BTW if it’s “transport triggered architecture”, I consider that a cheat. That’s still implementing several instructions, just offloading them to memory addresses rather than having them as direct instructions. A TTA CPU is really just an instruction decoder. The function-addresses are the ALU, and the whole thing together is the real computer. Hiding instructions in memory addresses doesn’t stop them being instructions!

It’s an interesting concept and truly exciting how a fully functional CPU can be built by some simple components and smart thinking. One instruction computer doesn’t mean that it’s transport triggered (here’s a transport triggered CPU: https://hackaday.io/project/11703-8-bit-ttl-cpu); this CPU is not transport triggered as it has microcode and lacks any ALU, but rather a CPU that allows the programmer to define all the ALU functionalities in software and skips more sophisticated instructions, as those also can be defined by the programmer. Truly simple and flexible. But simplicity has a price, the complexity comes when someone attempts to write programs for these simply built CPUs.

Sure, but how does this one work? How does it, say, add, or compare? Just a walk through a simple example would be nice. How can just copying data do computing? A computer needs to be able to change data based on other data.

Said machine doth compute thusly, https://esolangs.org/wiki/ByteByteJump

Ah, ta! I’ve just remembered the IBM CADET, although I think that worked somewhat differently, I suppose it shows the principle. A Karnaugh map is a lookup table of a function, so you can do logic that way. Normally you’d use an index register to the table though, I guess, which has an implicit adder.

Still needs a bit of thinking to understand, I’ll put my mind to it, now I know what it’s called (a byte-byte-jump machine). Would genuinely be easier to program in Brainfuck. RPN Brainfuck.

As far as I read it, self-modifying code is the trick used. Download Storage.zip and inspect the macro’s: e.g. a conditional jump basically assembles a jump instruction in RAM at runtime dependent on the condition variables (eg the code encodes to a JMP A if the condition variable is 1 and a JMP B when it is 0) , then jumps to it to perform the actual jump. That’s my guesstimate of how it works; it’s all pretty uncommented code so I may be miles off though.

I feel the need to mention the wireworld computer, which blew my mind when I first saw it.

http://www.quinapalus.com/wi-index.html

It also calculates primes as originally programmed and in only 64 words of memory, including the program counter. It also has only one instruction and that needs only 2 operands (a write to the program counter jumps).

For those people that don’t know wireworld, it’s a cellular automata, a little like Conway’s life. They built a computer in a cellular automata.

Wireworld’s been around a long while now, a few decades. It was never really used, AFAIK, for much more than simple logic tests. Partly I suppose because computers couldn’t run a CA fast enough for the size you’d need. That’s one great thing about CA. I had a go at programming one ages ago, as part of a psychedelic graphics generator, there’s lots of little gimmicks to make it do weird things.

Related are L-Systems, or Lindenmayer systems. They’re the polygon fractals, the ones drawn with straight lines. Where you specify a function to draw a “Y”, then recursively call it, to draw something like a tree.

My idea was to have an L-system you can edit graphically, drawing new lines, creating new nodes and attaching lines to them with a mouse. The few L-system programs you can get force you to write simple code instead, like turtle graphics. Doing it graphically would open it up to many more people, it might see more use in art, and more complex systems could easily be created.

I suppose I could knock it up myself in Java, but there’s a challenge if anyone wants one. And likes fractals.

Also, forgot to add…

In the early days of CA, somebody invented a machine dedicated to them. Far as I remember, it used lookup tables in memory for each cell. So it’d lookup the surrounding 8 cells plus the one in question, look up their pattern in a 512-address single bit memory, to produce the 1 or 0 for the next generation. It meant no CPU was needed. I suppose it used a counter to run through the RAM each generation. Was much faster than any software, CA researchers used to borrow it from the guy who constructed it.

The “Vote” / “Parity” CA is very weird. Just by using odd or even neighbour count to determine the next generation, it copies patterns. But not immediately. First they go through several undecipherable stages, where you’ve no real idea what they’re aiming for. But the data’s all stored in there. Then the replicated patterns replicate themselves. Bizarre how clever such a simple system can be. I think all the possible rules, all 512 of them, for Conway’s life, have been explored by now.

I’ve been into CAs at least since I read an article in “Computer Shopper” about them when I was about 10 or 11. Text-mode on an 8-bit machine in BASIC had to suffice, for a while. Now you can do megapixels worth, as fast as your monitor can update the screen.

The wireworld computer is very impressive and I appreciate it so much that I analysed its design to understand it:

https://www.logre.eu/wiki/Projet_Wireworld/en

For some reason I am reminded of this (just the first half):

https://www.youtube.com/watch?v=BIroRqO_BpM

I’m glad the single instruction wasn’t NOP.

Ha ha, yes indeed. I could almost build that one.

I designed the Recalcitrant-1 that just had the single opcode NOPE, and another one called CRETIN, that was effectively the same but used DERP.

Or HaCF!

I highly recommend Logisim, a cross-platform, digital circuit and logic simulator. It’s the best way to get started designing your won CPU.

http://www.cburch.com/logisim/

I completely agree. I used to simulate some part of wirewold computer logic and its really nice tool

How does it compare with TKGate? Does the Verilog code output from TKGate have any advantages?