Often, CPUs that work together operate on SIMD (Single Instruction Multiple Data) or MISD (Multiple Instruction Single Data), part of Flynn’s taxonomy. For example, your video card probably has the ability to apply a single operation (an instruction) to lots of pixels simultaneously (multiple data). Researchers at the University of California–Davis recently constructed a single chip with 1,000 independently programmable processors onboard. The device is energy efficient and can compute up to 1.78 trillion instructions per second.

The KiloCore chip (not to be confused with the 2006 Rapport chip of the same name) has 621 million transistors and uses special techniques to be energy efficient, an important design feature when dealing with so many CPUs. Each processor operates at 1.78 GHz or less and can shut itself down when not needed. The team reports that even when computing 115 billion instructions per second, the device only consumes about 700 milliwatts.
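As a quick sanity check on those figures, here is a minimal Python sketch. It uses only the numbers quoted above. The assumption that each core retires one instruction per clock cycle is mine, not the team's, and the function names are made up for illustration.

```python
# Figures quoted in the article.
CORES = 1000
MAX_CLOCK_HZ = 1.78e9       # "1.78 GHz or less"
LOW_POWER_RATE = 115e9      # instructions per second at the low-power point
LOW_POWER_WATTS = 0.7       # "about 700 milliwatts"


def peak_instructions_per_second(cores, clock_hz):
    """Upper bound, ASSUMING one instruction per core per clock cycle."""
    return cores * clock_hz


def joules_per_instruction(watts, instructions_per_second):
    """Average energy spent per instruction at a given operating point."""
    return watts / instructions_per_second


peak = peak_instructions_per_second(CORES, MAX_CLOCK_HZ)
energy = joules_per_instruction(LOW_POWER_WATTS, LOW_POWER_RATE)
print(f"peak rate: {peak:.3g} instructions/s")
print(f"energy:    {energy * 1e12:.2f} pJ per instruction")
```

Under that one-instruction-per-cycle assumption, 1,000 cores at 1.78 GHz line up with the quoted 1.78 trillion instructions per second, and the 700 mW operating point works out to roughly 6 picojoules per instruction.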

Unlike some multicore designs that use a shared memory area to communicate between processors, the KiloCore allows processors to directly communicate. If you are just a diehard Arduino user, maybe you could scale up this design. Or, if you want to make use of the unused power in your video card under Linux, you can always try to bring KGPU up to date.

Impressive

Depends. The reason why other multicore systems like GPUs consume so much power is because they actually are handling a lot of data. You can do 1.78 trillion NOPs per second on no power at all.

It takes more than half of a modern CPU’s core power budget to run the massive caches, and then there’s also the integrated memory interface to external RAM. These cores are each built from ~600,000 transistors minus interface logic and routing, so they can’t have more than a few kilobytes of local memory each.
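To put a rough number on that "few kilobytes" claim, here is a back-of-the-envelope sketch in Python. The transistor and core counts come from the article. The six-transistors-per-bit figure assumes a classic 6T SRAM cell, and the `logic_fraction` parameter is a made-up knob for how much of each core goes to logic rather than memory; neither is a published KiloCore detail.

```python
TOTAL_TRANSISTORS = 621_000_000   # from the article
CORES = 1000                      # from the article
TRANSISTORS_PER_SRAM_BIT = 6      # ASSUMPTION: classic 6T SRAM cell


def max_local_memory_bytes(total_transistors, cores, logic_fraction=0.0):
    """Upper bound on per-core SRAM if (1 - logic_fraction) of the core is memory.

    logic_fraction is a hypothetical parameter, not a figure from the chip's designers.
    """
    if not 0.0 <= logic_fraction <= 1.0:
        raise ValueError("logic_fraction must be between 0 and 1")
    per_core = total_transistors // cores
    memory_transistors = int(per_core * (1.0 - logic_fraction))
    bits = memory_transistors // TRANSISTORS_PER_SRAM_BIT
    return bits // 8


# Absurd best case: the whole core is SRAM and nothing else.
print(max_local_memory_bytes(TOTAL_TRANSISTORS, CORES))
# Half the core spent on logic, interface and routing.
print(max_local_memory_bytes(TOTAL_TRANSISTORS, CORES, logic_fraction=0.5))
```

Even the impossible all-memory case stays under 13 KiB per core, so "a few kilobytes" is about right as an order of magnitude.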

They’re more like a thousand stripped down Arduinos running at 1.78 GHz.

Maybe, but then again that is still rather impressive.

To me it’s a big question mark, because it’s basically a variation of the concept tried back in the ’80s with multiple independent CPUs in an interconnected multi-dimensional “lattice”.

These things:

https://en.wikipedia.org/wiki/Connection_Machine

Those things had up to 65,536 simple 1-bit CPUs, and all you ever saw of them was a bit in Jurassic Park because they had neat blinking lights.

The Connection Machine was a case of trying to run before learning to walk. The problem is that the software for these things never was as good as the hardware but that is changing.

@[Dax] What are these “other multicore systems”?

I haven’t seen much (by way of ASIC) that departs from tradition except the 8 core Propeller Cog, Bitcoin mining machines and a lot of hot air about what appears to be vaporware in most cases.

Then there is VHDL. This is an interesting thing to play with in VHDL (or Verilog), but FPGAs are far, far from energy efficient, so it could never make it to market.

Now that Moore’s law is more or less dead we need to tackle the other issues – we can’t save energy by making transistors smaller because we need at least ‘x’ atoms per transistor.

That leaves us with power consumption that grows faster than clock speed, so we have to turn to more parallel or multi-core processors.

This chip is built with 32nm tech so it’s as good as it gets with today’s tech as far as energy efficiency goes.

To demonstrate some very rough math –

You have one 1 GHz core and it uses 1 squared power (the reference benchmark) and that gives you 1 GFLOPS.

You double the speed so you quadruple the power consumption (2 squared) and now you have 2 GFLOPS but at only half the power efficiency.

You double the number of cores so you double the power consumption and now have 2 GFLOPS at the SAME power efficiency.

Now compare using 8 cores at half the speed. Each core uses one quarter of the power, so all eight together use 2 units of power and deliver 4 GFLOPS: twice the power efficiency of the reference.
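The rough math above can be tabulated with a few lines of Python. This is only a sketch of the commenter's own model, in which per-core power scales with the square of clock speed and throughput scales linearly with both speed and core count. The function names and the `exponent` parameter are made up for illustration; real chips will not follow this exactly.

```python
def relative_power(cores, speed, exponent=2):
    """Total power relative to one core at reference speed (model: P ~ n * f**k)."""
    return cores * speed ** exponent


def relative_throughput(cores, speed):
    """Throughput relative to one core at reference speed (assumes perfect scaling)."""
    return cores * speed


def efficiency(cores, speed, exponent=2):
    """Throughput per unit of power, relative to the reference core."""
    return relative_throughput(cores, speed) / relative_power(cores, speed, exponent)


cases = [(1, 1.0), (1, 2.0), (2, 1.0), (8, 0.5)]
for cores, speed in cases:
    print(
        f"{cores} core(s) at {speed}x speed: "
        f"power={relative_power(cores, speed)}, "
        f"throughput={relative_throughput(cores, speed)}, "
        f"efficiency={efficiency(cores, speed)}"
    )
```

In this model the eight half-speed cores each draw a quarter of the reference power, so the total is 2 units of power for 4 units of throughput. Setting `exponent=3` gives the cubic variant that comes up further down the thread.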

Now all we need is the software tools to cross compile from traditional threaded sequential programming to a more paralleled process(or).

Even if you apply Moore’s law strictly, the rate of improvement in the ability to fabricate a number of 2D transistors for a given area of silicon, it does not stall until you try to go past 5nm and that could mean a decade from now. But that ignores the fact that the transistor will also become less relevant a measure of how much computation will be achievable for a given volume of technology.

“But that ignores the fact that the transistor will also become less relevant”

You still need a certain number of transistors per bit of memory, and you can’t squeeze a thing like an ALU any smaller than the information it contains, so it’s rather strange to claim that the number of transistors can become irrelevant.

The UNSW work with quantum systems on silicon throws out the old ratios between computations/time and transistors. That is one tech that we know of right now, who knows what is in the labs that we don’t know about but which will come to light over the next decade. i.e. Moore’s Law does not fail, it becomes irrelevant.

“quantum systems on silicon”

Well if you don’t perform computation on transistors, then the number of transistors becomes irrelevant. Duh.

The problem with quantum computation is that it’s not the same thing as classical computation, so it’s not directly comparable in terms like GFLOPS. For starters, the answer you get from a quantum computer is not exact but “likely”, and it’s accumulated by running the algorithm over and over again, and you trade speed with uncertainty – and you’re still relying on classical transistorized systems to load the qubits with data for each re-run.

>Now all we need is the software tools to cross compile from traditional threaded sequential programming to a more paralleled process(or).

Yes and also cute rainbow unicorn and warp drive interstellar ships.

“What are these “other multicore systems”? ”

As I said, things like GPUs. Mine has 1024 cores arranged into 16 control units. It’s just semantics to call them “shader units” instead of cpus.

Shader units…

Don’t forget: yours is SIMD (single instruction multiple data). Whenever there’s an ‘if’, and half of the bunch wants to take a left turn and another half a right turn… half of your performance goes down the toilet[1]. Each architecture has its sweet spot…

[1] http://www.gamedev.net/topic/428045-why-is-gpu-branching-slow/
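The divergence penalty described above can be illustrated with a toy model in Python. It assumes a group of lanes that share one instruction stream, so when the lanes disagree on an `if`, the group runs both branches one after the other with the non-participating lanes masked off. The function names and cycle costs are hypothetical, and real GPUs have more nuance than this.

```python
def lockstep_cycles(conditions, then_cost, else_cost):
    """Cycles a lockstep group spends on an if/else.

    conditions: one boolean per lane (True = lane takes the 'then' branch).
    A branch is executed if at least one lane needs it; other lanes idle.
    """
    if not conditions:
        raise ValueError("need at least one lane")
    cycles = 0
    if any(conditions):
        cycles += then_cost
    if not all(conditions):
        cycles += else_cost
    return cycles


def lane_utilization(conditions, then_cost, else_cost):
    """Fraction of lane-cycles doing useful work during the if/else."""
    useful = sum(then_cost if taken else else_cost for taken in conditions)
    total = lockstep_cycles(conditions, then_cost, else_cost) * len(conditions)
    return useful / total


uniform = [True] * 8
split = [True] * 4 + [False] * 4
print(lane_utilization(uniform, then_cost=10, else_cost=10))
print(lane_utilization(split, then_cost=10, else_cost=10))
```

With equal branch costs, the evenly split group does useful work on only half of its lane-cycles, which is the "half of your performance" figure from the comment. Independently programmable cores avoid this particular cost because each one follows its own branch.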

But then it turns out that the communications overhead between individual CPUs assigned to a single task starts to decrease performance at a surprisingly low number of cores because they start to spend more time dividing the task between them than what you gain from adding one more CPU to the task.

So the 1000 core MIMD approach is great only if you have loads of independent tasks, but that’s already an “embarrassingly parallel” problem because the tasks don’t share data.
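Here is a toy model of that overhead argument, sketched in Python. It assumes the work divides perfectly across cores but every extra core adds a fixed coordination cost. That linear cost is an assumption picked purely for illustration, not a measurement of any real system, and the function names are made up.

```python
def run_time(cores, work, overhead_per_extra_core):
    """Modelled run time: perfectly divided work plus a per-core coordination cost."""
    if cores < 1:
        raise ValueError("need at least one core")
    return work / cores + overhead_per_extra_core * (cores - 1)


def speedup(cores, work, overhead_per_extra_core):
    """Speedup over a single core under the same model."""
    single = run_time(1, work, overhead_per_extra_core)
    return single / run_time(cores, work, overhead_per_extra_core)


def best_core_count(work, overhead_per_extra_core, max_cores):
    """Core count with the lowest modelled run time (first one on ties)."""
    return min(
        range(1, max_cores + 1),
        key=lambda n: run_time(n, work, overhead_per_extra_core),
    )


work, overhead = 100.0, 1.0
best = best_core_count(work, overhead, max_cores=1000)
print(f"best core count: {best}")
print(f"speedup at best: {speedup(best, work, overhead):.2f}")
print(f"speedup at 1000: {speedup(1000, work, overhead):.2f}")
```

With these made-up numbers the sweet spot lands at 10 cores, and throwing all 1,000 at the job is slower than using one. With zero overhead, which is the embarrassingly parallel case, more cores always win in this model.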

“You double the speed so you quadruple the power consumption (2 squared) and now you have 2 GFLOPS but at only half the power efficiency.”

Power consumption of a CPU is roughly proportional to clock speed over a wide range. Doubling the CPU speed doubles the power requirement.

You would also play with the voltage so that a part would work at a higher/lower frequency, so it is cube not square!

https://software.intel.com/en-us/blogs/2014/02/19/why-has-cpu-frequency-ceased-to-grow

> So, the transistor switch speed is proportional to voltage. We need to take into consideration that processor frequency can be raised proportionally to transistor switch speed only

> f ~ V and P ~ Cdyn*V^3

>Linear frequency growth causes power dissipation to be increasingly cubed! If the frequency is raised only twice, there will be eight times greater heat that must be accommodated or the processor will melt or shutdown.
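The linear and cubic claims in this part of the thread can both be read off the standard dynamic power relation, P ~ Cdyn × V² × f. The difference is whether the supply voltage stays fixed or has to rise along with frequency. A minimal Python sketch, with normalized units and made-up function names:

```python
def dynamic_power(c_dyn, voltage, frequency):
    """Standard dynamic (switching) power relation: P ~ C * V^2 * f."""
    return c_dyn * voltage ** 2 * frequency


def power_ratio(frequency_scale, voltage_tracks_frequency=True):
    """Power relative to the baseline when frequency is multiplied by frequency_scale.

    If voltage_tracks_frequency is True, voltage is scaled by the same factor
    (the f ~ V assumption from the quoted article); otherwise voltage is fixed.
    """
    voltage_scale = frequency_scale if voltage_tracks_frequency else 1.0
    baseline = dynamic_power(1.0, 1.0, 1.0)
    return dynamic_power(1.0, voltage_scale, frequency_scale) / baseline


print(power_ratio(2.0, voltage_tracks_frequency=False))  # fixed voltage
print(power_ratio(2.0, voltage_tracks_frequency=True))   # voltage rises with f
```

At a fixed voltage, doubling the clock doubles the power. If the voltage has to be doubled as well to reach that clock, the same relation gives the eightfold figure from the quote.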

It’s really inspiring to see what a plucky group of post-docs with a semiconductor foundry and a million dollars budget can do. Truly an amazing computer hack that shows how much you can do with just the tools at hand.

I do wish you didn’t have to add the stuff about the Arduinos though. It seems like every blog entry lately has an Arduino in it. Well, except for the big diaper story last week. No Arduinos there thank goodness.

I’m sure one could rig up a nice moisture level measurement circuit with an Arduino to complete that article, too!

That makes Arduino kind of the rule34 of electronics, doesn’t it?

Let us know when there’s real–i.e., 1000 CPUs-in-parallel–software available, and not just “…a compiler being worked on…”.

Then you’ll have an article.

That is not a lot of transistors really, about 1/3 of what is on the top-of-the-line commodity CPUs, and they are only using 32 nanometer technology. This indicates that the design can scale very rapidly over the next 4 years to 4K processors or more.

It is hard to comment on what applications it is best suited for, other than the obvious ones, because I have no details about the architecture, instruction set, or I/O throughput which is critical with huge data sets. But at a guess I’d say mobile AI.

They carefully formulate a few things in a way that makes me read between the lines that each processor only has a SMALL local memory and little or no connection to the outside world. If your processor is executing instructions on the order of 1000M per second, then 1 GB of memory is appropriate for real work. So how are they going to interface 1 TB of memory to this processor at reasonable bandwidth?

This is an academic project. These guys made the processor, the next batch of undergraduates, graduates, or postdocs will “try to do something useful with this”.

If they scale it so that there is more onboard memory/cache and that the IO bandwidth increases, even at a loss-of-cpu-count of say a factor of 8, this might become useful.

Who knows what they will come up with, they could just use more silicon to add a large amount of high speed RAM that is on the chip. It would only cost more, but for every one they had to cut down because there was a flaw in that area they would have many that worked fine, and if they do the design right they can use the RAM of a defective cluster section with the cluster section from a defective RAM area to offer a cheaper slower part. In theory the entire wafer could be one huge integrated cluster with a big network on chip, RAM I/O buffers etc. If they get a defect they slice down the wafer to get the most amount of everything that is still working, or they just use a way of disconnecting the areas that are defective. That could give you one huge chip 30 cm across with perhaps 100,000 cores and lots of RAM etc. So scaling to just 10,000 is very doable, if you can find a client to pay for it. Then there are the more advanced options such as stacking the logic directly above one of the more advanced RAM types.

I’ve done this with Windows XP and it is able to do 27 trillion processes a second using just one old-fashioned PC. It was done using the speech software from Windows and a few other ingredients… but nothing special. The problem I had was when I went to a friend’s house to hook it up to his network: while I was plugging it in, it shot a huge blue arc, just like a plasma arc from welding, about 4 inches from the wall to the plug. It fried the graphics card, but the HDD continued to operate; it was booting to my friend’s network. How I know that was because of the algorithm of the HDD; it’s like a song, I knew the rhythm of the bet sword of speak… but anyways. All my work was shit out all over the internet through his Mac and his Windows OS that he had on the network. My program was acting like a server; it pushed out more packets of info than anyone can imagine. Then I unplugged it and hooked it to the Mac; it shit out the other half of the info I had programmed… I did this to see if it was something else on his network. So half went to Microsoft and the other half went to Macintosh/Apple. It’s the platform that runs all smartphones… If you want to know more, email me at grbbrister32@gmail.com

Your name should have been Markov.

Heh, I hope you are right, it would be tragic for an actual human to be like that.

Old news… bla bla bla. Tell me something I haven’t done yet!!!!

Worked out how to have a meaningful relationship with something other than your hand?

Do you have any evidence to suggest his hand likes him too? :)

Are you saying that “he” is a rapist too?

Surprised nobody has mentioned the GRVI Phalanx yet. 400 32-bit cores in a medium large FPGA with a network on a chip connecting all of them.

This? Well I’m glad you did!

https://www.youtube.com/watch?v=828oMNFGSjg

Or picoChip and GreenArrays

Some more info about the chip here: 2016.vlsi.symp.kiloCore.pdf

http://vcl.ece.ucdavis.edu/pubs/2016.06.vlsi.symp.kiloCore/

Funding from DoD, IBM and the National Science Foundation, so double government funding and I am guessing that any physical product will be eventually brought to market by IBM.

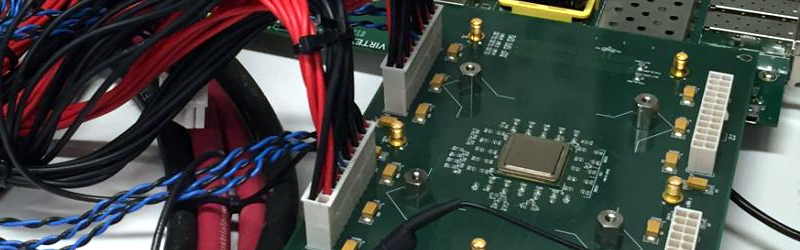

72 different instructions and there are 12x64KiB SRAM modules (768KiB in total) at the bottom edge of the chip, which is a bit strange looking.

Reminds me of a multi-CPU chip that was programmed using a variant of… FORTRAN or maybe it was COBOL. I think. I tried to get a test board or even a sample CPU but couldn’t get one. I think the developer of the language also developed the CPU.

I remember how crazy it was. It had an odd number of bytes’ worth of registers for each core. AVR uses 32. It was something like 7 or 15 registers, some oddball number that purposely made it nearly impossible to adapt C to it or hold all of some value. You had to push instructions onto the stack and pop them off.

It pissed me off that I couldn’t get ahold of any chip in any way despite the myriad of promises so I just picked up ARM and went with that.

I can’t find the website now.

Are you thinking about Chuck Moore’s GreenArrays Forth chips?

Yes!

Those chips always seemed less about the hardware and more about pushing the use, and acceptance, of the language.

So, where do I obtain one of these multi-CPUs? And can I run Win10/Space Engineers on it? No?..

I can truthfully say that all that you people are working on was made possible from my voice… and yes, you had to program it by forcing the system into a corner so that there is no other path to take but the default path… and yes, it runs as parallel as it can get… so much so that it is inverted. Imagine a box with 4 mirrors on the inside, where 2 of the 6 walls of the box are open, and then compress the box so that it’s almost flat. Then I could use my voice and ping like a sub through the CPU, motherboard, RAM, embedded chipsets and the hard drive until the reverberation would lockloop. It’s not the programming that holds new technology from moving forward, it’s the proprietary information the big companies don’t want the public to know…

poor dumb dan did your mother rap you or was it your dad?

It’s the whole “how many transistors on an i7 die” question. [ saying that server processors give much more ]

How many does it take to emulate a Z80? And so how many Z80s can fit on an i7 whilst still letting them talk to each other and giving them their own little bit of RAM?
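For a very rough answer, here is a Python sketch. Every input is an assumption: the Z80 figure is the commonly cited approximate transistor count, the chip budget is simply three times KiloCore's 621 million (following the "about 1/3" remark earlier in the thread, not an official i7 figure), the RAM uses 6 transistors per bit, and `interconnect_overhead` is a made-up allowance for letting the cores talk to each other.

```python
Z80_TRANSISTORS = 8_500             # ASSUMPTION: commonly cited approximate count
SRAM_TRANSISTORS_PER_BIT = 6        # ASSUMPTION: classic 6T SRAM cell
CHIP_BUDGET = 3 * 621_000_000       # ASSUMPTION: "about 1/3" remark in the thread


def cores_that_fit(budget, core_transistors, ram_bytes_per_core,
                   interconnect_overhead=0.2):
    """How many core-plus-RAM tiles fit in a transistor budget.

    interconnect_overhead is a hypothetical fraction added per tile for
    the logic that lets cores communicate.
    """
    ram_transistors = ram_bytes_per_core * 8 * SRAM_TRANSISTORS_PER_BIT
    per_tile = (core_transistors + ram_transistors) * (1 + interconnect_overhead)
    return int(budget // per_tile)


for ram_bytes in (0, 1024, 64 * 1024):
    count = cores_that_fit(CHIP_BUDGET, Z80_TRANSISTORS, ram_bytes)
    print(f"{ram_bytes:>6} bytes of RAM each: {count} cores")
```

The takeaway under these assumptions is that the RAM, not the Z80 itself, dominates the budget: 1 KiB of SRAM already costs several times more transistors than the core it serves.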