For several years, hackers have been exploring inertial measurement units (IMUs) as cheap sensors for motion capture. [Ivo Herzig’s] final diploma project, “Bewegungsfelder,” takes the concept of IMU-based MoCap one step further with a freely configurable motion capture system based on strap-on, WiFi-enabled IMU modules.

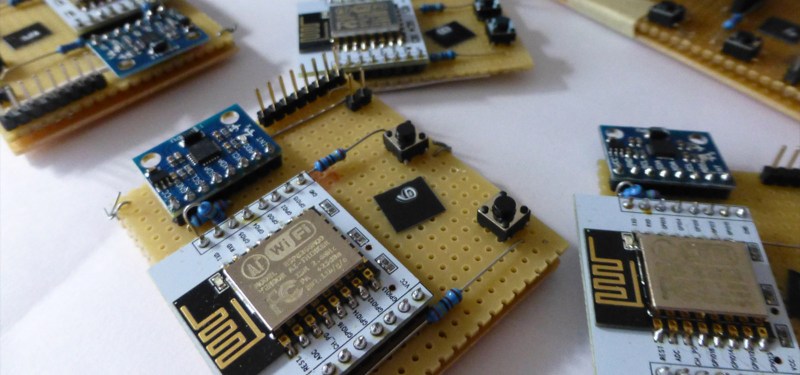

The Bewegungsfelder system consists of multiple standalone, ESP8266-powered IMU sensor nodes and a motion capture server. Attached to a person’s body (or anything else), the nodes wirelessly stream the output of their onboard MPU6050 6-axis accelerometer/gyroscope to the server. A server application translates the incoming data into skeletal animations, shows them as a live preview, and stores the MoCap data for later use. Because the sensor nodes are entirely self-contained, they can easily be reconfigured for any skeletal topology, be it a human, a cat, or an industrial robot.
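The post doesn't describe the nodes' wire format, so here is a minimal sketch assuming a hypothetical 12-byte packet carrying one raw MPU6050 sample: ax, ay, az, gx, gy, gz as big-endian signed 16-bit integers, mirroring the order and byte order of the chip's data registers with the temperature word dropped. It shows how a server could turn raw counts into physical units using the datasheet sensitivities for the default ±2 g and ±250 °/s ranges. `decode_sample` is an illustrative name, not part of the Bewegungsfelder code.

```python
import struct

# MPU6050 sensitivities at the default full-scale ranges:
# accelerometer +/-2 g -> 16384 LSB per g, gyroscope +/-250 deg/s -> 131 LSB per deg/s.
ACCEL_LSB_PER_G = 16384.0
GYRO_LSB_PER_DPS = 131.0

def decode_sample(payload: bytes):
    """Decode a HYPOTHETICAL 12-byte packet (ax, ay, az, gx, gy, gz, big-endian int16).

    The layout is an assumption for illustration, not the project's actual protocol.
    Returns (accel in g, gyro in deg/s), each as a 3-tuple of floats.
    """
    ax, ay, az, gx, gy, gz = struct.unpack(">6h", payload)
    accel_g = tuple(v / ACCEL_LSB_PER_G for v in (ax, ay, az))
    gyro_dps = tuple(v / GYRO_LSB_PER_DPS for v in (gx, gy, gz))
    return accel_g, gyro_dps
```

If the firmware selects a different full-scale range, the two divisors have to change accordingly.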

[Ivo] has already included support for custom skeleton definitions, as well as BVH import/export for using the generated data in common tools like Blender or Maya. The software portion of the project is released as open source under the MIT License, with both the firmware and the server application code available on GitHub. According to [Ivo], each node can be built for as little as $5, which puts them in a sweet price range for AR/VR applications, or for making your own cartoons.
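For readers who haven't met BVH before: it is a plain-text format with a `HIERARCHY` section (joints, offsets, channels) followed by a `MOTION` section (one line of channel values per frame). The sketch below writes a minimal file from a hypothetical `Joint` tree. It is not Ivo's exporter, and the ZXY rotation order is just one common convention.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Joint:  # hypothetical skeleton node for illustration
    name: str
    offset: Vec3  # position relative to the parent joint
    children: List["Joint"] = field(default_factory=list)
    end_offset: Vec3 = (0.0, 0.0, 0.0)  # only written for leaf joints ("End Site")

ROOT_CHANNELS = "CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation"
JOINT_CHANNELS = "CHANNELS 3 Zrotation Xrotation Yrotation"

def _fmt(v: Vec3) -> str:
    return " ".join(f"{c:.4f}" for c in v)

def _write_joint(j: Joint, lines: List[str], depth: int, is_root: bool) -> None:
    ind = "\t" * depth
    lines.append(f"{ind}{'ROOT' if is_root else 'JOINT'} {j.name}")
    lines.append(ind + "{")
    lines.append(f"{ind}\tOFFSET {_fmt(j.offset)}")
    lines.append(ind + "\t" + (ROOT_CHANNELS if is_root else JOINT_CHANNELS))
    for child in j.children:
        _write_joint(child, lines, depth + 1, False)
    if not j.children:  # leaf joints need an End Site to give the last bone a length
        lines.append(ind + "\tEnd Site")
        lines.append(ind + "\t{")
        lines.append(f"{ind}\t\tOFFSET {_fmt(j.end_offset)}")
        lines.append(ind + "\t}")
    lines.append(ind + "}")

def _channel_count(j: Joint, is_root: bool = True) -> int:
    return (6 if is_root else 3) + sum(_channel_count(c, False) for c in j.children)

def to_bvh(root: Joint, frames: Sequence[Sequence[float]], frame_time: float) -> str:
    """Serialize a skeleton plus per-frame channel values (in hierarchy order) to BVH text."""
    n = _channel_count(root)
    lines = ["HIERARCHY"]
    _write_joint(root, lines, 0, True)
    lines += ["MOTION", f"Frames: {len(frames)}", f"Frame Time: {frame_time:.6f}"]
    for frame in frames:
        if len(frame) != n:
            raise ValueError(f"expected {n} channel values per frame, got {len(frame)}")
        lines.append(" ".join(f"{v:.4f}" for v in frame))
    return "\n".join(lines) + "\n"
```

Each frame line must list values in the same order the channels appear in the hierarchy, which is why the writer checks the count before emitting anything.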

Awesome!!!

Hey, please help me achieve this. :) I want to make full-body mocap with one Arduino; is it possible? Please help me, I don’t know how to use this and I have to get it. Please. My email: oaxpglitchersec@gmail.com

Please help me, I need this but I don’t know how to do it.

Any schematics for the hardware IMU board?

It looks like just an ESP8266 module, the mentioned IMU module, two push-button switches, and four pull-ups (two for the buttons and two for I2C).

Looks like it’s just a veroboard hooking your run-of-the-mill ESP-12 to your run-of-the-mill MPU6050 breakout over I2C. You could probably make the schematic in ASCII art pretty quickly :p

Niiiice!

This is awesome! Which algorithm does he use for sensor data fusion?

Can be made more compact… has been made more compact

https://www.kickstarter.com/projects/hackarobot/withumb-arduino-compatible-wifi-usb-thumb-imu/description

Even better:

https://github.com/cnlohr/wiflier

This tech can be used to record all of your movements over an extended period of time, so that the data set can be fed to a neural network to jump-start robot motion training. That is, you give it a large set of successful motions drawn from the complete set of possible motions. You can construct Markov relationships between poses, which would let the robot move around randomly but constrained and biased by the recorded motion data. The resulting motion would be choreographically valid, and sometimes even meaningful to an observer, in the same way that Markov chains can generate text that is “readable” if mostly nonsense.
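A first-order Markov chain over poses, as the comment describes, can be sketched in a few lines. This assumes the continuous MoCap frames have already been clustered into discrete pose labels (the labels in the example are hypothetical); the chain then only proposes transitions that actually occurred in the recordings, weighted by how often they occurred.

```python
import random
from collections import Counter, defaultdict

def build_transitions(sequences):
    """Count first-order transitions between discrete pose labels across recordings."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            counts[a][b] += 1
    return counts

def generate(counts, start, length, rng):
    """Random walk over recorded transitions, weighted by observed frequency."""
    pose = start
    out = [pose]
    for _ in range(length - 1):
        successors = counts.get(pose)  # .get avoids creating empty entries in the defaultdict
        if not successors:  # dead end: this pose never had a recorded successor
            break
        poses, weights = zip(*successors.items())
        pose = rng.choices(poses, weights=weights, k=1)[0]
        out.append(pose)
    return out
```

For example, `generate(build_transitions(recordings), "stand", 20, random.Random(42))` yields a 20-step pose sequence in which every step was seen at least once in `recordings`.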

No you can’t.

Add all the heuristics known, and the problem is still NP-hard.

=P

Right, from the ground, that looks like the first 99% of the mountain… but after you’ve climbed it, all that’s left is the other 99%.

If you knew what you were talking about Google translate wouldn’t work. :-)

“Google translate wouldn’t work.”

Your informal fallacy isn’t a mathematical proof.

If NASA can’t solve this problem efficiently, then it is highly improbable that you can.

https://www.youtube.com/watch?v=wvVPdyYeaQU

It is NASA who are stupid: they made a robot to go in the space station and gave it arms and legs. FFS, all it needs is four arms, because it can’t walk anywhere anyway! Try arguing with that, you wanker. Actually, I expect you will, because you are a pathetic troll who doesn’t actually know what I was talking about. The only fallacy here is your pseudo-scientific verbal diarrhoea.

Please provide a basis for your seemingly non sequitur mention of NP-hardness.

The fusion engine is the trick to a better solution. Drift matters. Kalman filters are hard to tune. This solution is great:

– EM7180 sensor hub.

– https://www.tindie.com/products/onehorse/ultimate-sensor-fusion-solution/
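The post doesn't say which fusion algorithm Bewegungsfelder uses. To illustrate the drift problem discussed above, here is a single-axis complementary filter, a simpler alternative to a Kalman filter (and to hardware fusion such as the EM7180). The integrated gyro rate is smooth but drifts, while the accelerometer's gravity angle is noisy but drift-free, so a weight `alpha` blends the two. The `alpha` value and the axis sign conventions are assumptions to tune for a real node.

```python
import math

def accel_pitch_deg(ax, ay, az):
    """Pitch from gravity alone; only valid while the sensor is not otherwise accelerating."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))

def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyro pitch rate with accelerometer pitch (single axis, illustrative only).

    samples: iterable of (ax, ay, az, gy) with accel in g and gy in deg/s.
    dt: sample period in seconds.
    alpha: weight on the integrated gyro (smooth, drifts) vs. the accel angle (noisy, drift-free).
    """
    pitch = None
    out = []
    for ax, ay, az, gy in samples:
        acc = accel_pitch_deg(ax, ay, az)
        if pitch is None:
            pitch = acc  # initialize from gravity on the first sample
        else:
            pitch = alpha * (pitch + gy * dt) + (1 - alpha) * acc
        out.append(pitch)
    return out
```

With a constant 1 °/s gyro bias and the sensor lying flat, pure integration would drift by 10° over 1,000 samples at 100 Hz, whereas this filter settles at a fixed offset of about 0.49°.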

How does this compare to a BNO055?

I think I need to make a batch of these and track down my old Taijiquan instructor. He was trying to get this kind of data working with a university that had access to mo-cap and other tools; he and I both wondered how certain disabilities and joint/muscle problems affected the way people walked through the forms. Something like this would be a huge step up from the method I last heard was being worked on: using a Wii Fit balance board to compare total weight (measured at the start) to weight distribution (one foot on the board while in a pose), with Wiimotes in hand to get positional data. They were recording reference data with the bigger tools, but wanted a low-cost method to get a gamified version to end users.
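The balance-board measurement described above boils down to one ratio. A minimal sketch, assuming both readings come from the same board in the same units and that the foot off the board carries the remainder of the body weight; `weight_distribution` is a hypothetical helper name.

```python
def weight_distribution(total_kg, board_kg):
    """Split body weight between the foot on the board and the other foot.

    total_kg: reading with the whole body on the board (taken once at the start).
    board_kg: reading with only one foot on the board while holding a pose.
    Returns (fraction on the board foot, fraction on the other foot).
    """
    if total_kg <= 0:
        raise ValueError("total weight must be positive")
    on_board = min(max(board_kg / total_kg, 0.0), 1.0)  # clamp sensor noise into [0, 1]
    return on_board, 1.0 - on_board
```

For example, a person weighing 80 kg who puts 60 kg on the board foot carries 75% of their weight on that foot and 25% on the other.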

There are so many projects like this out there. The problem usually isn’t building the hardware, but getting EMI under control and dealing with the horrible drift most (cheap) IMUs show. I know that Xsens, for example, invests far more money in its software development than in its hardware department.

Looking at the data visualization of the “dancing woman”, there’s a lot left to do on the software side. Ground-contact control, for starters: catch drifting feet. Yeah, like I said above, the hardware part’s the easy one. Nailing the software is where the money burns.
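One common way to catch drifting feet is a zero-velocity update (ZUPT): whenever a foot is detected as planted, its integrated velocity is reset to zero and its height pinned to the ground, so drift can only accumulate during a single step. The sketch below is a one-dimensional illustration of that technique, not something the project is confirmed to do. The stance thresholds are hypothetical, and it assumes the vertical specific force has already been rotated into the world frame by an orientation filter.

```python
import math

G = 9.81  # m/s^2

def is_stance(accel_ms2, gyro_rads, acc_tol=0.3, gyro_tol=0.5):
    """Heuristic stance detector: specific force close to 1 g and little rotation.

    acc_tol (m/s^2) and gyro_tol (rad/s) are hypothetical thresholds to tune per sensor.
    """
    a = math.sqrt(sum(v * v for v in accel_ms2))
    w = math.sqrt(sum(v * v for v in gyro_rads))
    return abs(a - G) < acc_tol and w < gyro_tol

def integrate_foot_height(samples, dt, ground_z=0.0):
    """Dead-reckon vertical foot position, pinning it to the ground during stance.

    samples: iterable of (accel_ms2, gyro_rads, world_az), where world_az is the vertical
    specific force in the world frame (m/s^2, gravity included).
    """
    z, vz = ground_z, 0.0
    heights = []
    for accel, gyro, world_az in samples:
        if is_stance(accel, gyro):
            z, vz = ground_z, 0.0  # zero-velocity update: foot is planted
        else:
            vz += (world_az - G) * dt  # remove gravity, integrate to velocity
            z += vz * dt  # integrate velocity to height
        heights.append(z)
    return heights
```

In the test data, a small accelerometer bias lifts the foot off the ground during the swing phase, and the first planted sample snaps it back to ground level.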

Wounds on the face (recognized by the next camera at your train station) would be the CRC/back channel/semaphore for the NSA/CIA at the ne and the main reason for the #BurkaVerbot, in case they have such technology that is smaller than yours.

Very cool looking, but I can’t tell immediately from the photos what is powering these units. I assume Gymnastic Girl is wearing a fairly hefty battery pack?
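The post doesn't say how the nodes are powered. Whatever the battery turns out to be, runtime can be roughly estimated from its capacity and the node's average current draw. The 80% usable fraction and the example numbers are placeholders, not measurements of these nodes.

```python
def runtime_hours(capacity_mah, avg_current_ma, usable_fraction=0.8):
    """Rough runtime estimate: usable capacity divided by average current draw.

    usable_fraction is an assumed derating for cutoff voltage and regulator losses.
    """
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah * usable_fraction / avg_current_ma
```

For example, `runtime_hours(1000, 100)` gives 8.0 hours for a hypothetical 1000 mAh cell at an average draw of 100 mA; measuring the real node's average current is the only way to get a trustworthy figure.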