There are only two ways of creating two perfect copies of a person: 3D printing and twins. 3D-printing magician [Simon], the Sorcerer 3D-printerer, uses his secret knowledge to create the perfect illusion: a 3D-printing-real-people prank.

Author: Moritz Walter (114 Articles)

Treadmill To Belt Grinder Conversion Worked Out

[Mike] had a bunch of disused fitness machines lying around. Being a skilled welder, he decided to take them apart and put them back together in the shape of a belt grinder.

In particular, [Mike] is reusing the height-adjustment guide rail of an old workout bench to build the adjustable frame that holds the sanding belt. A powerful DC motor, complete with flywheel, was scavenged from one treadmill; the speed controller came from another. [Mike] won’t miss the workout bench: once you’ve welded a piece of steel tube dead-center onto a flywheel, as he did for the grinder’s drive wheel, you may call yourself a man (or woman) of steel.

The finished frame received a nice paint job, a little switching cabinet, proper running wheels and, of course, a sanding belt. Despite all recycling efforts, about 80 bucks went into the project, which is still a good deal for a rock-solid, variable-speed belt grinder.

Apparently, disused fitness devices make an ideal framework to build your own tools: Strong metal frames, plentiful adjustment guides, and strong treadmill motors. Let us know how you put old steel to good use in the comments and enjoy [Mike’s] build documentation video below!


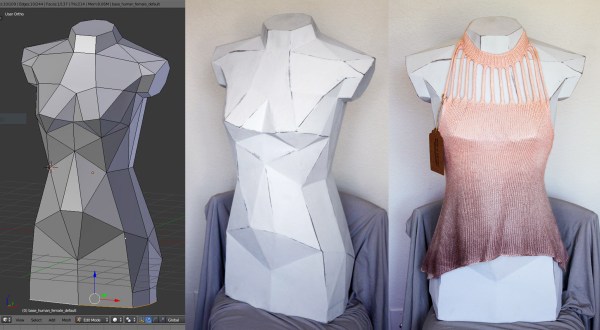

Fashion Mannequin Is Fiberglass Reinforced Paper Craft

[Leah and Ailee] run their own handmade clothing business and needed a mannequin to drape their creations onto for display and photography. Since ready-made busts are quite pricey and also didn’t really suit their style, [Leah] set out to make her own mannequins by cleverly combining paper craft techniques and fiberglass.


Hackaday Prize Entry: Tongue Vision

Visually impaired people know something the rest of us often overlook: we don’t actually see with our eyes, but with our brains. For his Hackaday Prize entry, [Ray Lynch] is building a tongue vision system that will help blind people see through one of the human brain’s auxiliary ports: the taste buds.

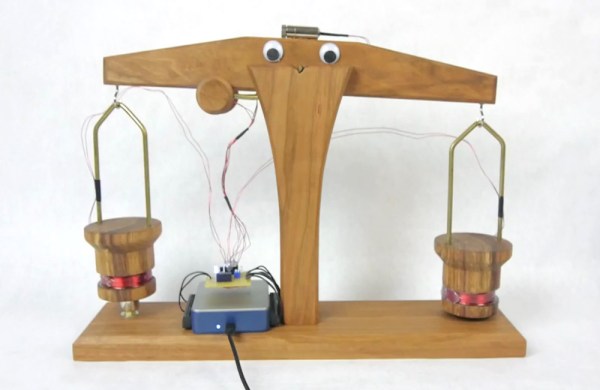

DIY Watt-Balance Redefines Your Kilograms From Scratch

Machined from a chunk of a virtually indestructible platinum-iridium alloy, the international prototype kilogram (IPK) was built to last for an eternity. And yet, as the last remaining physical artifact in the club of fundamental SI units, the current definition of the kilogram has worn out. Most certainly the watt-balance will take its place and redefine the kilogram in terms of a true physical phenomenon. [Grady] just built his own watt-balance from scratch, and he provides a decent portion of scientific background on the matter.
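For a rough feel of the numbers, here is a minimal sketch of the textbook watt-balance relation, not [Grady]’s own code. It assumes the usual two-step measurement: a coil current `I` balances the weight (m·g = B·L·I), and moving the same coil at velocity `v` induces a voltage `U` (U = B·L·v), so the coil geometry cancels out and m = U·I / (g·v). The function name and the sample values are purely illustrative.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional value; local g differs slightly


def mass_from_watt_balance(voltage_v, current_a, velocity_m_s, g_m_s2=STANDARD_GRAVITY):
    """Return the mass in kg from the idealized relation m = U * I / (g * v)."""
    if velocity_m_s == 0 or g_m_s2 == 0:
        raise ValueError("velocity and gravity must be non-zero")
    return voltage_v * current_a / (g_m_s2 * velocity_m_s)


# Illustrative values only: 0.5 V induced at 10 mm/s, about 1.96 mA to balance
mass_kg = mass_from_watt_balance(voltage_v=0.5, current_a=0.0019613, velocity_m_s=0.01)
print(f"{mass_kg * 1000:.2f} g")
```

A real instrument also has to deal with alignment, air currents and the local value of g, none of which this idealized formula covers.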


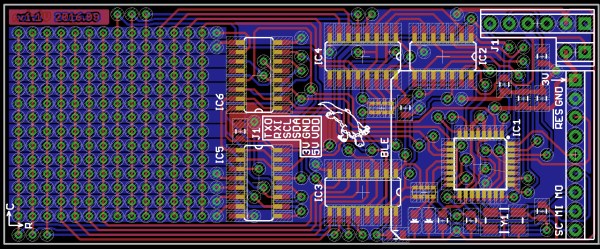

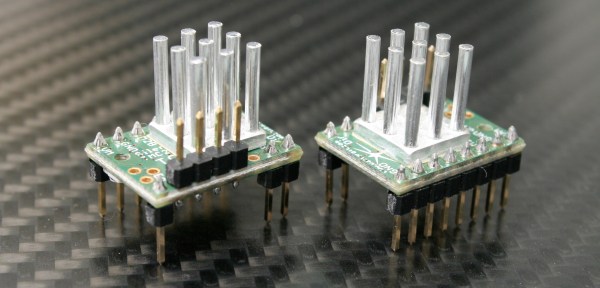

3D Printering: Trinamic TMC2130 Stepper Motor Drivers

Adjust the phase current, crank up the microstepping, and forget about it — that’s what most people want out of a stepper motor driver IC. Although they power most of our CNC machines and 3D printers, as monolithic solutions to “make it spin”, we don’t often pay much attention to them.

In this article, I’ll be looking at the Trinamic TMC2130 stepper motor driver, one that comes with more bells and whistles than you might ever need. On the one hand, this driver can be configured through its SPI interface to suit virtually any application that employs a stepper motor. On the other hand, you can also write directly to the coil current registers and expand the scope of applicability far beyond motors.
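To give an idea of what configuring the driver over SPI looks like, here is a minimal sketch that only builds the bytes for one register write; it does not talk to any hardware. It assumes the datagram layout and the `IHOLD_IRUN` register address (0x10) as I read them from the TMC2130 datasheet: 40 bits per transfer, one address byte with the top bit set for writes, followed by 32 data bits, most significant byte first. The helper names are made up for this example, so check everything against the datasheet before using it.

```python
import struct

WRITE_FLAG = 0x80      # top bit of the address byte marks a write (datasheet assumption)
REG_IHOLD_IRUN = 0x10  # current-control register address (datasheet assumption)


def ihold_irun_value(ihold, irun, ihold_delay):
    """Pack hold current, run current and hold delay into one 32-bit register value."""
    fields = (("ihold", ihold, 31), ("irun", irun, 31), ("ihold_delay", ihold_delay, 15))
    for name, value, limit in fields:
        if not 0 <= value <= limit:
            raise ValueError(f"{name} must be between 0 and {limit}")
    # IHOLD sits in bits 4..0, IRUN in bits 12..8, IHOLDDELAY in bits 19..16
    return ihold | (irun << 8) | (ihold_delay << 16)


def write_datagram(address, value):
    """Build the 5-byte write datagram: address byte plus 32-bit big-endian data."""
    return struct.pack(">BI", (address & 0x7F) | WRITE_FLAG, value & 0xFFFFFFFF)


frame = write_datagram(REG_IHOLD_IRUN, ihold_irun_value(ihold=8, irun=20, ihold_delay=6))
print(frame.hex())  # prints 9000061408
```

On real hardware, these five bytes would be clocked out over SPI while chip select is held low; the SPI mode and clock limits are in the datasheet as well.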


Hallucinating Machines Generate Tiny Video Clips

Hallucination is the erroneous perception of something that’s actually absent – or in other words: a possible interpretation of training data. Researchers from MIT and UMBC have developed and trained a generative machine-learning model that learns to generate tiny videos at random. The hallucination-like clips, just 64×64 pixels in size, are somewhat plausible, but also a bit spooky.

The machine-learning model behind these artificial clips is capable of learning from unlabeled “in-the-wild” training videos and relies mostly on the temporal coherence of subsequent frames as well as the presence of a static background. It learns to disentangle foreground objects from the background and extracts the overall dynamics from the scenes. The trained model can then be used to generate new clips at random (as shown above), or from a static input image (as shown in pairs below).
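The foreground/background split can be pictured as a per-pixel blend. The sketch below is a toy illustration, not the team’s Torch7 code: it assumes the generator produces a moving foreground, a mask with values between 0 and 1, and a single static background frame, and that the final clip is `mask * foreground + (1 - mask) * background`. The function name and the four-pixel “frames” are invented for the example.

```python
def composite(mask, foreground, background):
    """Blend a moving foreground over one static background frame.

    mask, foreground: lists of frames, each frame a flat list of floats in [0, 1]
    background: a single flat frame that is reused for every time step
    """
    video = []
    for mask_frame, fg_frame in zip(mask, foreground):
        frame = [m * f + (1.0 - m) * b
                 for m, f, b in zip(mask_frame, fg_frame, background)]
        video.append(frame)
    return video


# Toy clip: two frames of four pixels, with a bright dot moving one pixel to the right
background = [0.2, 0.2, 0.2, 0.2]
foreground = [[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]]
mask = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0]]

clip = composite(mask, foreground, background)
print(clip)  # prints [[1.0, 0.2, 0.2, 0.2], [0.2, 1.0, 0.2, 0.2]]
```

Wherever the mask is 0, the static background shows through unchanged in every frame, which is why a fixed camera and temporal coherence matter so much for this kind of model.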

Currently, the team limits the clips to a resolution of 64×64 pixels and 32 frames in duration in order to decrease the amount of required training data, which is still at 7 TB. Despite obvious deficiencies in terms of photorealism, the little clips have been judged “more realistic” than real clips by about 20 percent of the participants in a psychophysical study the team conducted. The code for the project (Torch7/LuaJIT) can already be found on GitHub, together with a pre-trained model. The project will also be shown in December at the 2016 NIPS conference.