Researchers at the Delft University of Technology wanted to use FPGAs at cryogenic temperatures down around 4 degrees Kelvin. They knew from previous research that many FPGAs that use submicron fabrication technology actually work pretty well at those temperatures. It is the other components that misbehave — in particular, capacitors and voltage regulators. They worked out an interesting strategy to get around this problem.

The common solution is to move the power supply away from the FPGA and out of the cold environment. The problem is that the supply then sits at the end of long wires, and fluctuating current demands cause a variable voltage drop across them. The traditional answer to that problem is to have the remote regulator sense the voltage close to the load. This works because the current going through the sense wires is a small fraction of the load current and should be relatively constant. The Delft team took a different approach because they found sensing power supplies reacted too slowly: they created an FPGA design that draws nearly the same current no matter what it is doing.
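To make the voltage-drop problem concrete, here is a minimal sketch of the arithmetic. The supply voltage, wire resistance, and load currents are made-up illustrative numbers, not values from the paper, and the function names are hypothetical.

```python
def load_voltage(supply_v, wire_ohms, load_a):
    """Voltage seen at the far end of the wire: supply minus the I*R drop."""
    return supply_v - load_a * wire_ohms


def supply_for_target(target_v, wire_ohms, load_a):
    """Supply voltage needed so the load sees target_v at a known, steady current."""
    return target_v + load_a * wire_ohms


if __name__ == "__main__":
    WIRE_OHMS = 0.2  # hypothetical round-trip wire resistance
    SUPPLY_V = 1.2   # hypothetical fixed supply at room temperature

    # A fluctuating load sees a fluctuating voltage.
    for amps in (0.1, 0.5, 1.0):
        print(f"{amps:.1f} A -> {load_voltage(SUPPLY_V, WIRE_OHMS, amps):.3f} V at the load")

    # A constant load can simply be compensated with a fixed offset.
    print(f"supply needed for 1.0 V at a steady 1.0 A: "
          f"{supply_for_target(1.0, WIRE_OHMS, 1.0):.3f} V")
```

If the current never changes, the drop never changes either, so a fixed offset at the supply is enough. That is the appeal of a constant-current design.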

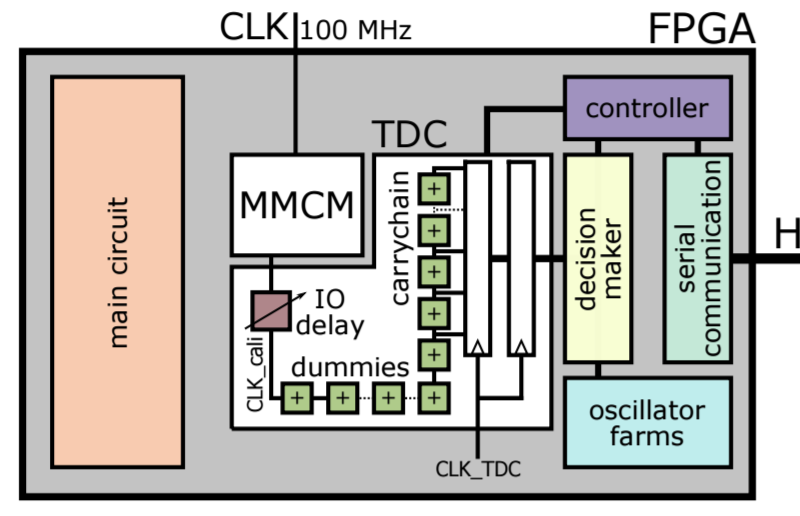

There are two parts to this trick. First, you have to know that the FPGA voltage dropped (implying a rise in current consumption). They do this by measuring the delay through a cell. Then you have to selectively control your power consumption. To do this, their design includes 4 “farms” of 128 oscillators each. These, of course, draw power. As other parts of the chip draw more power, the FPGA turns off 4 oscillators (one from each farm) at a time to keep the power consumption constant. As the chip draws less power, of course, the oscillators will reactivate to take up the slack.
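The reactive loop described above can be sketched as a toy simulation. Only the farm layout (4 farms of 128 oscillators, switched one per farm at a time) comes from the description; the per-oscillator current, the target current, the load trace, and the function names are assumptions for illustration. The real design senses voltage through a cell delay, which this sketch replaces with a direct comparison of total current against a target.

```python
FARMS = 4                  # from the article
OSC_PER_FARM = 128         # from the article
OSC_MA = 0.5               # hypothetical current per oscillator, in mA
STEP_MA = FARMS * OSC_MA   # current switched by one step (one oscillator per farm)


def control_tick(active, logic_ma, target_ma):
    """One reactive step: return the new number of active oscillators per farm."""
    total = logic_ma + active * STEP_MA
    if total > target_ma + STEP_MA / 2 and active > 0:
        return active - 1   # chip draws too much: switch one oscillator per farm off
    if total < target_ma - STEP_MA / 2 and active < OSC_PER_FARM:
        return active + 1   # chip draws too little: switch one per farm back on
    return active           # within half a step of the target: hold


def simulate(logic_trace_ma, target_ma, ticks_per_sample=200):
    """Return the settled total current for each logic-load sample."""
    active = OSC_PER_FARM   # start with every oscillator running
    settled = []
    for logic_ma in logic_trace_ma:
        for _ in range(ticks_per_sample):
            active = control_tick(active, logic_ma, target_ma)
        settled.append(logic_ma + active * STEP_MA)
    return settled


if __name__ == "__main__":
    trace = [20.0, 95.0, 140.0, 60.0]   # hypothetical logic current in mA
    print(simulate(trace, target_ma=256.0))
```

In this model the total can only be held to within half a step of the target, so the size of one oscillator sets the residual ripple.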

The paper has some good data on how components behave at cold temperatures. We’ve actually looked at that before. Maybe this technique is just what you need to hack your rapid popsicle cooler.

I wonder if it would also be useful to thwart a power analysis side channel attack.

It could, but they could still do invasive frontside and/or backside probing since there is no secure shield ( https://hal.inria.fr/hal-01110463/document ) protection in an FPGA.

Thanks. I thought those techniques were limited to places like Intel given the feature size. I see there’s been work on optical probing techniques too. Very interesting.

This video is pretty scary to those that want to put secrets in silicon. Long, but fascinating.

https://www.youtube.com/watch?v=Gt6VyuLZBww

In the paper, they reference this use in http://ieeexplore.ieee.org/document/7388574/

Is it predictive (built into the rest of the HDL) or reactive (feedback loop)?

Read again from “There are two parts to this trick.” Spoiler: it’s reactive.

It simply isn’t an affordable solution for long-term scalability of spin qubits, as the cryochambers have a very small thermal budget. Microwave signal I/Q single-sideband modulation (envelope signal generation by either AWG or DDS) and analog and digital pulses have to be produced on top of the consumption of the FPGA. It might sound good on paper, but dissipating power even when it is not necessary will make you run out of this thermal budget very quickly. The original problem this tries to solve is avoiding a bunch of long analog “cables” running from room temperature to 20mK.

Whatever thermal system they have set up, it’ll have to be designed to handle the FPGA’s waste heat at peak load. All this does is ensure that they’re always at that load. No heat is added to the system beyond what was already possible.

khm, thermal budget…

dissipating power, dissipating power, dissipating power…

Anyone tried https://github.com/m-labs/migen? It’s a toolbox for building complex digital hardware in Python instead of Verilog or VHDL.

Can see someone adding a few transistors, some fast gates or an opamp combination, that control a second load to balance things in real time. Inefficient probably, but a smooth load. I guess gone are the days when you just chuck in a lamp to limit the current.

But why? It is one thing to cool an AMD Athlon to -40C in order to make it run ~25% faster and another to cool something to 4K (ca -270C) without getting any significant improvements in performance.

Is this intended to measure other circuits external to the FPGA?

Reading the paper, it seems that they were getting data from a high-speed ADC at that low temperature and storing the results in the FPGA. Using the compensation system allowed them to reduce the noise floor compared to what they had without it. In their example they only used the ADC against a known signal to calibrate and test the system; I’m assuming that the aim was to just generally improve test setups when trying to measure in low temperature environments.

More specifically, they needed to interface with a quantum processor operating at cryo temperatures.

Thanks to you both! Seems I skimmed the paper too fast :)

Point of order: There is no such thing as a “degree Kelvin”. The unit of measure of the Kelvin scale is the kelvin.

Maybe they’re just telling some guy named Kelvin that the temperature is around 4 degrees.

Well… While not technically* correct we all understand what was meant, right?

(* nowadays that is, it was known as degrees Kelvin up until the 70’s IIRC)

That is different. And I wonder if there would be an easier approach. As we can see that they use a constant clock rate, it shouldn’t be too hard to make something that is inverted and does the same thing. For example, if one inverts all the inputs and outputs of an OR gate, we get an AND gate instead, and vice versa. The same thing goes for all the other logic gates out there. Therefore we could make the same logic chip, but inverted, meaning that it will do the exact same thing, only giving the result in an inverted format; in other words, all ones will be zeros, and vice versa. This would ensure that the combined power demand of the two would always equal a constant.

And for everyone thinking that surely this couldn’t work: well, then you surely don’t know logic well enough, so think about it a bit more and you will maybe see. Knowing this inversion trick is also really nice if one needs a certain gate and only has other gates on hand, or simply wishes to bake a bit more logic into the same number of gates.
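The gate inversion itself (De Morgan’s laws) is easy to check exhaustively. The sketch below only verifies the logic identity; it says nothing about power consumption, and the function names are made up for illustration.

```python
from itertools import product


def inverted_io_or(a, b):
    """An OR gate with both inputs and the output inverted."""
    return not ((not a) or (not b))


def inverted_io_and(a, b):
    """An AND gate with both inputs and the output inverted."""
    return not ((not a) and (not b))


def de_morgan_holds():
    """Check all four input combinations for both gates."""
    return all(
        inverted_io_or(a, b) == (a and b) and inverted_io_and(a, b) == (a or b)
        for a, b in product([False, True], repeat=2)
    )


if __name__ == "__main__":
    print("inverted OR behaves as AND, inverted AND behaves as OR:", de_morgan_holds())
```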

And as a minor aside, Kelvin isn’t measured in degrees, if one wants to follow the standards set by the International Committee for Weights and Measures.

CMOS power consumption depends on how many transistors are switching, not whether a bit is at a one or zero. You would need to have one transistor that switches on every clock cycle its counterpart doesn’t, which would be very impractical to build.

ECL works the way you describe, but not for power consumption reasons.
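A toy model makes the switching argument concrete. It assumes, as a simplification, that each output toggle costs one unit of dynamic energy and ignores everything else about real CMOS; the bit sequence and function names are made up for illustration.

```python
def toggles_per_cycle(bits):
    """1 where the output changes between consecutive cycles, else 0."""
    return [int(prev != cur) for prev, cur in zip(bits, bits[1:])]


def invert(bits):
    """The logically inverted copy of an output sequence."""
    return [1 - b for b in bits]


def combined_toggles(bits):
    """Toggles per cycle of the original output plus its inverted mirror."""
    original = toggles_per_cycle(bits)
    mirror = toggles_per_cycle(invert(bits))
    return [a + b for a, b in zip(original, mirror)]


if __name__ == "__main__":
    output = [0, 0, 1, 1, 1, 0, 1, 0, 0, 0]   # hypothetical output of some logic
    print("original:", toggles_per_cycle(output))
    print("combined:", combined_toggles(output))
```

In this model the inverted copy toggles on exactly the same cycles as the original, so the pair doubles the activity instead of flattening it: idle cycles stay idle and busy cycles get busier.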

Yes, CMOS consumes power when switching; therefore, if we have two circuits doing the same thing, but one logically inverted, then they would have a constant number of transitions per cycle. So the two would balance out to a constant number of transitions per cycle.

Now, this isn’t strictly true, as the logically inverted gate might not be built with the same number of transistors, but this can be balanced if the application really needs the last few percent.

The biggest downside here is that we will also have a higher power consumption, though this consumption will be constant per cycle; therefore, if our clock is fixed, our power consumption is as well.

But there are other easier to implement methods of getting decently constant power consumption.

The easiest method to even out the power consumption of a device is to push it into the noise of the system; this can be done by having a large resistor in parallel with it. But this might not always be the smartest of moves, especially in a cryogenic system.

No, it doesn’t work for several reasons:

– If your input data does not have any transitions then neither the non-inverted nor the inverted circuit will have any transitions.

– You need the current state and the next state of your logic circuit to determine how many extra transitions are needed. Whereas your ‘main’ logic does something with a set of bits, the ‘transition’ logic needs to do something with the current set of bits AND the new set of bits to determine how many bits it needs to flip itself. The act of determining how many bits to flip results in bits being flipped which defeats the purpose!

– You can’t determine beforehand how many bits to flip if your input is unpredictable!

Regardless of the number of transistors, a logically inverted circuit in any shape or form is not going to work.

“Well, then you surely don’t know logic well enough, so think about it a bit more and you will maybe see”

*cough*

First, this only works if one runs at a fixed clock speed (if we use field-effect transistors, a.k.a. CMOS).

Secondly, making sure we always transition roughly the same number of transistors isn’t hard. And the fact is, it becomes even easier if we use buffers and always transition everything back to a known state between cycles. And from this we can easily build a “false” logic path that will make sure to even out the transitions.

And yes, we cannot predict an unpredictable input, but that isn’t even what we are doing. After all, we don’t need to balance the future, or try to balance something out before it happens. If we balance things out while they travel through our implementation, then life gets so much easier, and it will give the same end result. (And yes, not instantly balancing something out will give some small current ripple, but that will be in the noise of the overall system….)

Do you notice that you change your arguments every time someone challenges your statements?

(Riddle me this:

Who transition compensates the transition compensator?)

It wouldn’t help.

Say you have a register that latches on certain operations. That means, in order to balance the power consumption of that, you would need to have a counterpart register that latches every time the actual one does not, and every time it latches it needs to match however many bits will flip on the next cycle, which it can’t know. You could do some averaging, but then your power matching circuit is drawing more power than anything, takes more than half of your FPGA, and can’t even balance itself.

The idea fundamentally will not work.

Now, I’m not sure I understand you correctly, but how would making a new chip, with all the associated costs including time, be relevant when they can use an off-the-shelf FPGA and do the compensation in that?

Or did you mean to use inverted logic in the FPGA? It wouldn’t make any sense either given the design of those.

CMOS designs aren’t really symmetric BTW so I think it would be hard to get right.

It was just a quick idea of mine, doesn’t need to actually be practical.

And yes, cmos isn’t symmetric and compensations for that would be needed.

And implementing it on an FPGA would probably be a bit of a pain.

But I at least find it interesting that doing power compensation with hundreds of oscillators was the way to go here. It seems like an overly convoluted method when one just as well could have tried to make the logic itself do the balancing.

Damn this is such a dirty hack…

ECL gates have nearly constant current draw but use bipolar transistors.

So I was thinking along the lines of Alexander Wikström’s post.

Well, it was just a quick idea, but people these days seem to have a one-track mind and only take CMOS and FETs into consideration. So sad when there are so many superior technologies out there in certain applications. (BJTs in this case….)

A second advantage of bipolar transistors is that their power consumption is rather flat over frequency, compared to field-effect transistors with their rather huge gate capacitance. (Capacitors act like a low-value resistor at high frequency.)

But well, CMOS is perfect for low frequency, low power applications, like microcontrollers.