Our better-traveled colleagues having provided ample coverage of the 34C3 event in Leipzig just after Christmas, it is left to the rest of us to pick over the carcass as though it were the last remnant of a once-magnificent Christmas turkey. There are plenty of talks to watch online, and of course the odd gem that passed the others by.

It probably doesn’t get much worse than nuclear conflagration when it comes to risks facing the planet: countries nervously peering at each other, each jealously guarding its stock of warheads. It seems an unlikely place to find a 34C3 talk about the 6502 microprocessor, but that’s what [Moritz Kütt] and [Alex Glaser] managed to deliver.

Policing any peace treaty is a tricky business, and one involving nuclear disarmament especially so. There is a problem of trust: with so much at stake, no party is willing to reveal more than the most basic information about its arsenal, and none trusts verification instruments manufactured by another player's state agency. The inspectors' instruments therefore must not harvest too much information about what they are inspecting, can store only something analogous to a hash of the data they do acquire, and must be of a design open enough to be verified. This last point becomes especially difficult when the hardware in question is a modern high-performance microprocessor board; an object of such complexity could easily have been compromised by a nuclear player attempting to game the system.
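The talk summary doesn't spell out how the comparison works, so what follows is only a minimal sketch of the "information barrier" idea under our own assumptions. The instrument compares a measured energy spectrum against a template using a simple chi-square-like score, reveals nothing but a pass/fail bit, and keeps only a SHA-256 digest of the raw data so that it can later be checked against a record without being disclosed. The function names `verify` and `spectrum_digest`, the threshold of 2.0, and the scoring method are all hypothetical, not the speakers' method.

```python
import hashlib
from typing import Sequence, Tuple


def spectrum_digest(counts: Sequence[int]) -> str:
    """Commit to the raw spectrum without storing it: return a SHA-256 hex digest."""
    # Serialize each non-negative channel count as a 4-byte big-endian integer.
    raw = b"".join(c.to_bytes(4, "big") for c in counts)
    return hashlib.sha256(raw).hexdigest()


def verify(measured: Sequence[int], template: Sequence[int],
           threshold: float = 2.0) -> Tuple[bool, str]:
    """Hypothetical information-barrier check.

    Returns only a pass/fail flag and a digest; the measured spectrum itself
    is never returned or stored.
    """
    if len(measured) != len(template):
        raise ValueError("spectra must have the same number of channels")
    # Chi-square-like score per channel, skipping channels where the template is empty.
    terms = [(m - t) ** 2 / t for m, t in zip(measured, template) if t > 0]
    score = sum(terms) / max(len(terms), 1)
    return score <= threshold, spectrum_digest(measured)


if __name__ == "__main__":
    template = [10, 50, 200, 50, 10]
    print(verify([12, 48, 205, 47, 9], template)[0])  # True: close match
    print(verify([10, 50, 20, 50, 10], template)[0])  # False: main peak missing
```

The point of the design is what leaves the box: an inspector sees one bit, and the host sees a digest that commits to the data without revealing it.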

We are taken through the design of a nuclear weapon verification instrument in detail, with examples and the design problems they highlight. Something as innocuous as an ATtiny microcontroller handling the timing of an analogue board takes on a sinister possibility, as it becomes evident that with compromised code it could store unauthorised information or try to fool the inspectors. They show us their first detector, built around a Red Pitaya FPGA board, but make the point that its complexity makes it unverifiable.

Then comes the radical idea: if the technology used in this field is too complex for its integrity to be verified, what technology is simple enough that it can be? Their answer brings us to the 6502, a processor in continuous production for over 40 years whose internal structures are so well understood as to be de facto in the public domain. In particular, they settle on the Apple II home computer as a 6502 platform because of its ready availability and the expandability of [Steve Wozniak]’s original design, so all parties can both source and inspect the instruments involved.

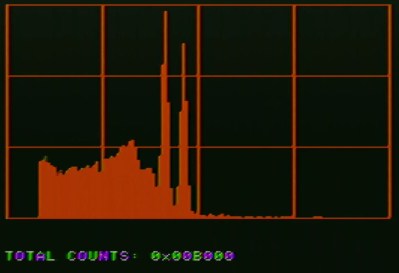

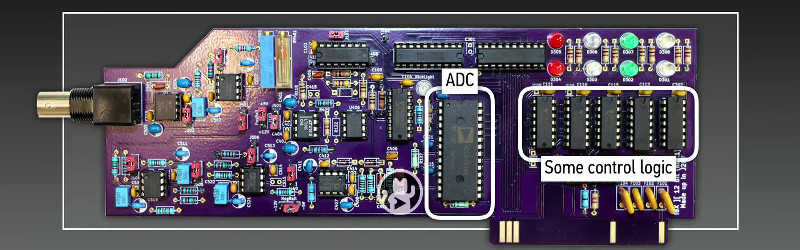

If you’ve never examined a nuclear warhead verification device, the details of the system are fascinating. We’re shown the scintillation detector that measures the energies present in the incident radiation, and the custom Apple II ADC board, which uses only op-amps, an Analog Devices flash ADC chip, and easily verifiable 74-series logic. It’s not intentional, but it’s pleasing from a retro computing perspective that everything except perhaps the blue LED indicator could well have been bought for an Apple II peripheral back in the 1980s. They then wrap up the talk by examining ways a genuine 6502 system could be made verifiable through non-destructive means.
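A scintillator turns each gamma ray into a flash of light whose intensity is roughly proportional to the energy deposited; the analogue chain shapes that into a pulse, and the flash ADC digitizes its height. Counting how often each ADC code occurs gives the energy spectrum, which is essentially what a multichannel analyzer does. The sketch below is only an illustration of that counting step: it assumes an 8-bit ADC (256 channels) and a list of already-captured codes, the names `build_spectrum` and `peak_channel` are hypothetical, and on the real instrument this work would be done by 6502 code on the Apple II, not Python.

```python
from typing import Iterable, List

ADC_BITS = 8                 # assumption: 8-bit flash ADC
CHANNELS = 1 << ADC_BITS     # 256 energy channels


def build_spectrum(adc_codes: Iterable[int]) -> List[int]:
    """Histogram pulse-height ADC codes into one counter per channel."""
    counts = [0] * CHANNELS
    for code in adc_codes:
        if not 0 <= code < CHANNELS:
            raise ValueError(f"ADC code out of range: {code}")
        counts[code] += 1
    return counts


def peak_channel(counts: List[int]) -> int:
    """Return the channel with the most counts (the first one on ties)."""
    return max(range(len(counts)), key=counts.__getitem__)


if __name__ == "__main__":
    codes = [100, 101, 100, 100, 99, 200, 100, 12]
    spectrum = build_spectrum(codes)
    print(peak_channel(spectrum), spectrum[100])  # 100 4
```

A histogram like this, rather than the stream of individual pulses, is the kind of data an information barrier would then reduce to a single pass/fail answer.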

It is not likely that nuclear inspectors will turn up at the silos with an Apple II in hand, but this work does show a solution to some of the problems facing them and might provide pointers towards future instruments. You can read more about their work on their web site.

There was an underhanded C competition about this issue:

http://www.underhanded-c.org/_page_id_5.html

Pretty interesting topic; it was cool to see the talk in person. Couldn’t stop myself from saying “yay OSHPark :D” when I saw the slide.

Same here on seeing the slide. :)

Is the 6502 less sensitive to ionizing radiation than modern processors due to its lower transistor density?

I believe so, mostly due to the larger semiconductor process (each semiconductor junction is much larger than in modern designs).

There are probably space-rated versions as well.

I’ve heard the opposite from satellite flight computer hardware people: smaller feature sizes in modern processors are less vulnerable to radiation-induced single event upsets. I don’t recall the details, though.

The 6502 is used here because it’s a well-known chip and its design is easier to verify than an FPGA. You don’t hold the 6502 next to the radiating components; it’s sealed off from them. The point is to create a device that tests nuclear warheads while allowing the design and validity of the tester itself to be verified.

I had that same thought at first, that they’d take old tech because of radiation resistance, but it’s about the complexity of the chip really. Interesting talk, worth watching.

I really enjoyed the talk and speaking to the two speakers afterwards. If you are interested, I have made a sketchnote about it: https://www.katjasays.com/34c3-tuwat-recap/#vintage

With today’s semiconductor technology, a fancy processor and storage could be put into a 6502 die or other old chip design and go unnoticed when X-rayed. It could collect more data and do more processing on it, then store the information to be read out later, completely unknown to the people using the instrument.

To me a reprogrammable FPGA seems more logical than this nonsense. You would just upload the trusted CPU configuration and know that it would run exactly what you uploaded; you could even do some testing to verify that it’s running correctly. The rest of the circuits are easy to verify.