In one bad week in March, two people were indirectly killed by automated driving systems. A Tesla vehicle drove into a barrier, killing its driver, and an Uber vehicle hit and killed a pedestrian crossing the street. The National Transportation Safety Board’s preliminary reports on both accidents came out recently, and these bring us as close as we’re going to get to a definitive view of what actually happened. What can we learn from these two crashes?

There is one outstanding factor that makes these two crashes look different on the surface: Tesla’s algorithm misidentified a lane split and actively accelerated into the barrier, while the Uber system eventually correctly identified the cyclist crossing the street and probably had time to stop, but its emergency braking was disabled. You might say that if the Tesla driver died from trusting the system too much, the Uber fatality arose from trusting the system too little.

But you’d be wrong. The forward-facing radar in the Tesla should have prevented the accident by seeing the barrier and slamming on the brakes, but the Tesla algorithm places more weight on the cameras than the radar. Why? For exactly the same reason that the Uber emergency-braking system was turned off: there are “too many” false positives and the result is that far too often the cars brake needlessly under normal driving circumstances.

The crux of self-driving at the moment is precisely figuring out when to slam on the brakes and when not to. Brake too often, and the passengers are annoyed or the car gets rear-ended. Brake too infrequently, and the consequences can be worse. Indeed, this is the central problem of autonomous vehicle safety, and neither Tesla nor Uber has it figured out yet.

Tesla Cars Drive Into Stopped Objects

Let’s start with the Tesla crash. Just before the crash, the car was following behind another using its traffic-aware cruise control, which attempts to hold a given speed subject to leaving an appropriate following distance to the car ahead of it. As the Tesla approached an exit ramp, the car ahead kept right and the Tesla moved left, got confused by the lane markings on the lane split, and accelerated toward its programmed speed of 75 mph (120 km/h) without noticing the barrier in front of it. Put simply, the algorithm got things wrong and drove into a lane divider at full speed.

To be entirely fair, the car’s confusion is understandable. After the incident, naturally, many Silicon Valley Tesla drivers recreated the “experiment” in their own cars and posted videos on YouTube. In this one, you can see that the right stripe of the lane-split is significantly harder to see than the left stripe. This explains why the car thought it was in the lane when it was actually in the “gore” — the triangular keep-out-zone just before an off-ramp. (From that same video, you can also see how any human driver would instinctively follow the car ahead and not be pulled off track by some missing paint.)

More worryingly, a similar off-ramp in Chicago fools a Tesla into the exact same behavior (YouTube, again). When you place your faith in computer vision, you’re implicitly betting your life on the quality of the stripes drawn on the road.

As I suggested above, the tough question in the Tesla accident is why its radar didn’t override and brake in time when it saw the concrete barrier. Hints of this are to be found in the January 2018 case of a Tesla rear-ending a stopped firetruck at 65 mph (105 km/h) (!), a Tesla hitting a parked police car, or even the first Tesla fatality in 2016, when the “Autopilot” drove straight into the side of a semitrailer. The telling quote from the owner’s manual: “Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph (80 km/h)…” Indeed.

Tesla’s algorithm likely doesn’t trust the radar because the radar data is full of false positives. There are myriad non-moving objects on the highway: street signs, parked cars, and cement lane dividers. Angular resolution of simple radars is low, and this means that at speed, the radar also “sees” the stationary objects that the car is not going to hit anyway. Because of this, to prevent the car from slamming on the brakes at every streetside billboard, Tesla’s system places more weight on the visual information at speed. Because Tesla’s “Autopilot” is not intended to be a self-driving solution, they can hide behind the fig leaf that the driver should have seen it coming.
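To get a feel for why low angular resolution matters, here is a rough sketch of how wide a single radar beam is at various ranges. The 4-degree beamwidth and the 3.7 m lane width are illustrative assumptions, not figures from Tesla or the NTSB report, and the function name is made up for this example.

```python
import math

# Illustrative assumptions only: neither number comes from Tesla or the NTSB.
ASSUMED_BEAMWIDTH_DEG = 4.0
ASSUMED_LANE_WIDTH_M = 3.7


def beam_footprint_m(range_m, beamwidth_deg=ASSUMED_BEAMWIDTH_DEG):
    """Cross-range width covered by one beam of the given angular width."""
    half_angle_rad = math.radians(beamwidth_deg / 2.0)
    return 2.0 * range_m * math.tan(half_angle_rad)


if __name__ == "__main__":
    for range_m in (20, 50, 100, 150):
        width = beam_footprint_m(range_m)
        verdict = "wider than a lane" if width > ASSUMED_LANE_WIDTH_M else "within a lane"
        print(f"{range_m:>4} m ahead: beam is {width:.1f} m wide ({verdict})")
```

Under these assumptions, a return from 100 m out could come from anywhere in a strip wider than the lane itself, so a sign or a barrier beside the road is hard to tell apart from one in the car's path.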

Uber Disabled Emergency Braking

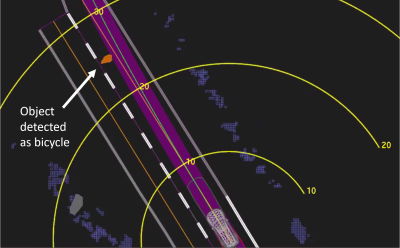

In contrast to the Tesla accident, where the human driver could have saved himself from the car’s blindness, the Uber car probably could have braked in time to prevent the accident entirely where a human driver couldn’t have. The LIDAR system picked up the pedestrian as early as six seconds before impact, variously classifying her as an “unknown object, as a vehicle, and then as a bicycle with varying expectations of future travel path.” Even so, when the car was 1.3 seconds and 25 m away from impact, the Uber system was sure enough that it concluded that emergency braking maneuvers were needed. Unfortunately, they weren’t engaged.

Braking from 43 miles per hour (69 km/h) in 25 m (82 ft) is just doable once you’ve slammed on the brakes on a dry road with good tires. Once you add in average human reaction times to the equation, however, there’s no way a person could have pulled it off. Indeed, the NTSB report mentions that the driver swerved less than a second before impact, and she hit the brakes less than a second after. She may have been distracted by the system’s own logging and reporting interface, which she is required to use as a test driver, but her reactions were entirely human: just a little bit too late. (If she had perceived the pedestrian and anticipated that she was walking onto the street earlier than the LIDAR did, the accident could also have been avoided.)
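The arithmetic behind that claim can be checked with constant-deceleration kinematics. This is a back-of-the-envelope sketch: the speed and distance come from the figures above, while the 1.5-second reaction time is an assumed, commonly quoted ballpark and not a number from the NTSB report.

```python
MPH_TO_M_PER_S = 0.44704
G = 9.81  # m/s^2


def required_deceleration(speed_m_s, distance_m):
    """Constant deceleration needed to stop within distance_m (v^2 = 2*a*d)."""
    return speed_m_s ** 2 / (2.0 * distance_m)


def stopping_distance(speed_m_s, decel_m_s2, reaction_s):
    """Distance covered while reacting plus distance covered while braking."""
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2.0 * decel_m_s2)


speed = 43 * MPH_TO_M_PER_S                 # about 19.2 m/s
decel = required_deceleration(speed, 25.0)  # about 7.4 m/s^2

ASSUMED_REACTION_S = 1.5  # assumption for illustration, not from the report

if __name__ == "__main__":
    print(f"speed: {speed:.1f} m/s")
    print(f"deceleration needed to stop in 25 m: {decel:.2f} m/s^2 ({decel / G:.2f} g)")
    human = stopping_distance(speed, decel, ASSUMED_REACTION_S)
    print(f"same braking after a {ASSUMED_REACTION_S} s reaction: {human:.1f} m")
```

Stopping in 25 m takes roughly three quarters of a g, which is hard braking but plausible on dry pavement. With the assumed reaction time added, the car covers more than 25 m before the brakes are even applied.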

But the Uber emergency braking system was not enabled “to reduce the potential for erratic vehicle behavior”. Which is to say that Uber’s LIDAR system, like Tesla’s radar, obviously also suffers from false positives.

Fatalities vs False Positives

In any statistical system, like the classification algorithm running inside self-driving cars, you run the risk of making two distinct types of mistake: detecting a bike and braking when there is none, and failing to detect a bike when one is there. Imagine you’re tuning one of these algorithms to drive on the street. If you set the threshold low for declaring that an object is a bike, you’ll make many of the first type of errors — false positives — and you’ll brake needlessly often. If you make the threshold for “bikiness” higher to reduce the number of false positives, you necessarily increase the risk of missing some actual bikes and make more of the false negative errors, potentially hitting more cyclists or cement barriers.
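A toy example makes the tradeoff concrete. The detection scores below are invented for illustration and have nothing to do with either company's data; the point is only that moving the threshold trades one kind of error for the other.

```python
# Hypothetical detections: (classifier score, whether an obstacle was really there).
DETECTIONS = [
    (0.95, True), (0.90, True), (0.80, False), (0.75, True), (0.60, False),
    (0.55, True), (0.40, False), (0.30, False), (0.20, True), (0.10, False),
]


def count_errors(detections, threshold):
    """Return (false_positives, false_negatives) when braking at score >= threshold."""
    false_positives = sum(
        1 for score, real in detections if score >= threshold and not real
    )
    false_negatives = sum(
        1 for score, real in detections if score < threshold and real
    )
    return false_positives, false_negatives


if __name__ == "__main__":
    for threshold in (0.25, 0.50, 0.85):
        fp, fn = count_errors(DETECTIONS, threshold)
        print(f"threshold {threshold:.2f}: {fp} needless brakings, {fn} missed obstacles")
```

On this made-up data, the lowest threshold brakes needlessly four times and misses one obstacle, while the highest never brakes needlessly but misses three.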

It may seem cold to couch such life-and-death decisions in terms of pure statistics, but the fact is that there is an unavoidable design tradeoff between false positives and false negatives. The designers of self-driving car systems are faced with this tough choice — weighing the everyday driving experience against the incredibly infrequent but much more horrific outcomes of the false negatives. Tesla, when faced with a high false positive rate from the radar, opts to rely more on the computer vision system. Uber, whose LIDAR system apparently generates too-frequent emergency braking maneuvers, turns the system off and puts the load directly on the driver.

And of course, there are hazards posed by an overly high rate of false positives as well: if a car ahead of you inexplicably emergency brakes, you might hit it. The effect of frequent braking is not simply driver inconvenience, but could be an additional cause of accidents. (Indeed, Waymo and GM’s Cruise autonomous vehicles get hit by human drivers more often than average, but that’s another story.) And as self-drivers get better at classification, both of these error rates can decrease, potentially making the tradeoff easier in the future, but there will always be a tradeoff and no error rate will ever be zero.

Without access to the numbers, we can’t really even begin to judge if Tesla’s or Uber’s approaches to the tradeoff are appropriate. Especially because the consequences of false negatives can be fatal and involve people other than the driver, this tradeoff affects everyone and should probably be more transparently discussed. If a company is playing fast and loose with the false-negative rate, drivers and pedestrians will die needlessly, but if they are too strict the car will be undriveable and erratic. Both Tesla and Uber, when faced with this difficult tradeoff, punted: they require a person to watch out for the false negatives, taking the burden off of the machine.

I do have doubts whether autopilot will ever get off the ground properly. I think there is the potential to improve the technology a lot, but I don’t think many more fatalities will be accepted before the entire concept is outright banned.

Something worthy of note though is that in the Uber case, the woman was looking at her phone; in the Tesla crash in March, the logs show the guy didn’t have his hands on the wheel leading up to the incident; and in the Tesla crash last year (or the year before?), the driver also didn’t have his hands on the wheel and was warned by the system a bunch of times.

Isn’t the deal with autopilot that it is made very clear to the driver that it’s not to be relied on and they must be alert and have their hands on the wheel at all times?

So the cynical side of me says that people misusing the technology will be its downfall, but then again, it is a lot to expect that humans could be trusted with such responsibility.

The problem is that humans aren’t very good at supervision. We get distracted and lulled into a false sense of security. We tend to relax and switch focus when things keep going well and nothing happens. Why grab the wheel when warned when nothing happened the first 20 times you did that?

This is a problem even for airline pilots, who are highly trained for the task (see for instance the crash of AF447: https://en.wikipedia.org/wiki/Air_France_Flight_447).

Yeah, I mean, it’s totally true that humans can’t be trusted, right? Why stop at a stop sign when it looks like it’s open? Just gun it straight through it, there totally won’t be a problem. And red lights? Most of the time you can just zip right through them, so sure, just ignore it. Seat belts, too. No one ever wears seat belts, because 99.9% of the time, nothing happens. (*)

Wait, none of those things are actually true… Why is that, I wonder?

Oh, yeah. Education, along with deterrents (fines). Wonder why that isn’t working in this case? Hmm – maybe it’s because… there isn’t any?

(*: yes, many people still don’t wear seat belts, but mandatory seat belt usage and enforcement has been well-proven to increase seat belt usage, and that’s the point. You want people to do something, *make them do it*.)

I don’t think ThisGuy was saying that people aren’t capable of following rules or even that they aren’t good at them. I believe his point is simply that a typical human is better at driving than at supervising a machine. And I agree. Driving requires a certain element of focus and engagement. You’re constantly scanning, processing, and using your body to control and make adjustments to the vehicle. The role of supervisor in a semi-autonomous vehicle is a very odd one indeed. Think about it: the car boasts a feature that can drive itself, and it’s even called “auto-pilot”. You pay extra for this feature because that sounds pretty cool: the car is smart enough to do some things for you, so you don’t have to do them yourself. But wait, that’s not exactly the case. Even though the vehicle can do these tasks, you’re still expected to have full attention on the process as though you were actually driving. It’s a little confusing, even hypocritical. After all, if you still need to keep your hands on the wheel, you still need to keep your foot hovered over the brake, and you still need to have full attention on the road and your surroundings, then what exactly is the benefit of this feature? I would pay the extra money if that feature safely and conveniently allowed me to eat a burrito while in the driver’s seat (including bite-by-bite salsa application). But if I still have to act like the driver and have all the responsibility of the driver, then where is the benefit? If I can’t eat the burrito blissfully then I’d rather just drive the good old-fashioned way.

To ThisGuy’s point: we’re better at driving for 4 hours straight than at sitting behind the wheel, forcing attention on the road for 4 hours even though we aren’t controlling the vehicle.

And Pat, to your point “You want people to do something, *make them do it*”: agree! You know how you keep people focused on the road, their surroundings, and the status of their vehicle? You make them! If the person in the driver’s seat wants to make it alive to their destination, THEY need to perform all the safety tasks and not assume the computer can do it just fine.

PS “Auto-pilot” is the dumbest name for Tesla’s system. It absolutely confuses the message they are trying to send, and I would not be surprised if the Auto-pilot name comes up in future litigation surrounding unfortunate events involving the Auto-pilot feature and the human not properly supervising.

“But if I still have to act like the driver and have all the responsibility of the driver, then where is the benefit? If I can’t eat the burrito blissfully then I’d rather just drive the good old-fashioned way.”

Because the computer can’t get distracted as easily as I can, and it has better sensors than I do. I don’t want self-driving so I don’t have to drive. I want self-driving so I have something to improve my chances of getting there alive. Every time my kids say “Oh look, a deer!” I immediately am reminded how much it sucks to not have total 180-degree forward vision perception.

I’m sorry for the people that think that computer driving assistance is for letting them watch Harry Potter, but I would prefer the technology not be banned for those of us who actually want the real benefits.

“You know how you keep people focused on the road, their surroundings, and the status of their vehicle? You make them!”

Cool! So let’s just make Auto-Pilot only work if the driver’s hands are mostly on the wheel and eyes are mostly on the road. If it can watch the road, it can watch the driver, too. I, for one, welcome our robot overlords.

mandatory “remain in control of your vehicle” laws have been on the books from nearly day one. i don’t think there’s anything stopping the police from ticketing tesla drivers for taking their hands off the steering wheel, even if they’ve got the poorly-named autopilot turned on.

The FDA is extremely experienced in the problem of making sure new drugs and medical devices are safe and effective. There are many steps before you get to a clinical trial, and among these is a trial to show that a trial is safe regardless of being effective.

I say this to point out that Uber is conducting clinical trials without any of this established process. They are making it all up about what their safety process is. They did not do tests to determine if the testing process itself is effective or safe. (And it’s not: other companies use multiple observers who don’t communicate.)

If a drug company did this they would be shut down.

Moreover, the claim of “we have driven 5 million miles with no problems” is rubbish. Here’s a quick reality check. How many of those millions of miles had an Uber executive run out in front of a car going full speed? None. So how can they test this on the public?

I get the gung-ho attitude and the Silicon Valley motto of failing fast. But this is not the same. It’s like testing radiation therapy by just irradiating people in public. Moreover, there’s not even a clean roadmap to follow here: when you introduce a new Tylenol you already know a lot about what similar medicines do and test them. We don’t even have that. Even airplane testing didn’t have this path: it was done in the world wars.

They simply need to cancel all these and start over with a ten year plan of staged trials before one car is in the public.

I think your comparison with the drug industry is a bit of a stretch. Sure, there are procedures and processes in place NOW in the drug industry, but the automation of driving is an entirely new industry. Did Edward Jenner follow modern processes when he tested his smallpox vaccine? Not just no, but oh-holy-jeebus-HELL-no. Based on nothing more than observation and conjecture, he jumped straight to a human test subject. Or so they told me, but they’ve lied to me before, so let’s just call it anecdotal.

The state of automatic driving machines today IS roughly analogous to aviation development in the 1910s and 20s. This had nothing to do with the fact that this coincided with a couple of big wars. Did Wilbur & Orville get certified flight training before taking a glider and irresponsibly dropping a motor into it? Did they get their jalopy inspected by a certified A&P mechanic? Heck no; who was even qualified to do such inspections? Not even after the death of their biggest inspiration, Otto Lilienthal, in the crash of a glider of his own making, did they cease their dangerous experiments.

Just where are the standards supposed to come from, at this stage? Yes, of course, these systems have to be extensively tested on closed road courses before being turned loose on public streets. But they have been. The variety of conditions it takes to identify the flaws that caused the two incidents mentioned in the article would have taken decades to produce under laboratory conditions. Kind of how it works with drug testing: phase 1 trials only identify gross flaws in a substance, since they don’t involve enough subjects to statistically show more subtle effects, which is why there are additional phases that involve more and more patients. But of course, all of the people involved in clinical trials are volunteers. How can we justify testing potentially dangerous machines using test subjects who have not been informed of the risk, much less given consent? But there’s an analogy here, too: if you are testing a vaccine, there is often some unknown risk that the vaccine, either by itself being infectious, or by its failure to protect the subjects from infection, or by increasing the subjects’ susceptibility to other infections, increases health risks to the people those subjects come in contact with.

That crash is a bad example; pulling through a stall warning is idiotic. However, boredom is a big hazard. On the other hand, there are too many incompetent drivers. I think roads need to support autonomous driving, so that certain roads are autonomous and on the others you have to drive yourself. The autonomous roads can, for example, be fenced and have guides for the car (and maybe have human driving prohibited), and on other roads the human has full control.

There is a 4-way intersection in Everett MA with a red light and three green arrows pointed to the right, to the left, and straight ahead. There are times when all four lights are simultaneously illuminated. What does a self-driving car do when presented with such a paradox?

Recently I saw a picture of a very busy downtown Boston intersection. In the image there was a fire-pull box with a red globe on the top. The globe is not illuminated, but in bright sunshine it still appears to glow red. You have to look good and hard at the image to see that the traffic signal is green; at first glance you see the red globe and assume the light is red. Humans have millions of years of experience with discerning objects in poor conditions, and expecting cars to solve this problem is just foolish.

All the more reason to build things for the machines rather than for people, since they are our future.

+1 – I still don’t understand how what Tesla does is even legal and they haven’t been sued out of this idea yet. Maybe they just haven’t run over the right pedestrian or crashed into the right person yet…

– “Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph” How can you even give consumers an option to use a feature like this on public roads? We regulate what a taillight has to look like, but not a feature that may kill people other than those taking the risk to use it? – At least in the US, with all the regulations that apply to highway vehicles (ie ‘not legal for road/highway use’ disclaimers on many aftermarket products and whatnot), how can you get away with a vehicle feature that flat out has a warning ‘may run someone over or crash into a parked car if used’?

– With current tech, my opinion is it should just flat out be shut down. It’s like trying to do this 15 years ago. Sure, we had cameras, radar, and image processing and whatnot, but that was as far away from something safe as we are today. There are too many edge cases, and always will be (most likely in any of our lifetimes), to have an ‘autopilot’ feature. Decisions to shut down emergency braking confirm this; if you need to disable a safety feature to make it ‘usable’, it is NOT usable.

– To the comments about the Wright brothers and whatnot, that is not remotely the same. They took their own lives into their own hands. I have no issues with this. If Tesla engineers want to play with this on their own dedicated site, great, but this is nowhere near roadworthy. Releasing something like this to be used on public roads is a whole other story. Even back to the Wright brothers, when at some point it went commercial, at least anyone getting on a plane made a voluntary decision to do so (unless possibly military related…). It was your own choice to risk your own life. It’s like if they issued a credit card that ‘may self-destruct in purchases over $50, injuring or killing yourself and bystanders’. Bad enough if it might take out you, but what about the family next to you whose life you’re deciding to gamble for your fancy gadget that doesn’t really solve any problems?

– As a backup safety ‘copilot’, sure, but no way should these things be capable of taking over the controls. No way a human is going to keep a close enough supervision of it to keep it safe, and even if they did, what is the point of it at that point?

– ‘Dedicated roads to autonomous vehicles’ – maybe, but I can’t see them being cost feasible anytime soon, and you’d still have edge cases. Mirages, rain, snow, ‘mist catching reflections in it’, maybe road construction and someone forgot to update ‘the system’ to accommodate, debris, etc…

Read the NTSB reports (preliminaries of both incidents are currently available).

In the Uber case, what she was looking at was not a phone, but apparently a diagnostic screen, that, as part of her job as a test driver, she was supposed to be monitoring.

The Tesla case is a bit stranger, as the last warning that the driver received was some 15 minutes prior to the accident. Personally, I can’t understand how he was not paying attention at that moment, but it was nowhere near as bad as Tesla has tried to make it out in the press.

Isn’t that an unreasonable expectation for the user, though? People like to point to this and blame the user, say they were foolish for not always paying attention, but what else would an autopilot system be for?

In conventional driving, we have a system where a user must always focus on the task at hand to successfully and safely navigate the streets. Constant attention is the expectation. In a hypothetical perfect autopilot situation, the user never has to pay attention. The computer has better judgement and reflexes, thus human interference would only make it less safe.

What we have now is in between those two scenarios. We have a system that does not constantly demand the user’s attention, but the user must occasionally and INSTANTLY take over for the system at random times without any warning, to prevent property damage, human injury, and/or death. That is the most unsafe sort of system: one that lulls you into a false sense of security and then randomly demands you suddenly take complete control.

The current implementation of self-driving vehicles is utterly horrible and fundamentally unsound. All the statistics about how safely they can drive X number of miles completely discount the number of driver interrupts which are required during that period, and most of the accidents are not blamed on a system which requires constant unannounced driver interrupts; they are blamed on the driver for not swooping in and rescuing the machine from itself quickly enough. That’s completely unfair and borders on bad faith on the part of the companies building these systems.

I do like how these companies absolve themselves of any responsibility by citing “the driver must remain in control of the vehicle at all times”. A legal cop-out that it seems is perfectly acceptable in the US.

My question is this, if the driver must remain in control at all times, then why have an automated driving system at all – when legally, you can’t use it!

Neither of those systems is “automated driving”; it’s rather “driving assist”. And it has saved, and probably will save, lots of lives. Humans suck at driving: they can’t keep their focus on traffic all the time and have horrible reaction times. With assisted systems the system can react more or less instantly, but this comes at the cost of even worse reaction time for the driver, who is now spending even less focus on the traffic…

“in the Uber case, the woman was looking at her phone”

The Uber driver wasn’t looking at her phone, that was a nasty piece of innuendo and propaganda put out by Uber when they released that video without giving any explanation or context. Previously Uber had carried out their tests with two people in the car, one to watch the road and the other to log various data (we don’t know exactly what). They went cheap, got rid of the second and required the driver to enter that data from time to time in what looks to us like a phone. The Uber driver was just doing her job in a way that she believed was safe because her employer, and the state government which allowed them to test on public roads, had given their assurances. Read the NTSB report linked above.

I think my problem with all this is the naming schemes. Look at Cadillac: their version of this technology is called ‘Super Cruise’, which gives a very different impression from ‘autopilot’. To an everyday user, Super Cruise sounds like slightly better cruise control, which gives zero false sense of an amazing self-driving car that can drive down a pitch-black, icy back alley without incident. Now think of Tesla’s Autopilot, and people think: oh, this will automatically pilot my vehicle, so I’ll let it do the work.

People have a gross over expectation of what autopilot can do and I blame this at least partially on Tesla for intentionally over selling what the product can do.

“Super Cruise”, I like that, for just the reason you said.

The features in question are actually named Auto-Steer and Adaptive cruise control (controls speed only). The UI is pretty clear that you are engaging Auto-Steer.

There isn’t anything in a Tesla that turns on “Autopilot” that I can find. As far as I know, autopilot is the name of the package when buying the car that gives you auto-steer and other self driving mini features (eg: auto parallel park).

Auto-Steer still sounds a hell of a lot like it automatically will steer my car. Even still I do not see thousands of articles online discussing Auto-Steer I see them discussing Autopilot, the package which Tesla sells the customer. Tesla is not going around selling people on Adaptive cruise control they are selling people on autopilot.

Actually, if you read the report on the Uber crash, she was looking at a system diagnostic screen and logging data. She was not on her phone. Yeah, that was my first thought when I saw the video as well. But she was actually doing her job as defined by Uber.

The problem isn’t with autopilot, it’s the fact that there aren’t enough vehicles WITH autopilot features on the road, and that the manufacturers who are implementing it so far have not decided to implement a “cross-platform” standard for vehicle-to-vehicle communication.

With the sensory awareness of a much larger area granted by each car having access to the sensor data for potentially kilometers around it, it makes for a far superior autopilot algorithm.

Local road authorities also share some of the blame in this, particularly in the case of the Tesla barrier incident. The authorities should by now be fully aware that these self-driving modes use road markings, so they should ensure that all the markings are of sufficiently high contrast that the vehicle can correctly identify the lanes, and that any areas (like the “gore”) that the vehicle should most definitely keep out of have a large striped pattern painted in them so that the software can properly recognise them as keep-out zones.

So, in the case of Tesla’s car driving into the concrete barrier, you’re saying that if all the other cars around it were ‘autopilot’, this wouldn’t have happened? Somehow I don’t think that every driver in the vicinity of the Tesla vehicle was driving like an idiot, and that *caused* the Tesla to crash.

Fred’s right. The car didn’t see a (worn, possibly moved) line on the pavement. The fact that it’s still happening in the same location and others is actually a bit scary, if understandable.

But this idea, that the machines are better at driving, is (still) demonstrably false. There may come a time when this is not the case, but that time is not now.

But the difference here is that different cars with different sensors and different code would all be feeding back into a shared pool of data, which may have correctly identified the barrier as a hazard. The Tesla may very well have taken the consensus and not attempted to merge into the barrier.

Also, if the local authority had maintained the section of road and the road markings to a standard of sufficient quality to assist auto-steering vehicles which are now a very real thing on public roads, the outcome would have been very different.

Perhaps the regulations surrounding the road markings need to be revised for the new reality we find ourselves in.

No, what’s really scary is that Tesla thinks that the default action for “This road seems confusing” should be “accelerate back to set point”.

I mean, I know that when I’m driving in foggy conditions and can’t figure out what to do, I totally just gun it, right?

The problem with self-driving cars right now is that they pretend like they know what the hell they’re doing. Computer vision systems *can estimate their own damn error*. The default action for “I’m not sure about this” should be to throw on hazards, sound an alarm inside the vehicle, and decelerate to a stop.
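A minimal sketch of the fallback described above, assuming the perception stack exposes a single self-estimated confidence value between 0 and 1. The names `choose_action`, `min_confidence` and `decel_step_mps` are hypothetical, and nothing here reflects how Tesla or anyone else actually implements it:

```python
from dataclasses import dataclass


@dataclass
class Action:
    target_speed_mps: float  # speed the controller should aim for next
    hazards_on: bool         # flash the hazard lights
    cabin_alarm: bool        # sound an alarm inside the vehicle


def choose_action(confidence, current_speed_mps, set_speed_mps,
                  min_confidence=0.8, decel_step_mps=2.0):
    """Pick the next action from the vision system's own error estimate.

    The threshold and deceleration step are made-up illustrative values.
    """
    if confidence >= min_confidence:
        # The scene is understood well enough: resume the cruise set point.
        return Action(set_speed_mps, False, False)
    # "I'm not sure about this": never speed up, warn everyone, slow down.
    slower = max(0.0, current_speed_mps - decel_step_mps)
    return Action(slower, True, True)
```

Called once per control tick with low confidence, this ratchets the target speed down to zero instead of accelerating back to the set point.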

Easy to say, but humans aren’t much better. Round here at the moment, there are large-scale roadworks in progress, and some lanes have been narrowed. Old markings are blacked out, new shiny white ones painted on.

In the ‘right’ weather/sun conditions, it’s virtually impossible to tell which are the old and which are the new. I’ve no idea how a CV system would fare.

Humans aren’t much better? So you’re telling me in your situation, where the lane markings are confusing in certain conditions, that humans say “screw it” and floor the accelerator?

OK, some idiots won’t, but the entire idea for self-driving cars is to make them *good* drivers, not dumb ones. If the road markings are confusing or dangerous you slow the hell down, not speed up to freaking 75.

We don’t need to improve the road markings, we need to improve the software so that it can handle cases like this. Because there will always be cases like this somewhere.

I can drive on a road completely without markings and I won’t hit the divider. As a human that’s a pretty simple task. Markings help, but they are not necessary. Self driving cars need to reach this level.

Agree. Shifting the blame onto the Roads Department and claiming poor road maintenance is a complete cop-out. It really surprises me how people can even claim this as a defence!

But again I suppose that is the mindset of many (and in particular some geographical regions)… anything I do as a result of my own stupidity is somebody else’s fault for not stopping me from doing it.

Your argument is pretty poor when you consider that highway departments routinely make changes to intersections when they experience an above average number of accidents. From what you say, they are perfect and never make any mistakes.

Which is why I believe when fully automated motorways (UK highways) are introduced, there will be a need to remove human drivers altogether.

Just think of Minority Report, the remake of Total Recall, I, Robot, etc. as examples, even Demolition Man lol

Only problem then is, what would happen if a criminal drove an old age petrol motor on the same motorway? Would the cars be able to react safely to the unknown car in their midst, or would the system just shut down the motorway to prevent an accident.

Then again, they could just force the cars ahead of the newly detected threat to form a rolling roadblock that the petrol motor could not pass, then TPAC maneuver it off the motorway and into police custody.

But I predict by 2040 we will be there and there will only be need for manual driving in local inner city, then again it will probably be a superior version of autopilot type software/hardware interface, tied into traffic light cameras, to detect pedestrians crossing well in advance through a super computer network to detect threats to the carriageway and cars.

The one benefit of such a motorway would be virtually no congestion ever! The computers could determine the vehicles that are using the motorway, determine their positions and speeds, then adjust them accordingly to ensure no one is holding anyone else up when entering or exiting the motorway, being held up by incompetent drivers in the fast lane will be a thing of the past, as will lane hogging.

I look forward to hearing stories of hackers who can prioritize their vehicle on the motorway and get to their destination doing 200mph+ lol

It would be truly excellent for the emergency services. Just this morning I experienced something that must frustrate the police to no end. A police car was approaching from behind me with its lights and sirens on, the car behind me pulled over to let it pass, I pulled over to let it pass. The car in front of me must not have seen it, but the one in front of that did so pulled over, the car in front of me slowly pulled out into the middle of the road to pass what must have looked like someone stopping, but blocking the police car.

It would be really good if parked cars could be part of the network as well, giving awareness to passing cars of pedestrians who might step into the road or the ability for emergency service vehicles to access roads which might otherwise be too congested for them.

I’m going to guess that the first successful assist system will work like the easy mode driving assists in video games. They still require you to control the !@#$ car yourself while trying to compensate for a driver’s shortcomings.

That makes the whole thing sound trivial, but doing this in reality means a lot of development in sensors, lane recognition, object recognition, etc.

It will feel like an evolution of adaptive cruise, lane keeping, and emergency braking tech today but hopefully be better than current solutions.

Then I think we can begin on the level 5 challenges.

Lack of understanding is one difficult-to-solve problem, but another is security: is a manufacturer going to offer updates for a car for the 15 years or more that is the typical service life of a road vehicle?

Will people keep them maintained so all the sensors are working? They seem to have enough trouble keeping emissions equipment in a working state.

Plus, let’s face it, most people, probably 95% of them in the US, are not going to give up their personal transportation for a ride share service.

Please buy our self driving systems. They are auto piloting *wink wink*. But you cannot actually trust them to drive themselves. We also set the level at which we will brake needlessly or make you at higher risk of getting rear ended and also set the level at which we will make you hit things and kill you. We also don’t share any of this information and also close source everything and also change things at will over the air without even telling you what we changed. Enjoy!

Look, I like the idea of electric cars but how is this ok to most people?

Isn’t driving a car a burden? How’d you like it if we took this burden from you with this autopilot. Oh, you thought that meant you didn’t need to pay attention to driving? How could you think that! How could you fail to react to a robot suddenly failing without any sort of warning at a random time while hurtling down the highway? Humans are the problem here!

Here we have a system which only requires your attention at random, long intervals to prevent disaster in less than five seconds. It’s like a five-second time bomb that you must hit the snooze button on, but the timer starts at some random moment several hours into the day when you might be distracted. Isn’t it convenient?

Don’t conflate electric cars with inadequately tested so-called auto-pilot systems. Many electric cars require driver attention at all times, which is the way I like it.

Sadly even if they perfect the system to be 100% foolproof and safe, what happens when a drunk stumbles in front of your car filled with your wife and kids?

Does it swerve your car causing it to crash and potentially kill you and/or your family to save the innocent pedestrian?

Or does it mow the pedestrian down, still risking your lives should he/she go through your windscreen impacting one or more of the passengers at high speed.

How would it detect a brick thrown from a bridge? A piece of concrete, metal etc falling from a lorry, the list goes on of things that would normally be detected through vigilant driving, but totally ignored by an automated system until it is a threat, which would be too late to react safely.

Pretty easy: It tries to avoid the collision without crashing somewhere. If it fails, it just tries not to crash into something else as the person running in front of the car (so just braking).

You say it’s easy but it is far from easy. So on a busy motorway, with cars either side (or a crash barrier on one side), you’re claiming it will just avoid going left or right and brake in time? I think not.

Say you’re on a current smart motorway where you are allowed to drive on the hard shoulder at peak traffic times (as long as the gantry above shows you a speed limit and not an X). You’re telling me that with a massive 30-ton articulated vehicle behind you, a car to your right and a barrier to your left, should a threat appear in front of you, the car would just emergency brake? Then the massive lorry behind would not be able to stop in time and would plough through both you and the threat ahead.

You can say it’s easy this or easy that, but at the end of the day, in the real world, there is going to be a need to assess who to kill in certain circumstances. No one’s family will accept that the car decided to kill a pedestrian to avoid risk to the driver(s), and neither will they accept vice versa. So until such an issue can be addressed fully, it will be a barrier to fully automated cars.

It’s not entirely clear from this article, but in the Uber case, the emergency braking system that was disabled was the EBS that came from the Volvo factory. Uber disabled it to prevent it interfering with their system.

A very important detail.

Adding semi-autonomous capabilities as a 3rd party add on to a vehicle that has some autonomous capability of its own (like emergency braking when approaching an obstacle) seems like it would be a tricky business (especially if the manufacturer isn’t keen to share internal details with you). You must then choose whether to incorporate the existing system unmodified, disable it, or hack and include it. None of those possibilities is a clear winner (disabling it seems simplest, but makes you look like an idiot when you run over a pedestrian who walks out from between parked cars or whatever).

We like to act like this is a good reason for humans being at fault instead of the system, but the emergency braking system was disabled because the car was practically impossible to drive with it turned on. In other words, the emergency braking system was turned off because it doesn’t work, and Uber considers that an acceptable scenario for a public road test.

The Volvo (radar-based) anti-crash system was _also_ disabled. But so was Uber’s LIDAR-based system. According to the NTSB, the emergency braking response was turned off during tests of the self-driving system.

At least that’s the way it reads to me, because the NTSB report makes no other mention of the Volvo system.

No. Google disabled BOTH Volvo’s native collision avoidance (an Intel system, which Intel showed could easily stop even using just shit dashcam footage), and on top of that disabled its own system from issuing brake commands even if it’s 100% certain of an impending crash.

Uber car was set to KILL.

You have your companies confused there. The Google self-driving cars spin-off is called Waymo. Uber self-driving cars are from Uber, Google has no sway over them. (some of the technology may be common: there is/was a lawsuit claiming Uber had copied systems from Google)

Yes, obviously I meant Uber in both sentences :)

The scanner system must suck pretty bad, if the cameras are prioritized over it. I can’t believe they would allow full automatic mode in the near future, as I understood from the news just a little while ago, with such a useless scanner.

Very nice article but hard to believe that assisted driving algorithm are so chea

This approach will never work. The camera and LIDAR are dumb, they don’t understand the things they see. As a bicyclist I don’t want these systems used while I am riding along the side of the road. Better to have a system that monitors the driver to make sure they are paying attention to their driving.

And give them a jolt if they are not paying attention.

At this point, why would you have an autopilot system at all? If the driver must be coerced into paying attention, why not just have them drive? Conventional driving is a system that demands user attention by design, whereas a partial autopilot is a system in which user attention is a decision made by whoever is behind the wheel at the time, until some random moment when they immediately need to be back in control and up to speed on the situation at hand. A system that is self-governing for long periods of time punctuated by sudden and unpredictable moments when complete user control is needed to prevent catastrophe is inherently and insanely unsafe.

To expect people to be forever vigilant in a self-driving car is not reasonable and completely contrary to the concept of a self-driving car. People don’t even pay enough attention in a regular car. When a layman thinks about self-driving cars, they understandably think that they exist so the people in the car don’t have to pay attention to driving. That’s the entire point.

I agree with you, but I did forget to put a smiley after my comment.

How about you don’t and keep on forgetting about it.

Personally, I prefer technology that augments human abilities explicitly (think ABS brakes) than tech that aims at removing the human from the loop.

OTOH, the Uber system _did_ classify the pedestrian in time to have saved her life. The five seconds where it’s like: it’s a car, it’s a pedestrian, it’s a fish, it’s a bicycle are strange for sure. But these systems also have super-human reaction times that nearly halve stopping distance, so they don’t have to discriminate as well.

So? I have my prejudices, but I’m willing to be convinced.

Human knowledge that fish wouldn’t generally be found on land speeds up the process.

Not really. Human perception is notoriously slow at identifying unexpected objects. I’ve definitely thought I saw something that didn’t make sense in the context I was seeing it, many times. No fish on the street, mind you, but I once saw a hotel in the sky, which was of course a cloud pattern. But the error persisted for several seconds, even while I was actively aware that what I was seeing could not be there. A passenger in my car saw the same thing.

Yeah – unexpected objects. A cyclist in traffic isn’t unexpected, while a fish would be, so it’s unlikely that a driver would suddenly hallucinate a fish in the middle of the road. Meanwhile, the computer just may, and then react as if a fish was actually there because it doesn’t know any better.

The possibilities are endless:

https://www.youtube.com/watch?v=63Xs3Hi-2OU

Of course they have to discriminate as well, because that lack of discrimination and algorithmic hallucination is exactly the reason why the system was disabled: the computer going “umm, err, fish?” is the fault that causes the erratic braking.

Also, the Tesla case was a bit more complex than the article makes it seem:

>”Tesla’s algorithm likely doesn’t trust the radar because the radar data is full of false positives. ”

Actually, they don’t trust the radar because the radar has really blurry vision. It sends out a cone of radio waves, and it gets an echo back from every distance. In the case where the Tesla broadsided the semi trailer, the radar was returning an echo from _underneath_ the trailer and the system interpreted the conflicting information (near obstacle but road clear) as a billboard above the road. So, Tesla changed the algorithm to not trust the radar except when it unambiguously says “obstacle ahead”.

The same probably happened in the case of the highway divider: the radar gets echoes back from further down the road because the divider isn’t blocking the entire road, so the car goes “Okay, let’s see the camera instead”, and the camera starts following the lane markers, ignoring the radar data as spurious, and the rest is history.

The fundamental problem isn’t so much a question of which data you emphasize more, but about the simplicity of the algorithms that are used to put weights on the data, because the AI that’s ultimately driving the car is dumber than a bag of hammers. It doesn’t have any sort of flexibility or ability to adapt to the situation because that too has to be programmed in advance, and it’s just an insurmountable task for any programmer.

Also, the companies claim they’re putting billions of simulated miles on the cars to test them out in various situations, but at least Google does not actually train the car’s AI by giving it simulated sensor input – because that would obviously be too computationally expensive – you’d have to emulate reality for the car. Instead, they simply tell the car what is there, bypassing the sensor system.

Self-teaching is being worked upon.

https://www.technologyreview.com/s/611281/a-machine-has-figured-out-rubiks-cube-all-by-itself/

>”Autodidactic iteration does this by starting with the finished cube and working backwards to find a configuration that is similar to the proposed move.”

There’s only one correct end state of a Rubik’s cube, so you can iterate backwards and try to see how far you are from your desired result, so that’s not exactly “self-teaching” in the same sense as what is required of an AI car.

Plus, this is computationally intensive, and you can’t afford a kilowatt-cluster of processors on-board your car because it can consume more energy than actually driving the car, so you have to implement whatever algorithm you have on the equivalent of a laptop CPU.

>” technology that augments human abilities explicitly (think ABS brakes) ”

Ironically, ABS often increases stopping distances because people stop braking when the pedal starts hammering back at you. You have to drill people to keep pushing it harder, and still they sometimes get the opposite reaction.

Or, ESP, which makes driving on the limits of your traction invisible to the driver by hiding the smaller slip-ups that serve as an early warning that you’re driving too hard for the road conditions. Then when you do slip up, you’re in the ditch.

Most cars on the road with ABS have no system to detect when the vehicle is still in motion (sliding due to minimal traction) once all the wheels have stopped rotating. Look up videos of snowstorms in Seattle. Cars and trucks sliding and bouncing down hills like balls in a pinball machine, with all wheels locked up.

Panicked drivers likely pushing hard on the brake pedal with both feet, wondering why their ABS hasn’t safely stopped their car.

In that situation the correct response is to *let off the brake* then push it again and hold. If the pulsing stops but the car is still sliding, off the brake and push again.

Some newer luxury cars may employ solid state gyros and/or accelerometers to make the ABS keep hammering until the vehicle has actually stopped moving.

There’s an idea for a hack. Add such a system to a vehicle, without affecting normal operation of the ABS. Monitor the accelerometer and gyro along with the wheel speed sensors. The speed sensor signals get passed through the addon without alteration. The addon gets ‘woke up’ by being connected to the brake light circuit.

When awake, the addon watches the wheel sensor signals, and if they *all* stop pulsing while the accelerometer and gyro are indicating the vehicle is still moving, the addon ‘steps in’ and sends a burst of fake wheel sensor signal to the ABS control to kick it back into pulsing. After forcing the ABS to resume pulsing, the addon switches back to monitor mode, waiting for *both* the wheel sensor signals and the accelerometer / gyro to read that the vehicle is stopped.

Even if all it manages to do is allow a vehicle to bobsled *straight* down a slick hill, that’s better than spinning and causing huge amounts of damage to itself and other vehicles and things along both sides of the road.
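The decision logic of that addon can be sketched in a few lines. This is only an illustration under loud assumptions: `addon_step` is a hypothetical name, the wheel sensors are reduced to pulse rates in Hz, and the accelerometer / gyro reading is collapsed into one `vehicle_moving` flag. A real ABS interface would need far more care than this:

```python
def addon_step(brake_light_on, wheel_pulses_hz, vehicle_moving):
    """Decide what the addon does on one control tick.

    brake_light_on  -- True while the brake light circuit is powered
    wheel_pulses_hz -- pulse rate from each wheel speed sensor
    vehicle_moving  -- True if accelerometer/gyro say the car still moves

    Returns 'asleep', 'pass_through' or 'inject'.
    """
    if not brake_light_on:
        # The brake light circuit is what wakes the addon up.
        return "asleep"
    all_wheels_stopped = all(hz == 0 for hz in wheel_pulses_hz)
    if all_wheels_stopped and vehicle_moving:
        # Wheels locked but the car is sliding: fake a burst of wheel
        # pulses so the ABS controller starts pulsing the brakes again.
        return "inject"
    # Otherwise forward the real sensor signals unaltered.
    return "pass_through"
```

With this rule the addon leaves normal ABS operation alone and steps in only while every wheel reads zero and the inertial sensors still report motion.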

“Or, ESP, which makes driving on the limits of your traction invisible to the driver”,

If the driver has ESP (Extra Sensory Perception) they should know if a mishap is imminent and adjust their driving/route accordingly.

B^)

ESP, ESC, DSC, it’s a system that applies the brakes to control under/oversteering when the car starts to skid.

Normally you’d feel the car pushing (understeer) under icy road conditions, or wheelspin when you’re trying to accelerate, but the ESP does a kind of torque vectoring thing that keeps you going along without noticing that you’re at the limits of your traction. Of course you may notice that the system is doing its thing, it might even blink a light or give you a little sound warning, but people get comfortable with it and simply push on because the car seems to be handling the situation just fine.

Kinda like 4WD in the winter. It gets you off the line easier, but it won’t make you stop any faster.

Thanks for the clarification [Luke].

Your response reminded me of the first time I drove a girlfriend’s Ford Festiva (manual transmission).

Halfway down the street, a bright orange light in the middle of the cluster lit up and it said “SHIT!”

(I nearly did!)

My girlfriend laughed and told me that it was telling me to “SHIFT”.

I knew how to drive a manual transmission, but I’d never had a car tell me before (other than sound/vibration) when to shift.

A ‘semi autonomous’ car (autopilot, whatever you want to call it) is worse than useless in my opinion. As is pointed out in the article, if the human driver is being relied on to make critical decisions, then they should be engaged with the minute-to-minute operation of the car. Humans cannot instantly go from a state of distraction to a state of action. Braking delays *when the human is already paying attention* already account for a large time; going from watching a video on a phone to braking will take much longer.

If the driver has a steering wheel / brakes and relies on a human for critical decisions, it is not a self driving car, and IMHO is going to be worse than a fully human-driven car. When / if fully self driving cars arrive (assuming some very serious problems are fixed, such as keeping sensors clear and working in cold Canadian winters), I could see using them, but these halfway ones are not for me.

As a driver of a vehicle I can choose to purchase a car without ‘autopilot’, or to not turn it on. I for one would keep the damn thing off!

My biggest concern is for the pedestrian who has no choice in whether they are going to be mown down by some errant AI.

Well the pedestrian can choose not to walk into traffic…

“If you don’t like my driving, stay off the sidewalk!”

-Bumper Sticker

They should design roads and walkways so pedestrian and high speed vehicle traffic remain separated from each other.

There are two ways to accomplish this goal:

1. Tear down the city and rebuild from scratch with wider streets

2. Hop in a time machine and tell the settlers that they should build wider streets to handle future vehicles

Honestly I can’t tell which one is more practical..

@F Or three: learn our lessons and apply them to future things we build. Same as we’ve been doing with everything else since time began.

There’s another very difficult problem that has some discussion, but not as much as the strict engineering issues: the ethics of the control system. It was brought to mind this weekend as I moved left to clear a cyclist near the top of a blind hill. If a car suddenly appears coming over the hill, does the system wipe the cyclist off the road to prevent a head on collision between two vehicles?

There’s an older, but interesting article at https://www.scientificamerican.com/article/driverless-cars-will-face-moral-dilemmas/

You are at fault in this case, I bet your driving instructor told you never to overtake before crests. What you always have to do is pull in behind the cyclist and wait with the overtaking until you have a view of the road.

Following on Tore: and this is the correct policy for a self-driving car to take as well. Always keeping following/sight distances that allow a complete stop in case of surprises.

If you assume that the vision system can correctly classify all situations, this is totally feasible. If.
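The "always be able to stop within what you can see" rule above comes down to simple kinematics: distance covered during the reaction time plus braking distance, d = v·t + v²/(2a). A rough sketch follows; the function names are hypothetical and the reaction time and deceleration you plug in are assumptions, not measured figures for any real car:

```python
import math


def stopping_distance_m(speed_mps, reaction_s, decel_mps2):
    """Distance travelled while reacting, plus distance needed to brake."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def max_speed_for_sight_mps(sight_m, reaction_s, decel_mps2):
    """Highest speed at which the car can still stop within sight_m.

    Solves v*t + v^2/(2a) = d for the positive root v.
    """
    at = decel_mps2 * reaction_s
    return -at + math.sqrt(at * at + 2 * decel_mps2 * sight_m)
```

For example, with an assumed 1 s reaction time and 5 m/s² of braking, a 60 m view over a crest allows at most 20 m/s (72 km/h). A shorter reaction time raises that limit, but only if the system has classified the scene correctly in the first place.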

Who is at fault isn’t the issue. The problem is that in cases where there are two hazards and no solution (whether real or only perceived), the driver has to make a choice. Modifying the case a little, you’re driving, and a bicyclist pulls out into the road ahead of you at a time when swerving to miss him will cause a head-on collision. When I was taught to drive, the order of priorities was given as: prevent hitting pedestrians, bicyclists, oncoming vehicles, other moving vehicles, and fixed objects, in that order. In an emergency situation, you don’t have time to be any more discriminating than that, and it still seems like a reasonable order of priorities. Keep in mind that in both cases, the outcome is unpredictable: as the driver, you can’t predict whether or not the bicyclist will realize his error and swerve into the ditch to avoid getting hit, and you can’t predict whether or not the driver of the oncoming vehicle will recognize the problem and swerve off the road on his side to prevent the head-on crash. So all you can do is react to minimize loss of life, which means avoiding the bicycle, because the bicyclist is more likely to be killed than the driver of the oncoming car is.

The problem is that if a self-driving car misidentifies an empty trash bag blowing out into the road as a pedestrian, and chooses to cause a head-on collision with another car to avoid it, the algorithm of the self-driving car will be judged as defective. If a human makes the same mistake, it will still be judged as “driver error”, but nobody will say “people shouldn’t be allowed to drive cars” because of it. Well, nobody but me – to me, self-driving cars are way overdue, because people really aren’t equipped to handle high-speed driving. It ruins lives, of both the victims and those at fault.

Any company that names their system “Autopilot” should not be allowed to hide behind any fine print stating it’s not really an autopilot. Once you’ve loudly touted it as “Autopilot”, you’ve established certain expectations and you should be held liable for those expectations you intentionally created.

I can understand why Waymo might be involved in more accidents. Their vehicles tap the brakes every 3 seconds to maintain a constant speed on the freeway. If you’re the human driver unfortunately following a Waymo, you have no idea why it’s braking unexpectedly, you have to respond by hitting your brakes, causing a chain reaction.

Any automated system that expects a human to be able to second guess the computer in time to override it is not being designed to work in the real world. Humans are slower, they have less information, and they have no idea how or why the system makes the decisions it does.

It is an autopilot, same as in airplanes. In airplanes, the lower level autopilots pretty much just keep the plane level and do nothing to avoid other aircraft. The better ones can terrain-follow.

Criticize them for tying it to “self-driving” in their marketing, sure, that is a valid point. But “autopilot” is absolutely an appropriate term here.

Auto pilot from sci-fi movies is a much more familiar concept to people than regular current airplane auto-pilot. Sci-fi auto pilot is full auto. Besides, I’m pretty sure that some planes can take off and land in auto pilot mode, so it’s not that far-fetched.

Besides, as I and many others have said, semi-automatic control is pretty much complete crap. Changing focus a couple of times a second (as you pretty much should be doing, since a lot can happen in a second) is not possible.

Car auto pilot does not even work like it does in airplanes. Airplanes have warnings about different situations, but all this “auto pilot” does is complain about hands not being on the wheel. It’s not the same. Obviously the reason is that airplanes have a lot fewer objects flying around in the air than cars do on the road, and planes can see a lot further, which is why they and pilots have a lot more time to react. Car auto pilot does not have that time. It needs to work completely autonomously. Waking up drivers from their fantasies takes seconds.

Not to mention that aircraft systems will *usually* be built to a standard, whereas vehicle/car systems are built to a price (and a cheap one at that).

Everything in a commercial aircraft is double or even triple redundant and there’s usually an air gap firewall between entertainment systems and the fly-by-wire system.

Plus on top of that it is subject to regular inspections after so many hours.

The only thing you’re probably going to be able to make happen in cars is the air gap firewall.

It’s not the same as airplanes, because in airplanes, accidents don’t happen as fast. You don’t fly along at 30,000 and hit a concrete barrier.

Maybe Tesla took their marketing from Subway. “Subway Response To ‘Footlong’ Controversy: Name ‘Not Intended To Be A Measurement Of Length’”.

The longer term “vision” is a mesh communication network where automobiles communicate with other vehicles. 5G proposes this as a use case, but whether this is an autonomous mesh, a 5G carrier network, or both is another discussion. The point is that any ambiguity in the road is logged and advertised to others that follow for fair warning, along with the successful solution. Similarly, once the anomaly clears, it is removed from that road.

The end result would be an autonomous WAZE for self-driving vehicles. A necessary requirement (or due diligence) is that responsible road crews and engineers can then consider ways to fix the road markings or hazard that is being identified by the vehicle algorithms.
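As a toy illustration of that log-advertise-clear cycle, here is a minimal in-memory sketch. The class `HazardBoard`, its method names and the segment identifiers are all hypothetical. A real vehicle mesh would also need authentication, expiry and conflict handling, none of which is shown:

```python
class HazardBoard:
    """Shared record of road anomalies, keyed by road segment id."""

    def __init__(self):
        self._hazards = {}

    def report(self, segment, description, workaround=None):
        # A vehicle logs an ambiguity, plus what worked if it got through.
        self._hazards[segment] = {
            "description": description,
            "workaround": workaround,
        }

    def clear(self, segment):
        # Once the anomaly is gone (e.g. repainted), drop it from the road.
        self._hazards.pop(segment, None)

    def warnings_for(self, route):
        # Fair warning for a following vehicle: hazards on its route, in order.
        return [(seg, self._hazards[seg]) for seg in route if seg in self._hazards]
```

A following car would call `warnings_for` with its planned list of segments before reaching them, and a road crew could read the same board to find markings worth fixing.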

Implications… Does that mean we can automatically avoid every police speed trap? On the flip side, police can see speeding vehicles and issue tickets based on your driving?

Standards… process… liability… privacy… It’s a new world!

In a system like that there necessarily must be zero human drivers and zero human interventions needed for automatic systems. Any variance would mess up the grid. That’s outrageously unlikely. And speed traps and speed limits would be unnecessary in such a system if it actually did work.

Never mind privacy issues.

Great idea… What could possibly go wrong once hackers figure out how to spoof it?

It’s much easier to cause a self-driving car pileup by painting a STOP sign on a piece of cardboard and suddenly slinging it up by the road during high traffic. The humans will ignore it, the computers won’t, because they can’t tell the difference.

It would not just be your typical hacker you’d need to worry about; such a system would be an attractive target for attacks by terrorist groups, nation-state-backed groups or even agencies inside certain governments.

Another stupid thing is to demand the attention of the driver all the time when running on “autopilot,” but if the driver is behind the wheel with nothing to do, the probability of being distracted is much greater than if he were driving.

Those self-driving systems are only as good as their programs. Every complex program has bugs. So … not a good idea. And no amount of hype is going to change that.

Humans have flaws as well, but they are allowed to drive?

Humans kill one for every 20 million kilometres driven. Autonomous cars so far kill 20 for every 12 million kilometres! Are we allowing our roads to be a test bed for pimpled millennial programmers who apparently are 20 times worse at driving our cars, because they spent all of their adolescence playing Mario Kart?

Except there’s a lot more than programmers involved in autonomous vehicle design.

What is your source for the figure of 20 per 12 million km? That is one death per 600,000 km, which is not realistic by any means.

I can’t remember where. Slashdot, presumably, sometime last year, and maybe that investigation counted total accidents, not only fatal ones.

Hey now, there have been studies that showed playing Mario Kart (and other racing games) makes for better drivers. https://www.psychologicalscience.org/news/releases/playing-action-video-games-boosts-visual-motor-skill-underlying-driving.html

Tore Lund said it well. I will add that humans go through driver training, and are removed from the road if drunk or otherwise incapacitated.

Just consider this: 10 years ago self-driving cars were just a dream. Our tech did not improve so much in the last ten years. It is just hype, similar to 80s AI, cloud, etc. Someone will earn a lot of money before the balloon is popped. When the smoke clears, they will be in the Bahamas.

I have trouble driving on dark and rainy roads. I would not trust even a top programmer to churn out a system that can flawlessly drive in such conditions. And even if he is a top programmer, sensors fail.

Designers of such systems are aware of that. Which is why they now often label them as semi-autonomous. Many more will be killed until people learn the distinction … average Joe has no idea.

+1

Most of the AI techniques making headlines recently have actually been around since the 1970s.

The only thing that has changed is processing power and storage have become cheaper and there’s a lot of entities with deep pockets to fund projects with it.

Why can’t the “false positive” situations cause a warning light or noise to happen? If the car thinks there might be potential danger, wouldn’t it be better to give some indication rather than just do nothing?

Too many “false positives” will lull a driver/pilot into ignoring them. The FAA discovered that with the original LLWAS (Low Level Windshear Alert System), which had (IIRC) a 60% false-positive rate. Pilots soon learned to ignore the warnings from the tower, resulting in some crashes.

“Which car company did you say you work for?”

“A *major* one.”

Yay! Fight Club!

I’m missing one thing here: are humans any better? Do humans have more or fewer accidents per (thousand) kilometers? Or is that somehow irrelevant?

A previous article dealt with this. The answer is, we don’t know. Not enough data.

And since that article, there have been a bunch more crashes and a pair more fatalities. It doesn’t look good for the self-drivers, Tesla in particular.

That said, Google/Alphabet/Waymo and GM/Cruise have been doing very well. I don’t believe Waymo’s reported numbers as far as I can throw them, but Cruise seems to be playing the reporting game straight. Their cars get hit a lot, but very seldom do the hitting, even though they’re driving in a much more challenging environment (downtown SF). I suspect they’re taking the false positives seriously enough — maybe too much?

If you count total crashes, Waymo and Cruise are doing poorly. If you count at-fault accidents, they’re doing pretty darn well. If you count fatalities, you get a divide-by-zero error. So, that’s good, right?

The number of accidents is low, but the number of disengagements of the autopilot by the operator is still relatively high.

Disengagements are a tricky metric, and that’s what I mean by not trusting Google/Waymo’s numbers. There are many, many disengagements that have nothing to do with the car malfunctioning: at the end of the drive, for instance, or if they’re going to fill up at a gas station. These are not required to be reported. Waymo seems to interpret this as “all non-safety disengagements” don’t need reporting, where they’re the ones defining “safety”. The other firms seem to be reporting them all, maybe with a few technical exceptions.

Why? Waymo seems to see the disengagement number (as you do!) as a measure of performance. Insofar as it’s a publicly viewable measure (in Cali), they want it to look like they’re making steady progress. Cruise, on the other hand, has said “we don’t care, the more mistakes we make, the faster we learn”, which I think is a healthier attitude at this phase of the game.

But yeah. Don’t use disengagements as a hard-and-fast metric just yet, except to point out that none of them are anywhere near human performance if that _were_ the relevant metric. Your other point — maybe Waymo and Cruise just have more attentive human drivers — is plausible.

When self-driving cars can dump control back to the driver and absolve themselves of any responsibility, it’s irrelevant.

Think of how many trouble-free miles humans could rack up if they were able to throw up their hands, say “Jesus take the wheel!” and not take any responsibility for what happens next.

Nice one!

+++

“Jesus take the wheel” really is the reaction of some people in emergency circumstances. People freeze up, and also under or over react constantly.

They are still found at fault though. Unlike the software engineer / manufacturer of a self driving car.

Yeah, that’s fair enough, I guess.

I’d argue that we’ve not had a true legal test of finding fault after an accident caused by a self-driving car. I’m at least happy that the software engineers and manufacturers of the self-driving cars are trying to learn from the mistakes of their autonomous minions. I see a lot of willfully bad driving practices among human drivers, who show little capacity for learning from their mistakes.

Humans are 99.99% better at stopping when a stationary object blocks their way.

Let’s say you have a choice between

A) average, status quo, does great job 95% of the time.

B) does great job 97% of the time, but will KILL pedestrians in 100% of cases.

As I’ve said every time one of these articles is posted on HAD: I will NEVER ride in an automobile that is not under the control of a human being. Computers simply do not process visual cues in a way that allows them to control a car safely and react appropriately in all situations. Roads are designed and built for human drivers and how they act and react. I really hope it stays that way.

I understand that humans are imperfect, but all of these accidents would have been completely preventable if the cars were being driven by alert human beings instead of computers.

Unless they were drunk :-)

Actually I have the same thought – I don’t think you can have an automated driving system that is completely reactive. All drivers are both pro-active and anticipatory in their driving. They anticipate that when a ball bounces across the street there’s probably a child behind it, and they anticipate the way a given animal is going to react to a given situation (a deer will react differently than a bicyclist)

So until anticipation (AGI) is built into these systems they will always fail to do the right thing for a set of unusual circumstances.

How about a deer on a bicycle?

Even a human would be so thrown by that they would plow right into it.

Pheasants will wait by the side of a road then do a kamikaze leap in front of a vehicle. Seems like they’re trying to get a boost from the bow wave in the air. Often they misjudge and take out your grille or a headlamp.

Mostly they’re just stupid. A bird watches the scenery with eyes on either side of the head, so it doesn’t have good binocular vision to judge distance, so it sees the far-away car as stationary (but inexplicably growing). When the car is close enough that they can understand it is moving, they get startled and try to swoop out of the way.

So far they’ve evolved to avoid actually standing in the road, but they haven’t yet figured out they can just turn around and walk away.

I think an important angle that was not covered by the article (relevant but out of scope) is the human reaction to false positives and negatives. I recently rear-ended a car on the highway. I am a paranoid driver (which has saved me many times on the roads of California); I was driving about 150ft behind the car I struck, 5mph under the speed limit. I was fully focused, with hands at 10 and 2 o’clock and eyes on the traffic. The car ahead applied its brakes, and I naturally responded by lightly pressing on my own. It took me over a quarter of a second to realize that the car was attempting a full stop (perspective skew slowed this realization, as the car was braking at the bottom of a hill that I was moving down). During this quarter second, I visually understood that the car was decelerating quickly, but my mind needed to recheck this (correct) determination with another few frames of vision before I fully believed that I needed to brake fully. 65mph is 95.3 feet per second, so before I even thought that I needed to brake fully, I’d travelled over 20 feet. Before I had my brakes fully engaged, I’d travelled at least 45 feet. I impacted at nearly 30mph (best guess based on my last look at the speedometer before impact). Of course that hill and the condition of the brakes and tires all play a big role.
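The speed conversion and the distance covered during that quarter second can be checked with a minimal Python sketch. The function name is made up for illustration, and the calculation assumes constant speed, so it ignores the light braking already applied.

```python
# Feet per mile divided by seconds per hour converts mph to ft/s.
MPH_TO_FPS = 5280 / 3600

def distance_travelled_ft(speed_mph, seconds):
    """Distance covered at constant speed (no braking assumed)."""
    return speed_mph * MPH_TO_FPS * seconds

speed_fps = 65 * MPH_TO_FPS
quarter_second_ft = distance_travelled_ft(65, 0.25)

print(f"65 mph = {speed_fps:.1f} ft/s")
print(f"Distance in 0.25 s: {quarter_second_ft:.1f} ft")
```

At constant speed the quarter second works out to a little under 24 feet, consistent with the “over 20 feet” above.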

My reaction time was better than most, but still incredibly poor due to needing to recheck the positive that my eyes were telling me. A self driving system would’ve mathematically determined the deceleration of the car ahead, and applied the brakes fully, before my untrusting mind believed there was any reason to.

Looking statistically, in 2015 there were 3.06 trillion miles travelled in the US (afdc.energy.gov), and 6.3 million reported accidents (statista.com). This yields about 1 accident per 500,000 miles. In 2016 Tesla reported 1.3 billion miles of autopilot travel. Tesla cars in autopilot mode would have had to crash around 3,600 times that year to be on par with the US national average. I can’t find the number of crashes reported for them, but based on how US news media homes in on them, it can’t be anywhere near that.
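As a rough check of that arithmetic, here is a minimal Python sketch using only the figures quoted in the comment. The variable names are made up for illustration, and the inputs are the commenter’s numbers, not independently verified.

```python
# Figures as quoted in the comment above (not independently verified).
us_miles_2015 = 3.06e12      # miles travelled in the US
us_accidents_2015 = 6.3e6    # reported accidents
autopilot_miles = 1.3e9      # claimed Autopilot miles

# Average distance between reported accidents for all US driving.
miles_per_accident = us_miles_2015 / us_accidents_2015

# Crashes Autopilot would need to match that average rate.
expected_crashes = autopilot_miles / miles_per_accident

print(f"Miles per accident: {miles_per_accident:,.0f}")
print(f"Crashes needed to match the average: {expected_crashes:,.0f}")
```

With these inputs the break-even count comes out nearer 2,700 than 3,600, so the 3,600 figure may rest on slightly different inputs. The order of magnitude is the same either way.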

For a very new technology, I think that the number of false positives and false negatives that they are experiencing is well below the human average, and the reaction time and data completeness that lidar / radar equipped vehicles have is unparalleled.

Was there a light or stop sign the car in front stopped for, or did the driver do a version of the swoop and squat, intending to get rear ended?

You are not paranoid enough: you were under the recommended 2-second following distance behind the car you rear-ended. Case in point: “I was driving about 150ft behind the car I struck, … ” and “65mph is 95.3 feet per second, …”
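The following gap implied by those two quoted numbers can be worked out with a short Python sketch. The helper names are hypothetical, and the 2-second rule is taken from the comment above rather than from any official source.

```python
# Feet per mile divided by seconds per hour converts mph to ft/s.
MPH_TO_FPS = 5280 / 3600

def following_gap_seconds(gap_ft, speed_mph):
    """Time gap to the car ahead at a steady speed."""
    return gap_ft / (speed_mph * MPH_TO_FPS)

def gap_needed_ft(seconds, speed_mph):
    """Distance needed to keep a given time gap at a steady speed."""
    return seconds * speed_mph * MPH_TO_FPS

actual_gap_s = following_gap_seconds(150, 65)
two_second_gap_ft = gap_needed_ft(2, 65)

print(f"150 ft at 65 mph is a {actual_gap_s:.2f} s gap")
print(f"A 2 s gap at 65 mph needs {two_second_gap_ft:.0f} ft")
```

That puts the gap at roughly 1.6 seconds, about 40 feet short of a 2-second gap at that speed.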

>”A self driving system would’ve mathematically determined the deceleration of the car ahead”

Yes, but not always. For example, going downhill the car’s radar sees the ground ahead at the bottom of the hill as an approaching obstacle (it cannot tell a hill from a house), so it ignores the radar, and then it checks the camera, which also sees the approaching ground and a stopped vehicle, and then it has to decide whether that’s something to stop for.

Since it has no situational awareness, it may or may not judge that a car has stopped in front of it until it’s too late.

>”In order to have the same accident rate, Tesla cars in autopilot mode would have had to have crashed around 3,600 times that year to be on par with the US national average.”

That’s an irrelevant metric, as Tesla cars don’t crash as much as the US average anyhow. They’re nice expensive cars owned by rich people, driven in places where there’s little inclement weather, and they’re all new in good nick. Furthermore, the autopilot mostly handles the easy bits on long straight highways where nothing happens anyways, and then disengages when it comes to the harder parts where the fender benders tend to happen.

Sensor Fusion.

Isn’t that a razor sold by Gillette?

B^)

95 fps, 150 ft behind: an ass ident waiting to happen. CA DMV has said 4 seconds, then backed down to 3, last I read, for 65 mph. Given how much awareness most drivers give in routine 65+ mph moving gridlock, I have to agree. As close as you were, you knew you had two possibilities: the one you acted upon, or a full emergency stop, given that our under-test reaction time of 1/3 to 1/2 sec is at best 3/4 sec on the road. You knew that, and chose the false positive by hoping your situation was going to be of the more common type rather than the entirely possible worst-case type. You would equal these sometimes deadly algorithms, or the guys who shut the safeties off.

I want these autopilot et al. cars to indicate that they are such, just as Student Driver signs do.

“Tesla reported 1.6 billion miles with Autopilot in 2016” Do you have a source for that?

When I looked, the last number I saw was something like 130 million (with “m”) in August of 2016. (https://hackaday.com/2016/12/05/self-driving-cars-are-not-yet-safe/) Tesla was not coming out with statistics in response to the second US fatality this year. (https://hackaday.com/2018/04/02/self-driven-uber-and-tesla/) I would presume that another 100-200 million miles passed? Divide 300 million by three fatalities (counting the one in China) and you get something just a little worse than the US average, which also counts struck pedestrians and multiple people in cars.
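The back-of-the-envelope division above can be written out as a small Python sketch. The 300 million mile total is the presumed figure from the comment, not a reported statistic, and the variable names are made up for illustration.

```python
# Presumed totals from the comment above (a guess, not reported data).
autopilot_miles = 300e6
fatalities = 3  # counting the one in China

miles_per_fatality = autopilot_miles / fatalities
fatalities_per_100m_miles = fatalities * 1e8 / autopilot_miles

print(f"Miles per fatality: {miles_per_fatality:,.0f}")
print(f"Fatalities per 100 million miles: {fatalities_per_100m_miles:.2f}")
```

Because the mileage is a guess, the result is only as good as that guess: doubling or halving the assumed miles halves or doubles the rate.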

But that’s fatalities, and I only cover those b/c I can get the data. We have no idea how many Teslas are involved in simple crashes with no loss of life.

If the numbers I found were off by a factor of a hundred, Autopilot is the best thing ever.

I’ve noticed that too – If you judge a car to be slowing at a reasonable rate at first it takes some time to correct when you realize that it’s stopping quickly. I’ve been practicing the art of swerving every time this happens so I get the reaction trained and hopefully when I don’t have enough time to stop the swerve will be automatic.

We should go back to the Knight Rider system from 20 years ago. It didn’t have any of these problems unless it was gunning down a bad guy on purpose.

Agreed

“Turbo Boost” and blow right through an obstacle.

“Our competition is working on self driving cars…