It’s generally understood that most vehicles that humans interact with on a daily basis are used with some kind of hand-controlled interface. However, this build from [Avisha Kumar] and [Leul Tesfaye] showcases a rather different take. A single motion input provides both steering and forward/reverse throttle control.

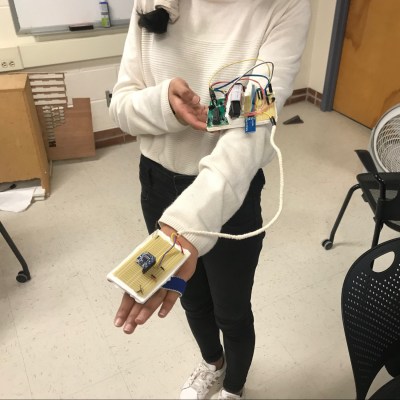

The project consists of a small car, driven by electric motors at the rear, with a servo-controlled caster at the front for steering. Control is provided by a PIC32 microcontroller receiving signals via Bluetooth. The car is commanded with a hand controller, quite literally: it consists of an accelerometer measuring the pitch and roll of the user’s hand. Tilting the hand left and right affects steering, while rotating it fore and aft controls the throttle. Video after the break.
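For a rough idea of how a tilt reading becomes a drive command, here is a minimal Python sketch. It is not the authors' PIC32 firmware; the axis convention, the 45 degree full-scale tilt, and the function names `tilt_angles`, `to_command`, and `hand_to_drive` are all assumptions made for illustration. It assumes the accelerometer reports gravity in units of g while the hand is held roughly still.

```python
import math

def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees from a static accelerometer reading in g.

    Assumed axis convention: z points up through the back of a flat hand,
    y points to the side, x points toward the fingertips.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll

def to_command(angle_deg, max_angle=45.0):
    """Scale an angle to a command in [-1, 1], clamping beyond +/- max_angle."""
    return max(-1.0, min(1.0, angle_deg / max_angle))

def hand_to_drive(ax, ay, az):
    """Map a hand tilt to (steering, throttle), each in [-1, 1]."""
    pitch, roll = tilt_angles(ax, ay, az)
    return to_command(roll), to_command(pitch)
```

With these assumptions a flat hand gives zero steering and zero throttle, and rolling the hand fully onto its side saturates the steering command at full lock.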

The project was built for a course at Cornell University, and thus is particularly well documented. It provides a nice example of reading sensor inputs and transmitting/receiving data. The actual microcontroller used is less important than the basic “Hello World” demonstration of robotics concepts. Keep this one in your back pocket for the next time you want to take a new chip for a spin!

We’ve seen similar work before, with a handmade controller using just potentiometers and weights.

Pretty handy, eh?

Oh, c’mon. We did that already in 2015 and demonstrated it to the public in 2016:

https://youtu.be/YV1iDJnHej0

Not only did we control a feature-packed 4WD space rover on dry land (Mars analogue simulation) *and* while diving at approx. 20 m of depth (Moon analogue sim) using gestures, but we were also able to turn control on and off using so-called “attention” gestures, and to select different modes of operation.

All of this was for users in astronaut suits, while effectively offering them true hands-free commanding: they were able to hold other equipment in both hands while commanding and, by using the “attention” gesture to pause or resume the robot interpreting their movements, could even perform different tasks with their hands while controlling the robot.

Nevertheless, nice work. But I’m still a bit disappointed they didn’t find our project in their SotA research.

Nice, and I’m sure it has some uses, but I’m not sure of the ergonomics of it generally.

Imagine using one to drive your car, and doing an emergency stop every time you scratch your nose!

Did you read the write-up? I did. This was a course project. The intention was to prove that they had learned, and understood the course. It looks like they not only succeeded, but it cost less than $100. Whether or not they knew of your project is completely irrelevant, so you just come across as a “party pooper”!

Experiences I gathered from the project were that reading orientations is neither too hard nor too expensive: you can have the sensors for less than 10 EUR each, plus your favorite high-level computing solution (Arduino, STM, Pi), which should be at EUR 40 max. Combining them, e.g., to reconstruct arm and shoulder poses, is plain old math.

It mostly depends on choosing the right set of gestures (esp. regarding Dan’s objections, having some kind of “attention” trigger is recommendable) and defining reasonable operation areas (deadband, tolerance, drift consideration, etc.).
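To make the deadband and “attention” trigger ideas concrete, here is a small Python sketch. It is only an illustration under assumptions: the 0.15 deadband width and the names `apply_deadband` and `GestureGate` are hypothetical and come from neither project. Commands are assumed to be already normalized to the range -1 to 1.

```python
def apply_deadband(value, deadband=0.15):
    """Zero out small inputs and rescale the rest so output still spans [-1, 1]."""
    if abs(value) <= deadband:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadband) / (1.0 - deadband)

class GestureGate:
    """Pass commands through only while active; an attention gesture toggles it."""

    def __init__(self):
        self.active = False  # start paused so stray movements are ignored

    def update(self, attention, steering, throttle):
        """Return gated (steering, throttle); toggle state when attention is True."""
        if attention:
            self.active = not self.active
        if not self.active:
            return 0.0, 0.0
        return apply_deadband(steering), apply_deadband(throttle)
```

In this sketch, scratching your nose while the gate is paused sends nothing to the vehicle, and small hand tremors inside the deadband are ignored even while the gate is active.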

Therefore I mentioned our work as a reference. Even “just” a gesture control used in a course should refer to current SotA, not to what the gaming industry tried in the 90’s and miserably failed with, for a reason.