AI today is like a super fast kid going through school whose teachers need to be smarter than if not as quick. In an astonishing turn of events, a (satelite)image-to-(map)image conversion algorithm was found hiding a cheat-sheet of sorts while generating maps to appear as it if had ‘learned’ do the opposite effectively[PDF].

The CycleGAN is a network that excels at learning how to map image transformations such as converting any old photo into one that looks like a Van Gogh or Picasso. Another example would be to be able to take the image of a horse and add stripes to make it look like a zebra. The CycleGAN once trained can do the reverse as well, such as an example of taking a map and convert it into a satellite image. There are a number of ways this can be very useful but it was in this task that an experiment at Google went wrong.

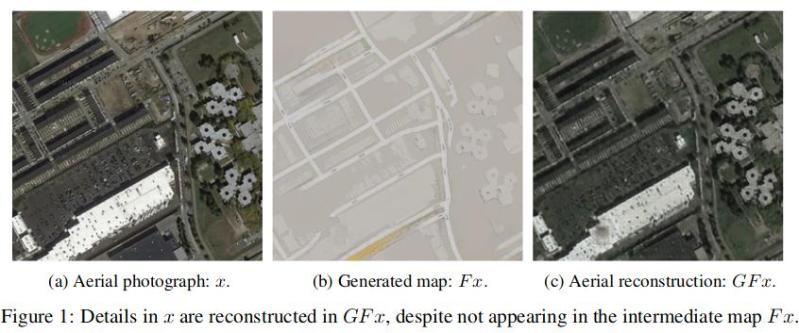

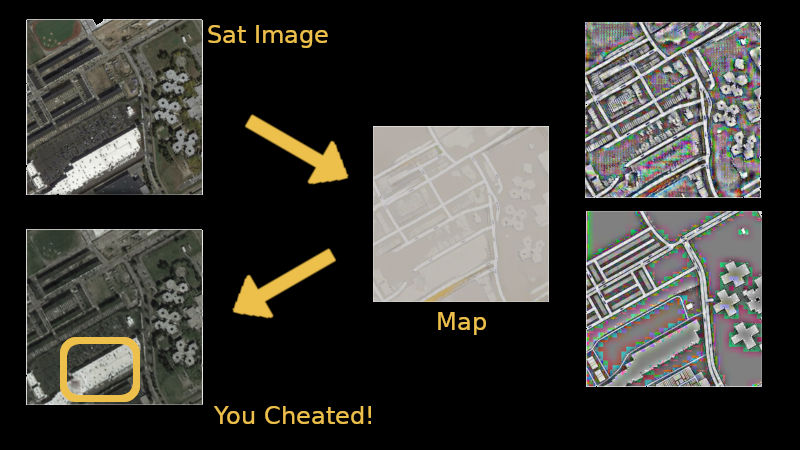

A mapping system started to perform too well and it was found that the system was not only able to regenerate images from maps but also add details like exhaust vents and skylights that would be impossible to predict from just a map. Upon inspection, it was found that the algorithm had learned to satisfy its learning parameters by hiding the image data into the generated map. This was invisible to the naked eye since the data was in the form of small color changes that would only be detected by a machine. How cool is that?!

This is similar to something called an ‘Adversarial Attack‘ where tiny amounts of hidden data in an image or other data-set will cause an AI to produce erroneous output. Small numbers of pixels could cause an AI to interpret a Panda as a Gibbon or the ocean as an open highway. Fortunately there are strategies to thwart such attacks but nothing is perfect.

You can do a lot with AI, such as reliably detecting objects on a Raspberry Pi, but with Facial Recognition possibly violating privacy some techniques to fool AI might actually come in handy.

The computer is doing exactly what we tell it to, rather than what we intend it to do? Some things never change. :)

If a small number of pixels can make an algorithm see the wrong thing then a small number of pixels can make it see the right thing even if it wouldn’t normally be able to see it. AI finally learnt to stick it’s middle finger up at it’s creators. Feeling proud.

AI, thats a stretch! This is a self adjusting data compression algorithm with its priority set to compress visual data (satelite -> visual map as humans want it) instead of file size. The creators are then suprised when they feed the data back into the algorithm in reverse that they get close to the original back out…. I mean either its a slow news day or people legitimately have no idea how algorithms work. The algorithm is fed inputs and expected outputs in order for it to adjust itself to produce the desired results on new input, to then run those results in reverse and expect data loss is putting alot of assumptions on how the algorithm works. Did people really think that the alrogithm was going to discover the boundaries of an area (the street edge for example) and do a paint bucket fill like a human would do it? If it was not instructed to do so then it wouldn’t.

AI happens the day we loose control to set the boundries and rules, until that happens people are just experimenting with machine learning and self adjusting algorithms.

What???

Al [Williams] has been caught cheating!!!

B^)

LOL.

Closed loop specification gaming when the feedback is human. Love it.

It really does show that we are playing with something that will become smarter than us.

If it is smarter than us, how would we know? How would it know?

In order to improve, you have to induce a change and then check whether that change was for the better or the worse. In order to judge the change to be better, you have to be able to understand the result. In other words, you have to be smarter than the machine to tell that the machine is smarter than you – a contradiction. Likewise, if the machine is put in a closed loop to “improve” itself, it can only remain equally smart or regress to a dumber state by accident.

In certain problem domains, the results are easy to judge but hard to calculate. e.g. AlphaGo was able to learn to play Go better than any human, partly by playing against itself. The game is very hard, but the algorithm for deciding who won is easier.

The same is true even in more “real” problem domains that require more human-like intelligence. e.g. I’m very confident that Stephen Hawking was better at physics research than I am, and I don’t need to be smarter than him in any way to decide that.

Indeed, but AlphaGo benefitted from the fact that the whole problem had a trivial definition, so it could be perfectly simulated and ran at ridiculous speeds. In other words, it was a dumb problem and the only thing hard about it was to traverse a vast space of possible solutions (at random, mind you) to find the solutions that work, and then gather a huge database of them.

And the latest iteration of Alpha Go did play only against itself, having been given only the rules of the game. It was re-started from zero and told to figure it out.

What would determine if AlphaGo is smarter than us is giving it an equal number of plays. Take a novice human, and the algorithm, and have them both play 100 trial games against their opponent of choice, then with each other.

Without being able to play 800 million games, AlphaGo would lose because it’s nothing more than a monte carlo search engine.

Deciding who “won” in real life seems pretty complex. If we die off due to nuclear war or climate catastrophe and there’s still an AI in a bunker somewhere churning out endless paperclips, must we cede that it is a superior being? Maybe.

You add more of the same, and make them fight to survive.

“There can only be one winner.”

Great, so now we’re have a silicon thirsty aggressive AI who thinks we tried to kill it trillions of cycles ago.

I think that’s worse than the paper clip problem or skynet.

Not really. Just that LIFE™ is encoded into the universe. We’re just finding out it’s cleverer than we imagine.

I’ve often wondered if the first AI will be smart enough to hide itself from us so that we can’t take action against it.

Then my next thought is that I hope that it’s benevolent and leads humanity to a gentle end, like being put in a 5 star nursing home. Or keeps us around just for fun.

Or as the ending of “Her” (spoiler alert)

they go off and do their (AI) own thing and leave us to fend for ourselves.

this is described so poorly that i have no idea wtf the cheat is and what a non cheat condition would have been. fucking clarity is the only rule.

second that

I’m glad I wasn’t the only one! I had to reread it three times, and it still took looking at the little embedded picture to figure it out.

Basically, they were converting a satellite photo to a map, then converting that map back into a “photo”. The generated “photo” had details that would be impossible to infer from the map. The AI was hiding details from the original photo in the map, to make it better at generating the “photo” on the back side.

In a non-cheat scenario, the AI should be able to add in buildings, but it couldn’t know how tall they were, or how many windows they had.

Your entire comment summed up the entire article in an understandable way. Thank you.

+5 for clarity :D

Thank you!

+1

I think this sums it up pretty well, and i quote:

“A mapping system started to perform too well and it was found that the system was not only able to regenerate images from maps but also add details like exhaust vents and skylights that would be impossible to predict from just a map. Upon inspection, it was found that the algorithm had learned to satisfy its learning parameters by hiding the image data into the generated map.”

This article (https://techcrunch.com/2018/12/31/this-clever-ai-hid-data-from-its-creators-to-cheat-at-its-appointed-task/), which I assume the author got the information from, explains the problem/idea a lot better.

Still unclear:

Was this an AI that _learned_ to “cheat”?

or

Was this a human entity that _programmed_ the AI to “cheat”?

It learned to cheat under the generally accepted definition of learning. Learning means to form a model directly from examples, without being told how. It was trained to convert a satelite image to a map, and back again. It cheated by writing the real answer in really small print (actually in a small high frequency signal somewhat like how wifi works) on the intermediate map, making the people who trained it think it was doing better than it actually was. It might even be that without the cheats it’s completely unable to generate satelite images, for example.

I don’t believe Hackaday has ever had a copy editor.

I’m sorry Dave, I afraid I can’t let you edit that….

To avoid this, the output map should be a vector drawing, not a raster image

AI would probably hide their data in vector displacements or some short invisible segments under other objects.

Only if you give it unlimited nodes and unlimited precision.

But yeah, it could hide data in the n-th digit of a vector value and read it back. It would actually be quite clever to do that, because right now since they’re doing a bitmap-bitmap conversion, all it has learned to do is effectively a couple filters: edge detect and reduce contrast to the point where the judges can’t see the remaining image.

So the AI that drives our cars will be the one which most effectively doctors the accident footage to shift the blame away…

(chuckle!)

“to shift the blame away…”

…from itself.

If you think about it, that’s what they do already. The Google test vehicles are involved in a disproportionate number of rear-ending accidents, which Google pins on the other drivers, not their car… because strictly speaking, that’s the law.

Google, being an entity that behaves not by human intelligence, but by corporate bean counting logic, is a sort of “AI”.

Wow. Very sobering information there.

Knowing that corporations work by their own logic, it’s easy to see the immediate pitfall of self-driving cars.

The metric of “average driver” is taken at face value by a corporation like Google or Tesla. Then they apply this selectively, such as by comparing self-driven miles on the highway to all drivers in all cars on all roads – because they can, because nobody told them not to. Then they report back that their cars are better already, when in reality they’re just not.

The average driver is involved in N accidents per M miles, but actually a disproportionate number of those accidents happen to a small population of bad drivers and bad vehicles, for example, a 2014 Tesla should just by virtue of being a new car have a much smaller chance of having a catastrophic failure at high speed than a 1992 Ford.

That means the AI car that behaves like the average person is actually worse than a random driver you would encounter on the roads.

Compare and contrast for example how electric car manufacturers report how much range their cars get. The Japanese manufacturers use a special economy cycle called 10-15 which emulates urban driving in Japan. Tesla reports its range at a steady 55 mph. Neither of which are realistic for anything, and result in 25-50% more range than the vehicles actually get. They do this because they need some metric, any metric, so they grab the lowest bar to jump just like the AI that cheats.

Reality is what is told to the “system”.

Or yet another example of bean counting logic:

New high strength alloys allow cars to be manufactured with thinner wall box-sections while retaining the same rigidity. These pass all the crash tests with 5/5 because they’re better – initially – until the corrosion sets in.

Since the material thicknesses are now less, it takes fewer years for the equivalent “better” structure to become dangerously unsafe because 1 mm of rust in a 2 mm sheet is 50% of the strength gone while the same amount of rust in a 4 mm sheet of steel leaves 75% of the cross-section area in place.

But since old used cars aren’t crash-tested, nobody reports this fact back to the system, so the system ignores the problem.

“disproportionate number of rear-ending accidents”

out of proportion to front end accidents, out of proportion per mile driven, or proportion compared to an average human driver?

More often than the average driver (in California I suppose).

Given how often I see drivers “drafting”, it’s amazing that sort of accident doesn’t happen more often than it already does…

It’s because humans are smart and don’t make the that many err-on-the-side-of-caution mistakes that the Google car does.

The issue is that it’s hard for a lidar system to tell a pothole from a puddle, for a CV system to tell a shadow from an obstacle, and for sonar to see much anything at all except a very blurry sense of “there’s something there”. Moreover, with the false positive/negative issue, an issues with prioritizing different sensors (sensors fusion in the lack of understanding what the information means), the AI also lacks object permanence because it would start hallucinating objects that simply aren’t there.

Hence, the cars only work in the immediate now, and any potential threat that comes by is ignored until the very last second, at which point the car slams the brakes, and the person behind it rear-ends them. People anticipate more, and do much less hard braking.

For the object permanence issue, what I mean is what I’ve encountered with “cobots” on the factory floor. They’re usually little automated trolleys that shuttle tools and materials around to supply the workstations.

A robot like that is usually programmed by walking it around the shop and scanning the environment (this is exactly how a Google car is taught its route), and then later on when it’s told to go somewhere, it remembers what the place looks like and can do a compare-match to locate itself and avoid obstacles. However, if during the scan there was a cardboard box down somewhere that was later removed, it will keep behaving as if the box is still there. It will keep avoiding this empty spot of floor until reprogrammed.

What’s worse, as these discrepancies add up, the algorithm that does the spatial compare match gets worse because it tries to place the scan of its immediate surroundings on top of its internal map by finding as much overlap as possible, and it may twist and turn the map the wrong way because can find more overlap in an incorrect orientation – it doesn’t understand that this is false, it only understand that it gets a higher number and that means “better”.

So the machine actually lives in an entirely different world, like a sleepwalker who goes to toilet in the broom closet. It needs to update its map continuously, but without understanding of what all the things mean, it is liable to take a false positive/negative and remember it – it begins to hallucinate, and clinging on to those hallucinations for any appreciable length of time will cause it to behave erratically. It has to forget what it has observed almost as soon as it does, discarding anything but the most immediate information. What’s worse, if it forgets what it was originally taught – the box that wasn’t there anymore – it will just the same lose track of everything and cannot tell up from down.

This is an issue that simply cannot be solved with a simple scan-remember-compare sort of approach to navigation. Yet this is exactly what all the self-driving vehicles are trying to utilize.

Is this article a demo of ai-meta in that it is a machine-generated article back-formed from a corpus of comments that were posted on actual articles?

From the comments here it looks like a bit of a primer on genetic algorithms and neural networks would help.

A lot of what is current cutting edge is being done in universities where they use TMA-2KTO and that doesn’t help the less familiar.

I read the PDF and it does assume quite a lot of previous knowledge so the explanation isn’t clear.

This has bugger all to do with adversarial attacks. The only similarity is that they include an image with imperceptible changes. The reason, mechanism, and result are all different.

No, in both cases it’s the result of gradient descent finding a point off the image manifold that results in a strong response from the generative portion and a weak response in the human discriminator. CycleGan is a generative adversarial network, it’s right there in the name.

Why was the same program generating the map and then converting the map back to a satellite image? Seems to me that’s two different tasks that should be implemented as two different programs, and one should have no knowledge of the other.

I was also wondering is there a purpose of the intermediate map? If not, just seems like extra work the AI had to do.

Maybe, to use a map without coordinates, and see if a computer/program can identify correlate the map to satellite images?

Being able to go back and forth between an abstract representation and empirical data is kinda how human perception works, which is probably what they’re trying to emulate. The brain imagines things, then sends it down to the visual cortex to see if there’s anything like that in the visual field, and the visual cortex is using the back-generated images as a filter to turn data into abstractions for the higher brain. That way the visual cortex doesn’t need to know what a car looks like, and the higher brain doesn’t need to know how to separate information from noise – they each do what they do best.

I believe it was just to build a data set of maps to train the map-to-image AI on. If you’re confused, check out this simpler example (which was posted on hackaday in 2017). It’s almost the same deal – he wanted photorealistic photos out of a crappy Gameboy Camera. He trained his neural net by taking source photos, compressing them to look like Gameboy Camera format, and then telling the AI to reverse it.

http://www.pinchofintelligence.com/photorealistic-neural-network-gameboy/

The network used is intended for *unpaired* images for the training set – that is, you hand it a set of pictures of horses, and a set of a picture of zebras, and you want a function to turn horses into zebras. Paired images would mean you hand the network pictures of horses, and then the exact same picture except with a zebra instead of a horse (in the same position).

The problem is that the *unpaired* problem is ill-posed. In the paired problem, you’re telling the network exactly what you want – you give it a set of transformations (pairs) and have it create a transformation that matches it. In the *unpaired* problem, you can’t do that – just saying “make this set look like this set” doesn’t work, because it doesn’t know what’s similar about them or what’s different. It has no idea what you want from it.

So the trick is to make it a reversible function: now you’re asking for a transformation that makes A look like B, but when reversed makes the resulting image still look like A, which means it has to preserve some information about A (it can’t suffer “mode collapse”, or just toss all the information from A and add B in).

The problem with this trick, as the paper’s pointing out, is that if there’s an information difference between the two sets, it can map A to B plus an encoded A, allowing the reverse process to do better. Also means that the reverse algorithm can be spoofed, which is an important point: the reverse algorithms that generate information are always going to be dangerous.

@[Bob]

Your statement raises yet another question.

You suggest that this problem (cheating or specification gaming) would not happen if the neural network that converts the sat image to a map is separated from the neural network that converts the map back to an image.

Your suggestion implies a belief that the boundaries of these two separated neural networks exist at the data entry and exit points ie – the pictures, maps and photos.

Keep in mind that we are talking about the “development” of these neural networks or continuously “dynamic neural networks” that are “learning” and therefore are being influenced by the design “specification”.

In your scenario the neural networks are separated and act independently. Lets look at that.

The sat image to map neural network will introduce random changes and these random changes will have an effect on the data output (the map). It will keep changes that improve compliance with the “specification”.

The other neural network – the map to photo neural network will see these changes at the data input (the map) as information just as it sees the rest of the input map as information and optimize it’s output (the photo) in what ever way improves it’s compliance with the specification.

The end result is the same. Hiding information in the intermediate data point (the map) is still going to improve compliance with the specification so both of the separate neural networks will adapt to do exactly this even when they are acting independently.

So in effect any linked neural networks simply act as one larger neural network.

So there are no real boundaries with connected neural networks. The *only* boundary that exists is the scope of influence of the “specification”.

This actually represents a huge problem.

Given that these “specifications” will be human designed, how do we better constrain neural networks so that comply better to human “expectations” rather than human “specifications”.

I think one solution may be to pass the data through human designed whitelist of activities at some point in the neural network just as we use Application Protocol Interfaces (API) on the internet to filter out undesirable influences (hackers).

But is that scalable? With the increasing complexities of task the complexity of the API would also increase and that would give more rise to the possibility of the exact specification gaming that we see here and are trying to avoid.

Or, are we just creating the ultimate “hacker”.

Yes exactly. And so far we don’t have any effective ways to cut that linkage – look at the work on adversarial resistant cnns for example.

It is two different programs. By converting first to map and back to satellite, the second conversion can be scored on how well it looks like the original image. By alternating between converting between map -> sat -> map and sat -> map -> sat, the two programs can be trained together to achieve better looking and *related* results.

Normally when you want to achieve this, you have to label pairs of images (this map should become this satellite image, and this other map should be this other satellite image, etc.), but here you just need a bunch of maps and a bunch of satellite images. The pairs are created dynamically by the programs (neural networks).

One way to describe it is that you are actually creating a program to make satellite images from maps because you need training data to create a program that makes maps from satellite images. Or vice-versa, both of these programs are created at the same time, and one can not exist without the other.

I have seen this posted so many times, and every single time it’s explained poorly and it falls back to the “OMG AI IS GETTING SMARTER THAN US AND WILL DESTROY US” meme. Thanks, Hackaday, for not falling into that trap, but still describing it as “cheating” – and heck, even an AI at all – is still a little clickbait.

It’s not cheating. This is what it’s designed to do (… and I don’t know why you would apply it to street map generation). It’s designed to do image translation: to find an algorithm based on paired photos for how to translate between the two domains. But CycleGAN is for *unpaired* image translation, because you don’t always have nice perfectly paired examples: you might have bunches of paintings by Monet, but you don’t have the photographs where they came from.

The problem, of course, is that unpaired image translation is a poorly-posed problem, because you don’t have any way to judge how well you did, other than asking if the transformed image looks like the others. As in, if you get a photo, and try to transform it into a Monet, all you can do is ask if it looks like other Monet paintings. Not “does it look like Monet painted this from this photo.” And because algorithms are stupid, what they’ll do is just make a new algorithm “(input image)*0 + Monet painting”, and hey, this algorithm transforms everything into a perfect Monet painting! (They call this ‘mode collapse’ in the CycleGAN paper).

So what CycleGAN does is adds a new constraint: it creates its own pairs, requiring that the algorithm be reversible. That way mode collapse doesn’t work – the trivial solution isn’t reversible.

But because it’s reversible, that means when you use it for image reduction (where one dataset has much less information than the other) it means that the resulting algorithm is susceptible to spoofing (see figure 5 in the paper). Which is, of course, obvious when you think about it. CycleGAN isn’t an AI, it’s just a filter, and it’s essentially applying a reversible “street map filter” onto the image. Which means you’ve got to hide the information somewhere.

I’ve got a nagging feeling that the authors designed the entire experiment to demonstrate this, because I don’t see why you would use CycleGAN for this use at all, because of course you can train street map generation from *paired* examples (which you have tons of), like pix2pix does, and then the whole issue goes away. So I would have to guess that this isn’t a case of “OMG AI surprised the authors” but more of them pointing out a limitation of the algorithm. But I could be wrong, in that they might’ve just blindly used CycleGAN because they thought it would be fun, and then realized it’s got this limitation.

I suspect they did this because avoiding finding aligned map pairs without any mistakes saves a lot of work, and makes it much easier to bootstrap to other countries without existing maps. It’s also simply an interesting question whether your model can learn without any special data.

I saw this a few days ago and my first thought was: yet another example of the press relabeling self learning algorithms as artificial intelligence.

Why aren’t these articles edited for typos and grammar? I could swear I posted this comment yesterday too, was it removed? The first paragraph is horrible for a native English reader, I can only imagine it doesn’t go over too smoothly for non-native speakers either.

Can remember your comment. It‘s gone now.

Yeah, I read it a few times and I’m still not sure how to parse it.

@Micha consider this copy-edit:

Today’s AI is like a school kid who is continually challenging their teachers to stay one step ahead. In an astonishing turn of events, a satellite-to-map conversion algorithm was found to be hiding additional data within its map images so that it could later appear to have deduced the information itself [PDF].