Filesystems for computers are not the best bet for embedded systems. Even those who know this fragment of truth still fall into the trap and pay for it later on while surrounded by the rubble that once was a functioning project. Here’s how it happens.

The project starts small, with modest storage needs. It’s just a temperature logger and you want to store that data, so you stick on a little EEPROM. That works pretty well! But you need to store a little more data so the EEPROM gets paired with a small blob of NOR flash which is much larger but still pretty easy to work with. Device settings go to EEPROM, data logs go to NOR. That works for a time but then you remember that people on the Internet are all about the Internet of Things so it’s time to add WiFi. You start serving a few static pages with that surprisingly capable processor and bump into storage problems again so the NOR flash gets replaced with an SD card and now the logs go there too. Suddenly you’re dealing with multiple files and want access on a computer so a real filesystem is in order. FAT is easy, so the card grows a FAT filesystem. Everything is great, but you start to notice patches missing from the logs. Then the SD card gets totally corrupted. What’s going on? Let’s take a look at the problem, and how to reach embedded file nirvana.

How Filesystems Organize Files

You’ve had a surprise Learning Opportunity (AKA, you screwed something up). In the cold garden shed the power supply for your little device isn’t quite as reliable as you thought and it tends to suddenly shut down. It turns out FAT (and many other filesystems) aren’t especially tolerant of the kinds of faults you find in these environments. You know what is? littleFS, a BSD licensed open source filesystem by ARM, designed for small devices.

Let’s get pedantic for a moment; what is a filesystem anyway? It’s nothing more than the way data is organized on a storage medium. For a very simple system with simple storage needs, like our original EEPROM temperature logger, there might not really be a “system” per-se. EEPROMs are typically good at byte-resolution addressing so the most direct way to store temperature data might be as simple as “each byte is a sample.” Coupled with knowledge of the sampling interval and time of first boot that would be sufficient for a simple application. In such a system you might say that the EEPROM contains one “file” — the single list of temperature records.
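To make the “each byte is a sample” idea concrete, here is a minimal sketch run on a desktop rather than on the logger itself. The first-boot time, the ten minute interval, and the half-degree encoding are all assumptions picked for illustration; the only point is that the byte address is the sample index, so no extra metadata is needed to recover a timestamp.

```python
from datetime import datetime, timedelta

# Assumed values for illustration; nothing in the article specifies them.
FIRST_BOOT = datetime(2019, 1, 1, 0, 0, 0)   # time of first boot
SAMPLE_INTERVAL = timedelta(minutes=10)      # time between samples
EMPTY = 0xFF                                 # reserved: slot not written yet


def encode_sample(temp_c: float) -> int:
    """Pack a temperature into one byte: 0.5 degC steps starting at -40 degC."""
    raw = round((temp_c + 40.0) * 2)
    return max(0, min(254, raw))  # clamp, keeping 0xFF free as the empty marker


def decode_sample(raw: int) -> float:
    """Inverse of encode_sample."""
    return raw / 2 - 40.0


def sample_time(address: int) -> datetime:
    """The byte address doubles as the sample index."""
    return FIRST_BOOT + address * SAMPLE_INTERVAL


eeprom = bytearray([EMPTY] * 256)  # stand-in for a small EEPROM
for address, temp in enumerate([21.5, 21.0, 20.5]):
    eeprom[address] = encode_sample(temp)

print(sample_time(2), decode_sample(eeprom[2]))
```

Everything the reader needs to interpret the “file” lives in the firmware, not on the EEPROM, which is exactly why this stops scaling once more than one kind of data shows up.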

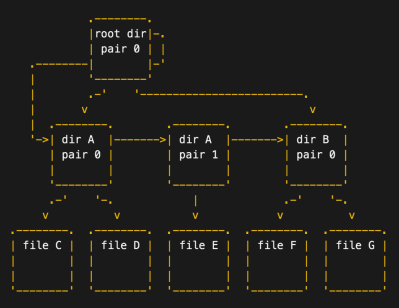

In more complex systems it wouldn’t be surprising to discover that more organization is needed. The advanced temperature logger had a small static website, a file of configuration settings, and the data logs. You could certainly keep all of these in one “file” and hard code the address offsets of each region but that would get brittle when storage regions need to change size or if the SD card is ever read by another device. An alternative may be to store information about where each region begins and ends in a pre-specified structure somewhere on the disk. And once you have such a structure it’s not hard to imagine adding other metadata like what regions of the disk are already erased or in use, timestamps, sizes, permissions, etc. You see where this is going. Even directories could be created by adding hierarchical levels of metadata. Now things are starting to look more recognizably like a desktop filesystem. For relatively simple embedded systems they may not be needed, but once the complexity ramps up, adding a filesystem can make storage much easier to deal with.
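As a rough sketch of that “pre-specified structure”, the snippet below packs a tiny region table into bytes and reads it back. The layout (a magic number, an entry count, then a name, start offset, and length per region) is invented for this example and does not correspond to any real filesystem.

```python
import struct

# Hypothetical on-disk layout, little-endian with no padding:
# header = 4-byte magic + 2-byte entry count
# entry  = 8-byte name + 4-byte start offset + 4-byte length
HEADER = struct.Struct("<4sH")
ENTRY = struct.Struct("<8sII")
MAGIC = b"RTBL"


def pack_table(regions):
    """Serialize a list of (name, start, length) tuples."""
    out = HEADER.pack(MAGIC, len(regions))
    for name, start, length in regions:
        out += ENTRY.pack(name.encode("ascii"), start, length)
    return out


def unpack_table(blob):
    """Parse a table back into a {name: (start, length)} dictionary."""
    magic, count = HEADER.unpack_from(blob, 0)
    if magic != MAGIC:
        raise ValueError("no region table found")
    regions = {}
    for i in range(count):
        offset = HEADER.size + i * ENTRY.size
        name, start, length = ENTRY.unpack_from(blob, offset)
        regions[name.rstrip(b"\0").decode("ascii")] = (start, length)
    return regions


table = pack_table([
    ("config", 512, 256),
    ("web", 1024, 8192),
    ("logs", 16384, 1 << 20),
])
print(unpack_table(table))
```

Resizing a region now means rewriting one table entry instead of recompiling the firmware, and any other device that knows the layout can find the logs. It also means that this little table is now the single most important thing on the disk.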

Data Corruption on the SD Card

Back to our problematic temperature logger. Why did the data get corrupted? Imagine the SD card with those critical sectors of metadata. What happens if one gets damaged? Sometimes they might be recoverable but without that all important metadata the disk turns into so much entropy. It’s kaput.

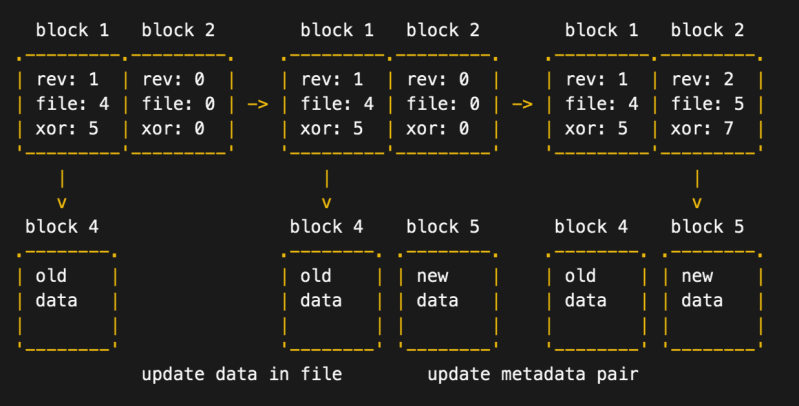

How do you make sure that things never get corrupted? Journaling is a popular option in which changes to be written to disk are first stored in a “journal” of pending operations. If the disk becomes damaged ideally this journal can be replayed to recover. Metadata could be written in more than one place on disk and compared, or written in a certain order with CRCs and other consistency checks themselves written in a specific order. Data could be retained in RAM until it was definitely, absolutely, for sure written to disk. An exhaustive list of options is a better fit for a PhD than this article.

It might be obvious that some of these choices would work better than others. It follows that schemes to increase durability on a desktop computer or server or phone may not be well suited to the risks inherent to an embedded device with an erratic power source. A moment’s thought is enough to realize that many of the ideas mentioned above would fail catastrophically if the power was removed in the middle of a write operation. It is possible to design filesystems with power loss in mind but that’s a specific feature to watch out for.
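One of the ideas above, writing metadata in more than one place with a CRC, can be made to survive a mid-write power cut if the copies are updated alternately. The sketch below is a toy simulation of that approach, not how littleFS or any particular filesystem does it: the slot size, the record layout, and the `fail_after` knob that fakes a power cut are all made up for illustration.

```python
import struct
import zlib

SLOT_SIZE = 32
BODY = struct.Struct("<I24s")   # sequence number + 24 bytes of payload
# Each 32-byte slot holds BODY followed by a 4-byte CRC32 of BODY.


def write_slot(storage, slot, seq, payload, fail_after=None):
    body = BODY.pack(seq, payload)
    record = body + struct.pack("<I", zlib.crc32(body))
    # fail_after simulates power loss: only the first N bytes reach storage.
    n = len(record) if fail_after is None else fail_after
    start = slot * SLOT_SIZE
    storage[start:start + n] = record[:n]


def read_latest(storage):
    """Return (seq, payload) from the newest slot whose CRC checks out."""
    best = None
    for slot in (0, 1):
        raw = bytes(storage[slot * SLOT_SIZE:(slot + 1) * SLOT_SIZE])
        body = raw[:BODY.size]
        (crc,) = struct.unpack("<I", raw[BODY.size:])
        if zlib.crc32(body) != crc:
            continue  # torn or never-written slot
        seq, payload = BODY.unpack(body)
        if best is None or seq > best[0]:
            best = (seq, payload.rstrip(b"\0"))
    return best


def update(storage, payload, fail_after=None):
    """Write the new version into the slot NOT holding the latest good copy."""
    latest = read_latest(storage)
    seq = 0 if latest is None else latest[0] + 1
    write_slot(storage, seq % 2, seq, payload, fail_after)


storage = bytearray(b"\xff" * (2 * SLOT_SIZE))   # two erased slots
update(storage, b"interval=60")
update(storage, b"interval=30")
update(storage, b"interval=10", fail_after=10)   # power dies mid-write
print(read_latest(storage))
```

The interrupted third write only ever touches the older slot, so the newest complete record is still intact and is the one that gets read back. A real flash part would also need an erase before each rewrite, which this simulation skips.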

littleFS Loves Microcontroller Designs

Back to littleFS, which it turns out meets many of our criteria for a reliable small embedded filesystem. It’s durable against surprise power loss (the documentation credits this as the main focus of the project). It can wear level, which is a stark contrast to FAT and especially important on small flash devices which aren’t good for as many cycles as ye olde spinning platter. And it supports all the static allocation, maximum RAM and ROM guarantees you want when your CPU has 16k of RAM. Plus the entire thing is a single source/header pair, with a second pair for optional utilities!

The best part of littleFS isn’t its consistency guarantees or its licensing (ok, we do really love a good licensing scheme though), it’s the documentation of course! Crack open that header file to see what it’s all about and you’re greeted by a great level of documentation. The source file? Even better! Just the right level of comment verbosity; explaining most of the higher level logic but not every line. Honestly it probably biases on the side of too much documentation.

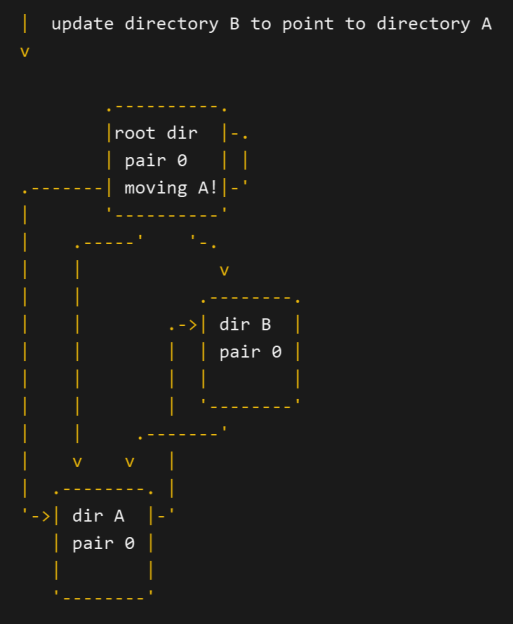

But that’s not all! Just when you think the documentation can’t get any better you find SPEC.md and DESIGN.md. SPEC covers the technical details. If you already know how the filesystem works SPEC is what helps you write additional tooling and debug a raw disk. DESIGN is everything else. DESIGN is a 1200 line description of everything. The choices made to get to the final implementation. Existing designs to compare to. The theory of operation, with ASCII diagrams so good we used them to decorate this article.

Anyway if it’s not obvious, I love this project and definitely intend to use it the next time I have storage to organize on a small embedded system. Even if you don’t intend to, if you’ve read this far, it might be worth a skim through DESIGN as a primer on how to write great documentation and what to think about when putting together a filesystem. And as always, if you’ve tried littleFS out or have another favorite uC filesystem, tell us in the comments! We’d love to hear how it worked out.

Here’s a performance comparison against SPIFFS, another very commonly used file system.

https://github.com/RIOT-OS/RIOT/pull/8316

How’s the wear leveliness of each? Is SPIFFS doing something cool with the extra time it uses?

“the power supply for your little device isn’t quite as reliable as you though and it tends to suddenly shut down.”

Yes, I am glad I haven’t shut down sudde…

lwext4 (https://github.com/gkostka/lwext4) is probably worth a mention also, though it doesn’t do its own wear leveling so it’s better suited to SD/MMC devices than raw flash.

With SD/MMC I’d be curious to know how often the culprit is the filesystem you can see and how often it’s the abstraction layer turning a big chunk of flash into a nicely behaved block device.

It’s undeniably the case that common filesystems, especially if assisted by a configuration that is optimistic about how much write caching it can get away with before committing to disk, can do all sorts of gruesome things if you pull the plug unexpectedly.

However, there’s rather a lot going on below the level you can see; often a pretty punchy processor, for the context, handling wear levelling, bad blocks, etc; and pretty much universally without the backup capacitors that are at least an option on nicer SSDs (they certainly don’t fit in the budget of your average SD card; and those things aren’t physically roomy either, microSD even more so) to allow their controllers time to shut down cleanly and finish whatever abstraction witchcraft they are doing below your filesystem.

If you are doing things perfectly safely on the FS side (either something specialized like these ones or just mounted read only so it’s not possible to get caught partway through a write), are you OK, or will the SD card itself freak out behind the scenes after a few nasty power cycles?

This is exactly the issue, and why you shouldn’t trust your data to SD cards.

I tested a lot of cards about 10 years ago. Cheap ones, expensive ones (600 euro for 8GB), and they all failed the same way. Cut the power mid-write, and the internal administration messes up. Usually resulting in a single physical sector becoming multiple logical sectors on the block device level. No amount of filesystem recovery can protect you from that.

Things might have become better in 10 years, but I do not think so.

eMMC however, seems to act a bit better, but I haven’t done tests on that like I did on SD.

It seems to me that the MMC cards could really benefit from a nice little 5.5V 1F cap on their supply rails with a Schottky diode. This might be possible as a retrofit to some SBCs. It might just allow the card management processor to complete its operations. What do you think?

I had a fun little project a while back, so allow me to add my experience. You know how microcontrollers these days have a bunch of flash on them, most of which goes unused? Wouldn’t it be neat to turn that into a tiny filesystem, to make reading and writing configuration or small logs easier?

No. Stop. GIVE UP.

Seriously, give up. That microcontroller may have more than a hundred times the space you need, but its flash space is organized into a small number of whopping big sectors (8K, 16K, or even 32K), and your flash filesystem really really needs as many sectors as possible. Ideally hundreds or thousands of sectors, and you’re not likely to get more than 10 from your microcontroller.

When I whipped up a “flash space simulator” in C++ and ran a few tests with an 8-sector FS and a single file, SPIFFS started corrupting data almost immediately, with LittleFS and Coffee FS (from the Contiki project) failing very shortly afterwards. Even with 100 sectors

In summary: no matter how much free flash space is left on your microcontroller, leave the filesystems to the dedicated flash chips.

Oops, a sentence got cut off, but I think you can guess what it said: “Even with 100 sectors, corruption happened after not too many writes”.

Can you clarify a bit? What is the cause of data corruption?

I wish I had taken better notes while I was running these tests, because all I have is my (fuzzy) memory.

What I think I did was create a 1kB test configuration file, then see how many times I could write 64-byte changes to the file before the filesystem returned an error or didn’t return the correct data when reading values back.

If I remember correctly, the SPIFFS and littleFS write functions began to return errors, while CoffeeFS’s read function began to return blocks of data with one or two random bytes containing a zero where there should not be zero.

I’m actually doing just that on a blue pill board. The STM32F103C8 reports 64KB but actually has 128KB, so I use the upper 64KB formatted in FAT12 as a file system using FatFs. I’m still in development at this time so I can’t report any error rate.

Interesting, if I’m reading the datasheet right it uses 1kB sectors for the whole flash area, which is a lot better than most. However, that still only gives you about 64 sectors, and FatFS is *not* flash-friendly. The only time I’ve been able to use FatFS with a microcontroller was when the actual storage target was a big microSD card.

Best of luck, though, and I hope it works for you!

No, I use 512-byte sectors and a 1024-byte RAM buffer. The RAM buffer is written back only when required to reduce flash wear. It is not immune to power failure but batteries rarely fail.

The number of sectors could be a limiting factor for a “dumb” fs which triggers a read-modify-erase-write cycle for each operation… but any reasonably flash-aware FS should (mostly) avoid such problems.

Instead of giving up, use the right tool for the job:

https://os.mbed.com/docs/mbed-os/v5.11/apis/nvstore.html

What do organizations such as JPL do for spacecraft? Can we learn from what they’ve done? Surely they are using something more resilient than FAT.

Core memory?

yes; I knew a guy who worked on Viking and it used something like core: ‘magnetic wire memory’. The Voyagers used magnetic tape, 8-track style. New Horizons used a kind of flash of sorts in the ‘Solid State Recorder’.

The recent space probes for logging commonly use SDRAM, Solid State Recorders for storage under high levels of radiation with a strong Reed-Solomon EDAC (Error Detection and Correction) code e.g. (16, 12) Reed-Solomon code with 50 percent redundant bits. Flipped bits are corrected every few milliseconds when the memory is refreshed, and additional radiation hardening is concentrated around the EDAC and refresh hardware.

Is there a way to use this file system for something like a RasPi? The RasPi is where a lot of people get introduced to filesystem corruption – especially since they will try to put the system (and full Linux install) into situations where sudden power-down is likely. A distro with a “mixed” file system might be a way to escape that particular dead end.

Notice how many replies you got? To a problem which affects “…the world’s best selling computer…”? And to the problem which has never been fixed since ‘Day One’?

Do you want a reply? Here is a reply from the 1990s that still works today: use the boot media to load a diskless kernel that mounts its root file system over NFS. This is a great solution because it solves a number of problems and it is really easy to set up.

unsure if the pi can do this, but you could entirely skip over the bootable media and boot from pxe network…..

Newer Pis can.

Just booting from a sdcard doesn’t stress the card that much and has the advantage that the computer will boot up even if it loses the connection. That is why I boot from SD, run a local file system on USB SSD and offload captured data etc to a networked drive. Losing the ability to log captured data can usually be tolerated for a while and it can be cached locally if necessary. This approach also helps with gathering data from many sources.

The real question is can you boot from the DRM chip embedded in Pee V2 camera module?

Maybe it’s not a real issue when you avoid noname and counterfeit SD cards? ANECDOTAL EVIDENCE WARNING. I’ve never had any problems with Raspbian running 24/7 (NAS, media player, data logger and a web server exposed on a public IP) on SanDisk cards bought in reputable brick and mortar stores. And now that I’ve started bragging about that I will probably see my first filesystem corruption tomorrow ;-)

N alluded to this… you can boot over network and not have an SD card at all if you want. Jonathan Bennett wrote a guide:

https://hackaday.com/2018/10/08/hack-my-house-running-raspberry-pi-without-an-sd-card/

I think this might work with some modifications. All files in single directory, and quite simple to implement.

https://en.wikipedia.org/wiki/CP/M#File_system

Re file corruption due to power loss, two copies of data should be always written, and then compared.

Sometimes what you need is not a file system but a wear leveled data storage mechanism (see it as a huge and unique file containing everything). Then you have https://github.com/dlbeer/dhara .

Unless you intend to modify the structure of your program alive (that’s a much more complex task than it seems), then a filesystem is usually a bad solution for a bad problem.

If you want to store a lot of data you may want to consider using hard disks. They may be old fashioned and mechanical but they are incredibly reliable, especially in a “RAID 1” setup where data is written to two devices. 2.5” USB hard disks are readily available and inexpensive. Not great for battery power or where size is an issue but you can usually overcome that by using separate, shared storage nodes. Even better, replicate the data to two storage nodes. A database company I worked for used this approach to achieve at least 99.999% (“five nines”) availability by coupling fast corruption detection with a low MTTR (mean time to recovery) mechanism. They provided an ultra high reliability database primarily for telecoms use. It was relatively inexpensive compared to standard databases, such as Oracle, even 20 years ago. It should be possible to create a much lower cost version of this today. Then, using an IOT approach, the storage could be shared (under your control) also making it a good alternative to cloud storage. Even just plugging a USB hard disk into a Pi or even a small microcontroller is probably cost effective in many cases, and quite simple. Just make sure your power supply is up to it. On my Pis I am working on using a combination of sdcard, just for fast boot up, USB connected SSD for the file system and separate network connected (NAS) disk based storage nodes for logging and long term storage.

Honestly that’s a totally different league. We’re talking MHz Cortex M processors here, not GHz quad-octa-dual Cortex A.

It is in a different league, but it does work very well and reliably. I also use a very similar setup here in my LAN of things…A few odroid HC2’s and a couple pies that back one another up periodically with rsync cron scripts is a real slick setup if you can afford the extra storage (which is cheap, and low power when spun down – most of the time). Databases on one of the HC2’s running linux are dead reliable even when used by data aq in my physics lab which sometimes utterly fries the data aq gear due to EMP from some large arc…and it’s online on the NAS so I can analyze the data from elsewhere and when I feel like it too…

The approach probably deserves its own article. Booting pies from SD is fine and fairly fast, I’ve also just booted them off SSD or USB stick, haven’t tried PXE yet.

Restoring /boot is a heck of a lot faster and easier than the whole shebang, should you blow something up….

LittleFS is nice in that it solves a couple of problems at once:

– storage management

– data corruption

– wear leveling

It also has great documentation for anyone to dive into.

I love this comparison:

FAT:

https://os.mbed.com/media/uploads/janjongboom/littlefs2.gif

LITTLEFS:

https://os.mbed.com/media/uploads/janjongboom/littlefs3.gif

Also find this informative video: https://www.youtube.com/watch?v=ogdqeaO-83s

In our tests we found LittleFS to be working out pretty well, but you must be aware that one problem it hasn’t solved yet is delivering decent random file update speed. Because the data is organized as a reverse linked list, appending to a file is fast, but updating the first byte in a file is pretty slow since it requires rewriting the entire linked list (and thus the entire file).
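To see why that asymmetry falls out of a reverse linked list, here is a deliberately simplified model: each block points back at the previous one, and blocks are never modified in place (copy-on-write). This only models the behavior as the comment describes it. The real littleFS structure documented in DESIGN.md is more elaborate, so treat the numbers as an illustration of the trend rather than a measurement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Block:
    data: bytes
    prev: Optional["Block"]   # pointer back toward the start of the file


class CowFile:
    """Toy copy-on-write file stored as a backwards-linked chain of blocks."""

    def __init__(self):
        self.tail = None
        self.blocks_written = 0

    def _new_block(self, data, prev):
        self.blocks_written += 1
        return Block(data, prev)

    def _chain(self):
        # Walk back from the tail, then flip so index 0 is the first block.
        chain = []
        node = self.tail
        while node is not None:
            chain.append(node)
            node = node.prev
        chain.reverse()
        return chain

    def append(self, data):
        # The new tail just points at the old tail; nothing else changes.
        self.tail = self._new_block(data, self.tail)

    def update(self, index, data):
        # Replacing block `index` changes its identity, so every later block
        # (which points back at it, directly or indirectly) must be rewritten.
        chain = self._chain()
        prev = self._new_block(data, chain[index].prev)
        for old in chain[index + 1:]:
            prev = self._new_block(old.data, prev)
        self.tail = prev

    def read(self):
        return b"".join(block.data for block in self._chain())


f = CowFile()
for i in range(100):
    f.append(bytes([i]))

f.blocks_written = 0
f.append(b"\x64")
append_cost = f.blocks_written      # blocks written for one append

f.blocks_written = 0
f.update(0, b"\xaa")
update_cost = f.blocks_written      # blocks written to change the first block

print(append_cost, update_cost)
```

In this model an append costs one block write no matter how long the file is, while changing the first block costs one write per block in the file.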

Associative memory.

Wow, that’s a fantastic demo!

I can’t stop watching it…

Which FAT implementation was used in this test? It’s not like FAT is inherently incapable of logging boot count. If one wants to prove a point it’s easy to find some lousy one that had write functionality added as an afterthought.

While it is not the best choice when power loss is expected, it can be made more resilient by carefully ordering write operations, flushing buffers at the right time, etc. At least that’s what Microsoft appears to be doing when dealing with removable media in Windows.

ChanFS, which I believe is hosted here: http://elm-chan.org/fsw/ff/00index_e.html

Flash storage is so massive and cheap these days that I suspect that worm drive file systems are the way to go.

Give us IBM’s Millipede Memory.

If the flash memory does its own wear levelling, its advertised block structure might be completely unrelated to the physical blocks. Even treating it as WORM might not guarantee data consistency.

Write once read many.

How foolish of me, I thought the O stood for Ovaltine.

An SD card with wear levelling must have a mapping between logical and physical blocks. You can’t know what that mapping is, or when or how it gets changed, or what will happen if you cut power while it’s being changed. The SD card might also cache writes, to give itself time to alter said mapping, so writes might happen out of order, and even a log-structured file system could become corrupted.

No, the data is not considered written unless it can be read back and can also be on a mirror raid, written in parallel to multiple cards, ideally 3 or more, which stack nicely. :-) I don’t mind arrogance, if it is earned; yours is not justified.

Worm drive, like a worm gear? I don’t see a connection to file systems, although they were used to drive the heads in the old fashioned floppy drives. But AFAIK the file system was standard FAT(12).

W.O.R.M and not like the ones in your gut either.

It seems like you can also open the littlefs-written SD card easily on linux:

https://github.com/ARMmbed/littlefs-fuse

Very neat!! :)

If your goal is just to store temperature readings or something like that I wonder if it might be even better to just write them directly to storage sans-filesystem. The only problem I see is that after a restart you would have to replay the thing to find the spot where you left off.
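The “replay to find where you left off” step doesn’t have to be a full linear scan. Assuming records are fixed-size, written strictly in order, and that erased flash reads back as 0xFF, a binary search finds the first free slot. The record layout below (a marker byte so a record is never all 0xFF, a reserved byte, and a signed 16-bit temperature in hundredths of a degree) is made up for this sketch.

```python
import struct

RECORD = struct.Struct("<BBh")        # marker, reserved, temperature * 100
RECORD_SIZE = RECORD.size             # 4 bytes
MARKER = 0xA5                         # guarantees a record is never all 0xFF
ERASED = b"\xff" * RECORD_SIZE


def is_erased(flash, index):
    start = index * RECORD_SIZE
    return bytes(flash[start:start + RECORD_SIZE]) == ERASED


def find_next_free(flash):
    """Binary search for the first erased slot (records are contiguous)."""
    lo, hi = 0, len(flash) // RECORD_SIZE   # the answer lies in [lo, hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if is_erased(flash, mid):
            hi = mid          # first free slot is at mid or earlier
        else:
            lo = mid + 1      # everything up to mid is already written
    return lo


def append_record(flash, temp_c):
    index = find_next_free(flash)
    if index * RECORD_SIZE >= len(flash):
        raise IOError("log region is full")
    RECORD.pack_into(flash, index * RECORD_SIZE, MARKER, 0, round(temp_c * 100))
    return index


flash = bytearray(b"\xff" * (RECORD_SIZE * 1024))   # erased 4 KiB log region
for n in range(300):
    append_record(flash, 20.0 + n * 0.01)

print(find_next_free(flash))   # where to resume after a restart
```

On real hardware you would cache the index in RAM after the first lookup instead of searching before every write, and a record torn by power loss would still need something like a CRC to detect.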

I’m also wondering what would happen if one tried to use this filesystem to install an operating system. In particular I want an OctoPi install that can be immediately powered off with the printer but not with a read-only filesystem b/c I want to be able to actually upload new g-code files to it.

For OctoPi, use 2 partitions, 1 read-only, and 1 read/write. Then you can safely wipe the data partition if it gets corrupted.

If you need an FTL to go with it to use NAND flash, this is a good option: https://github.com/dlbeer/dhara. Its journal can also make a FAT fs more reliable.