I was a little surprised to see a news report about Andreas Schleicher, the director of education and skills at the OECD (the Organization for Economic Cooperation and Development). Speaking at the World Innovation Summit for Education in Paris, Schleicher said that teaching kids to code is a waste of time. In particular, he seems to think that by the time a child today grows up, coding will be obsolete.

I can’t help but think that he might be a little confused. Coding isn’t going away anytime soon. It could, of course, become an even deeper specialty, and thus less generally applicable. But the comments he’s made seem to imply that soon we will just tell smart computers what we want and they will just do that. Somewhat like computers work on Star Trek.

What is more likely is that most people will be able to find specific applications that can do what they want without traditional coding. But someone still has to write something for the foreseeable future. What’s more, if you’ve ever tried to tease requirements out of an end user, you know that you can’t just blurt out anything you want to a computer and expect it to make sense. It isn’t the computer’s fault. People — especially untrained people — don’t always make sense or communicate unambiguously.

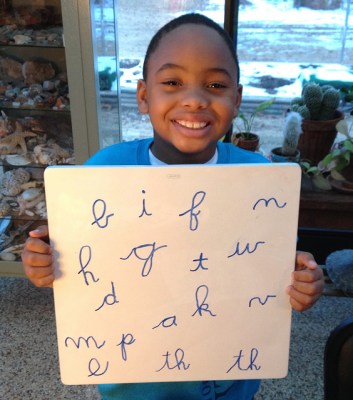

But there’s a larger issue at hand. When you teach a kid to code, what benefit do they actually get? I mean, we can all agree that teaching a kid Python isn’t necessarily going to help them get a job in 10 years because Python will probably not be the hot language in a decade. But if we are just teaching Python, that’s the real problem. A Python class should teach concepts and develop intuition about how computers solve problems. That’s a durable skill.

Schleicher almost agrees. He said:

For example, I would be much more inclined to teach data science or computational thinking than to teach a very specific technique of today.

I’m not sure what computational thinking is, but I would expect it is how computers work and how to architect computer-based solutions. That’s fine, but you will want to reduce that to practice and today that means JavaScript, Java, Python, C, or some other practical language. It isn’t that you should know the language, you should understand the concepts. I do a lecture with kids where we “code” but not for a real computer and it illuminates a number of key ideas, but I would still classify it as “coding.”

This isn’t a new problem. Gifted math teachers or gifted math students build intuition about the universe by understanding what math means. Dull teachers and students just learn rote formulae and apply patterns to problems without real understanding. Many programming classes turn into a class about the syntax of some programming language. But the real value is to understand when and why you would want, say, a linked list vs a hash table vs a binary tree data structure. It seems like teaching those concepts in the abstract with UML diagrams and hand waving won’t be very effective.
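As a rough illustration of that "when and why" question, here is a minimal Python sketch. The names list and the helper `first_at_or_after` are made up for the example, a `set` plays the hash table, a sorted list with binary search stands in for a balanced binary tree, and `collections.deque` stands in for a linked list. None of this is a benchmark; it only shows which questions each structure answers naturally.

```python
from bisect import bisect_left
from collections import deque

# Hypothetical sample data for the illustration.
names = ["ada", "grace", "linus", "dennis", "ken"]

# Hash table (a Python set): quick "is this name present?" checks, no ordering.
present = set(names)

# Sorted list plus binary search: a stand-in for a balanced binary tree.
# It answers ordered questions such as "what is the first name at or after 'h'?".
ordered = sorted(names)


def first_at_or_after(key):
    """Return the first name that sorts at or after key, or None if there is none."""
    i = bisect_left(ordered, key)
    return ordered[i] if i < len(ordered) else None


# deque as a stand-in for a linked list: cheap inserts and removals at either end.
queue = deque(names)
queue.appendleft("barbara")
first = queue.popleft()

print("grace" in present)        # membership test on the hash table
print(first_at_or_after("h"))    # ordered lookup on the sorted list
print(first)                     # item taken from the front of the queue
```

The point of an exercise like this is less the Python and more the habit of asking which operation the problem needs most often.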

You also have to wonder about unintended consequences. Kids aren’t taught to write in cursive in many schools, yet that’s really good for fine motor skill development. While the slide rule was harder to use than a calculator, it forced you to think about scales and estimation.

If you listen to Schleicher’s other addresses and his TED talk, he has a lot of great ideas about education. Perhaps he doesn’t mean the word “obsolete” as strongly as I’m taking it and only means most people don’t need to know specific coding techniques. Maybe this is the press taking things a bit out of context. Oddly enough at the last summit, one of Schleicher’s colleagues appears to disagree with his position, as you can see in the video below.

I would submit that as more people try to do more with computers, they need to be increasingly able to think logically about how a computer solves problems. And the best way we have to do that today is through teaching coding, but teaching it in the right way.

What do you think? Have you taught kids to code? How do you get past the specifics and develop the general understanding required to formulate computer-based solutions to real-world problems? Do you want kids to learn about coding in school? Why or why not? Let us know in the comments.

Same reason you learn Latin. To learn how to learn, and the background of other, modern stuff.

This +100

No this +c, oh, no that roman. Sorry..

This C++?

Well said

Or you can just learn to code instead of learning Latin. It’s built right into Python, of course:

>>> 'ROMANS GO HOME'.encode('latin-1')

b'ROMANES EUNT DOMUS'

Linguam Latinam occidit Romanorum!

Translation:

Latin is a language

As dead as it can be

It killed the ancient Romans

And now it’s killing me!

omnia dicta fortiora si dicta Latina…

“One of the main causes of the fall of the Roman Empire was that, lacking zero, they had no way to indicate successful termination of their C programs.”

–Robert Firth

But then again, they didn’t need to deal with division by zero or check for NULL pointers before dereferencing.

And think of the off-by-one errors when referencing positions in arrays if you can’t zero offset!

&a != a[1] if you know what I mean.

And it bloody well shouldn’t be – you’re comparing an int** and an int. (or whatever your element type is)

LIAR.

I just tried it.

Have I just wandered into the Peoples Front of Judea, or the Judean Peoples Front?

HOW many Romans, boy???

Tutene? Atque cuius exercitus?

B^)

“Or you can just learn to code instead of learning Latin.”

No.

You are probably not even serious; I can’t tell. I’ve run into this enough before, though, where people equate learning a computer programming language with learning a conversational human language. It is NOT the same thing.

I speak 3 human languages and am learning a fourth. I code in 2 languages professionally and like most have dabbled in several others. All of this has exercised my brain in ways that are not the same. To equate them is like saying there is no reason to do push-ups if you already do sit-ups!

I might question the choice of Latin though. While I admit it might be somewhat helpful in understanding Latin-based scientific terms, why go to all that effort when the same effort could be applied to a modern language that would actually give you a whole new community of people that you can communicate with?

I’m with you on this one. Computer language != human language. One is really instructions, while the other is really communication between equals / sentient beings.

For that reason, I’ve always thought the “computer code is expression” bit that’s required to make it copyrightable is a bit more than strange.

I like both your perspectives, but I feel that “computer language != human language” is too strong a statement. They both share rules and dictionaries, and we may call that syntax for both. Communication is certainly missing from the first, I agree. It’s something like the body/soul relation: syntax is the body, communication is the soul.

A big piece of learning to code is learning to break problems down into smaller pieces, which you can solve with the tools that you have. This seems to me like the most valuable part of teaching kids to code. Languages come and go; the thought processes, not so much.

What is this? People called Romanes they go the house?

All you needed to say is “Learn how to learn”, excellent comment.

That’s really the source of the problem. End of story. Today, it’s all about reducing a specific task to whatever steps require the least amount of thought.

We’re going through this with our 4th grade son being taught math by the “common core” method. It’s absolutely asinine. We just met with the math teacher today to voice our concerns. I showed her how cumbersome and error prone the techniques are when you start dealing with larger numbers and was told “oh, sure, but once we get to 3 digit numbers, the kids will just use calculators”.

One wonders if this “expert” endorses not teaching kids to operate vehicles since surely every vehicle will have level 4 autopilots by the time they’re of driving age.

A skill is a skill, and a means to conceive and solve problems has benefits far beyond the technicalities of the specific tool.

I think the problem is most primary school teachers aren’t that well versed in math themselves. The common core stuff is supposed to help kids understand numbers/methods better, but it’s being taught by people who only had to take basic college math to fill their math requirement. Once kids get to high school they can find some teachers that have a real desire to teach math.

Whoa hold up. Common core is designed precisely to illustrate *why* the algorithm works, not *that* the algorithm works.

Multiplication by parts is the standard example. The algorithm we all learned is a black box: perform these steps (correctly), and at the end you’ll have the (correct) answer.

Multiplication by parts gives an exploded view of the common algorithm – multiply all pairs of place values, then calculate the sum. This illustrates exactly which products need to be calculated.
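For anyone who has not seen the method, here is a minimal Python sketch of that “exploded view”. The function names `place_values` and `multiply_by_parts` are made up for this illustration and it assumes non-negative integers; it is not taken from any curriculum material.

```python
def place_values(n):
    """Split a non-negative integer into place-value parts, e.g. 347 -> [300, 40, 7]."""
    digits = str(n)
    parts = []
    for i, d in enumerate(digits):
        power = len(digits) - i - 1
        value = int(d) * 10 ** power
        if value:  # skip zero digits, they contribute nothing to the sum
            parts.append(value)
    return parts or [0]


def multiply_by_parts(a, b):
    """Multiply every pair of place values, then add up the partial products."""
    partials = [(x, y, x * y) for x in place_values(a) for y in place_values(b)]
    return partials, sum(p for _, _, p in partials)


partials, total = multiply_by_parts(23, 47)
for x, y, p in partials:
    print(f"{x} x {y} = {p}")
print("sum of partial products:", total)
```

For 23 x 47 the partial products are 800, 140, 120 and 21, which add up to 1081. The standard algorithm computes the same four products; it just hides them in the carries.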

I do agree that multiplication by parts is impractical for large products. I also agree that your child’s teacher’s response of “they’ll just use calculators” is misguided. Ideally common core should be used to introduce the process, then the common algorithm/calculator/mental math can be used, but only once the fundamentals are understood.

I understand the logic behind the method, but feel its implementation and possibly even its objective are faulty.

First, teachers have clearly not been trained to teach it effectively. Before they got to multiplication and division, my son’s class spent weeks filling full 8.5×11 pages with number charts, just blindly filling in numbers. Also, from what I’ve read of the Common Core standard, the focus is less on a particular method and more on offering multiple methods of problem solving. That sounds great, but the end result is that the limited number of hours available to teach a subject (multiplication, for example) is now divided among 3, 4 or 5 different methods, none of which children are allowed to master before jumping into the next.

Second, I don’t believe a lack of understanding why the “standard algorithm” works is the primary cause of the US falling behind in math and other areas. There are endless examples of people being productive using tools for which they have limited, if any, understanding of exactly how they work. You don’t need to know how an engine works to drive a car, you don’t need to know how a microprocessor works to use a computer.

In my (humble ;) opinion, adding the complication of the Common Core methods is exactly opposite to what is needed in teaching – application. It further removes the value in learning math and places it behind more and more steps. Not everyone will or needs to learn advanced math. The way to get more people to do it is to interest them in the things they can use it for, not making it even more abstract than it already is. Ask my son to do a few multiplication or division problems just for the sake of practice and his eyes glaze over. But I can retain his focus and engagement easily if I apply it to something tangible, even if it’s just making some WS2812 LEDs light up in some pattern.

Learning how to learn or realizing that life is all about lifelong learning. Reminds me of a famous quote:

“A human being should be able to change a diaper, plan an invasion, butcher a hog, conn a ship, design a building, write a sonnet, balance accounts, build a wall, set a bone, comfort the dying, take orders, give orders, cooperate, act alone, solve equations, analyze a new problem, pitch manure, program a computer, cook a tasty meal, fight efficiently, die gallantly. Specialization is for insects.” – Robert A. Heinlein

Note: he said “program a computer” in 1973 which is still very useful today and will always be in the future as no automation will provide the same level of control and understanding.

On one hand, learning the basic decision structures of digital computer control might encourage applying logic in everyday life. On the other hand, I think learning it early leads to developing habits to use brute force to find solutions to problems (and many that will not yield to brute force). From teaching high school math and physics, I would hold off on the computer programming until they can learn computer science.

Ideally a person will have mastered linear algebra first and have a feel for solution spaces and null spaces and all that and how matrix methods can describe networks and relationships and data-bases. Then a deep dive into computer programming and languages will be very concrete.

But this top down approach is just an impression since I learned bottom up. Aside from a Fortran IV course on a batch processing system, it was all mysterious until I bought an AIM65 with BASIC and FORTH and the FORTH had an interactive assembler. I knew analog and some digital electronics and began interfacing new hardware.

From what I have seen in schools, STEM is like Special Olympics. With a few exceptions, it is all about the parents. It also makes very hard things look easy, which is misleading. Eventually (usually quickly) you hit the “Oh, you need to know math?” point and your tech club membership drops to 5 boys and 1 girl. And at least 3 of the boys are there because they hope things will blow up.

What is the down side of waiting until there is some mathematics competence? Seriously, want to know.

Luckily people like you weren’t in charge in the 80’s when home computers and programming took off. Your method would have killed it deader than a door knob.

You would have restricted it to the intellectual elite, and those who wanted to learn to code would have had to jump through your hoops. There would have been no Apple; Wozniak would have been kicked to the curb or grown so disgusted with the BS he’d have walked away.

Not so! We are talking about what to teach children. PC’s in the 80’s were all about self learning. Where do you think Woz learned (and invented) all that stuff?

Ha! So it’s because of shit people like you that I never learned coding…

In the early ’90s, parents and teachers would all tell me, “Oh, you need to learn math and be good at it before you can THINK about touching programming.” Much later, when I was 20 and struggling with math, I had an EE class where we were taught ASM and then C. That improved my math skills so much that I was actually able to do mental calculation, using all those neat ASM tricks.

Computer science is not useless, but programmers will learn it as they improve their programming skills.

Linear algebra? Tell me that you need it to program in 2019 with a straight face!

Maybe it was anxiety?

https://www.repository.cam.ac.uk/handle/1810/290514

The rational part of the brain doesn’t finish growing until 27 years old. Maybe you had to grow into your math ability. It definitely happens.

“Linear algebra? Tell me that you need it to program in 2019 with a straight face!” Exactly. Being able to frame such a question implies you “write code”, as opposed to develop algorithms. We will never know if it would help you.

You do not need linear algebra to develop a stupid social media app or some web content delivery, but we use linear algebra every day in our business doing computational data science. It depends on what type of programs you are developing. So yes, to work here as a programmer you had better know your linear algebra. Sorry.

So programming in a certain niche can require understanding of that niche? How surprising.

I know, right? Kids spend years learning detailed procedures without the life experience to know how they are useful (and the teachers don’t know either). Instead, one should cover applications, and be engaged in projects, then spend the year filling out the skills needed to finish the project.

“Wax on, wax off” worked for The Karate Kid, but Miyagi got lucky. When I tried to introduce an adult to programming, she got frustrated, and eventually demanded to know what the goal was. Once she had a goal, the disparate pieces integrated, and she bootstrapped herself. Kids can be like that too. Give them a project, then teach them math to get them there.

As a kid I can confirm this

Wow. Sorry to hear that.

Was that attitude common in the 90s? Or did you just get dealt a really shit hand?

As an 80s kid BASIC was almost the first thing I ever saw on a computer. RadioShacks were everywhere and Tandy ads were plastered all over the TV and printed media. No Rat Shack salesman worth his salt would let you get out the door without up-selling you a bunch of accessories that included one or two beginner’s BASIC programming books. Those certainly were not written for mathematicians!

Also, with parents who didn’t believe in spending a lot of money on software making the computer actually do something meant checking out a bunch of computer magazines and typing out code that was printed within. It was hard to tell what was in those programs because they were almost always absolutely wretched spaghetti code but I’m pretty sure that the vast majority contained no higher math at all.

I find the assertion that one needs linear algebra to program especially amusing. In my late 1990s college linear algebra class the textbook included a bunch of programming exercises. They wanted the student to implement the math we were learning in code. I think there might have been some appendix with a very cursory introduction to BASIC or PASCAL or something already arcane like that but really they expected the students to already know how to code. Anyway, early on in the class the professor actually assigned one of those exercises. About 2/3 of the class were computer science majors. We all saw it as an easy A. The other 1/3 of the class was just about ready to revolt! I guess to those adults in your life learning to code or do advanced math would have been an impossibility due to circular dependency. LOL

I would point out though that learning Linear Algebra is not a bad idea once you are ready. Matrix transformations are used a lot in 3d design and physics simulations of both the gaming and scientific variety. Still, there are certainly an awful lot of fields one can program for and never use the stuff.

I started with BASIC at age 10, and my feeling is that if I had waited until I was old enough to worry about what the different types of algebra are, or what problem spaces are, I wouldn’t have been able to develop digital control structures and logic as a native language skill.

When you start comparing things to the Special Olympics, you should learn to recognize that you’re saying something deplorable. When the context is education, that means you’re trying to damage the brains of the little ones. For shame.

The silliest part about the math stuff is that lately I’ve been writing a lot of firmware, and building mixed circuits, and it involves a lot of math. Middle-school level math. That is best completed with a calculator. There is absolutely no good reason to scare kids away from programming with math. They need to understand math to become an engineer who designs the computer, but as a programmer the computer is never going to ask them to do the math and tell it the answer; it will be very much the other way around.

When they show fear of math, just tell them, “No, the computer has to know the math. You just have to learn what some of the math stuff is called, and when to use which one.”

I think I was 12 or 14 when timesharing became a “thing”. I took my first course in summer school with a Teletype and paper tape. The language itself wasn’t anywhere near as valuable as the process of analyzing a problem, breaking it down into steps and developing the logic necessary to solve the problem.

That’s what your first programming course should teach you – how to attack a problem. And that’s valuable all through life, regardless of how much math you have. Even English majors can benefit from a programming course.

“When you start comparing things to the Special Olympics, you should learn to recognize that you’re saying something deplorable. When the context is education, that means you’re trying to damage the brains of the little ones. For shame.”

Nope.

Teaching a kid to code even something simple like logo or basic will if anything show them math can be a useful tool vs a boring and repetitive subject that results in lots of homework and teach them how to do problem solving.

+1 – I loved logo, and it was another one of my early programming intros that was actually school-based. School may have given very minimal instruction, but that is enough that you can look into what other commands are available yourself, and run with nested loops and things to draw interesting math-based patterns (at least for a 5th grader at the time). Picking up the ideas of program flow, loops, and APIs that early on likely had tons of benefits for later in life.

Yep, same here, I started with very simple BASIC on a TI TV-console well before 10. Started as simple as ‘print repeated characters to the screen (HCHAR?)’, and not so much as a program as just enter a command to make the computer do something. That is all it takes, to think ‘hey, I can tell the computer what to do, that is pretty cool’, to get hooked and go from there. Heck, I likely didn’t even get into multi-line ‘programs’ until some time later, but just getting the early grasp on how to interact logically with a computer made things sooo much easier to pick up later on. I wasn’t more than 12 by the time I had a ‘real’ PC and was cramming wires into the parallel port and had figured out how to drive a stepper motor and read switches from QBasic. 99% self-taught between a local library and the beginnings of the internet. Can’t imagine if I’d had to wait until late high-school or college to be introduced to programming, because ‘you need to know maths first’. Baloney. Sure, if you’re developing sorting algorithms or need highly efficient processes for large volumes of data, or the like, knowing maths and big-O is ideal, but you by no means need it to start, and for that matter starting with it might just kill any interest altogether. Learning to think logically and in terms that can be related to systems/computer/process/program is much more important to pick up early on and grow with, from my perspective.

> What is the down side of waiting until there is some mathematics competence? Seriously, want to know.

The downside is that you are waiting. The downside is that you are learning abstract concepts without learning why or how to apply them. Do you really think that all these mathematical methods were developed in a vacuum, just for the sake of math? At least whenever I looked, I found that the people who invented them needed those because they were trying to solve another problem.

Being confronted with a problem is a great motivator to learn something.

I don’t think it’s easy to say which skills will be important. I don’t trust people who make claims about what the world will look like 20 years from now. Learn to program, and even if it becomes an obsolete skill, it’s still more likely to be useful than whatever some dude claims you might need 20 years from now.

Actually, yes, quite a lot of incredibly useful math that we use today was developed simply for the sake of math itself. In fact the majority of known math still has no practical application outside of use in finding more math. When you say that the people that invented these concepts needed them for something, you aren’t considering that the something they needed them for tended to be solving an obscure and irrelevant math problem.

Thus, the majority of known math is basically irrelevant to the vast majority of programming.

“Quite a lot” is a subjective quantity so short of proving that all or no math is without application it cannot really be proven or disproven.

Still, I would like to lay down the challenge that anyone who thinks this is true name “quite a lot” of math theorems, methods, etc.. which have no known practical application outside of generating more math. And for those that read the responses and know of practical applications, please respond in kind.

I think you will find that a “whole lot” (yup, another subjective quantity) of math has real world applications that you never would have guessed.

I call ‘Teapot’ (of the Russell variety). He’s dead now.

Talking of dead mathematicians: try Boole. His mathematics went exactly nowhere, until much, much later.

Just teach symbolic methods as early as possible, it’s a perfect vaccine against the bruteforce-centric thinking.

No, teaching them to apply logic would be a bad thing as then you would have a generation of children who won’t vote for any politicians. /s

Also, if you want better membership of your tech club, do things people enjoy

Take your suggestion and replace Programming with Driving a Car, then mathematics with knowledge of how to build all pieces of a car, assemble and maintain it. Can you honestly say you could design the microchips in your computer? If you weren’t allowed to code until you have that understanding you would never likely code. Taking your example to the automobile, we’d all be using Ubers driven by geriatrics.

Instead of saying ‘wait for the math’, teach the programming while you teach the math. Nothing helps a struggling algebra student (me, my son for identical reasons) like having a real world use and example for doing so. When I showed him that the classic mx+b thing drove all of his computer game animations he started paying attention, not just lip service. Even –no especially- straight A kids need a reason behind the structure while they learn it.
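To make the mx+b point concrete, here is a minimal Python sketch of a sprite moving at constant speed. The function name `position` and the numbers are invented for the example and do not come from any particular game engine.

```python
def position(t, speed, start):
    """y = m*x + b: speed is the slope m, start is the intercept b, t plays the role of x."""
    return speed * t + start


# Where is the sprite on each of the first five frames?
frames = [position(t, speed=3, start=10) for t in range(5)]
print(frames)
```

Each frame moves the sprite by the slope, starting from the intercept, which is exactly what the classroom formula describes.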

Ex: Do you really expect carpenters to teach their skills to apprentices by hammering 10,000 nails –one way, into the same kind of wood, over and over just to get the basics of hammering? No, they get shown how, then they ‘do’ in multiple variations until it is muscle memory. Humans learn from doing –both mistakes and successes– and coding (now that we’ve gone past the two hours of feeding punch cards into a machine) is just another tool that speeds the learning.

STEM? sigh. STEM is not what is being talked about. That is the ‘gifted’ mentality that says to only teach advanced stuff to the top 10% and let the 90% idle. Teach the same stuff to everyone. Require everything up to, but not including the advanced. Let the ones that get it explain to the ones that don’t. Real world examples with results win out over ‘Johnny has 25 watermelons’ crap (and when has that EVER happened?) for pity’s sake.

25 watermelons, not too often I would think. I did have a worksheet on estimating how much energy it takes for Godzilla to swing his tail, and the acceleration and forces on joints, etc.

FORTH is a great (Fourth Generation!) language. It encourages thinking and a free form, can do, attitude that still requires precision and care. It was a standard for Astronomy for a while because it could be made to _work_

It is not for everyone because of its lack of the crutch of standardised syntax, BNF, fancy compilers, IDEs, etc., but as an education tool (rather than a training tool) it allows everyone to go at their own speed.

One must remember that teaching has two (grades of) purposes. At one level it is just training the masses to become adequate members of the workforce, and at other level it is to encourage the future thinkers and leaders. Much of boot camp coding practices is in the carpenter/joiner/bricklayer tradition, where solid practice makes for consistent products, but rarely ‘new’ products. The other level is that push for the new, some of which is ‘suck it and see’, while others are prediction and thought based.

FORTH, more than other ‘languages’ allows breadth of development .

Yes, I didn’t do very much with BASIC, but the Forth was fantastic – and 20 times faster and smaller. After I saw how the assembler was written (Bill Ragsdale), I added to it to access a floating point coprocessor. From there on there was no holding me back. I even bought a board to add enough RAM to go from 4K to 28K! And a PROM burner to keep my extensions to the language.

Everything in computing has seemed stupidly complicated compared to using Forth back then.

Actually those three boys are there because the girl’s there.

True enough. But the other two hoped things would explode!

If you check out Gilbert Strang’s Linear Algebra video lectures (free from MIT) and book, you will see he uses MATLAB in the course to get rid of the tedium of working examples by hand, and the students all learn the MATLAB language. There is no expectation that a proficient computer user or Arduino hacker will know any modern computer languages. Translating the basics of matrix manipulations into C code is not trivial and is a course of its own, with an exhaustive look at precision and round-off errors and how to deal with them.

As I said, IDEALLY a person with the linear algebra pinned down first is better off. But I would not throw out the good in search of the ideal.

This seems a very strong argument against learning code because you don’t need to know anything about Latin to learn or use other languages and it is only useful academically.

Though I would pretty much never dissuade anyone from learning everything, I do think that he may be right about the future of coding. Given the proliferation of coding as a career and the evolution of the field to often treat software as less of an engineering endeavor and more of a service organization, I buy it. How is outsourcing code to a low-cost code center unfamiliar with the product really any different from having a machine do it? I mean, the tendency is toward more and more abstraction. Of course the goal would be to do away with esoteric syntax and move to a plain-language “code”…I mean we’re essentially talking about an advanced compiler. Who of you is learning assembly and then actually using it? Almost no one.

Why teach children math. There are spreadsheets and calculators now.

Grabs smart phone… “OK Google what is a spreadsheet and a calculator” :P

Oh no no, I’m teaching my kids to code, starting with my 10 year old son and it’s not to teach him how to learn or any of that. I’m putting this kid to work with me! I can always use a jr coder to do the grunt work!

Learn Latin? While it may have been taught in the late 19th/early 20th centuries, it wasn’t taught at our rural high school in the early ’70s.

To learn how to learn? Lol seems like you could find better ways of accomplishing this goal besides learning a dead useless language. And Latin doesn’t teach the background of other modern stuff, at all. Even if you justify it as laying the foundation for learning other Romance languages (of which Spanish and French are the only ones still taught in schools), that’s also incorrect, as the Classical Latin they teach has little connection to Romance languages, which developed from Vulgar Latin.

TL;DR, using Latin as an example just strengthens the argument against coding. And contrary to the author’s claim, AIs will definitely be coding nearly all computer programs in the next decade or so. The only thing left for humans will be to program the AIs.

Coding _can_ be taught along with Boolean Logic.

Classical education places logic in the middle school years.

So, teach middle school children a code that also teaches logic.

I took a formal logic class in college as a philosophy elective which was prompted by my statistics professor saying “Think of the logic and it will make sense” while trying to explain Bayes’ Theorem. Formal logic turned out to be fun and surprisingly useful in my math classes and life in general. I wish I’d been exposed to it in my early school years instead of informal logic as taught by my English teachers. I think society in general would benefit from formal logic classes taught during grade school.

If you just teach kids to code, then they only know how the computer works today. How computers work today will not be how computers work in 10 or 20 years (quantum computing). Highly efficient software is actually bad for blockchain.

Well that’s nonsense. How computers work today is built upon how computers worked 20 years ago, and the same will be true of computers in 20 years. Even if we have quantum computers in 20 years they’ll still have non-quantum interfaces.

The class of problems that can be solved by a quantum computer is nearly unsolvable by today’s computers. The computer interface transformed from punch cards to today’s keyboard and mouse. Twenty years ago “AI” was just a toy concept that Lisp was supposed to help make happen. Today, “AI” is used to mine every internet search. Think back 20 years, if you are old enough, and ask whether the “coding skills” of that time work in today’s world.

COBOL

Cobol at least has decimal math. It is surprising how many languages have difficulty talking about money.

That’s probably why it is still a leading language.
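To make the decimal math point concrete, here is a minimal Python sketch. It is only an illustration, not a description of how COBOL works internally, and the amounts are made up. The idea is that binary floating point cannot represent most decimal fractions exactly, while a decimal type can.

```python
from decimal import Decimal

# Binary floating point: 0.10 and 0.20 have no exact binary representation,
# so their sum is not exactly 0.30.
float_total = 0.10 + 0.20
print(float_total == 0.30)   # False

# Decimal arithmetic: amounts built from strings are stored exactly,
# which is the behavior you want when counting money.
decimal_total = Decimal("0.10") + Decimal("0.20")
print(decimal_total == Decimal("0.30"))  # True
```

Most languages can do this with a library, but it has to be chosen deliberately; the default numeric type is usually the float.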

I have a friend who did Cobol when he got out of school, thinking that all businesses at the time used it and all businesses need to pay people. He opened his own business, and his business never used software based on Cobol. I am sure buggy whips are still used somewhere, but that is most likely not the most sought-after career choice.

There very probably will be new things to learn, but learning those new things is made easier by knowing the background upon which it is built. The techniques used in machines with punch card interfaces are still used in today’s machines, and they will still be used in the machines of tomorrow (whether or not those machines are quantum). Coding skills learned 20 years ago are just as relevant today, but again, with a bit more on top that’s made easier by having a firm base upon which to build.

Still working on my toggle switching skills.

Looks like you don’t understand what quantum computing is.

No one does.

AI isn’t much different today than it was when I took my first “computer thinking” course over 20 years ago. The difference is that then it was something of a pipe dream, since the computers of the early/mid 90’s didn’t have the power to train the “AI” in a reasonable amount of time. Now, we’ve reached a level where we can generate sufficient cycles to do useful work, so we can apply and improve upon methods that were known, but at the time, not really useful.

Your comment shares an assumption that has been made by the article author.

You have used the term “quantum computer”. Unfortunately a quantum computer is not a computer, it’s a permutation synthesis and analysis tool.

Language is the issue here. We tend to hold on to old terms even when they’re no longer accurate.

In the future most of the textual aspects we call code will be gone. Instead someone may design feedback networks for neural networks.

But if you ask that person what they do then they will probably say that they are a … Coder.

That’s just ridiculous. Coding will never be gone, any more than calculators have disappeared. While popular languages will evolve, the basic logic concepts present in ANY language will never disappear. How that logic is applied may change but old languages will never cease to be used. In my work I STILL program in Basic from time to time. I STILL use RUBY. Progress is backwards compatible.

That is not true: any (gate-based, universal) quantum computer is also a fully universal classical computer.

D-Wave type “quantum computers” are really quantum annealers, not what “quantum computer” generally means; they can’t run Shor’s algorithm (the one that factors very large numbers, and thus defeats most generally used encryption). These are what I think you are referring to.

That being said, quantum computation is really hard, and will be very expensive for a very long time, so even when (if? ) we reach the age of quantum computers, most classical logic will be offloaded to classical computers for cost purposes.

I am very certain about the way computers work in 20 years: exactly the same way they worked for the last 20 years. We will have programming languages, we will have compilers and we will have all the same problems that software engineering had for the last 20 years. We built abstraction layers on top of abstraction layers on top of abstraction layers to solve the same problems over and over again. And this will never change because every generation of software engineers will re-invent the same wheel again but with the newest “technology” of their time. Which will be in fact just another programming language or worse just another fancy framework.

Indeed, as long as 1 and 1 continues to make 100 we’ll be fine.

Shouldn’t that be 1 and 1 makes 10? ;-)

Typical off-by-10 error!

Erm… 1 and 1 makes 1 ? :-o

There you go assuming you understand the context. This is why the only grammar I claim to understand or be any good at writing is a context-free one.
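For anyone who missed the joke, a tiny Python sketch of the competing readings of “1 and 1”. Nothing here comes from the commenters; it is just one way to show that the answer depends on which context you assume.

```python
# Three readings of "1 and 1"; which one is "right" depends on context.
binary_sum = bin(1 + 1)     # arithmetic sum, written in base 2
logical_and = 1 & 1         # bitwise/Boolean AND
concatenated = "1" + "1"    # string concatenation

print(binary_sum)    # 0b10
print(logical_and)   # 1
print(concatenated)  # 11
```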

They might work the same but likely won’t need the same skills in programming. A few decades ago there was huge stress on compression techniques, and before that searching and sorting methods were very hot. The technology changed and storage became cheap and easily accessed, processor speed became so high that less than ideal searching and sorting methods work OK. Nobody cares now if your code branching scheme causes a pipeline reload or even flushes cache in most cases. Deep learning and AI methods from the present will be as archaic as a backwards chaining inference engine from the 1980’s is now.

I can make a case either way, but if the next 20 years are anything like the past 20, you will have Jarvis+. Just tell it what you want and it will generate just-in-time code on the fly. Or the code for a couple hundred embedded processors as needed. Or maybe the AI will never communicate except to give you a shock to keep the treadmill going.

That turns out not to be the case. I’ve been programming for over 40 years and not much has actually changed in specific or general concepts. Sure, we have guis now, but we had terminal screens then – and the same basic principles of getting input – doing something with it – and getting data back to the user still apply.

Even the languages haven’t changed that much, with the tools for object oriented programming improving but not really fundamentally changing in decades. And even before them, when using (say) fortran 66 (or the much better fortran 77) many of the principles that we later used with c++ were still just as valid – ie encapsulation – but just done a different way.

So far from thinking that it will be all different in 20 years, I think it will be vastly the same – as we have not been moving particularly quickly with changing how we do programming. The only significant feature likely to change is that more people will be writing multi core/multi threaded programs with a lot more cores and threads. Yes, that does change how you do things, but then again many of us have been writing multi CPU programs since the 70’s, and mainstream PCs running windows have been multi core for coming up 25 years – I remember the changes I had to do to my code on my first dual cpu pentium box.

As for python, yes another scripting language may be popular in another few years. But that’s fairly irrelevant as it’s just syntax – the fundamentals don’t really change… And neither do the skills…..

The sorting and searching hellscape was merely replaced by SQL.

I hope they do write an AI that writes their SQL, the early versions will be such a disaster it will cause a database consultant shortage and rates will be through the roof in a bunch of areas.

I see myself writing SQL in emacs in 20 years. Maybe via an ORM, but I predict you will still have to know what happens under the hood when you tell the tool to do something, so it won’t have any non-deterministic behaviors.

people NOT knowing what is happening under the hood now is responsible for many bugs, much bloatware, and programs that run slower on modern hardware (that is 100 times faster) than what the earlier version of the same program did 15 years ago..

“Give a person a computer, they will be frustrated for a day. Teach a person to program a computer, and they will be frustrated for the rest of their lives.”

:P

We need a “Like” button.

Also, a pro tip: the nearly black button to the bottom left does not say “Reply comment” and there’s no way to cancel it.

You mean the one I just clicked?

B^)

You don’t need like buttons, you want them. One should know the difference. Anyway there are like buttons at this alternate address that nobody cares about, so probably nobody will ever see any thumb counters: https://wordpress.com/read/feeds/53736

(as an aside, I think this goes a long way toward explaining the analytics and tracking crap being here, maybe it would be totally different if someone installed WordPress on their own server instead of just paying for a foo.com to point to their WP-hosted WP blog. _Maybe._)

Don’t worry, everyone clicks that.

I wonder if authors report themselves?

I’ve done it!

Well above the comment field, and to the right there is a cancel reply option.

…nor does it say “edit comment”, which would be REALLY helpful.

Many of us agree that an edit feature would be great. That there is not one after all this time diminishes Hackaday’s hacker credibility, as it appears they quit after the first attempt didn’t go as they wished. Perhaps there truly isn’t a solution that satisfies everything the powers that be desire, but I don’t recall any communication that that is the case. Anyway, it’s silly to get too critical over free-to-use content.

Enlighten them further and they will go into management and frustrate a whole team of programmers.

We teach kids to code because then they have a tool to make cool stuff. For example, they can make robots and program them to do things. They can take CNN software and make the robot record what they tell it to do and then do it. It will help them understand what is going on under the hood of so many things they use today, so instead of feeling like they are along for the ride, they have some understanding of the “magic” going on in their hands, in their cars, etc.

We teach coding because code is all around them and today, with that skill, they can create things.

See, I learnt to use logic to deduce things, and it lets me do this: while not being able to code, I can decipher the coding decisions used to come to a point in a process.

But I also can deduce how a car engine works, how a fire alarm works, how a pump works and many other things.

Teaching kids to code is great and all, if they’re going to be coding all their lives. Teach them the ability to process problems using logic, deduction, you know, all the things we used to teach in science before the dinosaurs became a forbidden subject….

You didn’t learn logic in isolation, you learned it while doing other things. Learning to code can be one of those things.

djsmiley2k in his new job as philosopher.

How do you even get a job as a philosopher?

But when you are taught to take a wheel and tire off and explore _a_ braking system then, if you’ve been taught to deduce, process and generalize, you’ll have no problem understanding how any braking system works which uses friction to stop rotational objects. And even other means of braking. You don’t have to spend all your schooling on learning braking systems, just a good exposure to it once is often all that’s needed.

The idea is not everyone is going to be a professional coder but teaching the skill once throughout primary schooling would be fine if done well. But it’s also a good idea to have the maker spaces and clubs so students can expand those skills if that’s their interest.

Aye, and coding can teach effective computer use, not just continual mouse usage. I’m trying to up my game that way myself

“They can take CNN software and make the robot record what they tell it to do and then do it.”

But, only if it is a liberal robot that trusts what CNN is telling it.

B^)

He is confusing CODING with THINKING and the Design Process. Almost nobody today even thinks about the billions of lines of code inside their Phones and Laptops and Airplanes. Because they are INVISIBLE.

I teach kids to recognize: UH-OH! ELECTRICITY is INVISIBLE and SOFTWARE is INVISIBLE !

Kids NEED to experience the ways we can make those invisible things visible and understand them. They don’t need to end up in a profession that makes Electricity and Software visible every day (But those are the jobs of the future).

Three of my Sons and two of my Grandsons are Engineers, and my daughter is a cell biology professor at Yale. They all work on things that are INVISIBLE until you use tools and techniques that make them visible.

We need to start young kids on the path where they at least begin to understand that the invisible world is a very interesting and important part, and that learning about it can be fun, and involving and maybe even a life’s work.

I have been working for a while on helping Elementary School kids get hands-on experience with these invisible things, like this:

https://arduinoinfo.mywikis.net/wiki/EasyConnectKit

Regards, Terry King

…In The Woods in Vermont, USA

terry@yourduino.com

Why waste time teaching your kids basic math when they have access to a calculator? Why teach them history when they have access to google?

When I go to purchase a drink and gas at the local gas station and I tell them the amount of gas I want which is based on the cost of the drink + tax so the total purchase is an even amount like $20 the employee behind the counter thinks I’ve performed some kind of magic trick b/c I did that without a calculator. We teach kids basics they may not ever be required to use so they have a more well rounded grasp of reality and knowledge.
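The gas station trick is just one subtraction once the tax is folded in. A minimal Python sketch, with made-up numbers (a $1.89 drink and a 7% sales tax are assumptions, as is the idea that tax applies only to the drink because the pump price already includes it):

```python
from decimal import Decimal, ROUND_HALF_UP

def gas_amount(target_total, drink_price, tax_rate):
    """Return how much gas to buy so drink + tax + gas equals target_total."""
    cent = Decimal("0.01")
    # Price of the drink with tax, rounded to the nearest cent.
    drink_with_tax = (drink_price * (1 + tax_rate)).quantize(
        cent, rounding=ROUND_HALF_UP
    )
    return target_total - drink_with_tax

# Hypothetical numbers: $1.89 drink, 7% tax, $20.00 even total.
gas = gas_amount(Decimal("20.00"), Decimal("1.89"), Decimal("0.07"))
print(gas)  # 17.98
```

The register may round tax differently than this sketch does, so treat the rounding rule as an assumption too.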

This recommendation to not teach kids to code and to focus instead on very narrow/specific knowledge sounds more to me like an attempt to mold more narrowly focused employees so they ask fewer questions because they are less informed about anything/everything outside what they’ve been taught. Kids used to be educated, taught how to see and understand the world and ask questions instead of accepting at face value without question whatever leadership says.

“Why waste time teaching your kids basic math when they have access to a calculator? Why teach them history when they have access to google?”

That could be said of anything. Why do [this] when [that] is available? The question is where the lines are, and when it becomes a liability rather than an asset.

I think he was being sarcastic!

Maybe as part of something greater. Teach problem solving, logic, algorithms. There is a bag of tricks. Divide and Conquer is great starting point for a lot of problems. The Wolf Fence works for car problems. Teach them how to read and follow instructions, and how to write instructions other people can follow. A good grounding in Liberal Arts is important. Literature, poetry, music, art, philosophy, ethics, comparative religion, all make for better thinkers, better technologists. Above all, teach Defense Against the Dark Arts: informal logic, modern rhetoric, the limitations of our minds, how we can be hacked.

http://debuggingrules.com/

That is one of the best books I love to give to new programmers. They begin to see what programming is.
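For those who haven’t met the Wolf Fence mentioned above: you build a fence across the territory, listen for which side the wolf howls on, and repeat on that half. In code it is a bisection search. Here is a minimal Python sketch; the function name `first_bad` and the build-number example are hypothetical, and it assumes everything before the fault is good and everything from the fault onward is bad.

```python
def first_bad(items, is_bad):
    """Wolf-fence (bisection) search: return the index of the first bad item.

    Assumes items are ordered good..good, bad..bad and at least one is bad.
    """
    lo, hi = 0, len(items) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if is_bad(items[mid]):
            hi = mid        # the wolf is at or before the fence
        else:
            lo = mid + 1    # the wolf is past the fence
    return lo

# Hypothetical example: builds 0..9, where build 6 introduced the bug.
builds = list(range(10))
print(first_bad(builds, lambda b: b >= 6))  # 6
```

Ten builds take at most four checks instead of ten, and the same idea works on a wiring harness with a test light.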

I think Andreas Schleicher spent years learning C and now needs python or rails or something equally not C, or he learned how to measure rafters for complex rooves and now there’s an app for that, in other words, don’t bother to learn anything current because it’ll be out of date by the time you get a job doing it.

Obviously he’s forgotten the importance of learning how to learn and know what to apply to the problem.

Just teach them Logo, that’ll do the trick :)

LOGO does have one pretty clear advantage for kids: It’s easy for them to get it to do something cool, even if not particularly useful. For an introduction to how computers work, a bit of instant gratification can go a long way.

Hit them with Smalltalk instead.

https://amturing.acm.org/award_winners/kay_3972189.cfm

If YOU don’t teach your kids about code, then they will just learn about code on the street…

… this is your brain … this is your brain on code …

Watch out for ghetto code.

“I learned it by watching you!”

Yeah, and they might grow up to use LabView…and THEN where would we be?

An idiot says kids shouldn’t learn, more at 11.

Teaching kids programming throws so many different problems into one concrete project at once.

It is very broad-spectrum in the skills it teaches.

The language doesn’t matter, it has never mattered, the SKILLS are what matter.

The concepts of variables, branching statements, looped code segments, functions, objects, and so on.

Also how to follow sequences of events in order to make sure you actually cover your project’s needs properly, doing dry-runs of your code from start to every possible finish and dealing with errors when they happen at each major stage of its operation.

All of that is incredibly useful.

NO matter what these morons think, as you said, computers aren’t going to magically be able to understand everything in 20 years. It ain’t happening. Period. We’ll be lucky if it happens in 40 years in fact!

Computers are just barely beginning to understand basic concepts, and even that is horribly manual in terms of coding. It’s too biased. Doing it automatically is hilariously hard.

These nutjob futurists are the worst. They have no knowledge of how HARD it is to make AI do useful things.

These are the same kinds of idiots pushing self-driving cars… right in to a person crossing the street and killing them.

It’s nowhere near ready for the roads! Nor are supposed “smart coding” systems. When a piece of tape can totally change how an AI sees a sign, you know it is bad!

No sensible person would ever trust a self-coding system to produce proper, decent code. NOBODY.

Self-coding systems produce horrific messes of code that even most experts cannot understand. You seen the messes autoencoders spit out?

That whole mess created an entire job role just to understand the nonsense they spit out.

As a person that does this nonsense myself, I can assure him that in 20 years time, it will barely be any different to now.

We’ll have new languages and maybe at best a different processor substrate slowly making its way in to computers, be it graphene or similar, but nothing else revolutionary will happen in that time. Especially not useful quantum computers. Quantum computers won’t leave specialized industries for over half a century at minimum!

The only thing that will happen in the next 20 years is more crap being forced down our throats, more cloud-crap, more spyware crap and IoT-everything. How awful.

I’m cancelling my subscription to Life. It sucks now.

“Given the unbearable proliferation of Web standards, and the comically ill-expressed semantics of those standards, browser vendors should just give up and tell society to stop asking for such ridiculous things. However, this opinion is unpopular, because nobody will watch your TED talk if your sense of optimism is grounded in reality.” –James Mickens, To Wash It All Away

It comes down to pedagogy. I assumed the topic was teaching programming in public schools. At middle school and high school levels, success depends a lot on the kids and the administration’s support of teachers. Maybe most of you are not aware, but in the US, a large number of students qualify for Individual Education Plans or IEPs. Counselors lay out the “accommodations” required. Teachers must account for the accommodations for EVERY IEP in their lesson plans. This can mean teaching everything several times by making the lesson equally strong for visual learners, attention deficits, and a long list of specifics. I have had math classes with 30% IEP’s! Also, modern education theory (which sucks) says “learners” (that is what students are called now) must learn from group cooperative activities. In other words, they are supposed to discover programming with each other. There are no lectures, and books are pretty much all about diversity training – or making everyone the same by studying everyone’s cultural differences. Textbooks are just plain ludicrous.

I can see it working in elective classes for motivated curious students. For everyone? Very difficult given the amount of personal attention required. (And never an AP course. They are far too structured to get good scores on the exams. And the exams come too far before end of school, leaving zero motivation to continue afterwards.)

Pet peeve:

Coding is generally the process of taking a design or plan and translating it into a programming language. Knowing which end is up during the design process is a separate skill that is both more generic and quite durable.

Programming is the union of these skills. You often see entry level developers doing almost exclusively coding and architects doing almost exclusively high level design, and there’s a spectrum across the middle of the experience gradient.

Like the dull students and dull teachers there is little to no value in teaching kids to code in a vacuum. Teaching them the critical thinking and abstraction skills to understand how a computer functions and how to map real world problems to these operations usefully (i.e. programming as a feedback cycle including both design and coding) is much more likely to catch and hold their interest as you must to get any of it to stick.

I believe everyone should have the opportunity to learn to program so that the world doesn’t stratify into those who can program and those who merely use others’ programs and are at the mercy of those who can program.

Actually many kids are first learning SCRATCH (based on LOGO) or similar Drag-Drop languages.

See: https://arduinoinfo.mywikis.net/wiki/EasyConnectKit#LEARNING_TO_CODE_AND_MAKE_THINGS_WORK

That drag-drop language “MIXLY” is similar to SCRATCH and creators of new functional hardware blocks can put them in a Library. So the easy-to-connect blocks I teach are in the palette of things to drag out from the left.

AND, just click on the right and the ‘just-compiled’ Arduino CODE appears. To see that, scroll down on this section:

https://arduinoinfo.mywikis.net/wiki/EasyConnectKit#LEARNING_TO_CODE_AND_MAKE_THINGS_WORK

So the path is:

– Hands-On Arduino sensors and actuators , LCD display and IR remote. Kids plug them (easy cables) into an UNO compatible with easy connectors. The controller board comes PreProgrammed with 9 experiments that can be selected with the handheld remote. No laptop/etc. needed. 9V power or USB is enough. No wire running around a classroom.

– Then Drag-drop programming similar to Logo/SCRATCH. The Arduino code can be popped up.

– Arduino IDE programming with working examples of each of the sensors and actuators.

I feel there HAS to be something past Hour Of Code introductions…

Having started learning to code a bit later in life, I can report that you never stop learning to code. The BASIC I learned on my Commodore 64 was absolutely useless once I entered college at the age of 24. The languages I learned in college, FORTRAN, Pascal, 8088 assembly, C and C++, were mostly irrelevant once I entered industry. Most of the languages I learned or used in industry over the years, RPG, C#, Python, I don’t use on a daily basis. Today I code in Java, use Spring, Camel, JBoss, … I don’t know what I will be doing in 10 years, but I am ready.

The important thing was that when I was 20 I purchased a C64 computer, and that forced me to learn to code (I was a Hacker). Learning how to learn to code is way more important than the language you are learning. It led me to a career in software engineering, and I have never looked back.

A lot of people mix up coding, programming, software design and software architecture. Depending on how you choose to define them, they will probably start to disappear as jobs in that order. If coding is taken as meaning “translating a solution into a form that a computer can execute”, it has been on its way out for years with high level languages, pre-defined libraries and IDEs etc. Programming, when defined as “making a solution match the capabilities of the hardware” (e.g. efficient, fast, safe, secure, fault tolerant etc.), will probably be impacted soon as AI marches on. Software design and architecture, on the other hand, will probably only be “automated” when the machines take over! So, planning on coding as a career is probably not a good idea but, as stated earlier by others, learning coding is a good way to understand logic, which will always be important. What is interesting is that understanding advanced mathematics, such as statistics and calculus, seems to be becoming more valuable than ever. AI and quantum computing rely on them. Maybe coding should be taught in kindergarten to develop logic skills, and “real” math, beyond simple number manipulation, should be started as early as elementary school.

On the other hand, I have encouraged my son to concentrate on people skills and liberal arts, rather than technology, as they will probably be the most valuable skills in his lifetime. There will probably be a glut of technologists in 20 years as large countries, such as China and India, focus on technical skills and as AI eventually reduces the demand.

Computational thinking is understanding how someone else’s code does what it does. I work in the automotive industry on engine control software, and I don’t write more than about 5 lines of C per year — everything is auto-generated code from control system design software. This is the trend for several industries, but it still pays to know how to tweak things once in a while, or just where to put the glue layers, header files, etc. to get the system to work.

That said, a mechanic needs to learn the strictures of code to understand the limitations of what someone can get an engine computer or brake controller to do. This is the moral equivalent of teaching someone simple math so that they can detect when something is wrong because the number seems way off. This sort of debugging thinking is going to become more necessary in the future, not less, but it doesn’t require a particular coding standard. You could build that class around reverse engineering the behavior of electro-mechanical systems of today without discussing anything more complicated than boolean logic, though I think it would make more sense to cover the rudiments of algorithms first.

For several years, I helped teach a summer program that started with Discrete Math review and C++ and Python, then diverged into separate ‘data science’ and embedded systems tracks. But this was a non-public school. The students had a burning desire to learn (we had to force them to leave at the end of the day), and the school provided a secure learning environment.

Last summer, a math teacher at the local (public) high school asked me and another engineer to help with a summer program of “whatever – something with computer science and electronics”. Many public school systems are a dysfunctional circus composed of well-meaning staff, demanding yet disconnected parents, and demanding yet disconnected students; and this school was not atypical of that description.

It is important that kids understand how stuff works, and learning ‘coding’ can be a path to that end. But how can we expect American schools to teach this stuff in a divisive, socially fragmented environment? Very few kids take physics and chemistry, and fewer take any math after basic Algebra. If we can master and implement a way to teach this fundamental stuff, and not teach a test, and not teach social stuff that should be the parental domain, then perhaps we can learn how to teach broader logic and ‘coding’.

My experience too, except in the classrooms all day. In public schools the environment changes drastically from class to class depending on disruptive students and the administration’s refusal to deal with them.

Also the nature of the Principal is all-important. A Principal who wants to be liked by students = a terrible school with big discipline and classroom control problems. A Principal who wants to be respected and listens to the faculty = an outstanding and high performing school. I have seen a high school transform from a complete mess to a nationally recognized high quality school in one year.

All machines will be running Scratch variants in the future. Download now and force children to sit in front of machine until they develop something meaningful

I’ve heard it works with monkeys. There seems to be disagreement over the required specs, though.

Runs on Raspberry Pi and pretty much everything else modern that could be deemed a personal computer, plus a wide range of ‘smart devices’. Though if one were to necessitate an older class machine like a Commodore 64 or some kind of Fruit then I don’t see a great difficulty in modifying one of the Pilot or LOGO languages to run similarly to Scratch. Where there seems to be a need to re-create archaic tech amongst this particular forum I fully expect it’s FORTHcoming. C64 being a likely candidate due to its graphic and sound capabilities. In natural progression I would see the Amiga series as a better choice, but perhaps excessively modern and not nearly as completely reproduced as its predecessor, the C64. Furthermore, if memory serves, there was a complete PILOT implemented in CBM (Commodore BASIC, which is another M$ product) in COMPUTE! Magazine.

It should be noted that children/young adults are considered to be more intelligent than most primates. Most and most of the time but not exclusively. It should be easier to instruct children than monkeys. Expert Educators however…

That was supposed to have a hah in it. Maybe.

Well, if somebody at the OECD said it, I think we should all pay attention. Those guys are the best. No question about it.

He’s missing the point. (Which isn’t a total shock since he’s never been a teacher.) While students are focused on the language or the robot or the project (“the dessert”) – the educators are focused on the meta learning (“the meal”).

The point of coding in the classroom is not to teach proficiency in any one language, it’s to teach the Computational Thinking (abstracting, deconstructing a process, forming a repeatable series of steps, finding a pattern, logic) which this guy agrees is important. Nobody teaches coding because they think any one language will be around forever (if they do, they’re too new to the game).

Languages come and go, Computational Thinking practices persist.

Alan Perlis said, “When someone says ‘I want a programming language in which I need only say what I wish done,’ give him a lollipop.”

For those slow on the uptake, including Andreas Schleicher apparently, ‘saying what I wish done’ is a lot harder than it sounds.

Let me guess, this guy also thinks that calculators obsoleted teaching basic arithmetic?

“I’m not sure what computational thinking is, but I would expect it is how computers work and how to architect computer-based solutions.”

Yikes. There’s this new thing, called a “search engine”? https://en.wikipedia.org/wiki/Computational_thinking

Also, here’s a protip: If you start a sentence with, “I don’t know what $foo is but” you may not be adding as much to the conversation as you think you are.

Perhaps you might search “irony”. He clearly knows what it means but was trying not to come off as a know-it-all. Strange as that idea might seem to you. Second search: “common courtesy”.

I read that Wikipedia page, and it’s a bunch of woo. “Teaching people to think the way computers do.”

If they were serious, they’d start with assembly. They are instead interested in teaching people to think the way people have decided that they would think about programming, and then design (four, five, six?) levels of abstraction over the way the computer does.

Still: I’m all for the things they teach. Notice repetition and encapsulate it. Make things useable by others. Combining many blocks together makes a bigger whole — figuring out which blocks to build is important.

But these things aren’t about the way computers work. They’re about the way people work with computers.

“But these things aren’t about the way computers work. They’re about the way people work with computers.”

Reading through the comments, that’s more insightful than people think. How much of the advice given in these comments is dependent upon the deficiencies of current computers and learning how to overcome them, and what happens when all that changes? The basics of solving problems should be taught. ANY problem, not just those related to computers. Starting with being a good listener…

The true gain from learning to code is understanding how to think in the form of a process. In its most basic form, learning to code is a study in logic.

Sadly, this is the largest failure of the education system: it’s not about what knowledge we teach children, but about giving them a framework of rigid logic, process and analysis skills that can be applied to a variety of tasks.

Programming and electronics force you to think this way, which is why so many good problem solvers emerge from the field into entrepreneurship.

I still recall giving a bunch of high schoolers a bit of insight into thermal expansion and the way that cyclic localized heating can cause localized failures, such as on a certain car engine that routed the exhaust manifold close to one corner of the engine head, causing the head gasket to fail and leak; this was ultimately solved by adding a thermal barrier. As I mentioned that leak, one girl in the class jerked like I’d just applied a cattle prod. She said that was why she’d had to pay so much to get her engine fixed.

It was that connection that was the most valuable thing. For her it was with hindsight. By working on the bits of how computers work, and on how what they do reflects what someone told them to do (or guided them to do, in the case of AI), maybe there will also be foresight, or at least recognition of the connection between a person’s current relation to a machine and how that machine got to be the way it is.

Coding is a low level skill. Programming, which is much more about problem solving, critical thinking, and how things work is what makes “coding” worthwhile.

Programming is writing source code, thus coding.

I think if there’s one thing the comments illustrate, it is that we don’t have hard and fast definitions for all of these terms. Even if we think we do, they tend to be local. The Devil’s DP Dictionary has the joke about conjugating data processing verbs (DP being what we would call IT today). The one I remember is “they hack, he/she codes, I create.” So assuming he means the same thing by computational thinking that someone else means is as dangerous as assuming we all agree on one common definition of what coding means.

Teach them not to call it “coding”.

I remember 2nd through 5th grade had classes in LOGO. I discovered the wonders of subroutines when I was 8 as a result.

When did computer programming become “coding”? It seems to me “coding” is usually used to refer to designing web pages, as if that’s the only thing that matters.

Coding is not for every kid and shouldn’t be mandatory. My son saw his friend do coding for a school project and has seen me code for Arduino, yet he doesn’t care for it. He is doing very well in traditional subjects. I’ve taught coding to kids after school and the kids who show up are excited about it and make some great programs. I continue to code for data analysis at my job and I see Data Science being a big field that benefits you and your job if you know coding because manipulating data with Excel spreadsheets is time-consuming and prone to errors. I know Visual Basic to help me, but it’s a lonely world, so I’m playing with R and Python.
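To make the spreadsheet point concrete, here is a minimal Python sketch of totalling a column in code instead of by hand. The data, the `amount` column name, and the `column_total` helper are all hypothetical, made up for illustration; a real export would be read from a file rather than an inline string.

```python
import csv
import io

# Hypothetical data standing in for a spreadsheet exported as CSV.
SAMPLE = """item,amount
pump,1200.50
valve,310.25
gasket,18.00
"""


def column_total(csv_text: str, column: str) -> float:
    """Sum the numeric values found in one named column of CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(float(row[column]) for row in reader)


total = column_total(SAMPLE, "amount")
print(f"total: {total:.2f}")
```

The same function works unchanged whether the sheet has three rows or thirty thousand, which is roughly the argument for scripting over manual summing.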

I agree that coding is certainly not for everybody. I would say that all students should at least learn a little about what it is, and then be offered the chance to learn more if they are so interested.

Programming (in some form) will be the last job before our robot overlords take over.

Once I saw an offshore engineer entering some data into a carefully prepared spreadsheet and then summing it up on a calculator to finally put the result into the “total:” cell. We don’t teach kids coding to launch their IT careers. We do it because a computer is what they will find either at work or at home (and most probably they already have one in their pocket).

PC mode off.. The tech to automate the labor industries of the world has been around for a long time.. Learn something or have a great personality and be attractive and do law or sales.. PC mode on

On the tidbit that ‘in 10 years, Python might not be the hot language’: as a counter-example I offer you the fact that some embedded C programmers still are scared to use designated initializers, because it has ‘only been added very recently’. The updated standard where this was added is C99. The 99 is an indicator for the year it was introduced. Go figure.

Having coded in that space, they are not wrong. Some of the compilers that you end up using in that space are older than the C99 standard or have a very loose definition of conformance.

The biggest problem is HOW children are taught; the classroom setting may be fine for fully-grown adults, but it is utterly harmful to a developing child. As well, children aren’t actually taught; they are only stuffed full of knowledge that they will mostly forget in six months. (Seriously. It has been proven over and over that, if you give a student an exam on what they were taught six months before without giving them a chance to refresh their knowledge, they will fail miserably every single time.) What is being taught has also degraded over time. For example, colonial children were once taught formal logic, which is now only taught in universities. Check out this article on how and what people used to be taught, and shake your head in despair.

Most colonial children did not get any schooling. We were largely an agrarian society. Even the wealthy educated their children by paying for tutors. Public schools were essentially non-existent. So, making the general statement that colonial children were taught formal logic is simply nonsense. Perhaps some were, but by and large, they were not. And, if you were a girl, maybe you learned to read (if lucky), but you were probably not taught how to write.

The biggest problem may be that they are not taught at all today. No lecturing! All group cooperative activities with worksheets and projects intended to lead them to discover math/science/??? Lots of web searching with copy-paste group reports, etc.

That is the methodology of today in the US. Proven many times to be completely stupid. But there you have it. Straight from the University Ed department professors who do curriculum development and teach pedagogy, like Prof. Bill Ayers. I wonder if he and his friends have your kids’ best interests in mind?

Anyone here actually regret having learned to code?

Not only not that, but not even any of the individual programming languages! Even the least useful among them have taught me something about the bigger picture.

Just Java.

B^)

I very much regret the time spent in my first exposure to anything but HP calculators. FORTRAN IV on a batch system and the truly painful typing of lines of code on a keypunch machine with no previous typing – no backspace when you are punching holes in cards. Any card with too few or too many spaces in the first 6 places is an error. FORTRAN was usually full of math so lots of shifted characters. It took us non-typers hours to get a 20 card deck typed without errors. Hated it. Never used FORTRAN again until a job at Boeing, and by then it was FORTRAN 77 and unreadable manuals from DEC.

(The first 5 columns hold the statement number if needed, the target for a goto or loop. The 6th column is the continuation character if the previous line was too long. Columns 7 through 72 are your code.)
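For anyone who never met a punched card, the column layout can be sketched in a few lines of Python. This is only an illustration, assuming the standard fixed-form rules (label in columns 1 to 5, continuation mark in column 6, statement in columns 7 to 72, anything past column 72 ignored); the `Card` type and `parse_card` function are hypothetical names, and comment cards are not handled.

```python
from typing import NamedTuple


class Card(NamedTuple):
    label: str          # columns 1-5: optional statement number
    continuation: bool  # column 6: anything but blank or '0' continues the previous line
    statement: str      # columns 7-72: the statement text


def parse_card(line: str) -> Card:
    """Split one fixed-form source line into its column fields."""
    # Pad short lines to a full 80-column card so slicing is always safe.
    padded = line.rstrip("\n").ljust(80)[:80]
    label = padded[0:5].strip()
    continuation = padded[5] not in (" ", "0")
    statement = padded[6:72].rstrip()  # columns 73-80 are dropped
    return Card(label, continuation, statement)


card = parse_card("   10 X = X + 1.0")
print(card)
```

One stray character in the first six columns changes which field it lands in, which is why a single mistyped space could ruin a card.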

Ahhh…you were never introduced to the wonders of the “Control Card” on the 029, then. Programmed to skip the first 6 places on command. Much less frustrating.

This gaggle of techno-roosters is why there will still be coding to do for more generations. I was told 30+ years ago that: 1. ‘We’re going to be using the ‘Basic’ language for this class; it’s a dead language, only for instruction, you’ll never use this in the future for a job.’ – I was contacted about a VB.net job yesterday. 2. ‘Pay attention in this ‘Into To Computer Operations’ class. In about 7 years, we won’t need programmers anymore; all programs will have been written and computers will program themselves’. – That was 35 years ago, in the College of Mathematics at Penn State.

*Into should be Intro – stupid computers.

Personally I agree with Andreas Schleicher. Stephen Wolfram has been arguing the same thing for a while now and I believe makes a compelling argument in this blog post:

https://blog.stephenwolfram.com/2016/09/how-to-teach-computational-thinking/

I think the reality is that, apart from some toy coding in middle school as an introduction, real coding fits in the same area of education as any other specialist subject area. It is important to distinguish this from computational thinking, which I think should be a core part of the curriculum. The danger is that education initiatives pushed by people from the computing field risk skewing the material strongly towards the applied areas, which will generate a curriculum that ages poorly and also leaves a significant portion of students with little of value from the effort (consider how many adults supposedly learnt trigonometry at school and now have no idea how any of it works).

If we are going to restructure our education system to make space for technology and computing, we need to make sure the most powerful and timeless elements are at the core of it, and computational thinking, not coding, is what we must be focusing on. It’s also important to realise that curriculum moves slowly: new ideas today can take 5 to 10 years to be reliably delivered by teachers who are trained and confident in the material at all schools (not just the well resourced ones).

“consider how many adults supposedly learnt trigonometry at school and now have no idea how any of it works”

Because in the real world the majority of people don’t need it to make money, and making money, like it or not, is what puts a roof over one’s head and food in one’s belly. In other words, “the world needs ditch diggers too”, and this doesn’t just mean actual ditch diggers. Every field has ditch diggers, and there is nothing wrong with having ditch diggers, because they get the task at hand done at a lower cost.

We abstract and black box things so others can progress on top of it. If we keep having to teach all of those in detail we’d simply run out of time to start making money because there is too much to teach one person. When a person wants to deep dive into the black box then they can, but we give a very light overview of those black boxes and if a person doesn’t fully understand them and isn’t interested in understanding them it doesn’t mean they can’t still be a programmer who can fill needs and make money.

And this “computational thinking” depends on programming skills. I.e., exactly what they call “coding” for whatever reason.

I would argue that you have it backwards, that coding (beyond rote copy/paste garbage) requires computational thinking. Being able to formulate the problem that you’re trying to solve in a computational way is 90% of programming; the actual syntax is largely irrelevant.

Lots of people, especially the self-taught, start with the coding and successfully extrapolate the computational thinking (although there are also plenty of cases where people don’t make that leap). When I taught computer science at university, a lot of effort was put into choosing programming languages based on what “computational thinking” concepts could be easily conveyed through that language. Haskell was the language they chose for first year computer science exactly because it was an excellent tool for computational thinking, but as a real world language its application is limited.

This depends on how you define “coding”. In my book, it *is* computational thinking: an ability to go all the way from the problem definition to a sequence of steps (or whatever else is formally defined) that is equivalent to this problem definition and can be used as a solution. You cannot have one without the other.

Of course, if by “coding” they mean shitting out some javascript or whatever, that’s a totally different story.