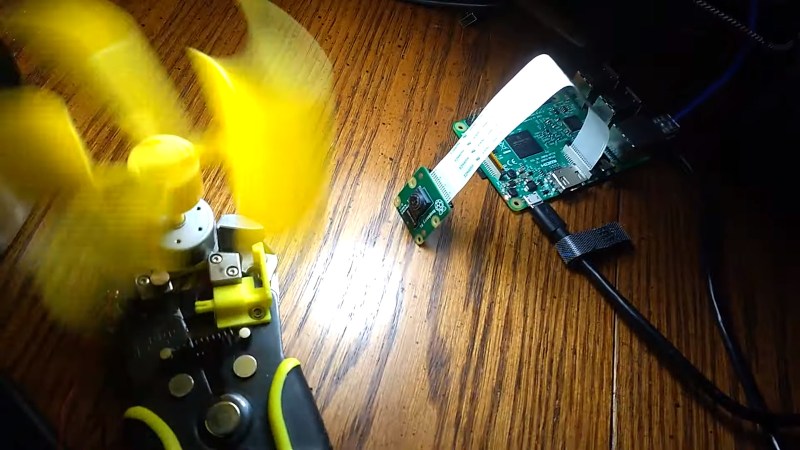

Filming in slow motion has long been a standard feature on the higher end of the smartphone spectrum, and it can turn the most trivial physical activity into a majestic action shot to share on social media. It also unveils some little wonders of nature that are otherwise hidden to our eyes: the formation of a lightning flash during a thunderstorm, a hummingbird flapping its wings, or an avocado reaching that perfect moment of ripeness. Altogether, it’s a fun way of recording videos, and as [Robert Elder] shows, something you can do with a few dollars’ worth of Raspberry Pi equipment at a whopping rate of 660 FPS, if you can live with some limitations.

Played back at the classic 24 FPS, this turns a one-second video into a nearly half-minute-long slo-mo-fest. To achieve such a frame rate in the first place, [Robert] uses [Hermann-SW]’s modified version of raspiraw to get raw image data straight from the camera sensor to the Pi’s memory, leaving all the heavy lifting of processing it into an actual video until after all the frames are retrieved. RAM size is of course one limiting factor for recording length, but memory bandwidth is the bigger problem, restricting the resolution to 64×640 pixels on the cheaper $6 camera model he uses. Yes, sixty-four pixels high, but hey, look at that super wide-screen aspect ratio!
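The arithmetic behind the “half-minute” figure and the RAM limit on recording length fits in a few lines. This is a minimal sketch. It assumes 8 bits per pixel and a hypothetical 256 MB of RAM free for frames. Neither figure comes from the video.

```python
def playback_seconds(capture_fps: float, capture_s: float, playback_fps: float = 24) -> float:
    """How long a clip captured at capture_fps lasts when played back at playback_fps."""
    return capture_fps * capture_s / playback_fps


def max_frames(free_ram_bytes: int, width: int, height: int, bits_per_pixel: int = 8) -> int:
    """How many uncompressed frames fit into the given amount of RAM."""
    frame_bytes = width * height * bits_per_pixel // 8
    return free_ram_bytes // frame_bytes


if __name__ == "__main__":
    fps = 660
    print(f"1 s at {fps} FPS plays for {playback_seconds(fps, 1):.1f} s at 24 FPS")
    # Assumption: 256 MB of RAM is free for frames (hypothetical figure).
    frames = max_frames(256 * 1024 * 1024, 64, 640)
    print(f"{frames} frames fit, i.e. about {frames / fps:.1f} s of recording")
```

Doubling the bits per pixel halves the recording length. Freeing more RAM for the ramdisk lengthens it proportionally.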

While you won’t get the highest quality out of this, it’s still an exciting and inexpensive way to play around with slow motion. You can always step up your game though, and have a look at this DIY high-speed camera instead. And well, here’s one mounted on a lawnmower blade destroying anything but a printer.

Would the Pi 4, with its DDR4 memory, work better?

It’s not about the speed (DDR3 is plenty fast for that), but the size!

Keep in mind you’re capturing uncompressed footage. The H.264 encoder is way too slow to do this in real time, so you have to store raw images in RAM and then either save them to a flash disk or let the H.264 encoder crunch them and then save the result to disk.

“but memory bandwidth is the bigger problem, restricting the resolution to 64×640 pixels”, so it is about speed.

It is more likely a limitation of the camera IC’s transfer rate. I think the line you quoted is not correct.

The Raspi4 has (real) gigabit Ethernet; would that be enough to stream the high-speed video to some faster storage, e.g. to a PC with more RAM and possibly an SSD array?

Or you could just use a PC…

A PC doesn’t have the level of access to a camera that the Pi has (unless it has a CSI port, I suppose), and in terms of this hack, streaming the data directly to a PC with more RAM sounds like a good idea if it can be done without adding more overhead to the processor.

If you read the GitHub pages for raspiraw (660 fps) or fork-raspiraw (up to 750/1007 fps for the v1/v2 camera), the issue is capturing without frame skips; they clearly state: “Using /dev/shm ramdisk for storage is essential for high frame rates.”

That should be taken as a fact, so the question then is whether you can transfer the data to a local or remote storage device without causing frame skips. The first step I would take would be to reserve two CPU cores at boot (isolcpus) and dedicate each of them to one task only (taskset): one to receive data from the camera, and the other to either write the data to local storage or transfer it to remote storage.
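On Linux, Python has a counterpart to `taskset` in `os.sched_setaffinity`. The kernel lists the cores reserved with `isolcpus=` in `/sys/devices/system/cpu/isolated`. This is a minimal sketch. It assumes the writer/uploader runs as its own Python process, while raspiraw itself would still be pinned with `taskset`. The helper names are hypothetical and are not part of raspiraw.

```python
import os


def parse_cpu_list(text: str) -> set[int]:
    """Parse a kernel CPU list such as "2-3" or "1,3-4" into a set of core numbers."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue  # empty list, e.g. no isolated cores
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def isolated_cpus(path: str = "/sys/devices/system/cpu/isolated") -> set[int]:
    """Cores reserved with isolcpus= (empty set if none, or if the file is missing)."""
    try:
        with open(path) as f:
            return parse_cpu_list(f.read())
    except OSError:
        return set()


def pin_to_core(core: int) -> set[int]:
    """Restrict the calling process to a single core and return the new affinity mask."""
    os.sched_setaffinity(0, {core})
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    reserved = sorted(isolated_cpus())
    if len(reserved) >= 2:
        # Hypothetical split: first reserved core for capture (via taskset),
        # second reserved core for this writer process.
        print("writer pinned to", pin_to_core(reserved[1]))
    else:
        print("fewer than two isolated cores; boot with e.g. isolcpus=2,3")
```

The capture side could then be launched with something like `taskset -c 2 …`, which leaves core 3 for the process above.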

660 fps at 64×640 is roughly 27 MB/second, which in theory could be written to a local SD card or even a USB device.
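The ~27 MB/s figure matches one byte per pixel. If the sensor sends 10-bit data, as a later comment suspects, the rate is closer to 34 MB/s. This is a minimal sketch. It assumes tightly packed pixels with no per-row padding or protocol overhead.

```python
def raw_bytes_per_second(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed sensor data rate for a Bayer stream (one value per pixel)."""
    return width * height * bits_per_pixel / 8 * fps


if __name__ == "__main__":
    for bits in (8, 10):
        rate = raw_bytes_per_second(64, 640, 660, bits)
        print(f"{bits}-bit: {rate / 1e6:.1f} MB/s")
```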

Using dedicated cores for each task is probably the best hope of reducing frame skips.

That would be my approach anyhow.

An RPi 4 with 4GB LPDDR4 RAM and USB 3.0 may have enough bandwidth :D

Well, I presume each pixel has more than 8 bits of data being sent, but yeah, it’s not memory bandwidth that’s the problem.

It’s the camera -> SoC bandwidth, which IIRC isn’t improved on the Pi 4. It’s why the smartphone cameras that can do 960fps actually embed 512MB of memory into the camera itself.

But a 4GB Pi4 should hold a lot more video at least. I’d have gone for 320×128 myself.

“…an avocado reaching that perfect moment of ripeness.” Hahahahahahahahahahahahahaha

You underestimate how huge uncompressed video is.

At a frame rate of 24/sec, a 640×480 pixel full RGB video would come in at roughly 22 MByte/s, which translates to roughly 180 MBit/s (closer to 220 MBit/s if you budget 10 bits per byte for overhead).

That’s going to put some load on your GBit Ethernet, and the processors on both sides need to make efficient use of it, but it seems achievable.

660 frames per sec, otoh…
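The same arithmetic at both frame rates shows the gap. This is a minimal sketch. It assumes 3 bytes per RGB pixel and compares against the nominal 1 Gbit/s line rate, ignoring protocol overhead.

```python
GIGABIT_ETHERNET_BPS = 1_000_000_000  # nominal line rate, before protocol overhead


def rgb_bits_per_second(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed data rate of a full RGB video stream, in bits per second."""
    return width * height * bytes_per_pixel * fps * 8


if __name__ == "__main__":
    for fps in (24, 660):
        bps = rgb_bits_per_second(640, 480, fps)
        share = bps / GIGABIT_ETHERNET_BPS
        print(f"640x480 RGB @ {fps} fps: {bps / 1e6:.0f} Mbit/s ({share:.0%} of gigabit)")
```

At 24 fps the stream uses under a fifth of the link. At 660 fps it would need several gigabit links.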

We should be more appreciative of the deep magic video codecs do for us.

You misunderstand “raw image data”. Most modern sensors just have the stated number of pixels (2592×1944 for a V1 camera), with one of three color filters in front of each pixel. The raw image thus has 8 or 10 bits per pixel, and the color information has to be deduced from the surrounding pixels that have different filters. High-end sensors have more bits per pixel.
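At 10 bits per pixel, raw sensor data is usually transferred packed. This is a minimal sketch. It assumes the MIPI CSI-2 RAW10 layout, where four pixels fit in five bytes: first the high 8 bits of each pixel, then one byte holding the four pairs of low bits. It also assumes the buffer has no per-row padding. Check the actual raspiraw output format before relying on this; `unpack_raw10` is a hypothetical helper.

```python
def unpack_raw10(data: bytes) -> list[int]:
    """Unpack MIPI CSI-2 style RAW10 data: every 5 bytes carry 4 ten-bit pixels."""
    if len(data) % 5:
        raise ValueError("RAW10 data length must be a multiple of 5")
    pixels = []
    for i in range(0, len(data), 5):
        b0, b1, b2, b3, low = data[i:i + 5]
        for n, high in enumerate((b0, b1, b2, b3)):
            # Pixel n's two least significant bits sit at bits 2n+1..2n of the fifth byte.
            pixels.append((high << 2) | ((low >> (2 * n)) & 0b11))
    return pixels


if __name__ == "__main__":
    print(unpack_raw10(bytes([0xFF, 0x00, 0x80, 0x01, 0xE4])))
```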

The filters are usually two “mostly green”, one “mostly blue” and one “mostly red” filter: each 2×2 area of pixels has two green, one blue and one red filter.
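The simplest way to turn such a 2×2 mosaic into color is “superpixel” binning. Each 2×2 cell becomes one RGB pixel at half the resolution, with the two greens averaged. This is a minimal sketch. It assumes an RGGB layout (red at the top-left of each cell), but the V1 camera’s actual order may differ. Real raw converters interpolate instead, to keep the full resolution.

```python
def bayer_superpixel(mosaic: list[list[int]]) -> list[list[tuple[float, float, float]]]:
    """Collapse each 2x2 RGGB cell into one (R, G, B) pixel at half resolution."""
    rows, cols = len(mosaic), len(mosaic[0])
    if rows % 2 or cols % 2:
        raise ValueError("mosaic dimensions must be even")
    out = []
    for y in range(0, rows, 2):
        row = []
        for x in range(0, cols, 2):
            r = mosaic[y][x]                                # top-left: red (assumed)
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2   # two greens, averaged
            b = mosaic[y + 1][x + 1]                        # bottom-right: blue (assumed)
            row.append((r, g, b))
        out.append(row)
    return out


if __name__ == "__main__":
    print(bayer_superpixel([[10, 20], [30, 40]]))
```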

Here is a Python script to easily interface raspiraw with OpenCV. Great job unlocking the higher FPS!! :-)

https://gist.github.com/CarlosGS/b8462a8a1cb69f55d8356cbb0f3a4d63#gistcomment-2108157

Now this IS A HACK! :) Great work.

*sigh* all the answers to all the above comments are in the vid.

This deserves a resounding “Now That’s A Hack!”

But y’all seem not to be getting it, so here we go [I dunno why I waste my time if y’all can’t be bothered to watch the vid, you’ll certainly TL;DR this]:

Check it: he’s using a regular camera.

The trick is to grab the data from the visible pixels’ capacitors more often than normal. How? By reading fewer pixels than normal.

How? Well, most regular cameras output the entirety of a row before moving to the next. So, e.g., switching to low-res 320×240 at 30Hz on an old 640×480 15Hz camera meant reading back only 240 of the 480 available rows, *at* the full 640 pixels wide, then discarding the left 160 and right 160 pixels. Note the frame rate has doubled, but the actual amount of data transferred *from the camera* per unit time is the same.

So, bump that down to 640×48 [or 64×48 if you prefer] and you can bump up the framerate to 150Hz.
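The row-readout argument boils down to a constant number of rows per second. This is a minimal sketch. Like the comment, it assumes the row readout rate is the only limit and ignores blanking intervals and other sensor overhead.

```python
def fps_for_rows(full_fps: float, full_rows: int, rows_read: int) -> float:
    """Frame rate when only rows_read of full_rows are read out at the same row rate."""
    row_rate = full_fps * full_rows  # rows per second the sensor/ADC can deliver
    return row_rate / rows_read


if __name__ == "__main__":
    for rows in (480, 240, 48):
        print(f"640x{rows}: {fps_for_rows(15, 480, rows):g} Hz")
```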

Newer, faster cameras and faster ADCs do even better.

But note that, unlike the 320×240 case, the extra pixels on the sides aren’t being disposed of, so there’s more data to be stored to disk, *but* no more than (in fact exactly the same as) the original 640×480 at 15Hz!

So this guy’s technique is no more limited by RAM/disk speed and size than the camera [and ADC] running normally at its full potential would be.

So his solution for this odd case is equally relevant to normal use of the camera at its full potential in this configuration.

Now, the pixels themselves aren’t being exposed to as much light between each frame, thus the need for brighter lighting. [I’m guessing newer sensors and higher-resolution ADCs these days are more sensitive than the old 640×480 discussed here]

This technique for upping the frame rate to the point of absurdity, and keeping the pixels to the left/right since they’re transferred anyhow, is new to me, and relevant to any camera setup whose software can be manipulated… THAT’s the cool part…

But y’all just want to fixate on Pi…

What about teaming up two Raspi cameras connected to two RasPis?

Of course, both cameras have to be mounted rigidly, the image positions have to be calibrated first, and maybe the two camera modules have to be fixed even closer together.

And then the two Raspis have to be synchronized.

But once all these points are taken care of, one Raspi could shoot rows 0..63×640 and the second Raspi/camera combo could shoot rows 64..127×640.

Then the image stitching has to be done, either on a PC or on one of the Raspis.

This way you could take 128×640 images at 660 fps.

Another possibility would be to shoot a 64×640 movie at as much as 1320 fps:

Both Raspi/camera combos shoot a 64×640@660 movie, but with a delay between the two systems:

they do not capture their images at the same time; when the first system has read out its camera sensor halfway, the second system starts to read out its camera.

This way each pixel still gets the same exposure as at 660 fps (1.52 ms).

Now, after capturing both videos, you have to do some image processing:

From the two 660 fps image series, offset by half a frame period, you can calculate a series of 1320 fps images.

This is not easy, but there are well-known algorithms for this in mathematics.
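The simplest reading of the two-camera idea is plain interleaving. Camera B starts half a frame period (1/1320 s, about 758 µs) after camera A, so alternating their frames gives one 1320 fps timeline. This is a minimal sketch with hypothetical frame labels. It only orders frames by capture time and is not one of the more sophisticated reconstruction algorithms the comment alludes to. Each output frame still carries the 660 fps exposure of up to about 1.52 ms.

```python
from fractions import Fraction


def interleave_streams(frames_a, frames_b, fps=660):
    """Merge two equal-rate streams, with B offset by half a period, into one timeline.

    Returns (timestamp_seconds, frame) pairs; timestamps are exact Fractions.
    """
    period = Fraction(1, fps)
    offset = period / 2  # camera B lags camera A by half a frame period
    timeline = [(i * period, f) for i, f in enumerate(frames_a)]
    timeline += [(i * period + offset, f) for i, f in enumerate(frames_b)]
    timeline.sort(key=lambda pair: pair[0])
    return timeline


if __name__ == "__main__":
    merged = interleave_streams(["A0", "A1", "A2"], ["B0", "B1", "B2"])
    for t, frame in merged:
        print(f"{float(t) * 1e6:8.1f} us  {frame}")
```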

To be fair, you could do all the processing on the Raspis and still say:

This system with 2× Raspi & 2× cams is still cheaper than high-speed cameras (“streak cams” doing up to 10 trillion fps).

Of course, I do not know whether a RasPi (4 Model B) can be synchronized to ~758 µs precision. As the RasPi is CPU-based, it probably needs even better sync precision, maybe ~100 µs.

If this is not possible, there would be a third way, the hardest one:

Shoot 64×640 videos with several Raspi cams that are calibrated for position but not synchronized in time.

More precisely: you have to get the different Raspis to start at the “same” time, but with a small random delay that does not need to be known. You bet on the random delay.

Now, with more sophisticated algorithms, you can derive a higher-frame-rate video from all the lower-rate videos.

I know that there are ready-to-use algorithms for “shape from shading” for images taken from “unknown positions”: you shoot images from random angles and distances, as long as the object and the light source are not altered.

If the light source and the camera angles/distances are constant, then the synchronization and the movement of the object may vary.

But I am no longer into this kind of higher mathematics. Maybe you would still need some help:

Put a light source in the image that flickers at a very high frequency (let’s say 2×1320/s). That way you get a synchronization beat in every image. When you then modulate the intensity of your synchronization heartbeat, you get the time-synchronization information into each pixel/each image of every Raspi cam.
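One way to read the “modulated heartbeat” idea is to drive the marker light with a known brightness ramp, a sawtooth with period T. The marker’s brightness in each frame then encodes when within the ramp that frame was exposed, and frames from unsynchronized cameras can be sorted by the recovered time. This is a minimal sketch under several assumptions:

- the ramp is linear
- marker brightness is already measured and calibrated to `b_min`/`b_max`
- no exposure straddles a ramp reset

All names and sample values are hypothetical. For a linear ramp, the brightness averaged over an exposure equals the ramp value at mid-exposure, so the recovered time refers to the middle of each exposure, modulo T.

```python
def time_in_period(brightness: float, b_min: float, b_max: float, period_s: float) -> float:
    """Map a marker brightness back to a time within one ramp period.

    Assumes the marker follows a linear ramp from b_min to b_max over period_s.
    The average of a linear ramp over an exposure equals its mid-exposure value,
    so the result is the mid-exposure time (only known modulo period_s).
    """
    frac = (brightness - b_min) / (b_max - b_min)
    frac = min(max(frac, 0.0), 1.0)  # clamp noise outside the calibrated range
    return frac * period_s


def merge_cameras(cameras, b_min, b_max, period_s):
    """Sort frames from several unsynchronized cameras by their recovered time.

    cameras: {camera_name: [(marker_brightness, frame), ...]}
    Returns [(time_s, camera_name, frame), ...] ordered by time.
    """
    merged = []
    for name, frames in cameras.items():
        for brightness, frame in frames:
            t = time_in_period(brightness, b_min, b_max, period_s)
            merged.append((t, name, frame))
    merged.sort(key=lambda item: item[0])
    return merged


if __name__ == "__main__":
    # Hypothetical marker readings from two cameras within one 10 ms ramp.
    cams = {
        "cam0": [(0.10, "f0"), (0.25, "f1")],
        "cam1": [(0.17, "g0"), (0.32, "g1")],
    }
    for t, cam, frame in merge_cameras(cams, b_min=0.0, b_max=1.0, period_s=0.01):
        print(f"{t * 1e3:5.2f} ms  {cam}  {frame}")
```

A real setup would also need to unwrap the ramp across periods, for example by counting the fast flicker beats mentioned above.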

I’m eager to find out what would be possible this way with 10 Raspis/cams teamed up together.