In today’s episode of “AI Is Why We Can’t Have Nice Things,” we feature the Hertz Corporation and its new AI-powered rental car damage scanners. Gone are the days when an overworked human in a snappy windbreaker would give your rental return a once-over with the old Mark Ones to make sure you hadn’t messed the car up too badly. Instead, Hertz is fielding up to 100 of these “MRI scanners for cars.” The “damage discovery tool” uses cameras to capture images of the car and compares them to a model that’s apparently been trained on nothing but showroom cars. Redditors who’ve had the displeasure of being subjected to this thing report being charged egregiously high fees for non-existent damage. To add insult to injury, renters who want to appeal those charges have to argue with a chatbot first, one that offers no path to speaking with a human. While this is likely to be quite a tidy profit center for Hertz, its customers still have a vote here, and the backlash will likely push the company to make the model a bit more lenient, if not scrap the system outright.

The Moment A Bullet Turns Into A Flashlight, Caught On Film

[The Slo Mo Guys] caught something fascinating while filming some firearms at 82,000 frames per second: a visible emission of light immediately preceding a bullet impact. The moment it occurs is pictured above, but if you’d like to jump directly to the point in the video where this occurs, it all starts at [8:18].

The ability to capture ultra-slow motion allows us to see things that would otherwise happen far too quickly to perceive, and there are quite a few visual spectacles in the whole video. We’ll talk a bit about what is involved, and what could be happening.
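
To put 82,000 frames per second in perspective, the sketch below works out the time between frames and how far a fast object moves in that interval. The 800 m/s bullet speed is a hypothetical round number chosen for illustration, not a figure from the video.

```python
# Sketch: timing between frames at a given capture rate.
# The bullet speed in the example is a hypothetical round number.

def frame_interval_us(fps: float) -> float:
    """Time between consecutive frames, in microseconds."""
    return 1_000_000 / fps


def travel_per_frame_mm(speed_m_s: float, fps: float) -> float:
    """Distance covered between two frames by an object at speed_m_s, in mm."""
    return speed_m_s / fps * 1000


if __name__ == "__main__":
    capture_fps = 82_000   # frame rate quoted for the video
    bullet_speed = 800.0   # hypothetical bullet speed, m/s
    print(f"{frame_interval_us(capture_fps):.1f} us between frames")
    print(f"{travel_per_frame_mm(bullet_speed, capture_fps):.2f} mm of travel per frame")
```

With those assumptions, frames land about 12.2 µs apart and the bullet covers just under a centimeter between them, so a very brief flash can easily show up in only a single frame.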

First of all, the clear blocks being shot are ballistic gel. These dense blocks are tough and elastic, and they are a common sight in firearms testing because they give reliable, consistent results when measuring things like bullet deformation, fragmentation, and impact. It’s possible to make homemade ballistic gel from sufficient quantities of gelatin and water, but clear blocks like the ones you see here are oil-based, transparent, and more stable (they do not shrink due to evaporation).

We’ve seen the diesel effect occur in ballistic gelatin before. It is most likely the result of the bullet vaporizing small amounts of the (oil-based) gel as the channel forms; as the channel contracts, that vapor is rapidly compressed, and the sudden rise in pressure heats it enough to ignite.
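
For a feel of why rapid compression can ignite a vapor, here is the textbook ideal-gas relation for reversible adiabatic compression, T2 = T1 · (V1/V2)^(γ−1). This is a minimal, idealized sketch: the compression ratio and starting temperature are hypothetical, and a collapsing gel channel is far messier than a gas in a cylinder.

```python
# Idealized illustration of compression heating (the "diesel effect").
# Assumes reversible adiabatic compression of an ideal gas; the numbers in
# the example are hypothetical, not measurements from the video.

def adiabatic_temperature(t1_kelvin: float, compression_ratio: float,
                          gamma: float = 1.4) -> float:
    """Final temperature T2 = T1 * (V1/V2) ** (gamma - 1)."""
    return t1_kelvin * compression_ratio ** (gamma - 1)


def adiabatic_pressure(p1: float, compression_ratio: float,
                       gamma: float = 1.4) -> float:
    """Final pressure P2 = P1 * (V1/V2) ** gamma, in the same units as p1."""
    return p1 * compression_ratio ** gamma


if __name__ == "__main__":
    # Hypothetical: room-temperature gas squeezed to 1/20 of its volume.
    t2 = adiabatic_temperature(293.0, 20.0)
    p2 = adiabatic_pressure(101.325, 20.0)  # starting at 1 atm, in kPa
    print(f"T2 = {t2:.0f} K, P2 = {p2:.0f} kPa")
```

With these inputs (293 K, a 20:1 volume reduction, and γ = 1.4 for air), the model gives roughly 970 K. Heavier vapors have a lower γ and heat up less for the same ratio, so treat this as an order-of-magnitude illustration only.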

In the video linked above (and embedded below), there is probably a bit more in the mix. The rifles being tested are large-bore, firing big cartridges with a large charge of gunpowder igniting behind each bullet. The burning powder produces a rapid expansion of hot, pressurized gases that push the bullet down the barrel at tremendous speed. As the bullet exits, so does a jet of hot gas. Sometimes, the last bits of burning powder are visible as a brief muzzle flash that accompanies the bullet leaving the barrel.

A large projectile traveling at supersonic velocity creates a large channel and expansion when it hits ballistic gel, and when it is fired at close range, hot gases from the muzzle and any still-burning gunpowder are in the mix as well. All of this helps generate the kind of visual spectacle we see here.

We suspect that the single frame showing a flashlight-like emission of light, as the flat-nosed bullet strikes the face of the gel, is also the result of the diesel effect. Whatever the cause, it’s an absolutely remarkable visual and a fascinating thing to capture on film. You can watch the whole thing just below the page break.

Supersonic Baseball Hitting A Gallon Of Mayo Is Great Flow Visualization

Those of us who enjoy seeing mechanical carnage have been blessed by the rise of video-sharing services and high-speed cameras. Oftentimes, these slow-motion videos are heavy on destruction and light on science. However, this video from [Smarter Every Day] is worth watching, if only for the fluid mechanics at play when a supersonic baseball hits a 1-gallon jar of mayo.

The experiment uses the baseball cannon that [Destin] of [Smarter Every Day] built last year. Ostensibly, the broader aim of the video is to characterize the cannon’s performance: shots are fired with varying pressures in the air tank and varying levels of vacuum in the barrel, and the results are charted.

However, the real glory starts 18:25 into the video, where a baseball is fired into the gigantic jar of mayo. The jar is vaporized in an instant from the sheer power of the collision, with the mayo becoming a potent-smelling aerosol in a flash.

Amazingly, the slow-motion camera reveals all manner of interesting phenomena. There’s a flash of flame as the ball hits the jar, suggesting compression ignition happened at impact with the jar’s label. A shadow from the shockwave ahead of the ball can be seen in the video, and particles in the cloud of mayo can be seen changing direction as the trailing shock catches up.
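
“Supersonic” just means the ball is moving faster than the local speed of sound, which is what allows a shock to stand ahead of it. A minimal sketch of that comparison, assuming dry air treated as an ideal gas; the ball speeds are hypothetical examples, not values measured in the video:

```python
import math

# Speed of sound in dry air from a = sqrt(gamma * R * T), and the resulting
# Mach number. Ball speeds in the example are hypothetical.

GAMMA_AIR = 1.4
R_AIR = 287.05  # specific gas constant for dry air, J/(kg*K)


def speed_of_sound(temp_c: float) -> float:
    """Speed of sound in dry air at temp_c degrees Celsius, in m/s."""
    return math.sqrt(GAMMA_AIR * R_AIR * (temp_c + 273.15))


def mach_number(speed_m_s: float, temp_c: float = 20.0) -> float:
    """Ratio of an object's speed to the local speed of sound."""
    return speed_m_s / speed_of_sound(temp_c)


if __name__ == "__main__":
    print(f"speed of sound at 20 C: {speed_of_sound(20.0):.1f} m/s")
    for v in (300.0, 400.0):  # hypothetical ball speeds, m/s
        m = mach_number(v)
        regime = "supersonic" if m > 1 else "subsonic"
        print(f"{v:.0f} m/s -> Mach {m:.2f} ({regime})")
```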

The slow-motion footage deserves to be shown in flow-visualization classes, not only because it’s awesome, but because it’s a great demonstration of supersonic flow phenomena. Video after the break.

Hackaday Links: October 25, 2020

Siglent has been making pretty big inroads into the mid-range test equipment market, with the manufacturer’s instruments popping up on benches all over the place. Saulius Lukse, of Kurokesu fame, found himself in possession of a Siglent SPD3303X programmable power supply, which looks like a really nice unit, at least on the hardware side. The software it came with didn’t exactly light his fire, though, so Saulius came up with a Python library to control the power supply. The library lets him control pretty much every aspect of the supply over its Ethernet port. A few functions still don’t quite work, and he’s only tested it with his own power supply so far, but chances are pretty good that there’s at least some overlap with the command sets of other Siglent instruments. We’re keen to see others pick this up and run with it.
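
For anyone wondering what controlling a bench supply over Ethernet looks like at the lowest level, below is a hypothetical minimal SCPI-over-TCP client. It is not Saulius’s library. The port number (5025) and the command spellings are assumptions based on common Siglent SCPI conventions; check them against the SPD3303X programming guide for your firmware before relying on them.

```python
import socket

# Hypothetical minimal SCPI-over-TCP client; this is NOT the library from the
# article. Assumptions: the supply accepts newline-terminated SCPI on a raw
# TCP socket (port 5025 here), and the command spellings below match the
# SPD3303X programming guide for your firmware. Verify both before use.

DEFAULT_PORT = 5025           # assumed raw-socket SCPI port
ADJUSTABLE_CHANNELS = (1, 2)  # CH1/CH2 are the adjustable outputs on this model


def _check_channel(channel: int) -> None:
    if channel not in ADJUSTABLE_CHANNELS:
        raise ValueError(f"channel must be one of {ADJUSTABLE_CHANNELS}")


def set_voltage_cmd(channel: int, volts: float) -> str:
    _check_channel(channel)
    return f"CH{channel}:VOLTage {volts:.3f}"


def set_current_cmd(channel: int, amps: float) -> str:
    _check_channel(channel)
    return f"CH{channel}:CURRent {amps:.3f}"


def output_cmd(channel: int, on: bool) -> str:
    _check_channel(channel)
    return f"OUTPut CH{channel},{'ON' if on else 'OFF'}"


def measure_voltage_cmd(channel: int) -> str:
    _check_channel(channel)
    return f"MEASure:VOLTage? CH{channel}"


class ScpiSocket:
    """Send newline-terminated SCPI strings over a plain TCP socket."""

    def __init__(self, host: str, port: int = DEFAULT_PORT, timeout: float = 2.0):
        self.sock = socket.create_connection((host, port), timeout=timeout)

    def write(self, cmd: str) -> None:
        self.sock.sendall((cmd + "\n").encode("ascii"))

    def query(self, cmd: str) -> str:
        """Send a query and read until a newline (or the peer closes)."""
        self.write(cmd)
        data = b""
        while not data.endswith(b"\n"):
            chunk = self.sock.recv(4096)
            if not chunk:
                break
            data += chunk
        return data.decode("ascii").strip()

    def close(self) -> None:
        self.sock.close()
```

Typical use would be something like `psu = ScpiSocket("192.168.1.50")` (a placeholder address), followed by `psu.query("*IDN?")`, `psu.write(set_voltage_cmd(1, 3.3))`, and `psu.write(output_cmd(1, True))`. Keeping the command builders as plain functions makes them easy to test without the hardware on the bench.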

From the “everyone needs a hobby” department, we found this ultra-detailed miniature of an IBM 1401 mainframe system completely enthralling. We may have written this up at an earlier point in its development, but it now appears that the model maker, 6502b, is done with the whole set, so it bears another look. The level of detail is eye-popping: the smallest features of every piece of equipment, from the operator’s console to the line printer, are reproduced. Even the three-ring binders with system documentation are there. And don’t get us started about those tape drives, or the wee chair in period-correct Harvest Gold.

Speaking of diversions, have you ever wondered how many people are in space right now? Or how many humans have had the privilege to hitch a ride upstairs? There’s a database for that: the Astronauts Database over on Supercluster. It lists pretty much everything, human and non-human, that has been intentionally launched into space, starting with Yuri Gagarin in 1961 and running up to the newest member of the club, Sergey Kud-Sverchkov, who took off for the ISS just last week from his hometown of Baikonur. Everyone and everything is there, including “some tardigrades” that crashed into the Moon. They even included this guy, which makes us wonder why they didn’t include the infamous manhole cover.

And finally, for the machinists out there, if you’ve ever wondered what chatter looks like, wonder no more. Breaking Taps has done an interesting slow-motion analysis of endmill chatter, and the results are a bit unexpected. The footage is really cool; watching the four-flute endmill peel mild steel off and fling the tiny curlicues aside is very satisfying. The value of the high-speed shots becomes evident when he induces chatter: the spindle, workpiece, vise, and just about everything else start oscillating, resulting in a poor-quality cut and eventually, when pushed beyond its limits, the dramatic end of the endmill’s life. Interesting stuff; it reminds us a bit of Ben Krasnow’s up-close-and-personal look at chip formation in his electron microscope.
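
Chatter is usually discussed in terms of the cutter’s tooth-passing frequency, the rate at which flutes strike the workpiece, and how that excitation interacts with the natural frequencies of the spindle, tool, and workholding. A small sketch of the arithmetic, with a hypothetical spindle speed and camera frame rate, also shows why high-speed footage is needed to see individual tooth engagements:

```python
# Tooth-passing frequency of a milling cutter and how many camera frames
# land on each flute impact. Spindle speed and frame rate are hypothetical.

def tooth_passing_hz(spindle_rpm: float, flutes: int) -> float:
    """Flute impacts per second: RPM / 60 * number of flutes."""
    return spindle_rpm / 60.0 * flutes


def frames_per_tooth_pass(camera_fps: float, spindle_rpm: float, flutes: int) -> float:
    """Video frames captured per flute impact."""
    return camera_fps / tooth_passing_hz(spindle_rpm, flutes)


if __name__ == "__main__":
    rpm, flutes = 3000, 4  # four-flute endmill at a hypothetical speed
    print(f"tooth-passing frequency: {tooth_passing_hz(rpm, flutes):.0f} Hz")
    print(f"frames per pass at 10,000 FPS: {frames_per_tooth_pass(10_000, rpm, flutes):.0f}")
    print(f"frames per pass at 30 FPS: {frames_per_tooth_pass(30, rpm, flutes):.2f}")
```

At ordinary video rates each frame spans several flute impacts, which is why the individual engagements only become visible in high-speed footage.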

LED Art Reveals Itself In Very Slow Motion

Every bit of film or video you’ve ever seen is a mind trick, an optical illusion of continuous movement based on flashing 24 to 30 slightly different images into your eyes every second. The wetware between your ears can’t deal with all that information individually, so it convinces itself that you’re seeing smooth motion.

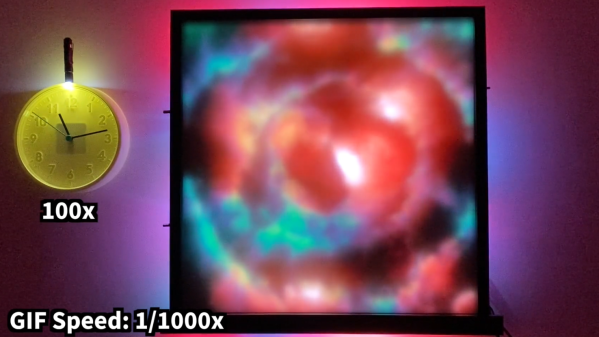

But what if you slow down time: dial things back to one frame every 100 seconds, or every 1,000? That’s the idea behind this slow-motion LED art display called, appropriately enough, “Continuum.” It’s the work of [Louis Beaudoin], and it was inspired by the original very-slow-motion movie player and the recent update we featured. But while those players used e-paper displays for photorealistic images, “Continuum” takes a lower-resolution approach. The display is made up of nine HUB75 32×32 RGB LED panels in a 3×3 grid, each with a 5-mm pitch. The resulting 96×96 pixel display fits nicely within an Ikea RIBBA picture frame.
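
Driving nine panels as one 96×96 canvas means translating each canvas pixel into a panel and a position within that panel. A hypothetical sketch, assuming the panels are numbered row by row from the top left; real HUB75 chains are often wired in other orders, so the actual mapping may differ:

```python
# Hypothetical mapping from a 96x96 canvas to a 3x3 grid of 32x32 panels.
# Panels are numbered row-major (0 = top-left); a real HUB75 chain may be
# wired in a different order or with some panels rotated.

PANEL_W = PANEL_H = 32
PANELS_X = PANELS_Y = 3


def locate(x: int, y: int) -> tuple[int, int, int]:
    """Return (panel_index, local_x, local_y) for canvas pixel (x, y)."""
    if not (0 <= x < PANEL_W * PANELS_X and 0 <= y < PANEL_H * PANELS_Y):
        raise ValueError("pixel outside the 96x96 canvas")
    col, local_x = divmod(x, PANEL_W)
    row, local_y = divmod(y, PANEL_H)
    return row * PANELS_X + col, local_x, local_y


def physical_size_mm(pitch_mm: float = 5.0) -> tuple[float, float]:
    """Active-area width and height of the assembled display."""
    return PANEL_W * PANELS_X * pitch_mm, PANEL_H * PANELS_Y * pitch_mm


if __name__ == "__main__":
    print(locate(0, 0))      # top-left pixel
    print(locate(95, 95))    # bottom-right pixel
    print(physical_size_mm())
```

At the 5 mm pitch mentioned above, the active area works out to 480 × 480 mm.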

The display is driven by a Teensy 4 and [Louis]’ custom-designed SmartLED Shield, which plugs directly into the HUB75s. The rear of the frame is rimmed with APA102 LED strips for an Ambilight-style effect, and the front of the display has a frosted acrylic diffuser. It’s configured to show animated GIFs at anything from one frame per second to one frame every 1,000 seconds, the latter resulting in an image that looks static unless you revisit it some time later. [Louis] takes full advantage of the Teensy’s processing power to smoothly transition between each pair of frames, and the whole effect is quite wonderful. The video below captures it as best it can, but we imagine this is something best seen in person.
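
One simple way to get smooth transitions at very slow frame rates is a linear crossfade, where each pixel moves from its value in the current frame toward its value in the next as the frame period elapses. The sketch below is an illustration under that assumption, not [Louis]’ firmware; frames are plain lists of (r, g, b) tuples.

```python
# Linear crossfade between two frames. Illustration only; not the
# firmware described in the article. Frames are lists of (r, g, b) tuples.

def blend_pixel(a, b, t):
    """Linearly interpolate two RGB tuples; t=0 gives a, t=1 gives b."""
    return tuple(round(ca + (cb - ca) * t) for ca, cb in zip(a, b))


def crossfade(frame_a, frame_b, elapsed_s, frame_period_s):
    """Blend two frames by how far we are into the current frame period."""
    t = min(max(elapsed_s / frame_period_s, 0.0), 1.0)  # clamp to [0, 1]
    return [blend_pixel(pa, pb, t) for pa, pb in zip(frame_a, frame_b)]


if __name__ == "__main__":
    a = [(255, 0, 0), (0, 0, 0)]
    b = [(0, 0, 255), (255, 255, 255)]
    # 1,000 seconds per frame, 250 seconds in: a quarter of the way to b
    print(crossfade(a, b, 250, 1000))
```

Blending raw 8-bit values is the simplest choice; a more careful version would blend in linear light to keep the fade perceptually even.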

E-Paper Display Shows Movies Very, Very Slowly

How much would you enjoy a movie that took months to finish? We suppose it would very much depend on the film; the current batch of films from the Star Wars franchise are quite long enough as they are, thanks very much. But a film like Casablanca or 2001: A Space Odyssey might be a very different experience when played on this ultra-slow-motion e-paper movie player.

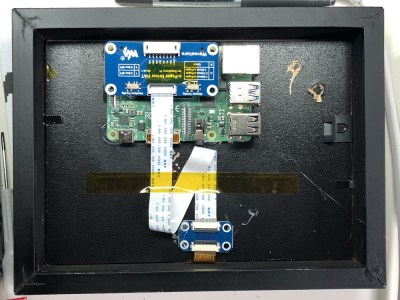

The idea of putting a single frame of a movie up for hours rather than milliseconds has captivated [Tom Whitwell] since he saw [Bryan Boyer]’s take on the concept. The hardware [Tom] used is similar: a Raspberry Pi, an SD card HAT with a 64 GB card for the movies, and a Waveshare e-paper display, all of which fits nicely in an IKEA picture frame.

[Tom]’s software is a bit different, though: a Python program uses FFmpeg to fetch and dither frames from a movie at a configurable rate, which allows a bit more customization of the viewing experience than the original. Showing one frame every two minutes and then skipping four frames, it has taken him more than two months to watch Psycho. He reports that the shower scene was over in a day and a half, almost as much time as it took to film, while the scene showing [Marion Crane] driving through the desert took weeks to finish. We always wondered why [Hitch] spent so much time on that scene.
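
The pipeline described above can be sketched in three steps: pick a source frame for the current step, have FFmpeg extract it as a scaled grayscale image, and dither it down to the 1-bit palette of the e-paper panel. This is a hypothetical sketch, not [Tom]’s program: the panel resolution is a placeholder, “show one, skip four” is read as advancing five frames per step, and Floyd-Steinberg error diffusion stands in for whatever dithering he actually uses.

```python
# Hypothetical very-slow-movie-player step; NOT the program from the article.
# Assumes a 1-bit e-paper panel of WIDTH x HEIGHT (placeholder values).

WIDTH, HEIGHT = 640, 384  # hypothetical panel resolution


def frame_for_step(step: int, frames_per_step: int = 5) -> int:
    """Show one frame, skip four: step n maps to source frame n * 5."""
    return step * frames_per_step


def ffmpeg_grab_cmd(movie: str, frame: int, fps: float, out_png: str) -> list[str]:
    """Build an ffmpeg argv that saves one scaled grayscale frame as a PNG."""
    seconds = frame / fps
    return [
        "ffmpeg", "-y", "-ss", f"{seconds:.3f}", "-i", movie,
        "-frames:v", "1",
        "-vf", f"scale={WIDTH}:{HEIGHT},format=gray",
        out_png,
    ]


def floyd_steinberg(gray: list[list[int]]) -> list[list[int]]:
    """Dither 0-255 grayscale rows to 0/1 pixels (1 = white)."""
    h, w = len(gray), len(gray[0])
    buf = [[float(v) for v in row] for row in gray]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = 255.0 if old >= 128 else 0.0
            out[y][x] = 1 if new else 0
            err = old - new
            # Push the quantization error onto not-yet-visited neighbours.
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out


if __name__ == "__main__":
    cmd = ffmpeg_grab_cmd("movie.mp4", frame_for_step(10), 24.0, "frame.png")
    # Pass cmd to subprocess.run(cmd, check=True) to actually extract the
    # frame (requires ffmpeg on the PATH); here we only print it.
    print(" ".join(cmd))
```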

With the proper films loaded, we can see this being an interesting way to really study the structure and flow of a good film. It’s also a good way to cut your teeth on e-paper displays, which we’ve seen pop up in everything from weather stations to Linux terminals.

660 FPS Raspberry Pi Video Captures The Moment In Extreme Slo-Mo

Filming in slow motion has long been a standard feature on the higher end of the smartphone spectrum, and it can turn the most trivial physical activity into a majestic action shot to share on social media. It also unveils some little wonders of nature that are otherwise hidden to our eyes: the formation of a lightning flash during a thunderstorm, a hummingbird flapping its wings, or an avocado reaching that perfect moment of ripeness. Altogether, it’s a fun way of recording videos, and as [Robert Elder] shows, it’s something you can do with a few dollars’ worth of Raspberry Pi equipment at a whopping 660 FPS, if you can live with some limitations.

Played back at the classic 24 FPS, footage captured at this rate turns one second into a nearly half-minute-long slo-mo-fest. To achieve such a frame rate in the first place, [Robert] uses [Hermann-SW]’s modified version of raspiraw to get raw image data straight from the camera sensor into the Pi’s memory, leaving the heavy lifting of processing it into an actual video until all the frames have been captured. RAM size is of course one limiting factor for recording length, but memory bandwidth is the bigger problem, restricting the resolution to 640×64 pixels on the cheaper $6 camera model he uses. Yes, sixty-four pixels high, but hey, look at that super-widescreen aspect ratio!
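
The half-minute figure is just the ratio of capture and playback rates, and a rough data-rate estimate shows why bandwidth, not just RAM, is the constraint. A back-of-the-envelope sketch for a 640-pixel-wide, 64-pixel-high frame, assuming 10 bits per pixel with no line padding; the real raw format and buffer layout may add overhead:

```python
# Back-of-the-envelope numbers for high-frame-rate raw capture.
# Assumes 10 bits per pixel, packed with no per-line padding; the actual
# sensor output and buffer layout may add overhead.

def playback_seconds(capture_s: float, capture_fps: float, playback_fps: float) -> float:
    """How long capture_s seconds of footage lasts when played back slower."""
    return capture_s * capture_fps / playback_fps


def raw_frame_bytes(width: int, height: int, bits_per_pixel: int = 10) -> int:
    """Bytes needed for one packed raw frame."""
    return width * height * bits_per_pixel // 8


def data_rate_mb_s(width: int, height: int, fps: float, bits_per_pixel: int = 10) -> float:
    """Sustained raw data rate in megabytes (10**6 bytes) per second."""
    return raw_frame_bytes(width, height, bits_per_pixel) * fps / 1e6


if __name__ == "__main__":
    print(playback_seconds(1, 660, 24))   # one second at 660 FPS, played at 24 FPS
    print(raw_frame_bytes(640, 64))       # bytes per 640x64 frame
    print(f"{data_rate_mb_s(640, 64, 660):.1f} MB/s")
```

Under those assumptions, each frame is 51,200 bytes and 660 FPS works out to roughly 34 MB/s before any overhead.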

While you won’t get the highest quality out of this, it’s still an exciting and inexpensive way to play around with slow motion. You can always step up your game, though, and have a look at this DIY high-speed camera instead. And well, here’s one mounted on a lawnmower blade destroying anything but a printer.
