Home directories have been a fundamental part of any Unixy system since day one. They’re such a basic element that we usually don’t give them much thought. And why would we? From a low-level point of view, whatever location $HOME is pointing to is a directory just like any other of the countless ones you will find on the system, apart from maybe being located on its own disk partition. Home directories are so unspectacular in their nature that it wouldn’t usually cross anyone’s mind to even consider changing anything about them. And then there’s Lennart Poettering.

In case you’re not familiar with the name, he is the main developer behind the systemd init system, which has nowadays been adopted by the majority of Linux distributions as a replacement for its old-school, Unix-style init-system predecessors, essentially changing everything we knew about the system boot process. Not only did this change personally insult every single Perl-loving, Ken-Thompson-action-figure-owning greybeard, it engendered contempt towards systemd and Lennart himself that approaches Nickelback level. At this point, it probably doesn’t matter anymore what he does next; haters gonna hate. So who better than him to disrupt everything we know about home directories? Where you _live_?

Home directories, though, are just one part of the equation that his latest creation, the systemd-homed project, is going to ~~make people hate him even more~~ tackle. The big picture is really more about the whole concept of user management as we know it, which sounds bold and scary, but which in its current state is also a lot more flawed than we might realize. So let’s have a look at what it’s all about, the motivation behind homed, the problems it’s going to both solve and raise, and how it’s maybe time to leave some outdated philosophies behind us.

A Quick Introduction To Systemd

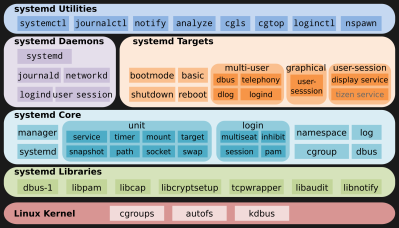

Before we get to the homed part of the endeavor, let’s get everyone on the same page about systemd itself. In simple words, systemd handles everything that lies beyond the kernel’s tasks to start up the system: setting up the user space and providing everything required to get all system processes and daemons running, and managing those processes along the way. It both replaces the old init process running as PID 1 and provides an administrative layer between the kernel and user space, and as such comes with a whole suite of additional tools and components to achieve all that: system logger, login handling, network management, IPC for communication between each part, and so on. So, what was once handled by all sorts of single-purpose tools has since become part of systemd itself, which is one source of resentment from people clinging to the “one tool, one job” philosophy.

Technically, each tool and component in systemd is still shipped as its own executable, but due to interdependency of these components they’re not really that standalone, and it’s really more just a logical separation. systemd-homed is therefore one additional such component, aimed to handle the user management and the home directories of those users, tightly integrated into the systemd infrastructure and all the additional services it provides.

But why change it at all? Well, let’s have a look at the current way users and home directories are handled in Linux, and how systemd-homed is planning to change that.

The Status Quo Of $HOME And User Management

Since the beginning of time, users have been stored in the /etc/passwd file, which includes among other things the username, a system-unique user ID, and the home directory location. Traditionally, the user’s password was also stored in hashed form in that file (and that might still be the case on some systems, embedded ones for example), but it was eventually moved to a separate /etc/shadow file with more restricted file permissions. So, after successfully logging in to the system with the password checked against the shadow file, the user starts off in whichever location the home directory entry in /etc/passwd is pointing to.
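To make the layout of a passwd entry concrete, here is a minimal Python sketch that splits one line into its seven colon-separated fields. The sample line and the `PasswdEntry` name are made up for illustration; a real system would read /etc/passwd itself.

```python
from typing import NamedTuple


class PasswdEntry(NamedTuple):
    name: str
    password: str  # usually "x", meaning the hash lives in /etc/shadow
    uid: int
    gid: int
    gecos: str     # free-form comment field, often the full name
    home: str
    shell: str


def parse_passwd_line(line: str) -> PasswdEntry:
    """Split one colon-separated passwd line into its seven fields."""
    name, password, uid, gid, gecos, home, shell = line.rstrip("\n").split(":")
    return PasswdEntry(name, password, int(uid), int(gid), gecos, home, shell)


# Made-up sample line, not taken from any real system.
entry = parse_passwd_line("alice:x:1000:1000:Alice Example:/home/alice:/bin/bash")
print(entry.name, entry.uid, entry.home)
```

Note that nothing in this record says where the password hash is or whether /home/alice actually exists; those facts live elsewhere.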

In other words, for the most fundamental process of logging in to a system, three individual parts that are completely independent and separated from each other are required. Thanks to well-defined rules that stem from simpler times and have since remained mostly untouched, and which every system and tool involved in the process commendably abides by, this has worked out just fine. But if we think about it: is this really the best possible approach?

Note that this is just the most basic local user login. Throw in some network authentication, additional user-based resource management, or disk encryption, and you will notice that /etc/passwd isn’t the place for any of that. And well, why would it be — one tool, one job, right? As a result, instead of configuring everything possibly related to a user in one centralized user management system that is flexible enough for any present and future considerations, we just stack dozens of random configurations on top of and next to each other, with each one of them doing their own little thing. And we are somehow perfectly fine and content with that.

Yet, if you had to design a similar system today from scratch, would you really opt for the same concept? Would your system architect, your teacher, or even you yourself really be fine with duplicate database entries (usernames both in passwd and shadow file), unenforced relationships (home directory entry and home directory itself), and just random additional data without rhyme or reason: resource management, PAM, network authentication, and so on? Well, as you may have guessed by now, Lennart Poettering isn’t much of a fan of that, and with systemd-homed he is aiming to unite all the separate configuration entities around user management into one centralized system, flexible enough to handle everything the future might require.
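The “unenforced relationships” are easy to demonstrate. The following Python sketch cross-checks passwd-style and shadow-style text and reports users that have no shadow entry or whose home directory is missing. The function name, the sample data, and the injected `dir_exists` check are all assumptions made for this illustration; nothing is read from the real system.

```python
import os
from typing import Callable, Dict, List


def find_inconsistencies(
    passwd_text: str,
    shadow_text: str,
    dir_exists: Callable[[str], bool] = os.path.isdir,
) -> Dict[str, List[str]]:
    """Report users whose passwd entry has no shadow entry or no home directory."""
    shadow_names = {
        line.split(":", 1)[0] for line in shadow_text.splitlines() if line.strip()
    }
    problems: Dict[str, List[str]] = {}
    for line in passwd_text.splitlines():
        if not line.strip():
            continue
        fields = line.split(":")
        name, home = fields[0], fields[5]
        issues = []
        if name not in shadow_names:
            issues.append("no shadow entry")
        if not dir_exists(home):
            issues.append("home directory missing")
        if issues:
            problems[name] = issues
    return problems


# Made-up sample data; the set stands in for the real filesystem.
passwd_sample = (
    "alice:x:1000:1000:Alice:/home/alice:/bin/bash\n"
    "bob:x:1001:1001:Bob:/home/bob:/bin/bash\n"
)
shadow_sample = "alice:$6$examplesalt$not-a-real-hash:18000:0:99999:7:::\n"
existing_dirs = {"/home/alice"}

problems = find_inconsistencies(passwd_sample, shadow_sample, existing_dirs.__contains__)
print(problems)
```

The point is not the check itself but that such a check has to exist at all: the three pieces only agree with each other by convention.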

Knock Knock – It’s Systemd

So instead of each component having its own configuration for all users, systemd-homed is going to collect all the configuration data of each component based on the user itself, and store it in a user-specific record in the form of a JSON file. The file will include all the obvious information such as username, group membership, and password hashes, but also any user-dependent system configurations and resource management information, and essentially really just anything relevant. Being JSON, it can contain virtually whatever you want to put there, meaning it is easily extendable whenever new features and capabilities are required. No need to wonder anymore which of those three dozen files you need to touch if you want to change something.
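As a rough idea of what such a per-user record could look like, here is a small Python sketch that loads a JSON record and extends it with a new key. The field names and values are assumptions chosen for this illustration and should not be read as the schema systemd-homed actually uses.

```python
import json

# Illustrative record only: the field names are assumptions for this sketch,
# not the real systemd-homed schema.
record_text = """
{
  "userName": "alice",
  "memberOf": ["wheel", "audio"],
  "homeDirectory": "/home/alice",
  "hashedPassword": ["$6$examplesalt$not-a-real-hash"],
  "resourceLimits": {"tasksMax": 4096, "memoryMax": "8G"}
}
"""

record = json.loads(record_text)

# Extending the record needs no new file format, only a new key.
record["preferredLanguage"] = "en_US.UTF-8"

print(record["userName"], record["memberOf"])
print(json.dumps(record, indent=2, sort_keys=True))
```

Everything about the user, from identity to resource limits, sits in one document, which is the contrast to the scattered files described earlier.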

In addition to user and user-based system management, the home directory itself will be linked to it as a LUKS encrypted container, and this is where the interesting part comes in, even if you don’t see a need for a unified configuration place: the encryption is directly coupled to the user login itself, meaning not only is the disk automatically decrypted once the user logs in, it is just as automatically locked again as soon as the user logs out, locks the screen, or suspends the device. In other words, your data is inaccessible and secure whenever you’re not logged in, while the operating system can continue to operate independently from that.

There is, of course, one downside to that.

Chicken / Egg Problem With SSH

If decrypting the home directory requires an actively logged-in user, there’s going to be a problem with remote logins via SSH that depend on the authorized SSH keys stored inside the (at that point encrypted) home directory. For that reason, SSH simply won’t work without a logged-in local user, which of course defeats the whole point of remote access.

That being said, it’s worth noting that at this stage, systemd-homed is focusing mostly on regular, real human users on a desktop or laptop, and not so much on system or service-specific users, or anything running remotely on a server. That sounds of course like a bad excuse, but also keep in mind that systemd-homed will change the very core of user handling, so it’s probably safe to assume that future iterations will diminish that separation and things will work properly for either type of user. I mean, there’s no point in reinventing the wheel if you need to keep the old one around as well, but feel free to call me naive here.

Ideally, the SSH keys would be migrated to the common user management record, but that would require some work on SSH’s side. This isn’t happening right now, but then again, systemd-homed itself is also just a semi-implemented idea in a git branch at this point. It’s unlikely to be widely adopted and forced onto you by the evil Linux distributions until this issue in particular is solved, but again, maybe I’m just being overly optimistic and naive.

Self-Contained Users With Portable Homes

But with user management and home directory handling in a single place and coupled together, you can start to dream of additional possible features. For instance, portable home directories that double as self-contained users. What that means is that you could keep the home directory for example on a USB stick or external disk, and seamlessly move it between, say, your workstation at home and your laptop whenever you’re on the move. No need to duplicate or otherwise sync your data, it’s all in one place with you. This brings security and portability benefits.

But that’s not all. It wouldn’t have to be your own device you can attach your home directory to, it could be a friend’s device or the presenter laptop at a conference or really any compatible device. As soon as you plug your home directory into it, your whole user will automatically exist on that device as well. Well, sort of automatically. Obviously, no one would want some random people roaming around on their system, so the system owner / superuser will still have to grant you access to their system first, with a user and resource configuration based on their own terms, and signed to avoid tampering.
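How signing protects such a record can be sketched in a few lines of Python. This example uses an HMAC with a shared secret purely as a stand-in, because it is available in the standard library; a real design would more plausibly use asymmetric signatures, and nothing here describes how systemd-homed actually signs records. The key, the record fields, and the function names are all hypothetical.

```python
import hashlib
import hmac
import json


def sign_record(record: dict, key: bytes) -> str:
    """Return a hex MAC over a canonical serialization of the record."""
    # Sorted keys and fixed separators so the same record always yields the same bytes.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_record(record: dict, signature: str, key: bytes) -> bool:
    """Check the record against a previously issued signature in constant time."""
    return hmac.compare_digest(sign_record(record, key), signature)


# Hypothetical secret held by the system owner, and a record they approved.
host_key = b"hypothetical-host-secret"
record = {"userName": "alice", "memberOf": ["guests"], "tasksMax": 512}
signature = sign_record(record, host_key)

# A guest quietly promoting themselves changes the payload and breaks the check.
tampered = dict(record, memberOf=["wheel"])

print(verify_record(record, signature, host_key))
print(verify_record(tampered, signature, host_key))
```

The record travels with the user, but any change to the terms the owner approved is detectable on the host.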

Admittedly, for some of you, this might all sound less like amazing new features and more like a security nightmare and mayhem just waiting to happen. And it most certainly will bring a massive change to yet another core element of Linux where a lot could possibly go wrong. How it will all play out in reality remains to be seen. Clearly it won’t happen overnight, and it won’t happen out of nowhere either, since the changes are just too deep and fundamental. But being part of systemd itself, and seeing its influence on Linux, one has to entertain the idea that this might happen one day in the not too distant future. And maybe it should.

Times Are Changing

Yes, this will disrupt everything we know about user management. And yes, it goes against everything Unix stands for. But it also gets rid of an ancient concept that goes against everything the software engineering world has painfully learned as best practices over all these decades since. After all, it’s neither the 70s nor the 80s anymore. We no longer have wildly heterogeneous environments where we need to find the least capable common denominator for each and every system. Linux is the dominant, and arguably the only really relevant, open source “Unix” system nowadays; even Netflix and WhatsApp running FreeBSD is really more anecdotal in comparison. So why should Linux really care about compatibility with “niche” operating systems that are in the end going to do their own thing anyway? And for that matter, why should we still care about Unix altogether?

It generally raises the question: why do we keep on insisting that computer science as a discipline reached its philosophical peak at a time when the majority of its current practitioners weren’t even born yet? Why are we so frantically holding on to a philosophy that was established decades before the world of today with its ubiquitous internet, mobile connectivity, cloud computing, Infrastructure/Platform/Software/Whatnot-as-a-Service, and everything on top and below that has become reality? Are we really that nostalgic about the dark ages?

I’m not saying that the complexity we have reached with technology is necessarily an outcome we should have all hoped for, but it is where we are today. And it’s only going to get worse. The sooner we accept that and move on from here, and maybe adjust our ancient philosophies along the way, the higher the chance that we will actually manage to tame this development and keep control over it.

Yes, change is a horrible, scary, and awful thing if it’s brought upon us by anyone else but ourselves. But it’s also inevitable, and systemd-homed might just be the proverbial broken egg that will give us a delicious omelette here. Time will tell.

The thing people don’t realize is that with ChromeOS and Android, the dream of regular user Linux has been realized. And really, it looks a lot like MacOS X! Love it or hate it, it covers that niche. Systemd and GNOME are abominations. They don’t do a good job of what they do, and then they wreck everything around them. Yuck. If you want an over-integrated user experience, at least use one that has seen some serious effort at debugging, use one of the google projects.

ChromeOS by the looks of it already has development efforts towards systemd…

I cannot find anything definitive, but there are tickets tracking development and one comment that it uses systemd-journald on top of its init tool “Upstart”.

The problem for someone who is not a Linux person by default is trying to keep up.

Take Ubuntu. It seems every version that comes out they fundamentally change something. Why!?

And which distro do you pick? Ask some people and it’s religious zealotry. But the one thing I cannot get is a zero bullshit answer.

So you end up with Ubuntu cos you read a few projects of people using it. You get used to one version of it, then another comes along and they’ve decided to change the window manager, which makes no sense to you because frankly you don’t care, you just want the icons to be in the same place and called the same things. But apparently the changes are good. Because, well, ponies.

We’ll also move things that lived in /var/etc in fedora to /usr/etc in ubuntu because someone hairy thinks it is more logical.

Use one distro and you know F-all about the others.

Oh and the little apps that change settings, again we’ll randomise these and where they live between versions and distros just for gigglez too.

You end up having to embrace these changes, like you would your dentist, because you’ve little choice but to weather the painful experience.

Oh and the various cockups that happen between versions because your bit of hardware gets a patch which breaks it. or it gets deprecated or it gets forgotten. But you need to know how to remake the kernel to get it working again.

I don’t like how my S4 doesn’t get updates to the latest Android. I don’t like how it’s locked out of the alternative firmwares because Samsung are assholes and won’t release drivers. I begrudgingly upgrade because people writing apps use the latest Android version to get some special widget which has F-all to do with my phone coping hardware-wise, just not running the right OS version.

But where it excels is by not exposing me to Linux. It’s in there somewhere but it’s irrelevant. Someone has tested all my hardware and it just works.

The whole Linux model is a royal PITA for a lot of people that have survived Windows torment and frankly just want things working.

This is why linux other than sandbox’d versions like macos and android is doomed for failure. By failure I mean never being mass adopted as a desktop OS.

The year of linux on the desktop will never happen because it’s such an utter pile of crap to deal with when SHTF. For most nonsavvy IT users, simply forget about it.

There is too much choice and 3245235 ways to break it ever so slightly differently.

And updates will break something on your system if you made a “wrong” config choice 4 years ago and forget about it.

But here comes systemd. I don’t understand it, but I see it’s fundamentally going to change my relationship with linux once more and for no good end user reasons. Just developers saying “it’s good for you”

Like when they change the GUI on eBay or on Android or xyz and say “it’s better” and ignore the 90% of users that tell them it’s dog shit.

A zero BS answer? None is really better, each has its own learning curve and it is whatever you have experience and familiarity with. I use Fedora, not because it is “better” (whatever that means), but because I have been using it before there was Fedora (Red Hat days) and key people I work with do Fedora and are resources when I get stumped. If you have Ubuntu resources, then go with Ubuntu. Windows has its own learning curve too, many people come to linux with years of Windows experience and wonder why they aren’t immediately up to speed.

As for systemd, it has never made me happy. Neither has Gnome 3, but at least there are alternatives, so I can blow it off.

” and wonder why they aren’t immediately up to speed.”

It’s more like they wonder “why can’t I just do XYZ?” such as, “I just installed a new hard drive – why can’t I just install my software on it?”, or, “Where’s the setup.exe? Why do I have to get this piece of software from the packet installer thingy? It’s not there!”, or simply, “Why does xyz not work? C’mon they said you don’t need to install any drivers – but my audio/video/GPU/special hardware just doesn’t appear anywhere.”.

Funny, I plugged a new spinning rust drive into my Windows 10 desktop just a few months back, and couldn’t install anything on it. I needed to go to the computer manager, then select drive management, and then format the sucker before Windows would even give it a drive letter. It would not show up in File Explorer in any location until I did that. And that is a management tool I usually only use when I need to check log files to see why a crash occurred!

But the process is something I’ve done so many times, now, on my own and other people’s PCs that I don’t have to look up “how to assign a drive letter” anymore. When I was running Gentoo, after about a year of just using Fluxbox as my window interface, I had picked up all of the same tricks and could track down whichever .conf file that needed a change when the hardware changed.

You can put the time in learning about the tool you are using, or you can complain that “this tool doesn’t work like the tool I know; therefore it sucks” until you are blue in the face. Doesn’t change the fact that someone using Linux for the last ten years could solve those problems without blinking. Because every piece of hardware needs drivers, and if you picked a distro without kernel detection enabled after install, or have a device with a newer USB VID/PID combo, or you want proprietary graphics drivers, you have to fix stuff.

“”But the process is something I’ve done so many times, now, on my own and other people’s PCs that I don’t have to look up “how to assign a drive letter” anymore. “”

But it’s been the same method since Windows 95. It’s in all the server OSes too.

Ubuntu and I’m sure other distros change the location and the software for doing this between versions!! But unlike Windows, there is no help guide to reference.

They also fail to install certain applications. Chicken and egg problems. How do you know what the application is called in order to install it ?

Take for example editing the bootloader in Ubuntu. Need to use grub cos that’s what I remember, nope, no longer using grub, using something else.

Windows’ strength is that it’s stuck to the same tools for like forever.

The only time I’ve really used Windows was when I won a Surface 2 tablet a few years ago. I’ve gone from RSDOS and Microware OS-9 on my Radio Shack Color Computer in 1984, to a Mac from late 1993 through mid-2001 when I moved to Linux.

I tried Debian briefly, and then stuck with Slackware all this time. It’s way easier to adapt than keep flitting to a different distribution, and certainly not jumping to whatever distribution is getting the hype now.

One of the problems with distributions like Ubuntu is that they try to target a specific set of users, mostly newcomers. They promote themselves, but any distribution pulls from the same kernel and application pools. The installer may differ, but the rest is generally a philosophy. The more traditional distributions are vast, so the user decides what to use. I have a choice of desktops, on the DVD. Derivative distributions, like Ubuntu, cherry pick from the foundation distribution, the selling point often to give the user less complication (or in other cases “for older hardware”, or whatever). Rarely does a distribution make modifications to the pools, though there are some options when compiling. So a small distribution intended to “run on old hardware” can’t speed up the software, it just chooses select apps that run faster or in less RAM than other apps. But with a foundation distribution, I can do the same thing, the choices are on the DVD. Hard drives have become so large that I might as well install everything, and just use what I want.

If I want “less bloat” or faster operation, then I need to dig and compile things to my hardware or some other need.

Systemd is a rare exception, since it doesn’t stand alone but needs other software to change. So the distribution has to decide, and the user has no choice. Hence Slackware and maybe some others have chosen “to be different” and not follow along. With time, that may cause other problems.

Michael

As a daily-driver desktop Linux user for the last 10+ years, I think I can safely say, Linux isn’t (and never will be) for non-savvy users. Open source software is a community, not a product, and the end-user of FOSS software is expected to learn, not merely to consume. Now, many distros have greatly lowered that bar, and I’m certainly grateful for that, but the freedoms that come with FOSS come at a cost. The cost is an educated userbase, and that is part of why I like Linux as a whole. The overall knowledge of the userbase is much higher than mass-market product-ified OSes like Windows.

What are the users learning? How to deal with the unfinished software that is Linux?? It’s like buying a crappy power saw that comes without guards and claiming the user is better off learning to use it safely.

Except you didn’t buy anything. You got a free power saw that does not include the guards.

It may not be as safe, but it can fit into more places.

Any OS is a tool, use the one that fits your needs.

@Torriem

Actually it’s only free if your time is ***WORTHLESS***!!!

Why is it people bitch about how little they earn, always claiming their time is worth more, yet they don’t see when their time is being stolen by some piece of useless software

@Hue

Sorry I can’t agree. I believe you are greatly biased by your own experience.

People don’t want to be forced to tinker under the hood of their computer just as much as they don’t want to be forced to tinker under the hood of their car.

Using FOSS has turned into this “must learn” situation because the quality of a lot of FOSS is so bad. I know a lot of people are going to be upset by this statement but that doesn’t make it any less true. The fact is that so many FOSS authors think that it is their right to leave things in a mess because “it’s free and you can fix it if you want to”. Some of them are incompetent wannabe programmers that don’t know any better. Some are arrogant a*holes that think they know it all. Some of them have learned a little bit of programming and have cobbled together something that helps them and kind of works 95% of the time, but it’s their baby and they won’t be told how to improve it. Then you get those that muscle in on an existing project and try to take it over without actually being any great shakes themselves. Oh then there are the ones that have this harebrained idea that is going to make them a lot of money if they can only get enough people to work on it for free.

So if the situation is so bad how come it continues? Because of people like Linus Torvalds. Now when he complains about systemd and its author people should really listen.

I agree that there’s a problem, but disagree about the cause. It’s not that the code quality is “bad” or that many programmers suffer from the A*hole disease. It’s that they are constantly churning out new, improved versions of things, and they don’t always interoperate.

To use the automobile analogy: imagine you had a car and every month or two it got a brand new, improved carburetor that installed itself. It runs fine, until that day when the mounting holes on the carburetor move a few millimeters, and it won’t fit anymore.

Whose fault is that? Maybe the carb designer’s: should not have moved the mounting holes. But maybe the new feature xyz just doesn’t fit with the old mounting holes. It’s up to the system distro to find all of these mis-matches.

Yes, they are “bugs”. However, you misunderstand the problem if you think they are always the fault of the programmers. We do not have any good, reliable tools that will flag issues like “carburetor A is no longer compatible with mounting bracket B”. We do have library versioning, we even have symbol versioning, and this catches some of the bugs. But it’s not automated, it’s optional, and there’s no agreed-on process other than test test test and hope for the best.

@linasv

“It’s that they are constantly churning out new, improved versions of things, and they don’t always interoperate.”…

“However, you misunderstand the problem, if you think they are always the fault of the programmers. We do not have any good, reliable tools that will flag issues”…

Respectfully…

No I really do understand how and why this happens but IS IT NOT the responsibility of the programmer to fix the faults in the current version before ditching it and moving on to the new improved incompatible version? Why is it that my software includes version information in the data files that it uses and is able to read data produced by old and new versions of the same software and other programmers can’t be bothered to even try? Apart from my brain what magical tools do I have that they do not?

How do you think we managed to write C programs before function prototypes and type-safe linking became available? Now people bitch if they can’t use an IDE that draws a big neon sign around a syntax error. I’m sorry but you know what they say about a bad workman and his tools.

In any case, trust me I have had to deal with the guts of several open source programs over the years and what I have found has been unbelievably bad. It’s really not surprising so much FOSS gets abandoned.

Friendly Regards

ospry

OK, yes, lots of code is badly written. This is not a FOSS issue, though: proprietary code bases are worse (in my experience, at least, having been paid to create/maintain proprietary code). Yes, programmers should maintain their own code, instead of wishing that someone else will (yes, this is where Poettering and Sievers have failed). But proprietary corporations fail at this too. And you can’t actually fix their broken code.

As to usability: when’s the last time you tried to use an Apple computer? Sure, it works great if all you do is surf the web. But if someone gives you some desktop-published magazine/diary/book, just try moving it around. Incompatibility, bugs, breakage, total non-portability and ultimately, dataloss (you have to export to PDF and then re-typeset everything. Is that a painful, time-wasting hack, or what?) So, if you are using Apple to do anything professional that *actually has to work*, it sucks bad, and Linux is a far superior choice. Apps actually work on Linux. But if you are just going to screw around and watch youtube and post to fb, Linux is just as good as Apple.

Ubuntu on the laptop is a charm. Systemd on servers with RAID and logical volumes and multiple ethernet cards and shared filesystems and overlay mounts, it’s a waking nightmare. Systemd is buggy, immature, incompletely-thought-out code.

I don’t know of anyone forcing people to use Linux. I see Linux more as a haven for people who know or want to know more about how a computer works, because it’s a system that lets you crack open the hood and take a look.

I’m perfectly willing to handle the occasional bug to avoid the forced hand-holding of Microsoft and Apple. As for the bugs, I have encountered more frustrating bugs/”features” in Windows than I ever have in Linux.

To use your car analogy, using Windows for me is like leasing a car that gets towed by the manufacturer once a week for maintenance. People who say Linux requires too much knowledge to use probably leave driver management to PC optimizing freeware/Trojans and pay their mechanics for a headlight fluid change with every checkup.

@Travis

“I don’t know of anyone forcing people to use Linux.”…

That’s right nobody forces you to use it but once you do they dictate how you use it. If you don’t keep updating it you’ll have problems somewhere down the line. And if you do keep updating you’ll still have problems.

“I see Linux more as a haven for people who know or want to know more about how a computer works, because it’s a system that lets you crack open the hood and take a look.”…

Good luck with that – keep watching as it changes before your very eyes. What you learn today will probably be obsolete in six months when some new fancy bug ridden alternative elbows its way in.

“To use your car analogy, using Windows for me is like leasing a car that gets towed by the manufacturer once a week for maintenance. People who say Linux requires too much knowledge to use probably leave driver management to PC optimizing freeware/Trojans and pay their mechanics for a headlight fluid change with every checkup.”…

Linux used to be great. I used to love Linux. It used to let me use my machine the way I needed to. I used to use it to run dedicated servers hooked directly to the net on big pipes. I used to use it to develop real software without winblows crap getting in the way. Man I was the number one Linux fan. But it’s really gone to shit over the last few years. It doesn’t matter how careful you are to keep the a*hole software off your machine, some determined a*hole will find a way to screw you. Take ext4 for example (the filesystem that is). I decided to stick with ext3 for a while after ext4 moved out of the experimental stage and into the production stage. A couple of years down the road I was still using ext3 (saw no need to switch) and I heard some horror stories which revolved around the main dev’s views of how certain conditions should be handled, which happened to go against everything that normal admins (with a great deal of experience) expected and required. So since ext3 had proven itself rock solid over the past several years and given the ext4 revelations I resolved not to bother using ext4. Imagine my surprise when several years later (a few months ago) I start seeing a great number of ext4 related processes running on a sick machine which was supposed to be using ext3. After a careful examination I concluded that the machine was indeed configured to use ext3. All the filesystems were built and mounted as ext3. While researching this mystery on the net I discovered that the ext3 drivers had been dropped from Linux and their job had been taken over by the ext4 drivers. Somehow I and a great many other people missed the memo. There are other annoying problems that I’ve encountered due to a*holes breaking stuff using spooky action at a distance but if this one story isn’t enough then you really don’t get it and I seriously doubt any number will make any difference.

And by the way, when you get to a certain level, you don’t want to waste your time playing “computers”, you want to use them as tools to do serious work.

“Open source software is a community, not a product, and the end-user of FOSS software is expected to learn, not merely to consume.”

Wow. Just wow. The “FOSS Community” everyone.

This is why everything is a damn mess, this stupid mentality.

Lazy developers punting their shoddy code on others and expecting them to figure it out.

If you can’t put out Good Code – DON’T make excuses. Stop it.

This same mentality is like saying “hey, hey you stupid doodoohead, stop buying cars, build it yourself, I did!” and pointing to some rods with wheels and a stool on it and expecting them to take you seriously because “it can get me from A to B EZ PZ!”

This is why Linux will never be a desktop OS – this dumbass mentality of “lol u r too dumb u need to get bigger learned” is the STINK surrounding the entire community.

Not to mention the extreme elitism that is sadly so pervasive as well.

Note that this is coming from a person (me) that thinks there should be a licence to be able to use the internet, but I still think FOSS developers should be held to even higher standards because they ARE (supposedly) smarter.

I’ve been programming most of my life. Plenty of my code has been a mess, but not once have I made any code public that was a mess. Not once. Never will. I have code that would have a relative stroke if you entered wrong data because I prefer speed over error handling in most of those cases. If I did release it, I would have a wrapper written on top of it that does the error handling, I still wouldn’t put the error handling in to the main, speedy core of it because of inexperience.

But generic tools should never be like that. Ever. It’s lazy and it gives a bad impression.

You’d be fired if you gave the typical FOSS project to any company to solve a problem. Most of it is literal trash. It sucks. It could be better, but “it’s good enough since others are also super intelligent like me and know everything ever about this tiny library” or some other nonsense excuses.

Nobody gives a single damn about your flavour of the week libraries. Just make Good Code.

Linux is not for you … you must calm down first. It’s not a kind of machine that can be fixed with a hammer.

You better stick with Microsoft Bob.

– Well put across the board. The rate of forced obsolescence and change is ridiculous. I also had an S4 until recently, which worked fine, until developers of enough apps I use decided to mandate updates (‘you must upgrade’ upon opening app – then find ‘upgrade’ is not compatible) and remove support for my OS. You want to keep using that WiFi thermostat you bought, which worked fine with your phone when you bought it? Sorry, we’re going to mandate you get a new phone to do so now, because someone still using a 5-year old perfectly functioning device is preposterous. Piss off.

– And yes, I love linux for its stability, but upgrades and differences between flavors are a major PITA. It’d be nice to be able to upgrade or jump between versions without learning where the heck everything went, and trying to remember which flavor (or even release) has what where. I’d like to just upgrade a production linux VM, without needing to spend 20 hours learning where someone thought it’d be ‘better’ to move a config file to, or new format thereof, etc, and risk updates breaking things. Thank goodness for VM snapshots and instant restores I guess…

– Like developers on the ‘lets rewrite it’ bandwagon. Guess what – that development style was perfectly acceptable 2 years ago – and this rewrite that will ‘pay for itself in the long run’ doesn’t really pay for itself, and your rewrite to latest trend/fad will also be outdated to the latest trends in another 2 years, and someone else will want to rewrite it. Could have just left well enough alone that was working fine (and you quite possibly introduced new bugs or broke while refactoring). Sorry, but sometimes ‘if it ain’t broke, don’t fix it’ has some truth to it… If you think your new way is better, is it really better enough to force countless people to spend hours to go through the learning curve of said new way? If so, fine, otherwise keep your ego of your newfangled better way in your shorts. /rant :-)

Right on!

Wow. For the longest time I was sure that go suck a penguin’s comment was a post in support of systemd and general hatred for Linux

I was very wrong… Forgive me.

It is true. About ebay, android or whatever changing their GUI completely. And just kicking most of their userbase in the teeth by doing so.

And at least for this reason, systemd is a total abomination. Because it strives to change EVERYTHING about a Linux system. And there is a massive chunk of users who are NOT happy with the changes. And weren’t happy from the start.

P. S. Most UI overhauls verily do look like utter dogshit.

Android’s Linux roots are nice but I hate developing Android apps, the Android SDK is a mess. I wish Ubuntu Phone took off instead. ChromeOS is working out pretty nice though.

Android software development and Android SDK are probably THE worst pieces of software bloat WTFs ever made.

What, you mean application lifecycle states don’t just roll off your tongue? And fragments, what a beautiful way to improve by adding another layer of incoherent states on top of everything.

Anything Google – the biggest ad company on Earth touches I want nothing to do with. Meta and Google just want to suck every piece of data from you and your family, bundle them up and sell them to Ear wax advertisers. These two companies are total dog shit.

Not even close, OSX doesn’t have swappable desktop environments, and I can’t stand the one they give you, and there’s no built in package management.

Systemd so far as I know only has a few real issues, and the biggest only happens if you use a systemd service to run something as a user other than root and mess up the config. A big deal, but I’m still using it.

GNOME is kind of awful trash, but KDE is great, and cinnamon et al. are decent.

~/.ssh can be overlaid from an unencrypted location, or the location of the key file can be specified in /etc/ssh/sshd_config with, say, AuthorizedKeysFile.

Also openssh supports loading keys through other means

Yes, but that breaks the relationship between the home directory and the key information that protects it.

Ideally, the home directory container should be encrypted by something related to the ssh key or be some metadata attached to the home directory.

Semi-stupid question: who creates the artwork that is used at times for the postings? These are really creative and well done.

An artist by the name of Joe Kim

Thanks! I want the whole comic-book now! and the origin story! and open-source tea-party merchandise! :-D

Slackware still says no to systemd. Though it’s now been three years since the last full release. I’m thinking Patrick was burned too badly from the company handling DVD sales, so he just keeps updating without an actual new release. So it becomes a rolling release, except nobody has decided to announce that.

Michael

Dang! If I had known this I might have switched to Slackware years ago!

Came here to say this myself but noticed you had beat me to it. Running Slackware since ~2002/3

Slackware was my first distro — still holds a warm place in my heart for its stubborn retro stance. You really don’t need a GUI during install, for instance, and a single-user or singly deployed system has no need for systemd either. Tarballs are just fine. That said, I don’t think I’ve actually had a Slackware install since 1998…

I do understand why other distros have chosen administrative convenience over simplicity and UNIXy idealism.

Rolling release is awesome, when done right. (Arch 4 Lyfe!)

I’ve got to ask you about….the Ken Johnson action figure. Where do you get those?

That’s Ken *Thompson*, dammit!

Send five wrappers from Jolt Cola to….

I enjoyed the Perl-loving action-figure statement so much, reading the archive from the author now ;-)

I can’t even read this article in its entirety, because I’m still scouring the internet for these action figures. The world NEEDS these.

Computer science? That itself is an issue as a CS degree (which I do hold, so I’m speaking first hand) is really more about computation and theory rather than software engineering, and many “best practices” from the 70’s, 80’s and even the 90’s before we had the incredible tools at our disposal today are antiquated. Modularity, reuse, and having an overall framework/architecture are key to modern software maintainability. One tool one job was great when your maybe 10’s of thousands of lines programs took 10 minutes to compile, but we work on 10’s of millions of lines codebases now. 1 tool 1 job doesn’t fit. The glue logic between them is an absolute nightmare. I watched this when I developed a very nice multithreaded data flow model for a job I worked, which though it mostly worked, it still had a few bugs (1 developer, 250K lines of code, 6 months, even the best architecture/framework development will have bugs!), so they decided to hire someone else to make “one tool one job” “small utilities” to do what I did. Initially, it seemed to work, but then when you needed this tool to feed that one, but not when event x happens, then it needs to feed tool y, and tool z needs to be able to take input from tools a, b, and c, suddenly you’re writing temp files, temp file converters, and getting everything to play nice is an unmaintainable nightmare of spaghetti code. It took longer to develop, and did far less than my work. The old way is the old way for a reason.

To develop on modern systems you need to figure out which architectural design pattern your problem fits (Your problem is NOT original!), find some middleware that deals with the vast majority of the framework you need to make to fit that design pattern, and pull it all together. Then develop the modules that fit in the framework. So this looks less to me like going against software engineering, and more like going against antiquated practices for smaller codebases with less functionality and less reuse.

I don’t see why you blame pragma because somebody made a bad design and even worse implementation. I assure you when you have framework with million lines of embedded code multiple architectures and OS variants and legacy implementation burden from 20 year guaranteed lifetime to support, monolithic is not the easiest type of implementation to debug.

systemd is not all that bad (though it reminds me of the windows startup process). In a way the very existence of systemd is proof that the UNIX way is still sound. The fact that you can replace the root of the user space process tree with something so different and get a largely functionally equivalent system really says something.

I think many people hold to the UNIX philosophy _because_ it predates the evils of cloud and *-as-a-service, and because its many independent small tools are easily augmented, replaced, or changed out based on your needs.

As far as I can tell *-as-a-service is something that commercial software companies do when their software reaches a level of maturity that precludes an ongoing revenue stream from customers buying new versions for the features. Finance people love being able to cut out capex and replace it with opex but I don’t like the sort of world that results from letting bean counters run the show. Same with the cloud: do you really think letting corporations rent their critical infrastructure by the hour is a good idea (all opex no capex, thus no skin in the game and no inertia, and when they flail around wildly with this newfound freedom it’s employees and users who get hurt but shareholders are isolated)?

Hm. I, for one, could perfectly well live in the “Rosedale home” if it wasn’t so close to the road and if it was in reasonable condition inside. I’m not particularly picky when it comes to looks. Besides, one can always roll one’s sleeves up and do something about the paint if it bothers one.

It’s haunted.

(oh, wait, that shouldn’t bother a werecat)

B^)

A nice little chat with the ghost to tell you its problem will solve this. Unless it’s a Fomora, these vile beings of Calash, the dogs call him the Wyrm, just needs to be removed by any means. Preferably with a claive.

Why resist a change like this?

First, because the systemd team regularly fails to understand the diverse way Linux is used in the real world. You hit on SSH issues, but what about shared storage home directories? Jobs executing on a user’s behalf after logout (a facility that systemd *already* disrupts without special configuration)? Custom PAM extensions for complex environments? What about usages that neither I nor the systemd team have thought of? Every time systemd swings their hammer, I end up paying for it with a broken workflow next time I upgrade distros.

Second, because the concept of “one tool, one job” isn’t just marketing. It’s a hard-learned lesson about avoiding the difficulty in adapting big, monolithic systems. Why was UNIX easily able to integrate innovations like encrypted home directories, network authentication, and SSH key login in the first place? Because the underlying system was flexible enough to allow such integration. Part of the objection to systemd is the hubris of thinking that *this* time, we know enough to build it right, without easy extensibility and compatibility; that we no longer need the lessons of the past. This is a mistake.

Third, the attack surface of systemd in general and this change in particular is very troubling. It again balloons the amount of binary code running as root, much of it under PID 1. All things being equal, I’m not a fan of that, and systemd doesn’t have a stellar track record.

I’m all for questioning how we do things and making changes where needed, and systemd has done much good, but in their arrogance, they’ve broken a lot along the way. The thought of that group redefining what ~ means puts a knot in my stomach.

I could not agree more. I would also add that as much as I like JSON, it is a nightmare to edit using non-pty command line tools, which you very well might find yourself doing if you break your authentication. One of the wonderful things about passwd and shadow is that they are easy to edit when things break.
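To make that comparison a bit more concrete, here is a minimal Python sketch. It assumes the classic seven-field, colon-separated passwd layout; the JSON field names are purely illustrative and not taken from homed’s actual record format. The point is only that a passwd-style line can be checked field by field, while a single stray comma makes a whole JSON document unparseable.

```python
import json

# Classic passwd layout: seven colon-separated fields per line.
PASSWD_FIELDS = ("name", "password", "uid", "gid", "gecos", "home", "shell")


def parse_passwd_line(line):
    """Parse one passwd-style line into a dict, converting uid/gid to int."""
    parts = line.rstrip("\n").split(":")
    if len(parts) != len(PASSWD_FIELDS):
        raise ValueError(f"expected {len(PASSWD_FIELDS)} fields, got {len(parts)}")
    record = dict(zip(PASSWD_FIELDS, parts))
    record["uid"] = int(record["uid"])
    record["gid"] = int(record["gid"])
    return record


def json_is_valid(text):
    """Return True if text parses as JSON, False otherwise."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


# Illustrative records only; the JSON keys are made up for this example.
PASSWD_LINE = "alice:x:1000:1000:Alice:/home/alice:/bin/bash"
GOOD_JSON = '{"name": "alice", "uid": 1000, "home": "/home/alice"}'
BROKEN_JSON = '{"name": "alice", "uid": 1000, "home": "/home/alice",}'  # stray comma
```

With these definitions, `parse_passwd_line(PASSWD_LINE)` yields a usable record, `json_is_valid(GOOD_JSON)` is true, and `json_is_valid(BROKEN_JSON)` is false: one trailing comma typed in a rescue shell is enough to invalidate the whole record.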

As an atheist: Amen! :)

I use void linux+i3wm+vim, and am looking into guix linux atm, b/c I do not like soviet-style top-down “it’s good for you” changes, the saying goes “love it, leave it or change it” and void and guix let me do this.

I can’t second it more.

I run average network (60 machines) with home directories on shared storage and centralised user management.

Do not start me on how many times things broke. Last one, after switch to systemd was…. autofs ordering/shutdown bug, which is unresolved in Debian for the last 6 years.

In short – if autofs doesn’t unmount the /home directory by the time shutdown happens, machines are stuck in “reached target shutdown” state, and not powered off.

I’ll third that! Systemd is far too focused on ‘real users’ and not on actual use cases. Even something as simple as really configuring logging is a pain or not possible. In practice it’s also hard to debug systemd properly since there’s so much interdependence.

Breaking ssh in any way is terrifying from the admin point of view. Broken backups… Broken maintenance tasks… Broken security scanning… Centralized user management isn’t actually _that_ hard if you commit to going all the way instead of trying to do it with baling wire and bubble gum, so I don’t see much benefit from that either. Making users fully portable in that way sounds like a great place to hide a hard-to-detect rootkit to me.

I’m not against change, and some of the points in the article sound like they could be useful in theory. I just get the very uncomfortable feeling that systemd has built a big security hole with flashing neon signs saying “Pwn me please!” They’re just waiting for someone to find the switch for the signs.

I am simply not qualified to discuss the technical ins and outs of systemd, but I get the feeling that it opens up vulnerabilities which could bite hard at some point, the number one on distrowatch at present is MX which so far has not adopted systemd, but it may get harder to avoid it, I am posting this from DevuanDog Beowulf.

Agreed.

One config/tool for each job may make initial setup of even simpler systems more complex but it lets the admin choose all they need. I can see the appeal of unifying large chunks but in practice it is never going to be the best choice and starts making it into another clunky insecure windows like environment. Likely means rookie admins can’t leave gaping holes through ignorance as easily, but that should not be a problem – the rookies should be using a distro that defaults to a reasonably locked down setup. And when even the competent admins are at the mercy of one monolith functioning as intended…

That said I think every one of the systems I’m using daily is now systemd and only moderate annoyance caused by it. (When the distro goes that way it’s more expedient to tag along. I keep meaning to sort out MY own choices for this but who has the time to do all they want?)

We’re seemingly asked to embrace that while it wasn’t broken and didn’t need fixing, it’s getting “fixed” anyway and oh, by the way, sorry, but we’ve broken SSH.

Once again, I have to wonder why we’re permitting these fundamental changes dedicated to the desktop use case when Linux’s primary utility is on the server. Don’t misunderstand me; I use Linux for both myself and consider them both vital and wonderful. But even as a mid-beard, mostly desktop guy, I SSH into stuff constantly and I don’t want to leave a user session logged in.

They’ll fix it eventually..? How bout they keep coding until current functionality is at least matched instead?

I’m totally mystified why Poettering is being allowed to do all he’s doing.

It is way easier to change something than to improve something.

So please “change” the “improvement”

More than that, I’m mystified why he’s still allowed hands. He has the faecal-midas touch.

I’ll be taking that phrase, thank you

If you lived in the server realm, systemd actually has a lot going for it in that it actually makes things that eventually become extremely complicated… simpler.

I’ve been writing shell/perl scripts to solve these same problems over, and over, and over again… for the last 20 years now. I don’t like change. I know everything there is to know… about what I know. However, I have come to realise *that* is my problem and this is not a failing of systemd itself.

Systemd is a decent solution for the complicated problem of dependency management in a logical/extensible/future proof way.

That all being said, the side step they would need to take into the realm of PAM for home directory/ssh management does make me a bit nervous.

This affects way more than just ssh. Crontabs, at scripts, loading unique user profiles for database/application startup stuff… anything we currently just dump into a user home directory that is intended to run without the user currently logged in would need to be carefully thought out and possibly excluded from this brave new world.

I don’t think anyone objects to the idea that systemd makes complex things easier.

The problem is that it makes simple things harder. Try explaining how to make a RasPi set its clock from an RTC, or how to make the system run a Python script when it boots, to someone who started using Linux a month ago.

Yes, systemd is a solid framework for managing a server farm that runs virtual systems in Docker containers, and needs a robust load sharing and failover strategy.

No, systemd does not make it easier to create an embedded test system that runs maybe four critical processes, and has to operate in, say, an assembly house fabricating a batch of boards for you.

At the point in history where a full Linux computer can be purchased for $5, and small embedded systems promise to outnumber desktop computers by at least an order of magnitude, the people who manage Linux distros have chosen to say, “hey, let’s turn Linux into VMS!”
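For readers wondering what the “run a Python script when it boots” case mentioned above involves, here is a rough Python sketch that renders a minimal service unit as text. The directives used (Description, Type, ExecStart, WantedBy) are standard systemd unit options; the script path, interpreter path, and unit description are assumptions made up for this example, and a real setup may well need more (a User= line, ordering against the network, and so on).

```python
def render_boot_unit(script_path, description, python="/usr/bin/python3"):
    """Return the text of a minimal oneshot service unit that runs a script at boot.

    script_path and python are hypothetical paths supplied by the caller.
    """
    lines = [
        "[Unit]",
        f"Description={description}",
        "",
        "[Service]",
        "Type=oneshot",
        f"ExecStart={python} {script_path}",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
    ]
    return "\n".join(lines) + "\n"


# Example with made-up paths; the resulting text would typically be saved as
# something like /etc/systemd/system/hello.service and enabled with systemctl.
UNIT_TEXT = render_boot_unit("/home/pi/hello.py", "Run hello.py at boot")
```

Whether a ten-line unit file plus an enable step counts as easier or harder than a line in rc.local is exactly the disagreement in this thread; the sketch only shows the moving parts.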

The encrypted home idea and its incompatibilities (cron, ssh etc.) aren’t new at all. A faculty at my university used to have homes stored on AFS with Kerberos authentication. It had exactly the same issue with cron (files weren’t available unless there was a valid Kerberos ticket). Of course SSH can authenticate using Kerberos so authorized_keys weren’t required to provide strong authentication. Keeping cached copies of users’ authorized SSH keys extracted from their ~/.identity seems to be not a bad solution.

Well written article and nice artwork.

You pretty much summed up my experience with systemd. Having worked through dependency hell myself before, systemd comes with a certain elegance at solving those problems. I still don’t like it, but I do understand how/why it came to be. I have made peace. I’m sure I can roll with this new piece, as long as that elegance is maintained.

But, you can pry my vi(m) from my cold dead fingers.

BTW: WhatsApp is no longer bound to FreeBSD :)

When did that happen, source?

Like it or not, SystemD and the “movement” it is part of has made Linux far more end-user and serious medium size project friendly. I stopped using Linux for a time in the mid naughties because it was painful to use and maintain efficiently. Also projects would break on trivial issues.

A couple of years ago, I started to play with Linux again and mucking about with it made me realize that this environment was not the usual type of environment that tried to fight me at every point, but one that was a stable, reliable, portable and scalable one. A lot of that is thanks to SystemD and the philosophies behind that.

In the end, I do not think the core concept of everything needs to do one thing very well works on the entire spectrum of use-cases.

I truly believe that SystemD has saved desktop Linux as it stands.

I started using (Slackware) Linux in the mid naughts because it was easier to use and maintain more efficiently than Windows or Mac.

“In the end, I do not think the core concept of everything needs to do one thing very well works on the entire spectrum of use-cases.” I appreciate the insight that it took to write this so directly.

You’re dead wrong of course :P

Correlation does not imply causation. There’s nothing in systemd that would have impacted the end user experience to the degree you’re talking about.

Desktop Linux is more refined now than it was a decade ago because software generally improves with time. There’s not one single service/program that is responsible.

“There’s not one single service/program that is responsible.”

Which I stated. It is a mentality, not a single thing, but I believe SystemD is part of this.

Nah. Systemd is a symptom of a developer that worships a closed ecosystem (MacOSX) who works for a company that wants to have more control of the ecosystem (IBM/Redhat).

I was sad and disappointed when Redhat et al were able to push systemd because of their dominance of the GNOME project. The gray beards anticipated all of the horseshit that would come with systemd, (broken screen, broken logs, dns exploits, etc ), but the software world is often about change for the sake of change, not really for improvement.

Systemd makes systems more fragile, more difficult to recover from various failure scenarios, and more difficult to configure for many scenarios (syslog daemons or log aggregators [graylog] come immediately to mind.)

It’s a pox, but sadly it’s a pox that we will be dealing with for another 5-7yrs before someone with enough money decides that it’s shit and it should be a more independently modular init system.

For now, my NixOS and Arch systems eschew systemd.

Is abandoning five decades of documentation of slowly changing features in favor of rapidly replacing every part of the system a great mentality for improving usability?

Chrome OS has saved desktop linux.

– frequent updates without regressions

– proof this works in extreme circumstances: swift deployment of Spectre mitigations

– compartmentalized state and large signed read-only partitions

– high-quality hardware support across many devices with a uniform tree

– open source BIOS

– gentoo-based build system

– total rewrite of crappy portions: NetworkManager (flimflam) and pulseaudio (cras)

– wayland

– Crostini: Qubes-like KVM containerized app model, including plan9-based and FUSE-based safe filesystem APIs

Chrome OS rejected systemd because it brought nothing concrete to the table but complexity. They use systemd-journald for early logs but nothing else from the lennart2020 make unix great again clown crew.

Chrome OS has their own implementation of ecryptfs-based homes called cryptohome. They don’t use systemd. There are specific reasons to use ecryptfs instead of LUKS like lack of a MAC and difficulty deleting data without decrypting it.

systemd guys are not serious people. Every other time anyone else tries to do something that systemd does, the implementation is higher quality, more secure, and less hated. SMF, launchd, containers in libvirt / LXC tools, encryption in Chrome OS, yellow pages. This is not about resistance to change but a visceral disgusted reaction to low quality poorly productionized needlessly complex code that is borne out as valid by the record of security bugs. Changes as deep as systemd have routinely been celebrated in Unix, even going back to the 90s, when they meet the quality bar. People are confused about this because there is nearby “linux desktop” code of lower quality, but none of that other code is running in such a critical spot where it cannot be ignored by people doing simpler non-desktop things, and little of that low quality linux desktop code exists in for example Chrome OS.

It’s systemd, like any other daemon. Please spell it correctly.

Between “mid nighties” and “couple years ago” there is around 20 years of development – are you sure that during this time only startup process was improved and that it was the major game changer?

I do have one question with the statement, we don’t need one tool for one job anymore. OK sure PAM is a nightmare and there’s too much clutter there, but where do you draw the line? If Systemd should gobble up $HOME then why not let it gobble up my repo managers, or my bash interface? What is the argument for why these things should be gobbled but these others shouldn’t? I mean I’ve written Linux Kernel drivers and that is absolutely one tool for one job because shockingly the registers for my Intel NIC are not the same for my NetGear NIC. Yes there are pieces that are shared (NAPI) because they share common ground but objectively they cannot all be gobbled into one super thing unless you bury everything in a million config options. At that point what makes the super mega config file different from just different files to begin with?

I’m not against the idea of handing more to systemd. I think it’s a pain in the A$$ sometimes but everyone fights with Linux on occasion. I just want justification for why this goes in systemd and this doesn’t besides the argument, “one tool for one job is old and as we all know old is bad”. Let’s discuss why it’s bad, make an argument not a statement. Sometimes old things are good because they came from a place built on mistakes that we should not repeat instead of blindly running forward through silicon valley reinvention of phrenology https://www.faception.com/

At first, systemd copied the launchd system in Apple’s OSX, where processes/daemons were started only on first connection to their port. This is very effective to solve dependencies and parallel starting and now, thanks to this change, the linux boot is very fast (easier to set up than upstart).

There’s nothing that prevents doing the same for user’s home.

What’s missing from a functional point of view is a tool like sudo but for your data. You want to allow some binary/process to access your data (like SSH as you’ve written, but also your backup process, and any other server process).

This can be done easily like the current webstack is done, you’d call a command like: systemhomectl --generate-token

and store this token in your user’s JSON file. Then you’d go to the service file for the process and add the generated token here. As soon as the process tries to access the “home” directory, systemd will spot the access (just like autofs), check the service’s file, find the token, assert the token exists in the user’s home and if it does, mount the user’s home and resume the access. If the service is a forking service (like SSH), then it can also unmount upon child’s exiting. If it’s a permanent process, then it’s trickier and that would require some inotify tracking (but it’s still doable) or cooperation from the permanent process.
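The token check proposed above could look roughly like the following Python sketch. To be clear, nothing here exists in systemd: the token generator, the "serviceTokens" field in the user record, and the function names are all hypothetical, and the actual mounting step is left out entirely. It only illustrates the decision “does this service carry a token the user has approved?”.

```python
import hmac
import secrets


def generate_token():
    """Create a random token a user could hand to a service (hypothetical)."""
    return secrets.token_hex(16)


def may_unlock_home(service_token, user_record):
    """Return True if the service's token is listed in the user's record.

    "serviceTokens" is a made-up field name for this sketch. Tokens are
    compared in constant time to avoid leaking how much of a guess matched.
    """
    if not service_token:
        return False
    candidate = service_token.encode("utf-8")
    approved = user_record.get("serviceTokens", [])
    return any(
        hmac.compare_digest(candidate, token.encode("utf-8"))
        for token in approved
    )


# Usage: the user approves a backup job by storing its token in their record.
backup_token = generate_token()
record = {"userName": "alice", "serviceTokens": [backup_token]}
```

Here `may_unlock_home(backup_token, record)` is true, while a service presenting any other token, or none at all, is refused. The harder parts of the proposal (spotting the access, mounting, and unmounting when the service is done) are outside what this sketch covers.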

Systemd is a wonderful operating system, it only needs a good init system.

The more I learn about systemd, more inclined I am to switch to BSD or systemd-free Linux distros.

Mmmm… so I can deduce from your article that the next piece of the puzzle will be…

systemd-sshd!!!!!!!

:-D

don’t be silly it’s….

SUPER-SYSTEMd

then will come….

SUPER-SUPER-SYSTEMd

All your RAM are belong to us!

Durn whippersnappers!

Too lazy to rebuild a kernel the same way we did it,

with a clay tablet and a reed stylus!.

clay is for pussy’s, we did it with fingernails in granite.

I admit that systemd *works*, and solves several problems with older init systems.

However, I can’t help but imagine that in 20 years everyone will be moaning about how impossible it is to keep the “systemd monstrosity” up-to-date, because everything depends on everything else and trying to modify one part breaks the rest.

Maybe. But then we’ll have 20 years more computer science to help us figure out another way. We’re still in the early days of the computer.

No, we aren’t. Computer science has been a thing for going on 80 years now. We have a pretty solid handle on it.

I’m reading “No, we aren’t. Yes we are. I don’t understand waaaa”

If I wanted an OS with a Registry, I’d be running Windows. Systemd needs to die.

Linux has had “a registry” all along, it’s just in a slightly different format now.

Oh really? Please explain.

All of /etc and the scattered files with different formats in home directories and hidden in other obscure locations. Just because some of it was easier to read with cat doesn’t mean it’s not a registry.

Please tell us which one.

Some years ago I noticed Gnome running a Corba server on my desktop and gobbling lots of RAM. I said something to the effect of, “wow Linux is just as complex as Windows now”.

“Technically, each tool and component in systemd is still shipped as its own executable, ”

Huh?

So, somewhere in the Mint distribution is the ability to run a “system console” in a term window in the corner of the screen?

How?

And no, cat dmesg, or top is not the same thing.

Next up there’ll be a service where you can check which distro uses homed. Let’s call it haveibeenh0med dot com…

Homed.sux?

I’m with the author – I’m sick of all the people who claim that System D creates more problems than it solves.

Too many people seem to believe that Microsoft funded and promoted Lennart’s work behind the scenes via Redhat.

Too many people believe that Lennart is a tool for the people behind the scenes working on the inevitable slide into a closed Linux.

Too many people believe that the GPL license leaves only one route for chaining users down, and that route consists of making it more complex and opaque to understand what is going on in your system. They say that if anything, it makes it more painful to maintain, debug and develop software. And that it is making room to slide in external code to spy on you that almost nobody will detect. And they complain very loudly that it will only get worse.

In the end, they say, systemd (and its ilk, such as udev) will destroy the last vestiges of what was once an open system.

However, worrying about this is pointless. Nobody cares about the point behind GNU/Linux. Stallman doesn’t photograph well, and he’s pedantic. Cox is a cat loving nut-job on a tiny island. And all those KRT old guys and countless others will never appear on dancing with the stars, nor make anyone say “I want to be like them!”

Those old guys, the ones who came up with these designs (and surely couldn’t understand anything about the foundations of CS) created a defective ecosystem right from the start. Oh, sure, four or five decades of improvements on a paradigm that has delivered no more meaningful results than COBOL or nand gates. Pish Tosh, I say!

We don’t have to respect them, or what they did, any more than we do Babbage, Einstein, Turing, or all those old dead guys who wrote the constitution, or the ones that started the EFF, or even the nut-jobs who think that the right to repair means anything. Screw those guys. They were yesterday, and yesterday’s gone!

So I say, let’s get on the progressive bandwagon. It’s time to wipe away this old doddering nonsense once and for all. We need to promote Lennart and system-D to all the nay-sayers and luddites who think that old concepts like freedom and access matter. And let’s make sure that WE TOO knock all these old bearded guys off their pedestals and turn the future of linux over to people like Lennart – people who know how to get things done in the face of other people’s inability to think, act and reason. And that’s why I want to propose the following:

I want to be the first to suggest that Lennart run for president in 2020!

I think we’d all agree that what’s good for Redhat (and Microsoft) is good for America!

Having seen what he’s done for Linux, imagine what he could do for our government! Many in the computing world believe he brings the same vibe to Linux that Kennedy brought to the nation in 1960. Large numbers of Linux admins, IT people and system programmers would love to see him get the kind of recognition that he deserves.

So get off my lawn! I’m gonna put a “Vote for Lennart” sign over there right where you’re standing.

I’m SO happy there is a pond between us…

If that’s what it takes to stop Lennart from creating even more mess, then yes, I’d gladly vote for him!

This whole project is one abstraction layer too many. Had so many problems due to it, so many simple things that can’t be done any more … Really tired of it. Only a JSON away to fsck-ing my brain. I should be happy it’s not XML or what? What a pile of crap!

Nice bait

You make a fundamental error when you write the above. It’s not about “belief”. It’s about the lost hours and days and hair-tearing of trying to make a system boot again, after some OS upgrade. Copying cryptic error messages onto pieces of paper so that you can run them over to another machine that has a working web browser. Hoping that they don’t scroll off the screen before you’re done copying them. Trying to figure out how to edit some config file, when the system hasn’t yet booted to the point of mounting a file system, or allowing you to run a text editor. After you do that 3 or 4 or 5 times in the space of a few months, you too will be cursing systemd.

Stability is key. I want my computers to boot. I don’t want to be a rocket surgeon. I don’t want to live in trembling perma-fear of an accidental reboot, knowing full well that I have a 50-50 chance it won’t work after the reboot. It’s an insane way to live. If/when systemd “just plain works” out of the box, that will be great! But that day still seems to be far off in the future. FIX IT SO THAT IT WORKS!

;)

I’ve had almost only pain with SystemD, mainly due to its monolithic nature and that it seems like they’re going the Microsoft route with “we know better than the user” design, combined with a proprietary way of debugging it.

Honestly, if you threw it in win10 it’d fit like a hand in a glove given they’re both similar kinds of looming disasters, bad practices, forced design decisions and mollycoddling even when you don’t want to be.

Sounds more secure if anything to me, because home directories are no longer “just another directory” in “some filesystem somewhere who knows only the kernel on any given day”

One has to wonder about the soul, character, and humanity of seemingly brilliant people such as Kay Sievers and Poettering and Harald Hoyer. Yes, the sbin,bin,lib file structure was messy, and they were probably doing the Linux and BSD communities a favor with the merge to the usr tree. But their character problems emerge when you look at their response to criticisms of their pulseaudio, avahi, and systemd projects. They hid behind and used the corporate power of their employer (Red Hat), said that you were stupid if you could not agree with ALL of their design choices, blamed Torvalds for all of the ills of all Linux distributions, and insisted that only they can know what is best for us plebeians.

And I used to respect German engineering…

As all three of a server developer, desktop user, and mobile user of Linux, I’ve personally found systemd in general to be far superior to the available alternatives I have any experience with in all three scenarios. It’s not perfect and can be a pain for sure but SysV either couldn’t do or delegated to a hairball of distro-specific services most of the things that SystemD is a pain with.

I have very little experience with BSD to be fair, but I wonder what circle of Hell whoever came up with the MacOS launchctl, diskutil, and the entire Android developer ecosystem crawled out of, and through which portal, because we might need to send Doomguy there.

I always stop reading when I run into the word “seamlessly”. A bad joke and the most annoying word in the English language.

But ignoring that. There are many questionable “typical use” cases that are assumed in this new systemd design. Here is a short list:

1 – That typical use is a real human locally logged in. This is rarely the case for me on most linux systems I work with.

2 – That a user wants their home directory encrypted. Not me.

3 – That users log out. I rarely do, the session I am on now has been running for weeks.

So this new design seems to be driven by use cases that don’t apply to me. All change will cause disruption. A designer naturally feels that their “good ideas” will outweigh the pain involved in change. I am stuck with systemd, but can’t point to a single thing it has “made better” in my use of linux.

“I’m the minority user. Listen to me and cater to my needs. Linux is all about me me me!”

Grow up.

ME, is embodied in the OSS philosophy, where people write software for their needs first, THEN donate to the community.

Getting rid of /etc/passwd and administrator-managed accounts on every machine has to happen sooner or later. Instead of logging in with a {username,password} pair, a digitally signed and expiring assertion of attributes that might include username should be sufficient. For read-only users, you may want to assert attributes about a user to avoid personally-identifying information in access control lists. For users that do update the system, they should be able to set up their OWN home directory given that the username is already signed. A user could be in a role that grants update access, yet the user is not necessarily identified as an individual. Users may rotate through job roles that are written into the access token. GDPR is one example of how the world screwed up and required you to identify yourself everywhere you go, and there was an attempt to fix it without creating a technical enforcement mechanism that reaches the goal.

Servers without an /etc/passwd or necessarily enumerating their users will have to happen. You may well log in to one machine to get a token, and go to a volume mount that is not associated with any /etc/passwd in particular.
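To make the "digitally signed and expiring assertion of attributes" idea concrete, here is a minimal Python sketch. It is not how systemd-homed or any particular login system works: the function names, the token layout, and the shared HMAC secret are all assumptions chosen for illustration, and a real deployment would more likely use asymmetric signatures so that servers only hold a public key.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical shared secret, for illustration only.
SECRET = b"demo-secret-not-for-production"


def issue_token(attributes, ttl_seconds, now=None):
    """Return 'payload.signature' asserting attributes until an expiry time."""
    now = time.time() if now is None else now
    claims = dict(attributes, exp=now + ttl_seconds)
    payload = base64.urlsafe_b64encode(
        json.dumps(claims, sort_keys=True).encode()
    )
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify_token(token, now=None):
    """Return the asserted attributes, or None if forged, malformed or expired."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.split(".")
    except ValueError:
        return None  # not exactly two dot-separated parts
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison; the payload is only decoded after it passes.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if claims["exp"] < now:
        return None
    return claims
```

Note that a token issued with only {"role": "editor"} grants a role without naming an individual, which is the point made above about keeping personally-identifying information out of access control.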

I avoid systemd like plague. Fortunately there are enough distributions out there which are systemd free:

https://sysdfree.wordpress.com/2019/10/12/135/

You can still run Debian without systemd by simply adding in /etc/apt/preferences:

Package: systemd*
Pin: origin *
Pin-Priority: -1

Package: systemd*:i386
Pin: origin *
Pin-Priority: -1

and then run: apt-get install sysvinit-core sysvinit (followed by a reboot).

I have also replaced udev-systemd (which is not as toxic as PID 1 systemd but it was constantly breaking things) with libeudev1_3.2.7.3_amd64.deb and I’ve been happy since.

Another Poettering creation which was constantly giving me pain and now I remove from all my systems is pulseaudio.

Agreed, when practical this type of roll-back solution often makes sense.

As the Second-system Effect will not stop till it collapses a project:

https://en.wikipedia.org/wiki/Second-system_effect

While I have found some utility in Systemd timers and service configurations, the functional role is user Desktop focused and tends to break a lot of other use cases already. Anyone that uses Linux for serious networking tasks will find Systemd a royal pain as you spend 80% of project hours fighting its default poorly-documented restrictive behaviors. Also, the unified logging system is a poor reward, when the memory footprint takes more space than an old kernel.

init was far from perfect, but at least it was well defined enough to be usable.

In some ways, bringing the same concept to /home seems like yet another Ivory Tower project.

https://en.wikipedia.org/wiki/Ivory_tower

It took several weekends just to get Ubuntu 18 to be usable again as some frameworks are only for Canonical’s OS, and one can only wish it was as easy to handle as a pure Debian release.

For containers most use this now:

https://www.alpinelinux.org/

For small board systems I would choose:

https://www.gentoo.org/

Ugh that’s right, he did write pulseaudio. What an utter bastard.

@Sven this is well-written incitement but two nits: “it won’t happen over night” – overnight is one word. “It generally begs the question” – no, it doesn’t, begging the question is a rare logical fallacy; it *raises* the question or omit the verbiage and simply ask “Why do we insist …?”

systemd is fine. It uses cgroups in Linux for better resource allocation, unit files are a fantastic distribution-independent way for developers to specify how services and programs should operate, many of its utilities are better than what came before, and the documentation is excellent. I understand none of that matters to old-timers with working systems, good luck keeping them running while the majority progress.

I accept that systemd unified the init system, and in certain cases sped up booting.

Problem is – it broke at least some niche (and not so niche) use-cases, and broke them badly. Some bugs in Debian will soon be old enough to go to school. But as long as it is a single-user laptop – everything is fine, come along.

What I do not like, is how it absorbs more and more components, like grey goo. And how its author reacts to criticism.

Also – as someone wrote – for every feature in systemd – there are 3 versions – when it is promised to be implemented, when it works as described, and when it gets broken again and replaced with shiny new feature.

“I understand none of that matters to old timers with working systems, good luck keeping them running while the majority progresses” — what IS that, number 3 on your list of clever put downs? You guys love that line. First off the condescension is blatant. Secondly, you love to speak of “the majority” as if everyone were attending your rallies, when in fact the majority of system derp users are just dragged along due to your relentless empire building. Thirdly, to anyone content to ride the system derp crazy train, good luck keeping your systems STABLE when LP gets taken by the Next Shiny

The fact you guys pop lines like this one at the first sign of dissent only proves you aren’t operating on behalf of the community, but on your own ambitions to own all of Linux.

hmm. this is becoming more a Poetterix operating system than debian or suse. I don’t know if I am going to like it.

Portable homes were a very real thing long ago. Like so much, they were forgotten and the abortion that is systemd has re-invented them badly. The comments about the issue with ssh are what make me say badly. That was a solved thing back under automount.

Automount was a general solution too… It worked for more than just home directories, automatically making resources as needed and then making them go away.

@sven

you make it sound as though stuff that should be logically gathered together is strewn about with wild abandon and the system used is somehow a mess brought about by stupid people who did not know any better.

You gloss over the reason why the “passwd” and “shadow” files are distinct and separate from each other. And that is to deliberately prevent passwords (even in their encrypted form) from being accessible by software which does not have the necessary access rights. By putting everything in one file you end up needing a specialised program to control access to the data held in that file. You also need a special mechanism that allows the special access program to distinguish between which other programs can access which data.

In case this seems like a weak, contrived argument to the less well versed *nix user I should stress at this point that there are ***MANY*** software components that need to protect their configuration data. Consider Postfix (the email system used on many systems). Would you be happy with someone else reading your mail or sending spam out in your name? Then there is vsftp, dovecot, mysql and a whole lot more. Look at the way these configuration files are locked down in /etc.
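The split between "passwd" and "shadow" can be seen directly in the file permissions. The following Python sketch is only an illustration (the helper names world_readable and describe are made up here): it reports whether a file is readable by every user. On most Linux systems /etc/passwd usually is and /etc/shadow usually is not, though the exact modes and group ownership vary by distribution.

```python
import os
import stat


def world_readable(mode):
    """True if the 'other' read bit is set in a st_mode value."""
    return bool(mode & stat.S_IROTH)


def describe(path):
    """Return a one-line summary of who may read path."""
    st = os.stat(path)
    perms = stat.filemode(st.st_mode)  # e.g. '-rw-r--r--'
    if world_readable(st.st_mode):
        access = "readable by every user"
    else:
        access = "restricted"
    return f"{path}: {perms} ({access})"


if __name__ == "__main__":
    # Reading the permission bits does not require access to the file contents.
    for path in ("/etc/passwd", "/etc/shadow"):
        try:
            print(describe(path))
        except OSError as err:
            print(f"{path}: {err}")
```

The same check can be pointed at service configuration under /etc to see which files are kept away from ordinary users.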