A fundamental truth about AI systems is that training the system with biased data creates biased results. This can be especially dangerous when the systems are being used to predict crime or select sentences for criminals, since they can hinge on unrelated traits such as race or gender to make determinations.

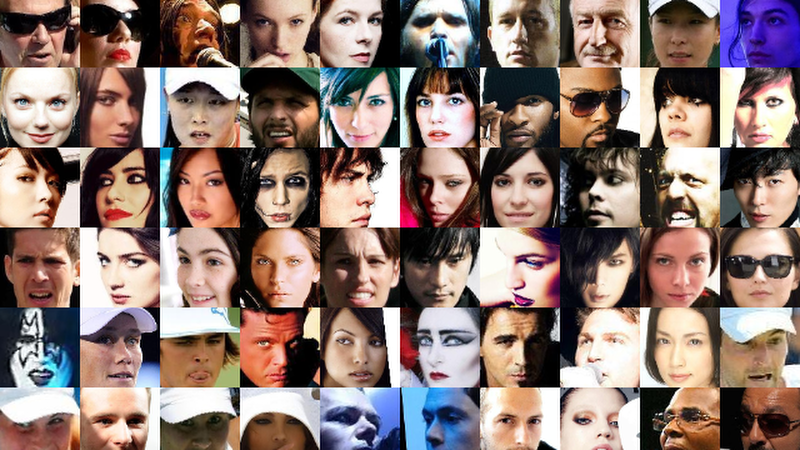

A group of researchers from the Massachusetts Institute of Technology (MIT) CSAIL is working on a solution to “de-bias” data by resampling it to be more balanced. The paper published by PhD students [Alexander Amini] and [Ava Soleimany] describes an algorithm that can learn a specific task – such as facial recognition – as well as the structure of the training data, which allows it to identify and minimize any hidden biases.
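As a rough illustration of what "resampling to be more balanced" can mean, here is a minimal sketch of inverse-frequency resampling. It is a simplification and not the authors' algorithm: it assumes every example already carries a known group label, whereas the approach described above learns the structure of the data itself. The function name `balancing_weights` and the toy dataset are hypothetical.

```python
import random
from collections import Counter

def balancing_weights(groups):
    """Return one sampling probability per example so that every group
    receives the same total probability mass (inverse-frequency weighting)."""
    counts = Counter(groups)
    n_groups = len(counts)
    return [1.0 / (n_groups * counts[g]) for g in groups]

# Hypothetical toy dataset: 8 examples from group "a", 2 from group "b".
groups = ["a"] * 8 + ["b"] * 2
weights = balancing_weights(groups)

# Total probability mass per group after reweighting.
mass = {g: 0.0 for g in set(groups)}
for g, w in zip(groups, weights):
    mass[g] += w

# Draw a resampled training set; rare groups are now drawn more often.
random.seed(0)
resampled = random.choices(groups, weights=weights, k=len(groups))
```

Each group ends up with half of the sampling probability even though group "a" supplies four times as many examples, so a model trained on the resampled set sees both groups about equally often.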

Testing showed that the algorithm minimized “categorical bias” by over 60% compared against other widely cited facial detection models, all while maintaining the same precision of detection. This figure was maintained when the team evaluated a facial-image dataset from the Algorithmic Justice League, a spin-off group from the MIT Media Lab.

The team says that their algorithm would be particularly relevant for large datasets that can’t easily be vetted by a human, and can potentially rectify algorithms used in security, law enforcement, and other domains beyond facial detection.

How about we just don’t go down this road? Do we really need this tech? Do we really think it’s not going to turn into something miserable sometime in the near future?

But I guess there’s no point, since the zeitgeist is that technological progress is inevitable no matter how questionable or grotesque, and we are powerless to stop it so we may as well make it ourselves. It’s really horrible. I wish I had a choice as to whether I or my children are surrounded by this garbage in a decade.

I guess the shorter way to have said this would simply be “Minority Report was not meant to be an instruction manual.”

Or that The Police song “Every Breath You Take” was meant to be an entertaining tune about an extremely creepy lovelorn stalker and not an instruction manual on how to set up a police state.

https://www.youtube.com/watch?v=TH_YbBHVF4g

It is a tool — how it is applied is a matter of ethics. It is a luddite response to just wish technology went away.

Sorting recyclables for 8 hours a day is soul crushing work, manually sorting items into bins of clear glass, green glass, paper, cardboard, plastic #1, plastic #2, etc, and trash. It is a great application for a trained image classifier to do this tedious work quickly and cheaply.

Yes, it is also troublesome that we may soon have cameras everywhere identifying every citizen many times a day, tracking our whereabouts in the name of preventing terrorism or keeping children safe. But we don’t have to simply accept that this happens; we can push for laws to put boundaries on what is allowed.

…because surveillance institutions are famous for obeying the law and respecting boundaries :-D

Sure, laws will protect us. But they are never enforced. The NSA, Google and Apple all spy on us with impunity. One big takeaway is that BigTech does not respect human rights one iota and will in fact get into bed with the worst tyrants around for the right money.

Oh yeah it’s cool to throw workers in the unemployment line in favor of machines. Like being unemployed isn’t soul crushing.

Yes please! Replace every worker in robotic soul-crushing jobs with actual soulless robots. At the same time, do what you have to to mitigate the growing problem of mass unemployment. Change brings problems but there are many possible better situations at the far end…

History has shown that automation leads to more employment, not less. Sure, if you could snap your fingers and replace every human job with a robot, then you’d be SOL, but robots are typically brought in to augment human work force. They also increase output and profitability and usually lead to higher pay for the humans around them.

@Jake – You may want to educate yourself on the population of horses during the industrial revolution.

@ThantiK – people are not horses…

https://www.forbes.com/sites/kweilinellingrud/2018/10/23/the-upside-of-automation-new-jobs-increased-productivity-and-changing-roles-for-workers/#784438557df0

I’m an engineer that makes these “JOB STEALING ROBOTS!!” and I see it in every single business I work with. They are using robots to increase productivity and streamline the most mundane tasks. There isn’t a single company that I’ve been on site with (at least 200 factories in the last 8 years) that was straight up replacing people with robots or other automation. People were either shifted after the automation was running, or the automation was brought in to do a new task. Most businesses were actively hiring humans as well.

What you don’t see is that the people who are replaced by robots are shifted up to more managerial positions, to do paperwork and sales, or design and planning. These are specialized/expert positions that require knowledge and experience.

The trouble is that when the shop floor jobs vanish, there’s no more entry level jobs for people who don’t know the trade already. There’s no new hires coming in to stamp a bunch of sheet metal for minimum wage while they’re learning the ropes, because the machine is doing that. This means the future hires for all the specialist positions become people who have no concrete idea of how the processes work down at the nuts and bolts level. Finding qualified workers becomes very expensive.

The responsibility and cost to actually train people into these remaining jobs falls onto the education system, and ultimately onto the people themselves. Then the businesses complain that there are no qualified STEM people for low enough wages. Well guess why? You’re not going to get a 22 year old MSc with 5+ years of work experience straight out of the school. But if you can’t hire them without work experience, then how are they supposed to get any?

The group of people who actually qualify for the remaining jobs gets smaller, and the quality of new hires is dropping, because people opt for the easier options that don’t leave them with hundreds of thousands in debt and shaky career prospects.

The bar for entry into trade and services is lower and there is still the need for actual human labor. That’s where the people will go. Unfortunately, these are not productive jobs in the same sense as industry. More people leave the industry and enter services, which causes over supply and lower wages, as well as high economic inefficiency because more and more people are making their living consuming rather than producing wealth – so the gulf between rich and poor becomes wider.

All this just goes back to the old point: if a human being can do the job, it’s always more reasonable to hire the person. If you throw the human onto the curb and build a robot to do the job, you end up paying both the man AND the robot – one way or the other.

>”or the automation was brought in to do a new task.”

Aka. the automation was used to displace future entry-level hires. Same effect. Some undergrad is now dropping out of school because nobody is hiring, and they have to make ends meet somehow.

Meanwhile, your factory owners are complaining and demanding more funding for STEM education because they’re seeing a lack of qualified applicants and are blaming it on the education system.

No one is shutting down the recycling plant AI program. What again is the need to use humans?

You should go and read about what the Luddites actually represented. They weren’t some kind of cult wishing to smash all machines in general and go back to a barbaric, primal state of living. That’s the connotation they now have after over a century of demonizing labor movements.

And it’s disingenuous to take a comment saying “maybe we shouldn’t be building this one specific technology” and blow it out of proportion by implying that is equal to wishing all technology should go away. Come on, think. It’s fine to ask the ethical question of whether a specific tool should be built or not. It’s not reactionary and it’s a question that should be asked much more often. It never is these days; the adherents of ‘disruptive’ tech wantonly build machines of enormous and irresistible power without seriously considering how that technology will be used. That’s not inevitable. It’s irresponsible.

All tools can be used and misused, but that doesn’t give literally everything an ethical green light. That’s a cop-out. Some tools have obvious legitimate uses and some tools absolutely beg for abuse. Say we had an invention that on one hand could easily remove bottle caps but it could also create a nearly inescapable state of totalitarian control for the rest of time. Hyperbole for the sake of example, but it’s actually not ludicrously hyperbolic with this tech. We wouldn’t be able to say in good faith that it’s just a question of the user’s ethics and pass the buck to them. A whole gaggle of them throughout the future. One of those people is obviously going to exploit it. The ratio of legitimate utility to potential for abuse is out of balance. Maybe we should just find a different bottle-opener design, eh?

Also facial recognition is not general-purpose CV like sorting recyclables by material.

I have very little faith that laws will ensure it’s used ethically. That has almost never happened historically. Not until it does monstrous damage at least. You’re putting a tremendous amount of faith in all future regimes in the world, and that just seems insanely short-sighted to me. We’re fine without this one.

I’d say we don’t really have a choice, not anymore certainly if we ever had one – might as well try and turn the progress in the right direction.

Why would you start that negotiation by ceding so much to the enemy? Starting a movement by saying “well we got no choice but I guess we could adapt to it a little and only get screwed severely instead of catastrophically” isn’t going to garner any support. We ought not to be fatalistic like this; all the good in this world started at a point from which it seemed impossible to most people. Yet some group of optimistic lunatics can often make things work.

We can hedge our bets by trying to steer it in a better direction while simultaneously pushing for it to be banned entirely.

If you implement machine learning to determine sentencing then you should be the one in jail.

It is imperative that anything related to law be heavily scrutinized by all parties, and pretty much all vaguely-modern AI is black-box magic to everyone who hasn’t spent the last decade studying that particular implementation.

Even a spreadsheet is dangerously obtuse for legal matters if it’s not just storing plain data values.

AI is the exact opposite of the legal process. You’ll still have a hard time pinpointing the exact rules and circumstances behind a certain decision. The actual AI math really doesn’t care if it was for driving a thermostat or a truck or determining whether someone is guilty. It is just a bunch of numbers fed into the system with some coefficients you get from some training process. The training process can be easily skewed or misapplied.

The legal process depends heavily on rules and previous cases. As such, the reasoning behind any outcome is clearly documented. The old if-then-else rule-based coding at least gives you some idea of how it arrived at the answer, i.e. traceability.

AI is useful for finding the relevant case law or stats for preparing/arguing a case, but someone should be in the loop.

Now that you mention that, I wonder what would happen if an expert system (an if-then-else chain) was used to teach a neural network. I would love to see if the network can mimic the expert system to a high enough accuracy as to replace it.
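For a toy version of that idea, here is a minimal sketch in which a hand-written rule chain labels every possible input and a single artificial neuron (a perceptron, about the simplest "network" there is) learns to copy it. Everything here is a hypothetical illustration: the rule in `expert_system`, the three boolean inputs, and the helper names are made up, and a real expert system would usually need a larger network than this.

```python
from itertools import product

def expert_system(a, b, c):
    """Hypothetical hand-written rule chain acting as the teacher."""
    if a and b:
        return 1
    elif c:
        return 1
    else:
        return 0

def predict(weights, bias, x):
    """Single neuron with a hard threshold at zero."""
    s = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0

def train_perceptron(inputs, labels, max_epochs=1000):
    """Classic perceptron rule: nudge the weights after every mistake
    and stop once a full pass over the data produces no mistakes."""
    weights = [0.0] * len(inputs[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(inputs, labels):
            error = y - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + error * xi for w, xi in zip(weights, x)]
                bias += error
        if mistakes == 0:
            break
    return weights, bias

# The teacher labels every possible combination of the three inputs.
inputs = list(product([0, 1], repeat=3))
labels = [expert_system(*x) for x in inputs]

weights, bias = train_perceptron(inputs, labels)
accuracy = sum(
    predict(weights, bias, x) == y for x, y in zip(inputs, labels)
) / len(inputs)
```

This particular rule is linearly separable, so the single neuron can reproduce it on all eight inputs. The catch raised in the comments above still applies: the learned weights are just numbers, and the readable if-then-else reasoning is gone.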

Why are all these AI projects about faces?

Leave people’s faces alone, it’s gross and creepy.

We’re going to end up in one of the sci-fi stories where everyone has to wear masks in public to keep a hold of their self-worth.

It’s all about the control of humanity and total destruction of privacy.

And if you need any more proof? Look at where it’s banned. It’s the same spots where it’s being developed. For some reason the bay area doesn’t want facial recognition on their own streets. Hmmm.

They are fully aware of what they’re doing.

I agree about the faces. Leave them alone. We have 4 dogs in the house and they have figured out that they can crap in the house and we don’t know who to blame for what, so the problem is spiraling out of control. What we really need is fecal recognition software.

This is no world to bring not-yet-conceived souls into.

Don’t be a defeatist. Don’t let them win with their “the sky is falling” brainwashing. The only thing evil needs to triumph is for good men to do nothing. The world needs good men. Go forth and make them.

And do your part to prevent this tech from becoming ubiquitous. It isn’t inevitable like so many people seem to think.

So, because we don’t like the outcomes, we change the inputs, automatically?

Rather than use more real data in our dataset and a clear process, we reweigh certain data points to get the outcome that we want?

If the first problem is that we don’t get good (reliable) outcomes because our dataset does not have a large enough population of a particular type, doesn’t that just mean that we need more real data rather than more fudge?

It’s incredible to me that AI systems are used for prison sentencing. Why would any defense attorney not challenge that sentence!?

Take your outrage elsewhere.

Social justice now guarantees research dollars.

Be smart and find a way to cash in before someone else steals it.

This comment made me cringe, laugh and feel sad at the same time. Chapeau monsieur!

I also farted at the same time. It was intense.

And now I know where Google got its people to work for a bloody tyrant like Xin of China.

What revolting cynicism. You aren’t going to be able to make a decent future with that.

The tragedy of progressivism is that the present is never good enough, and when tomorrow becomes today, all your beautiful solutions will turn to objectionable atrocities.

There will always be “social justice” to guarantee that there’s new banners you can march under, to provide reasons to raise money, and to do research under a grant from a right-thinking government.

All you have to do is take the money and keep a low profile; you don’t want to become the driver of the bandwagon because then you can’t jump over to the next one. You just want to ride it for as long as it runs.

This is what I just learned in science class:

Make an observation.

Ask a question.

Form a hypothesis, or testable explanation.

Make a prediction based on the hypothesis.

Test the prediction.

Ignore test results and change data if needed to support prediction.

Same joke told from a slightly different angle:

An empirical economist uses data to reject incorrect theories.

A theoretical economist uses theory to reject the data.

Change “economist” to your favorite field of study…

Still trying to overcome the old (racist, sexist) ideologies, actually. As long as we keep feeding our AI the result of, for example, past sexist hiring practices, it will keep learning and thereby reinforcing those past acts of discrimination.

And why? Because it’s an ideology proven not only for decades, but for millennia. :-)

It’s a self-reinforcing bias. You start with two populations in 1:1 ratio, and if there’s a slight random preference for one, that shows up in the statistics as if those people have better qualities for the job because they’re more represented in the sample. If the AI starts to prefer that which is popular, it makes it popular, which makes the AI prefer it even more.

This is what happens with people as well, because if men are preferred over women, the women won’t bother training for the type of job and so there’s fewer qualified applicants, and those who are qualified face a large number of equally or more qualified men applying for the same job simply by probability. Add in the fact that positions and job opportunities are gained through nepotism and friend networks, and men and women have different social circles, there’s natural barriers why they segregate in the workplace as well.

This is why there are men’s jobs and women’s jobs still in the era of gender equality and why they aren’t going away any time soon. What those jobs are is really arbitrary – they’re not based on whether men are better at this or women better at that. They’re mostly just inertia of history.
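The feedback loop described above is easy to put into numbers. Here is a minimal sketch, assuming a made-up selection rule that over-weights whichever group is already more common; the function `next_share`, the exponent `k`, and the 51% starting share are all hypothetical choices for illustration, not measurements of any real hiring system.

```python
def next_share(p, k=1.2):
    """One round of a selection rule that over-weights the majority.
    p is group A's share of the pool; k > 1 amplifies whatever is
    already more common (k = 1 would leave the share unchanged)."""
    a = p ** k
    b = (1.0 - p) ** k
    return a / (a + b)

# Start with a slight random preference: 51% versus 49%.
share = 0.51
history = [share]
for _ in range(30):
    share = next_share(share)
    history.append(share)
```

An exact 50:50 split stays put, but the tiny initial edge compounds every round, and after 30 rounds group A holds more than 99% of the pool. No difference in ability was ever part of the model.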

“and if there’s a slight random preference to one” sounds exactly like evolution, doesn’t it?

[not saying it’s a good thing or we should keep it that way. most of the world is acting against evolution anyway. e.g. by using medicine]

It’s not proven, it’s just used.

They made an algorithm and fed it data. It didn’t give them the results they wanted, so now they are changing the rules. That isn’t science. But, I guess it is the natural evolution of the “Everyone is Special” and “Everyone Wins” culture.

yep if the outcome doesn’t meet the expectations just change the input – isn’t that how “science” is done these days?

How is this not just weighted estimation? Survey statisticians have been using sampling weights for eons to make estimates based on samples more reflective of the population of interest.
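For anyone who hasn’t met sampling weights, here is a minimal sketch of the survey-statistics version. The numbers are invented: a population assumed to be split 50/50 between two groups, and a sample that happens to contain 8 people from one group and 2 from the other. Each observation is weighted by population share divided by sample share, which is the same ratio that importance sampling uses.

```python
def weighted_mean(values, weights):
    """Weighted average: each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Hypothetical sample: (group, measured value) pairs, 8 from "a", 2 from "b".
sample = [("a", 1.0)] * 8 + [("b", 0.0)] * 2

# Assumed known population shares versus the shares seen in the sample.
population_share = {"a": 0.5, "b": 0.5}
sample_share = {"a": 0.8, "b": 0.2}

values = [v for _, v in sample]
weights = [population_share[g] / sample_share[g] for g, _ in sample]

naive = sum(values) / len(values)          # skewed toward group "a"
weighted = weighted_mean(values, weights)  # corrected for over-sampling
```

The naive average is 0.8 because group "a" is over-represented, while the weighted estimate comes out at 0.5, matching the assumed population mix.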

As a (former) statistician, I second this comment.

AI people are essentially doing statistics with an equation/tool that they don’t understand, without really bothering about any of the difficult mathematical rigor, and applying it to high-visibility projects. It’s like statistics with none of the hard work, but added parties and VC funding.

Not bitter at all.

Importance sampling.

upvotes: 2^1000!

They are just reinventing statistics, in much more nebulous way.

Even worse that our tax dollars are funding it, often with students who never grew up in a free society and don’t see its value.

That was exactly my thought…

Or more precisely, if the accuracy didn’t change after removing the bias, then the bias has no impact on the decision making. So, it’s not a bias. At best, it’s noise.

Guys, when Adam Savage quoted DungeonMaster and said “I reject your reality and substitute my own!” he was joking around. He wasn’t trying to teach you an alternative to the scientific method.

It looks like you’ve had a little too much to think today. Maybe a little bit of re-education is in order….

I mean the accuracy rate of facial recognition on the general public (where it hasn’t been specifically trained on each individual) is abysmal. Reductions in bias could be pure noise at such low success figures.

It’s not like the face unlock on your phone, which is used to recognize just one person and is rigorously trained on that one person’s easily-accessible data. That works fine. So yeah, it’s probably going to continue being really shoddy and inaccurate and biased for a good while. And perhaps it should. It’s basically an updated law enforcement technical scam like the polygraph machine.

Considering all polygraph machines are basically snake-oil and props used to pressure a person into taking a plea bargain or forcing an investigation to open whereas it would have otherwise been thrown out.. I think this design is precisely what they want. I don’t believe they really want it to work reliably, they just want people to think it works.

Those of you talking about ‘justice’ etc really need to go read the paper. The issue is that facial recognition algos are often trained on datasets containing too few people of a given race/gender/hairstyle/background color, etc. Because of this, they have trouble identifying people in this smaller set, largely b/c they’ve been badly trained due to proportionally little data.

If you train an image classifier on one black person, Fred, and everyone else white, it will say that every subsequent black person it sees is Fred. That’s the issue. They’ve managed to train the network to ignore the “minority” features, so they’ll mis-identify a black person who looks like Bob (a white guy) as Bob instead.

Why the researchers can’t get more photos of women or black people is application specific, I guess.

But the point here is not that any social injustices are being corrected. If anything, this and other facial recognition systems will surely be used for evil. But here, they’re at least getting at a more accurate evil. Or something.

(I’m not saying that facial recognition is per se evil, just that every use of it that I’ve ever seen so far is.)