Who would have thought that software packaging software would cause such a hubbub? But such is the case with snap. Developed by Canonical as a faster and easier way to get the latest versions of software installed on Ubuntu systems, the software has ended up starting a fiery debate in the larger Linux community. For the more casual user, snap is just a way to get the software they want as quickly as possible. But for users concerned with the ideology of free and open source software, it’s seen as a dangerous step towards the types of proprietary “walled gardens” that may have driven them to Linux in the first place.

Perhaps the most vocal opponent of snap, and certainly the one that’s got the most media attention, is Linux Mint. In a June 1st post on the distribution’s official blog, Mint founder Clement Lefebvre made it very clear that the Ubuntu spin-off does not approve of the new package format and wouldn’t include it on base installs. Further, he announced that Mint 20 would actively block users from installing the snap framework through the package manager. It can still be installed manually, but this move is seen as a way to prevent it from being added to the system without the user’s explicit consent.

The short version of Clement’s complaint is that the snap packager installs from a proprietary Canonical-specific source. If you want to distribute snaps, you have to set up an account with Canonical and host them there. While the underlying software is still open source, the snap packager breaks with the long tradition of keeping the distribution of the software open and free as well. This undoubtedly makes the install simple for naive users, and makes maintenance easier for Canonical’s maintainers, but it also takes away freedom of choice and diversity of package sources.

One Package to Rule them All

To understand the situation, we should probably take a step back and look at what snaps actually are. Put simply, they are containerized software packages that include the libraries the given program requires to run. The idea is that developers could release a single snap that would work on essentially any modern Linux system, rather than having to create distribution-specific packages. In theory this saves time and effort on the developer’s part, and makes sure that even users of more niche distributions can get access to the software they want.

Naturally, there are downsides to distributing software like this. For one, a snap package will always be larger than a traditional package for the same program, as all the dependencies need to be shipped with it. Since many programs will naturally have the same dependencies, this means a system with many snaps installed will be needlessly wasting storage space on redundant data. That said, now that even entry-level systems ship with terabyte hard drives, this is perhaps not as much of a concern as it would have been in years past.
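To put rough numbers on that duplication, here is a minimal Python sketch. The application names, library names, and sizes are all hypothetical, and it deliberately ignores any sharing snaps can do (such as common base snaps); it simply compares every app carrying its own copy of each dependency against each dependency being installed once and shared.

```python
"""Hypothetical illustration: storage cost of bundled vs. shared dependencies.

All package names and sizes are made up for the example; they are not
measurements of any real snap or distribution package.
"""

from typing import Dict, Set

# Hypothetical sizes of shared libraries, in megabytes.
LIB_SIZES_MB: Dict[str, float] = {
    "libfoo": 40.0,
    "libbar": 25.0,
    "libbaz": 10.0,
}

# Hypothetical applications and the libraries each one depends on.
APP_DEPS: Dict[str, Set[str]] = {
    "app_a": {"libfoo", "libbar"},
    "app_b": {"libfoo", "libbaz"},
    "app_c": {"libfoo", "libbar", "libbaz"},
}


def bundled_total(app_deps: Dict[str, Set[str]], sizes: Dict[str, float]) -> float:
    """Every app ships its own copy of each dependency (bundled packaging)."""
    return sum(sizes[lib] for deps in app_deps.values() for lib in deps)


def shared_total(app_deps: Dict[str, Set[str]], sizes: Dict[str, float]) -> float:
    """Each dependency is installed once and shared (traditional packaging)."""
    unique_libs: Set[str] = set().union(*app_deps.values())
    return sum(sizes[lib] for lib in unique_libs)


if __name__ == "__main__":
    bundled = bundled_total(APP_DEPS, LIB_SIZES_MB)
    shared = shared_total(APP_DEPS, LIB_SIZES_MB)
    print(f"bundled: {bundled} MB, shared: {shared} MB, redundant: {bundled - shared} MB")
```

With these made-up figures it prints `bundled: 190.0 MB, shared: 75.0 MB, redundant: 115.0 MB`, and the gap grows with every additional app that bundles the same libraries.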

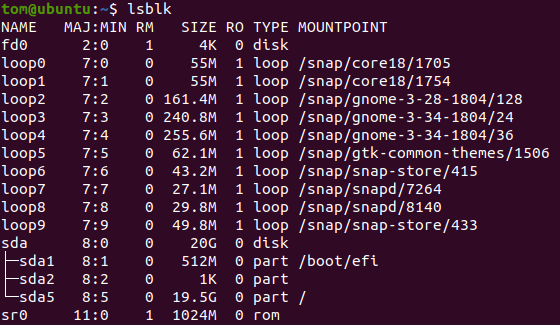

Snap packages also tend to be slower to run, in part because they are actually compressed filesystem images that need to be mounted before they can be executed. Some users find this element to be especially annoying from a system maintenance standpoint, as every snap package you install will actually show up as a mounted filesystem.

There’s actually been some talk about adding a special flag to mounted snap packages so that common tools like mount or lsblk won’t show them, but obviously that leads to its own problems. After all, there’s value in being able to determine just how much of your disk space they’re taking up.
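As a rough illustration of why every snap shows up in tools like mount or lsblk, the Python sketch below picks the snap images out of a /proc/mounts-style table. It assumes the usual snapd layout, in which each installed revision is a squashfs image loop-mounted at /snap/<name>/<revision>; the sample lines and revision numbers are invented for the example.

```python
"""Sketch: find snap squashfs mounts in /proc/mounts-style text.

Assumes the usual snapd layout (squashfs images loop-mounted at
/snap/<name>/<revision>). The SAMPLE lines are illustrative only.
"""

from typing import List, NamedTuple


class SnapMount(NamedTuple):
    device: str
    name: str
    revision: str


def parse_snap_mounts(mounts_text: str) -> List[SnapMount]:
    """Return the squashfs mounts that live at /snap/<name>/<revision>."""
    result: List[SnapMount] = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        device, mount_point, fs_type = fields[0], fields[1], fields[2]
        parts = mount_point.strip("/").split("/")
        if fs_type == "squashfs" and len(parts) == 3 and parts[0] == "snap":
            result.append(SnapMount(device, parts[1], parts[2]))
    return result


SAMPLE = """\
/dev/sda1 / ext4 rw,relatime 0 0
/dev/loop0 /snap/core18/1754 squashfs ro,nodev,relatime 0 0
/dev/loop1 /snap/chromium/1165 squashfs ro,nodev,relatime 0 0
tmpfs /run tmpfs rw,nosuid,nodev 0 0
"""

if __name__ == "__main__":
    for m in parse_snap_mounts(SAMPLE):
        print(m.device, m.name, m.revision)
    # On a live Linux system, the real table can be read instead:
    # with open("/proc/mounts") as f:
    #     print(parse_snap_mounts(f.read()))
```

Each entry it finds is one of the loop devices that clutters the output of mount and lsblk on a snap-heavy system.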

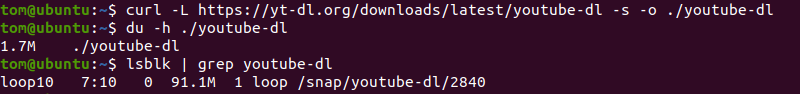

For example, let’s take a look at how the snap package for a common tool compares to installing it directly:

As you can see, the difference is substantial. If we download youtube-dl directly from the developer’s website, the script only takes up 1.7 MB on disk. But the snap package of the same program weighs in at an incredible 91 MB. It’s clear how this problem would be compounded as more snaps are installed.
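Expressed as a ratio, using the two sizes quoted above:

$$
\frac{91\ \text{MB}}{1.7\ \text{MB}} \approx 53.5
$$

In other words, for this one tool the snap takes up roughly 54 times as much space as the standalone script.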

That being said, there’s undoubtedly demand for this sort of “universal” Linux package. So much so that there are at least two other competing approaches that operate on similar principles: Flatpak and AppImage.

The Chromium Debacle

From a system resource standpoint, containerized packages clearly aren’t ideal. On the other hand, many would be more than happy to take the performance hit if it meant they had access to the latest versions of popular programs without having to wait for them to arrive in their distribution’s native package repository. Users should be free to decide for themselves which route they want to take based on their personal needs.

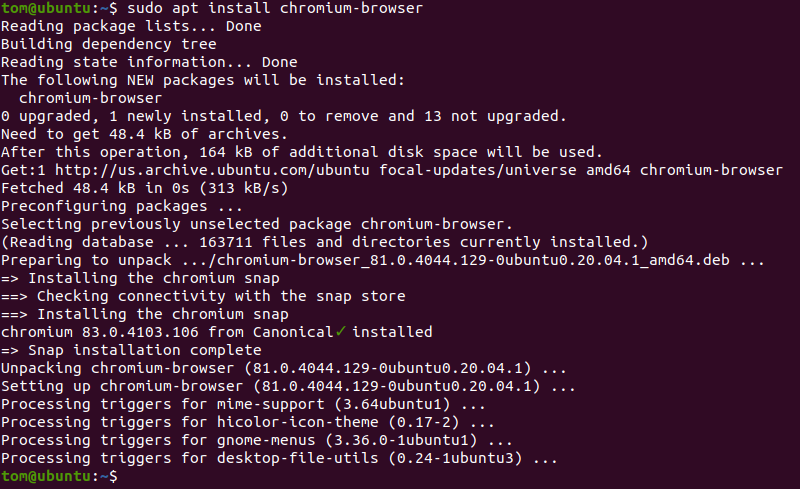

Which is what makes Canonical’s handling of the Chromium package in Ubuntu 20.04 so troubling. Let’s take a close look at what happens when you attempt to install it through apt:

While we asked the system to install the native package, what we actually receive is the snap. The user is given no choice, no warning. If they weren’t paying close enough attention, they wouldn’t even realize what happened. At the risk of sounding overly dramatic, this is subversion.

To be sure, there are valid reasons that Canonical would want to distribute Chromium as a snap. Rather than building versions for each supported release of Ubuntu, they can push out a single snap that will work on all of them. This is especially true of older LTS (Long Term Support) Ubuntu releases, which might otherwise be stuck on an older version of the browser due to outdated system libraries.

By using this “stealth” installation method for the Chromium snap, they can ensure that the process is as streamlined and painless as possible for their users. Indeed, the majority would likely not even notice the changeover happened.

But for those who did notice, it’s a huge deal. Many users left proprietary operating systems specifically to get away from this sort of behavior. These people want to be the arbiters of their own computers, and don’t take kindly to important decisions being made on their behalf without so much as a warning. These are the users Clement Lefebvre had in mind when he promised future versions of Mint would never install snap packages without prior consent.

Snap to the Future

While Canonical is no stranger to walking back unpopular decisions, snap packages are almost certainly here to stay. The logistical advantages of containerized packages are simply too great when your whole company is structured around providing support for multiple versions of a Linux distribution. Conversely, the users who have strong feelings on snaps will inevitably be a small (if vocal) minority. Canonical designed snaps to be the solution to the unique challenges of maintaining a huge and multi-faceted distribution like Ubuntu, and it’s working exactly as intended.

That said, it seems unlikely that snap packages will be embraced by the larger Linux community. Currently, the repository back-end is actually proprietary. While Canonical does allow for companies to create “branded” versions of the Snap Store, it’s just a cosmetic change and doesn’t allow you to run your own server. So even if another distribution such as Mint decided to embrace the snap package format, they would have to rely on Canonical to provide the infrastructure for distributing the packages to their users. This single point of failure is bound to be a point of contention for adoption outside of Ubuntu itself.

Snaps make zero sense for small apps and single apps, but they make more sense for bigger things; e.g., I use the Nextcloud Snap. I could, of course, install all the requisites manually and configure them and whatnot, but it’s far easier and faster to just install the Snap-package and be done with it.

I just wish Canonical/their devs would stop using Snap-packages for things where they don’t make any sense.

I can’t wait for the first 200GB text editor.

This is a company being a company, things will get worse. Go back to the mother ship Debian

I agree

This is a good idea, anyway. The only reason I use Ubuntu is that its installations tend to work better than those in Debian. I think that if some of the efforts going into Ubuntu were to go instead into Debian, everybody would be happier. I also think that if the Mint people had just started with Debian instead of being one layer further down the food chain, this would also have been better.

A long time ago, Ubuntu had better support for the latest hardware. Is that still the case any more? I’ve always installed Ubuntu on host systems and Debian on guests. Ubuntu had pre-configured apparmor as well, also handy for host systems. Luckily since I use Ubuntu Server as a desktop (with i3-wm) and don’t have snap installed, my chromium is micro-packaged, though running chromium in a VM with spice isn’t awful either.

Neil – I’m not exactly a distro expert, but Ubuntu tends to be far more cutting edge and up to date on packages than Debian, which tends to take sure, measured steps to keep everything stable.

That said I’d say you can run Debian if you want to easily enough. At worst pulling down a few updated packages to support your cutting edge new hardware as needed and I would expect it to just work in general. Certainly I have never had problems with Debian on that front, though I would say the trend you mention is still there.

Debian is an awesome distribution; even with systemd it is lightweight enough to run on my 2006 NAS using less than 200MB of RAM. It also supports enough new technology that I can (barely) get it running SteamVR on my main PC, something that I would never have expected from Linux even 5 years ago. Not that Debian did all the heavy lifting, Valve has poured a lot of work into Linux support, just that Debian doesn’t deserve its reputation as an out-of-date dinosaur.

“I also think that if the Mint people had just started with Debian…” I’m so glad Philip Newborough, sole developer of the now defunct Crunchbang Linux distro, chose to eschew Ubuntu for Debian.

After Canonical tried to shove-in their crappy unity interface, I was done with Ubuntu. I moved to Mint and never looked back.

Meh. I, for one, am happy with Ubuntu and will be sticking to it.

I’m happy with Linux Mint, and glad they are eschewing snap.

I need to correct my previous comment.

I’m not sure where I read that Linux Mint was not going to use snapd, but last week an update installed it. When I ran

sudo apt purge snapd

it cleaned it out, but also removed the Brave browser.

B^(

Yeah, I tried just yesterday to go back to the mother ship – XFCE on Buster. First I had to go to some really inconvenient lengths to grab an Ethernet connection because my WiFi drivers are non-free. Then ‘sudo modprobe’ didn’t work while I was trying to get the drivers to work. It took a bit of searching to determine that super-user paths aren’t set correctly in this incarnation of Buster, and ‘su’ wasn’t installed, so I had to do ‘sudo bash’ and execute from the command prompt. Still couldn’t get WiFi working – finally found an unofficial ISO that included WiFi drivers, did a full re-install, then WiFi worked. But my Trackpoint is still unusable – it was fine during the graphical installation process, but under the full OS it’s as twitchy as a meth-head and none of the configs I’ve tried so far work. Hibernate is enabled by default but doesn’t work correctly. Power management doesn’t work properly – when my laptop is powered and I close the lid it’s supposed to keep running, but it suspends, and that doesn’t work for me.

I used to love Debian when I was on Squeeze and Wheezy. Upgrade to Jessie broke a bunch of stuff and I couldn’t get it working so I jumped to Xubuntu. Because I’m overdue for an upgrade and Canonical is pulling the ‘snap crap’ outlined in the story, I decided to try ‘the mother ship’ again. So far I’m not impressed, and I’ll likely end up on Mint. That means that I’ll have to do a full re-install when Debian moves a new candidate into Stable, but at least in the meantime I’ll have a hope that boilerplate functionality will just work.

> Go back to the mother ship Debian

Not as long as they are [SystemD]oomed.

*cough* it’s gotten bad there too… Real Debian = Devuan…much less infiltrated with ubuntu and gnomeisms.

But Debian is so outdated and old(-looking) as to be just unusable. I will go for Arch before I ever go and use Debian as my main OS.

You could always use Debian Unstable for more current packages. Additionally, Debian Stable does have the Backports repo that has some newer packages.

Maybe you missed Emacs? :D

+1

>200GB text editor

Rust or C# no problem

They make sense for even small apps if those apps have unsupported dependencies, like 32-bit libraries or Python 2.7.

Ideally those apps should be refactored to remove those dependencies: but it’s a sad truth that some apps are themselves no longer supported, and dependent users either have to migrate away from them or take over maintenance of them… Or use a snap to eke out a little more life from them.

So you want to make it easier for unsuspecting (and probably less-knowledgeable) users to transparently install programs that due to lack of updates use older, possibly insecure dependencies?

Also, this may enable some projects to push back updates (like moving to python3) even farther, since the old version is still easily installable. I don’t really want to see python2 live on as a zombie like Flash has until now…

There are arguments for something like a snap, but for the use case of “easier”, the sacrifices in disk space, performance, transparency, and using system libs instead of per-package ones seem like too much.

That’s exactly what (some) developers want to do, and the whole reason why those developers love containers. They can’t be bothered with such hassles as outsourcing dependency security to the distro their company has often paid a license for. They’ll worry about that later, for now they just have to get something working.

Oops, it’s later and now too late/too hard to go back and set it right!

I’m sure there are devs who want to use containers only when containers are justified, but you know how new technologies and new buzzwords get picked up. Anyway, thanks for giving the exact example of why I, at the very least, still distrust things like snap.

Or they could NOT ditch libraries just because they can instead?

This is precisely it. For a long time I was dependent on SageMath, a Frankenstein of other software that I found nearly impossible to set up manually so that it would all be harmonious, and even its binary tarballs are dependency nightmares.

I now use it via Docker and couldn’t care less if it were a snap instead, as long as it truly just worked.

Personally my Nextcloud is manually setup but what’s important is that we have a choice here.

Canonical don’t owe you a binary package for Chromium and if you want it via them they’ll give you a snap.

Snaps are a security disaster. No thanks

How is it a security disaster? It runs apps in a sandbox.

This honestly reminds me of the nightmare that is systemd. I came to Linux because I wanted power and control, not to be babied. When I want to be babied I get out my Windows or Mac box; they just work and do their job, but when I need fine-toothed control I use Linux. I hate these pushes towards making Linux more user friendly at the cost of control (user friendly with no downside I’m very happy to see).

I just want to know that when I do X, X will happen without side effects A-W. To me, this post is just another point for Mint and another kick for Ubuntu. Ubuntu always was weird to me; it wants the title of Linux but pretends in every other way that it’s a Mac. I don’t want a Mac, I want a Linux box. Although I guess that’s the joy of multiple distros.

Quick question though with the apt install pasted above. Is the install using snap because there is some hidden snap repo change in apt or has chromium changed their apt repo for ubuntu to just point to the snap repo, aka who is at fault for the change?

They addressed this on a recent episode of the Ubuntu podcast: shifting Chromium to a snap vastly simplified packaging and maintenance for all the supported versions of Ubuntu, which freed up developer time to work on other things.

You do understand that as things slowly shift more and more to a “snap only” solution, with Spotify doing similar, you become more and more dependent on Ubuntu’s sole discretion. With apt it is incredibly easy for me to create a local repo so machines can point to my repo, and I can have whatever version of software I want from that repo and it all just works. With snap you can only use the snap repo. If in 5 years, when 80% of software can only be installed from snap, Ubuntu decides that maintaining all these snap servers is really expensive and says “we would like $5 a month to connect to the snap repo”, what exactly is your alternative? How exactly will you install software then, when everyone has shifted to the convenient but not even remotely open source “snap” method?

RedHat did something similar to this but at least they have the decency to allow CentOS to co-exist. This move is an effort to not only mimic money grabbing tactics but be much more hostile in purging all other distros. Sure it frees up developer time, they need more time to create pretty graphics for the store.

I’m just sharing the rationale they presented – not arguing for or against it.

Canonical doesn’t owe you anything (well other than GPL commitments).

You can make your own repo and in there provide binary packages for whatever you want for whatever platforms you want.

Yes, I’d prefer a “Hey! We didn’t find a binary package, can we install it via snap instead?” prompt, but it’s not really a deal breaker.

Problem is not with those packages you can build yourself, problem is with closed source packages that you can’t rebuild, but they will be available as “snap only”.

Ubuntu also has a running habit of being *years* out of date on packages they do not care about. Looks like the Krita package is currently almost up to date (4.2.9; the current release is 4.3.0). For most of the last decade, the package was several years out of date. If you wanted up to date software, you had to track down the dependencies and compile it yourself. Krita started using AppImage for x64 just to cut down on all the time helping people compile.

Another note, Ubuntu has been a favorite for years in cross-platform development. I have it running on several different ARM and MIPS platforms. I think I saw it running on Microblaze. Are snaps going to simplify this world? Seems like snaps would break it.

Like building a more buggy sound server?

> This honestly reminds me of the nightmare that is systemd

Couldn’t agree more, which is precisely why I “still” use Gentoo. I can do whatever I want because there’s no hard dependence on systemd (which is the Lotus Notes of inits), and that makes me happy. I don’t like being told what I can or cannot do with MY computer, or that I absolutely require software that I loathe.

Hello there from 2021! Can you please clarify if you are using Lotus Notes on Linux distro? I want to create a snap for it so that’s why I am interested in that!

I can see how it is useful, I can remember a couple of packages that were horrible to get working where it would have been a boon. However, to subvert my chosen install method is horrendously uncool.

The easy fix is ‘apt purge snap*’; I made sure to do this on my Mint.

Personally I will not be using snaps much if at all. I like the package system as it is now and I’d rather go through the hassle of compiling from the source than use snaps (gaining a proper understanding of dependencies – which is very useful when something doesn’t work perfectly).

I can, however, see that they have a purpose, and hopefully they won’t have as many issues around dependencies, as each one comes with everything it wants with controlled versioning (though I bet it will have issues here and there with odd Pulse/ALSA setups, for example). That makes it ideal for those coming from the walled gardens, as it’s going to be a familiar and simple install that just works, in theory. These sort of things that make Linux easier to use for the non-techie always seem to suffer from blowback from the community… Which is stupid – if it gets more people using GNU Linux there will be more money to pay developers so Linux will get better for everyone! It’s not like the hugely configurable, customisable nature of Linux is going to change – if you really hate it that much, don’t use it!

As long as Ubuntu remains able to play well with both apt and snaps, and it stays out of other well-supported distros, I am happy enough that they exist. Even if Canonical themselves stop packaging much, I’ve no doubt the community will, and of course Debian’s baseline should remain available.

I will admit I am currently using Ubuntu (and Mint/Fedora/Suse, probably some others too) and it’s thrown a few snaps at me. And I dislike that it isn’t clear you are getting a snap with apt. That is damn cheeky and really not proper behaviour for a Linux distro!

“These sort of things that make Linux easier to use for the non-techie always seem to suffer from blowback from the community… Which is stupid – if it gets more people using GNU Linux there will be more money to pay developers so Linux will get better for everyone!”

More money?

https://staltz.com/software-below-the-poverty-line.html

New to the “patreon economy”? The vast majority of projects on patreon in general are panhandling amateurs whose projects are an excuse to collect donations for a little bit of extra beer money, with little commitment to finish anything. It’s about monetizing your hobby.

I was thinking more that as Linux becomes a household name, companies etc. won’t default to paying Microsoft/IBM etc. to support Windows every time; they will go to Red Hat/Canonical etc. more often…

If more folks want to run Linux desktops then game development houses and publishers will put more investment into the tools they want and put pressure on Nvidia to open up their drivers for example.

It’s getting people to think Linux might be for them, rather than always defaulting to Windows/Mac, that will bring in much of the development funding. And the companies being paid to provide the services or making money from users have an interest in improving things. The nature of Linux means that no matter how bad those companies might become, much of the work they do will benefit every distro and the community as a whole – it’s hard for them to avoid it even if they tried!

Okay, first everybody stop saying “distro” like car guys should stop saying “tranny”.

Face the facts. Red Hat and Red Hat Certification = Gold Standard. Red Hat Certification started out as “If you can install Linux, you’re certified.” It’s now an excruciatingly difficult test that smarter people than me fail. Over the course of two days.

I’d just like to interject for a moment. What you’re referring to as Linux, is in fact, GNU/Linux, or as I’ve recently taken to calling it, GNU plus Linux. Linux is not an operating system unto itself, but rather another free component of a fully functioning GNU system made useful by the GNU corelibs, shell utilities and vital system components comprising a full OS as defined by POSIX.

Many computer users run a modified version of the GNU system every day, without realizing it. Through a peculiar turn of events, the version of GNU which is widely used today is often called “Linux”, and many of its users are not aware that it is basically the GNU system, developed by the GNU Project.

There really is a Linux, and these people are using it, but it is just a part of the system they use. Linux is the kernel: the program in the system that allocates the machine’s resources to the other programs that you run. The kernel is an essential part of an operating system, but useless by itself; it can only function in the context of a complete operating system. Linux is normally used in combination with the GNU operating system: the whole system is basically GNU with Linux added, or GNU/Linux. All the so-called “Linux” distributions are really distributions of GNU/Linux.

If you find the above disturbing and wonder what you can do to fight this rampant abuse of the cut and paste function and spurious posturing, why you can just support a linux that has Gnu stripped out as much as possible…

https://www.alpinelinux.org/

Or you can support porting efforts of the kernel to clang, use busybox, live life how it should be since you freed yourself from Norton/MSDOS

>if it gets more people using GNU Linux there will be more money to pay developers so Linux will get better for everyone!

counter argument: WINDOWS!

For some reason money seems to only make things worse.

That’s down to the cultural and license differences – the world of Windows is closed off. If you want something new, you have to work around whatever crap M$ documentation would tell you, and you can’t update, change or streamline anything; you have to get M$ to do it for you. Which only happens if they feel it’s in their interests, not yours.

But anything you want to do on a GNU Linux system you can (assuming you have the time and skills). So if it’s in your interests to improve the Bluetooth stack, or how X interacts with GPUs to make something like the GPU hot-swapping some laptops can do work, you can. And if you did it right, then odds are very good every other GNU Linux system will move to your improved version and further improve it to meet others’ needs!

Equally important, if some other group produces something that breaks what you were doing, you don’t need to use it at all, whereas when M$ forces broken updates on you, the best you can do is delay them…

This https://bugs.launchpad.net/ubuntu/+source/libreoffice/+bug/1729821 is a 2½ year old bug in the LibreOffice snap that makes opening documents on an SMB share impossible. The deb version just works.

I’m just not sure about this snap. It feels like there are just too many things that need to go into a snap to get it to work. And no one looking at the bug reports.

I am sure a lot of people will find a new distro after this move, and some will actually start using Ubuntu because of this. Mint is probably as ‘plug-and-play’ as Ubuntu is/was. I use Debian BTW

I definitely suggest Mint for anybody wanting to try Linux, or who doesn’t want some of the bloat that Ubuntu comes with. It’s a very nice user experience that usually makes running a Linux-based system easier than running Windows.

After installing Mint, be sure to run:

sudo apt purge snapd

Mint still does not fully support the more secure Wayland, while many other distros do. Mint said they should have full support in 2026. Install Plex on Mint as a new user and they are lost when they cannot figure out Linux’s permissions to give Plex access to folders, and then they end up with issues where Plex is unable to locate or use USB media. Install Plex on Ubuntu with snap and everything works without issues; the only thing you may have to do is open TCP port 32400 if ufw is enabled, which is much easier. Snaps and even Flatpaks will make things easier for everyone as time goes on, and I use both since I have had issues with a few Flatpaks.

I invite people to examine the three-year trainwreck which is the ‘Can I have a way to disable automatic updates?’ thread on their forum:

https://forum.snapcraft.io/t/disabling-automatic-refresh-for-snap-from-store/707/274

It’s not so much that the snap devs don’t want to implement it, but their attitude is NO YOU ARE WRONG FOR WANTING SUCH A THING. There’s just a flat refusal to admit that use cases other than the ones they thought of exist. That… does not sit well with me from people who are implementing a package manager.

Oh, and it insists on installing things in ~/snap (no dot) and you can’t change that default either.

Yes, this forced update policy is just as big a deal to me as Canonical controlling the only source of distribution.

While for most things, and probably for most less techie users, an always update is fine, there are times when the update changes functionality in a way I don’t want. In that case I want to lock the old version on my system.

And the snap (no dot) thing is an additional minor annoyance I’d live with, but not the other 2.

Snap upgraded helm silently from v2 to v3 on my desktop, causing mayhem on my Kubernetes system – this affected all my work colleagues and we had to reconstitute the development system from scratch. Then I discovered that I could not pin helm to v2 because it was a snap and Ubuntu refused to allow this on a matter of principle.

The irony is that helm is a golang program – i.e. it is already a statically linked executable. I moved to Pop!_OS, which supports native and Flatpak packages (and you can choose when installing), and snap is removed. We now always install from the provider (all our tools are written in golang) rather than from apt, and use Docker for more extreme cases where deps are required.

They harm reproducibility, they are absolutely unsuitable for Docker containers, and, well, they are just a very wrong thing to do. Very disappointed that Canonical went this way, and now in search of a better baseline distribution.

I like the AppImage concept a lot more.

While snap ships basically all libraries an application needs, AppImage chooses some distribution as the “oldest supported”, and only ships anything that is not available & installed by default there. It also avoids all the filesystem mounting mess and having to have a root-privileged daemon, by having a bit different mechanism for bundling the libraries.

The tradeoff is that a snap is more universal, while AppImage works only for a limited time range of distributions. But even snaps will be limited by how e.g. graphics APIs and kernel interfaces change.

Or have I misunderstood? Is AppImage actually also using a suid helper for mounting a filesystem image?
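The bundling rule described in the comment above boils down to a set difference: ship only what the oldest supported baseline does not already provide. A minimal Python sketch, assuming you already have both library lists; the names below are hypothetical examples, not taken from any real AppImage or distribution.

```python
"""Sketch of the 'bundle only what the oldest baseline lacks' rule.

Assumption: both lists are already known. APP_NEEDS and OLDEST_BASELINE
are hypothetical examples for illustration only.
"""

from typing import Iterable, List


def libs_to_bundle(app_needs: Iterable[str], baseline_has: Iterable[str]) -> List[str]:
    """Ship only the libraries the oldest supported baseline does not provide."""
    return sorted(set(app_needs) - set(baseline_has))


def libs_to_bundle_all(app_needs: Iterable[str]) -> List[str]:
    """For contrast: ship every library the application needs."""
    return sorted(set(app_needs))


# Hypothetical dependency lists.
APP_NEEDS = ["libc.so.6", "libstdc++.so.6", "libQt5Core.so.5", "libfancy.so.2"]
OLDEST_BASELINE = ["libc.so.6", "libstdc++.so.6", "libz.so.1"]

if __name__ == "__main__":
    print(libs_to_bundle(APP_NEEDS, OLDEST_BASELINE))
    print(libs_to_bundle_all(APP_NEEDS))
```

The trade-off the comment mentions falls out directly: the smaller the baseline you assume, the more you have to bundle, and the further back in time the package will run.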

I use AppImage for Cura. It’s pretty bad. Not sure if it’s worse than snap.

I run Cura via AppImage as well. Why do you say that it’s pretty bad? Other than taking a while to load, I haven’t really had any issues…

I like static linking better, if you **really must** rely on a specific version of a library. Otherwise, compile against older libs to be able to share in more places.

I came to say the same thing.

It seems like every problem snaps solve could be better solved with static linking combined with an application “home directory” in /opt.

You forgot to mention Flatpak and other valid alternatives providing a similar set of benefits and drawbacks.

I am surprised there was no mention of Flatpak, as it has the same performance trade-offs, but you can pick and choose what you want to install, and many are built on the excellent freedesktop-sdk and have their sources published.

Did you forget to read? Both Flatpak and AppImage are mentioned.

Canonical reminds me so much of Microsoft in the early days. They’ll do sleazy things to break compatibility in a way that helps them control the market. Unlike Microsoft, they don’t have a monopoly so it’s difficult for them to get away with it. However, it doesn’t prevent them from trying.

Oh God, not this again. Name one DOCUMENTED API that Microsoft broke.

The removal of int 21 and realignment of lparam bits into wparam doesn’t count since 1) it’s a different subsystem and old code still works under the win16 subsystem and 2) they told everyone years in advance it was going to happen.

Now go read Raymond Chen’s blog and see the lengths that Microsoft goes through to ensure compatibility on new versions of Windows. This includes appcompat shims that reproduce buggy behaviors.

Undocumented APIs were undocumented for a reason – the company was not committed to maintaining them long term. Programmers of that era were lazy and foolish to use them. I, for one, never did.

You mean like “DirectX”? From Win95 all the way up to today, we have to download drivers every few months due to changes in the underlying OS. Win10’s been the worst about this, but I remember the day an XP update hit and I had to buy a new video card. It didn’t need to be fast. Its only purpose in life was to attach to a composite video TV, but Microsoft broke it and there was no new driver.

You’ve chosen a particularly bad example. DirectX 12 is the latest but DirectX 9 applications from 2002 are still fully supported in Windows 10 today, 18 years later. I still occasionally play Unreal Tournament 2004 (which I bought in 2004) on my Windows 10 box.

The video card problem you’re running into? That’s on the video card OEMs, who have very, very bad track records of doing some of the worst prohibited, undocumented, lazy things in their drivers, or simply stopping support for cards not because of technological reasons but because they want you to buy their latest product. My friends in the video game industry have told me too many stories of finding video card drivers doing the most stupid things and breaking their games.

I personally had a laptop whose GPU OEM dropped support when a new version of Windows came out, but it turned out that if you changed a string in the driver install files for one of their newer GPUs, the old GPU worked just fine. The OEM just didn’t feel like supporting the older chip anymore.

Yah. Like I posted on the Chromebook = Netbook article today too. I hate sandboxes!

So, I upgraded my daughter from a Raspberry Pi to a full Desktop with Kubuntu so that she could run the latest Minecraft Java edition. We actually had that working on her Pi (no, not just the Pi edition) but after version something or other they dropped 32-bit support.

Well, anyway, the only way I could get it running on Linux was by using snap. There were a few very long tutorials for making it work without snap but none worked for us.

So, using Snap means that all her worlds are stored in some arcane folder deep down in the snap’s filesystem instead of the nice, obvious ~/.minecraft folder where they lived before. I had to move them. I tried a symlink but it wouldn’t work.

She has been building those worlds for years, some we played together and she is attached to them. Now I’m afraid that the next time we go to upgrade I might not be able to find them again!

I don’t know, maybe I should try Minecraft in Wine. It’s getting to where one has fewer things getting in the way running a Windows program in Linux than when running anything actually built for Linux. At least it’s not that hard to remember that everything in Wine’s “C: drive” is at ~/.wine/drive_c and windows programs can see the home directory or even the whole directory tree at h:/ and v:/.

Just install an older JRE and MC will run just fine.

Another big problem with snaps is the way they totally ignore your theme customisations. This is not just about looking pretty, but about usability. For example, I have to use bigger fonts than standard and a red mouse pointer so I don’t lose it. But snaps say f.you, we know better than you what you need. No thanks.

That I didn’t know. I assumed snaps would respect your baseline, as there is no reason they shouldn’t. Can you at least set up each snap, or snaps in general, separately?

I believe 20.04 LTS has made some progress in this direction, but it’s still not working as the user would expect, i.e., using the settings you have already set so there is no difference between snap apps and “normal” apps. (I’m still on 18.04 LTS with the snap system uninstalled.)

My problem with snaps is the security around them. I got to a point where I had ffmpeg as a snap working with my NVidia card, but it couldn’t operate on files in… NFS shares? There was no way to disable that limitation.

“But for users concerned with the ideology of free and open source software, it’s seen a dangerous step towards the types of proprietary “walled gardens” that may have drove them to Linux in the first place.”

Yeah. Yeah. Android and iOS, but can it really be called proprietary if one can get everything from the source? Seems people just want to stretch words where they don’t apply. This is open source that even Microsoft couldn’t bring down. And yet we use the language of victims like “walled garden” and “proprietary” when the very keys to our freedom are written in legalese like the GPL.

The Snap Store is literally proprietary. The software you are installing may be open source, but it’s being packaged and distributed by a single company with no transparency.

“Mint founder Clement Lefebvre made it very clear that the Ubuntu spin-off does not approve of the new package format and wouldn’t include it on base installs.”

“Further, he announced that Mint 20 would actively block users from installing the snap framework through the package manager.”

Those are not entirely correct. They don’t disapprove of Snaps by themselves, they disapprove of snaps and the entire snap subsystem being installed invisibly. The intention is not to block users from installing snaps from the package manager but rather to stop the package manager from installing snaps behind-the-scenes.

From linked blog post:

“This breaks one of the major worries many people had when Snap was announced and a promise from its developers that it would never replace APT.”

“In Linux Mint 20, Chromium won’t be an empty package which installs snapd behind your back. It will be an empty package which tells you why it’s empty and tells you where to look to get Chromium yourself.”

That is the key. They’re not against snaps themselves, they’re against snaps breaking existing expectations _without notice_.

Did you actually read the article, or just get two paragraphs in and write out this unnecessary comment?

The rest of the article doesn’t change that it starts with incorrect statements.

Maybe you’re correct that I didn’t need to include the quotes from the Mint blog, since they were in this article, but they are the evidence that the quotes from this article are incorrect, so I restated them for clarity.

The point is that you picked out a single sentence to try and make it sound like the article says something it does not. So either you didn’t read the article, or you are trying to further some kind of narrative to discredit it. Which one is it?

You’re also wrong, BTW. Clem absolutely does not approve of Snaps. The Chromium package was the final straw, but even before that he had explained why he considers the idea bad for Linux.

You coincidentally didn’t quote those parts from the blog though, which kind of answers that first question.

Bad idea, but not bad enough to unsupport it. Until promises were broken.

Doesn’t change that it’s kind of sensationalist to open with those stretches, when the actual immediate cause of the sudden change was the broken promises and doing things behind the scenes, not _just_ the subsystem itself.

Wouldn’t this lose all the benefits of dynamically linked libraries? That the common files are only loaded once, and basically live in cache?

(sarcastic) That’s the whole beauty of snaps, Flatpaks, and heck, even containers. They duplicate all of the dependencies, but still load them dynamically, giving them all of the size benefits of static binaries, but with the security benefits of DLLs! (/sarcastic)

(OK, there’s some clever library sharing going on with containers, which helps out on big systems.)

My understanding of the “benefits of DLLs” is that it saves disk space, at the expense of dependency hell and a can of security worms. (OK, maybe also cache memory if two resident progs use the same libs.) But are disk space and memory really the constraints anymore? Or is it usability, ease of install, and security?

Static 4 lyfe!

On a desktop computer, no. On a RasPi, other SBC, or more limited computing application hellz yes! Using vast amounts of memory and disk space because “eh, it’ll be there anyway” is a stupid decision.

> Or is it usability, ease of install, and security?

Why is that an either/or question? Limiting bloat doesn’t have to come with worse usability, ease of install, or security.

> But are disk space and memory really the constraints anymore?

This question is as old as the concept of personal computers. Even the 486 folks tried to tell the 386 folks: “oh, with 2 megabytes of RAM and endless room on a 60 megabytes hard disk you’ll never have to think about size constraints again!” Today just the Linux kernel chimes in at 60 MiB, compressed, and a simple ‘ls’ would exhaust these 2 MiB RAM.

Disk space and memory always were a constraint and always will be. The reason is simple: disks and RAM grow, but so does software. The ratio between the two has stayed roughly the same for like 30 years (or longer).

Maybe it’s time to move from Ubuntu to Mint.

This reminds me of developing in Node.js. Before you lay down one line of code, your project can already comprise 10k files over hundreds of MB.

Very strange “we know best, bug off”+”newer is always better” BS philosophy.

Not really, more like “Hey, don’t do stuff behind our backs that you previously promised not to do.”

Snap/Ubuntu devs said snap would never replace apt, yet Mint has shown that some apt packages have been “upgraded” to install Snaps behind the scenes. That’s what they’re pissed about.

Not really what? It’s not strange? It’s not BS? It’s not a combination of those two philosophies?

Linux shit the bed when it started on packages. Back in the good old days, you compiled everything yourself.

I get a kick out of people wanting conflicting ends. Many people have moved to Linux for the security and lack of viruses that seem to plague Windows.

So, in the old days, you were trusting that the source code you were building was sound and that you got an unmolested copy of it. I mean, the ultimate laugh a black hat could get would be for you to compile the virus, malware, or back door yourself. Assuming you got unmolested source code, you could at least trust the person building it not to act out of malice: perhaps not to avoid stupidity as far as options and whatnot, but at least not to act out of outright malice.

Then came the distros. They of course did not play well with any user-built software, as they did not know what pieces you had built as opposed to what pieces you got from them. And of course you were totally trusting them to build the packages they claimed they were building and to give you unmolested binaries from unmolested source code. The IQ of the Linux user could now start to spiral down to the same level as your average PC or Mac user.

People of course needed freedom, dammit, so the Linux distros had to start letting people point at other package libraries, and some hell broke out over this on occasion as far as dependencies and whatnot not having consistent names or places they lived. Not to mention you have yet another group of people building your software for you, and you are trusting them. God only knows who they are. A friend got the line to add to your repositories file from the internet, so it must be safe, right?

And now, we come damn near full circle. Essentially static linked packages, or if they are dynamically linked, they have everything they need along with them. You pay for the size overhead. A lot of people for a long time were wanting just such a thing after getting stuck in non resolvable dependency loops. Right now at least you know the folks behind the linux distro are the folks building it, and hopefully you get an unmolested piece of software. Of course the freedom lovers want to be able to bring in the new packages from other sources. I don’t see much difference between this and grabbing packages from other sources.

Is it sneaky having it on by default? I dunno. I have not installed Ubuntu in a long time. Have you? Really. Is there a check box or a switch to tick off? I suspect it should be something that can be turned off. On the flip side, for a lot of Linux users out there these days, turning it on makes things “just work”. And I suspect the snap packages can be uninstalled if you want to return to fighting with the dependencies. I don’t think it is a bad thing. The cat is already out of the bag. It has been for decades. Someone has just realized there is a way to maintain packages on a much simpler, though wasteful, level.

The good news is you can still go as far as downloading the source for most packages, or playing the prebuilt package and dependency game. This does not stop that. It just gives more PC-like users a faster and more reliable solution.

The world has moved to Linux for a lot of reasons, not just the lack of viruses. It is the most common operating system in the world (yep, I include Android).

Er… package managers, which are just one of the things that make Linux distributions better than other OSes!

You rightly said that Snap does not preclude users (in cases where the software is available in traditional and open-source forms) from downloading the software and managing the dependencies themselves. The reality is that it will lead to bloat and technical debt that the developers will shy away from.

Libraries, libraries, libraries. It always comes back to DLL hell.

Shared libraries are why people were tearing their hair out when building applications themselves in Linux. Application ‘A’ wants at least version 5 of library ‘X’, and Application ‘B’ has never heard of version 5, and won’t even try to use anything newer than version 3. And of course, library ‘X’ versions 3 and 5 can’t coexist on a system due to incompatible header files, or some such oversight. This is where package managers, and packages themselves came from. I remember having to go through a complicated procedure to install Kdenlive (a non-linear video editor) in Ubuntu, in which all of its dependencies were installed in subdirectories of Kdenlive’s build directory. And then there’s Firefox, which appears to hide all of its dependencies, avoiding using the system-installed libraries. But at least Firefox handles all of this transparently in its installation.
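The clash in that example comes down to two acceptable version ranges that don’t overlap, so no single system-wide copy of library ‘X’ can serve both apps. A minimal Python sketch, assuming each application simply declares an inclusive lowest and highest major version it will accept; the app names and ranges are hypothetical, taken from the ‘A’ and ‘B’ example above, and real package managers use much richer constraint languages.

```python
from typing import Optional

# Hypothetical constraints: app name -> (lowest, highest) acceptable
# major version of library 'X', both ends inclusive.
Constraints = dict[str, tuple[int, int]]


def common_version(constraints: Constraints) -> Optional[range]:
    """Return the versions every app accepts, or None if there are none."""
    low = max(lo for lo, _ in constraints.values())
    high = min(hi for _, hi in constraints.values())
    # With one shared copy of the library, a single version must fit everyone.
    return range(low, high + 1) if low <= high else None


if __name__ == "__main__":
    apps = {
        "A": (5, 99),  # wants at least version 5
        "B": (1, 3),   # won't use anything newer than version 3
    }
    print(common_version(apps))  # None: no single shared copy satisfies both
```

If ‘B’ accepted versions up to 6, the same call would return `range(5, 7)`. Bundling a private copy of the library with each app, as snaps do, sidesteps the empty intersection by not requiring one at all, at the cost of duplicating the library.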

Apple thought they could get around this by installing applications as bundles, i.e., directories containing the applications themselves along with their dependencies. Which, as reg says, makes their shared libraries act like statically-linked libraries, since every application ends up linking to its own version of a given library, with the twin consequences of a) requiring much more space both on disk and in RAM, and b) causing many applications to be using obsolete libraries, and not being able to take advantage of updates to libraries.

And I’ll pause right here to say, it looks like this is how snaps tackle the library problem.

But then, someone at Apple saw the folly of this and came up with something called Frameworks, which from all I can see is another name for shared libraries, but they are stored in a system directory instead of in the application’s own bundle, and multiple applications using the same Framework share the same object modules at runtime. You know, like shared libraries. Which is good, right? Well, it’s good as long as the Frameworks are properly maintained. But by the way, not everybody was happy with Apple’s approach, and not every application developer wanted to make a Mac version of their app, so we also have brew (Homebrew), which bills itself as “the missing package manager for macOS”. It attempts to do Linux-style libraries in macOS and maintains its OWN directory for shared libraries, separate from both the system’s directory for Frameworks AND the traditional Unix /usr location.

So is this going to be the future of snaps? Will we see the proponents of snaps recognizing that there actually is some advantage to shared libraries, and coming up with some kind of uber-snap that re-implements these in another layer?

Reminds me of when Docker came out and the never ending battle between those who write, and those who deploy and maintain.

They already did: the snap environment is expandable with other base snaps.

For instance, here’s Wine:

https://snapcraft.io/wine-platform-runtime

PS. Something I didn’t see much mention of in this discussion is that Snap (and Flatpak and AppImage) also function as a sandbox. It’s not just about dependencies.

This. Yes. And then it’s like shared libraries, but everything is more bloated, slower and much more confusing. Well, at least we have sandboxed applications then (most of those with old, vulnerable libraries).

Sorry, I’m being mean.

Linux From Scratch is a thing, and you can run it if you want to.

I tried LFS years ago. In the end, packages won, because ultimately one wants to get something done rather than spend endless hours typing “./configure && make && su -c 'make install'”.

APT was perfect; LFS is for hobbyists.

Faster? Definitely not (Bigger downloads, more disk space, longer startup times).

More secure? Not so convinced yet (Sandboxing, but possibly vulnerable libraries).

More reliable? Why should it be? It all comes down to package maintainers again.

It is not just a case of resource limited development teams being able to release software with less testing as well as being compatible with a wider range of platforms – It is shitty development philosophy!

Waterfall is a Linear Sequential Life Cycle Model, whereas Agile is a continuous iteration of development and testing in the software development process. Agile means that they will get it right eventually (hopefully) with enough time and resources (and probably a shit load of retrospectives). Snaps allow for technical debt, which equates to either ill-thought-out solutions or resource-limited teams deploying unfinished software. Can you imagine the design team of a heart and lung machine company iteratively deploying a machine that just has to work, and being in a position of technical debt?

For some strange reason, no developer will agree with the above.

Not sure what this has to do with snap, but I’ll bite anyway.

Agile development is used in the complex (not the complicated) problem space to “hit a moving target” which most modern products and software are. The machine you’re describing is complicated, but not complex, so it might be better to use a different development framework / method. Also, when you’re saying agile development comes with less testing and accumulating technical debt built in, you’re very wrong. The contrary is true if you do it correctly. You seem to have some painful experience with agile methods and I feel sorry for you, because it does not have to be this way.

Last I checked snaps don’t support extended attributes and are therefore unable to run under many modern linux security systems.

If the snap includes all the libraries that you need to run the program, and all programs have their own copy of the libraries, what happens when a serious bug is found in one of the libraries? If you have the libraries installed in one place, you simply update the library. If you have each snap with a private copy of the library, then now you have to update all of your snaps in order to make sure you’ve gotten rid of that bug.

Positives and negatives there: bug fixes and updates to a lib can equally break stuff in some programs too.

Still don’t like snaps though, and a local copy in each snap isn’t the only way around version incompatibilities.

Well, at least LD_PRELOAD still seems to work, but your library’s target arch and the snap’s arch have to match, and a snap can freely have any older arch. So running with a locally patched library systemwide as usual became less trivial.
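One quick sanity check before preloading a patched library into a snap’s binary is to compare ELF headers: byte 4 of `e_ident` (EI_CLASS) is 1 for 32-bit and 2 for 64-bit, byte 5 (EI_DATA) is 1 for little-endian and 2 for big-endian, and the 16-bit `e_machine` field at offset 18 names the CPU (for example 3 for i386, 0x3E for x86-64). A minimal Python sketch that reads only those fields; the function names are hypothetical, and matching headers are necessary but not sufficient, since the dynamic loader still has to resolve everything the library itself depends on.

```python
import struct

ELF_MAGIC = b"\x7fELF"


def elf_signature(header: bytes) -> tuple[int, int, int]:
    """Return (EI_CLASS, EI_DATA, e_machine) from the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != ELF_MAGIC:
        raise ValueError("not an ELF file")
    ei_class = header[4]  # 1 = 32-bit, 2 = 64-bit
    ei_data = header[5]   # 1 = little-endian, 2 = big-endian
    fmt = "<H" if ei_data == 1 else ">H"
    # e_machine follows the 16-byte e_ident and the 2-byte e_type.
    (e_machine,) = struct.unpack_from(fmt, header, 18)
    return ei_class, ei_data, e_machine


def read_signature(path: str) -> tuple[int, int, int]:
    with open(path, "rb") as f:
        return elf_signature(f.read(20))


def can_preload(library: str, program: str) -> bool:
    """True if both files share word size, byte order and target machine."""
    return read_signature(library) == read_signature(program)
```

For example, `can_preload("/path/to/libpatched.so", "/snap/<name>/current/usr/bin/<prog>")`, where both paths are placeholders for your own patched library and the snap’s executable.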

Easy on Ubuntu and totally recommended: Uninstall snap (https://askubuntu.com/questions/1035915/how-to-remove-snap-store-from-ubuntu), block snap installs (https://askubuntu.com/questions/75895/how-to-forbid-a-specific-package-to-be-installed), install flatpak, the flathub repo and the gnome-software plugin (https://medium.com/@uncertainquark/how-to-install-flatpak-apps-on-ubuntu-20-04-lts-6c3f632cc605). Be happier.

Packaging all dependencies for each package is not just inefficient due to the hugely increased disk usage, it will also create a huge patching problem in case of security vulnerabilities. Let’s assume there is yet another critical vulnerability in a popular library such as OpenSSL. With traditional packaging, Ubuntu could just push out an updated openssl package for all supported versions (maybe 3 or 4 versions at a time) and all applications will automatically use the updated library. Now every single snap-based application will come with its own libraries and there may be hundreds of different snap packages containing a vulnerable library and all of them need to be updated manually by their developers.
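The difference is easy to model. A toy Python sketch, assuming a made-up set of applications that either use the system’s shared OpenSSL or carry their own bundled copy; the app names and the "old"/"patched" version labels are hypothetical. Updating the one system package fixes every app in the first group, while each bundled copy stays vulnerable until its own package is rebuilt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class App:
    name: str
    bundled_openssl: Optional[str] = None  # None means "uses the system library"


def effective_version(app: App, system_openssl: str) -> str:
    # A bundled copy shadows whatever the system provides.
    return app.bundled_openssl or system_openssl


def still_vulnerable(apps: list[App], system_openssl: str, bad: set[str]) -> list[str]:
    """Names of apps whose effective OpenSSL version is in the known-bad set."""
    return [a.name for a in apps if effective_version(a, system_openssl) in bad]


if __name__ == "__main__":
    apps = [
        App("mail-client"),                       # shared system library
        App("web-server"),                        # shared system library
        App("chat-snap", bundled_openssl="old"),  # private bundled copy
        App("editor-snap", bundled_openssl="old"),
    ]
    # The distro ships a patched shared package: only bundled copies remain.
    print(still_vulnerable(apps, system_openssl="patched", bad={"old"}))
    # ['chat-snap', 'editor-snap']
```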

Yep. And the whole thing makes those security problems even worse in practice, because it encourages upstream projects to get lazy about integrating newer versions of libraries… or even to fork libraries.

Just say no.

Which is exactly what LMDE is – Linux Mint Debian Edition. Mint’s efforts to build the same user experience but without the Ubuntu layer in the middle. It’s their Plan B should Canonical ever make unscrewing the Ubuntu base too difficult.

Give it a try: https://www.linuxmint.com/download_lmde.php

Well, time to find and install a new distribution I guess

“the snap packager installs from a proprietary Canonical-specific source. If you want to distribute snaps, you have to set up an account with Canonical and host it there.”

This is not true: you can download a snap file from anywhere and install it with the --devmode option. We distribute our app this way, and it works fine. I don’t like the idea of lock-in either, but snap as a system doesn’t mandate this.

We had issues before with AppImage (and other package systems) not working in some distros, or certain versions of distros. And while snaps are huge, it does mean that everything the end user needs is in one installer. From a dev point of view, that also means all users are running the app with the exact same versions of the dependencies, so we see far fewer bugs.

Snap already lost me as a user 10 seconds after I installed it and noticed that it just made its home at /snap in the root directory.

That already says it all about their relationship to unix traditions.

Snap uses far less space than the article suggests. The article assumes that a snap takes up the amount of disk space reported by the mounted path, but in general it’s far less, because snaps are heavily compressed. The values reported are the uncompressed sizes, which never actually make their way to your filesystem. This is explained in the Snap docs https://snapcraft.io/docs/system-snap-directory

I’m afraid you’ve misinterpreted that page from the docs. The size of the filesystem image is accurate, what’s larger is the directory it gets mounted to.

In the case of youtube-dl, the downloaded snap file is 92 MB and the expanded filesystem has an apparent size of 285 MB.
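For the youtube-dl figures above, 285 MB / 92 MB works out to roughly 3.1x between the apparent size of the mounted tree and the compressed image. To check a snap on your own machine, a small Python sketch that compares the two; it assumes the usual Ubuntu layout, where snapd keeps the image at /var/lib/snapd/snaps/<name>_<revision>.snap and mounts it at /snap/<name>/<revision>, so adjust the paths on distros that mount snaps elsewhere. The example name and revision are placeholders.

```python
import os


def apparent_size(root: str) -> int:
    """Sum of regular-file sizes under root (apparent size, not disk blocks)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks so their targets are not counted twice or outside root.
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def expansion_ratio(image_path: str, mount_point: str) -> float:
    """How much larger the mounted tree looks than the compressed image file."""
    return apparent_size(mount_point) / os.path.getsize(image_path)


if __name__ == "__main__":
    # Placeholder paths: substitute a real snap name and revision.
    image = "/var/lib/snapd/snaps/youtube-dl_1234.snap"
    mount = "/snap/youtube-dl/1234"
    if os.path.exists(image) and os.path.isdir(mount):
        print(f"{expansion_ratio(image, mount):.1f}x")
```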

Is that why snaps start so horribly slow?

Bottom line is Canonical is a company and they need to appeal to a wider user base than just command-line ninjas. While there is a sacrifice, my whole family can install software in Ubuntu. I don’t have to fix stuff ever now. For the foreseeable future there will be distributions for those that have the skill set to compile from source. I don’t see that going away with groups of people like the readership here. It seems natural to me that Linux would evolve into simpler, clunkier versions for the masses and no-frills power-user distributions to push development.

Sorry for reporting. Will use that other button in the future ;)

Well, Canonical could have just used Flatpak and been done with it (with NO drawbacks for users compared to snap, as far as my experience with Flatpak goes), but they chose to implement a proprietary snap server for whatever reasons.

As a non-programmer I would ask:

So what is the advantage of snap over a statically linked binary? Why would we want to deploy software with dynamically linked libraries as a heavily compressed package of all the libraries and the software, when we could link everything statically and provide a binary? I think this is how SciLab used to be deployed for years.

Statically linking just means you put the object code from your libraries into your binary, versus grabbing it externally from a shared library (dynamic linking). All snap does is bundle the dynamic libraries together with the application, so that it doesn’t have to rely on the OS to provide them.

Suppose a security vulnerability is found in a common library that your application uses. If it’s statically linked, then the only way to patch the vulnerability is to recompile the application with a new version of the library, distribute it, then have users update their app. With dynamic libs, the idea is that all a user has to do is pull in the updates from their package manager. No action is required on the part of the app maintainer (in most cases).

And as I understand it, that is a weak point of snap as well as Flatpak or AppImage. There are some applications where those libraries will never get updated, because the maintainer just doesn’t update them. This is a drawback. What’s left as an advantage, IMO, is the sandboxing features that most people find annoying and that are a real problem with some applications (e.g. Firefox / Chrome not being able to talk to KeePassX in my case).

The main reason I dislike snap packages is the additional security requirements they impose. It gives you annoying windows-style security popups when saving files. (You know, the security popups that become so frequent that it trains you to click through them without reading them.) These popups in the Chrome snap are completely redundant with Chrome’s own save-file dialog.

Additionally, it can be difficult accessing files outside your user folder. I had to ditch the VSCode snap because I couldn’t edit anything in my root-level /work folder despite owning the folder and all files. It also made modifying the app impractically difficult.

The politics around snap sucks for sure, but there’s enough usability reasons alone to avoid it.

Useless. Doesn’t work with Slackware.

(note for the hipster reader, Slackware has nothing to do with the bloated corporate Slack IM chat tool you’re using).

Slackware has nothing to do with snaps either. make install is your true way.

In marketing it is ‘supposed’ to be customers first. Here, snap packages sounds more like developers convenience first, customers last.

So far I haven’t seen a good enough reason to jump to a ‘snap’ style application architecture.

Ubuntu and Canonical are dead to me because of this. Never going back.

I love Linux (Ubuntu) and would not be using it if there were no snap. I’m new to Linux and don’t know how to use the terminal.

No. As you’re click-installing software from the Software manager application you probably don’t even know or care if you’re installing packages from a repo or snap (or flatpak which integrates 100% the same as snap into the Software manager). And you shouldn’t care as a new user, but you should care as a person that values FOSS.

We are interested in Debian!! :D

> Rather than building versions for each supported release of Ubuntu, they can push out a single snap that will work on all of them.

Well, yes, but actually no. Snap has features of its own, and an older snap will not be able to launch newer snap packages.

Also, Flatpak aims to be supported (and maintained) by every distro out there, while Snap is Canonical-only.

Every time they do something alone, they kill it some years later (Unity, Mir, …) and adopt its competitor (GNOME, Wayland, …).

Very good article, still it misses a few crucial points:

1. Snaps are advertised to make packaging easier for developers. It’s true, building APT packages is a pretty complicated and stubborn process (e.g. insisting on changelog entries and version updates for test builds). But the right solution is not to replace APT packages, but to make building them easier.

2. Satisfying a diversity of distributions with a snap package outright removes this diversity. If every distribution version comes with the same set of snaps, they’re no longer distinct. Snaps aren’t the right solution, reducing diversity would be. If the latest version of a distribution is always stable, there is no need for “LTS” versions. Make your development cycle more robust and reliable!

3. To get latest versions of packages on an older distribution there are PPAs, Personal Package Archives. Ubuntu (still) supports them nicely, even with a build server, and adding a few of them to the local installation as needed works extremely well. They just integrate with the regular upgrade process, zero additional hassles.

One common thread of the desktop Linux community is that nobody can agree on anything. It’s so fragmented, with different ways of doing something, that it really discourages developers and users. Seems to me that if Snaps are rejected by many Linux distros, then they become yet another problem rather than a solution.

Geek people have the Terminal to install things, and after so many years we have a store. Why are they jealous? Nobody’s deleting the Terminal or APT from Ubuntu! Snap is just a good way to install things for non-geek people like us, so why don’t they want it to exist? Do they want to be the only kind of people who use Linux?

Actually, they _are_ removing APT packages from Ubuntu (as the article explains). And from the user perspective, clicking an APT package is pretty exactly the same procedure as clicking a Snap package.

Snap is marketed and designed as developer convenience and that is it: zero advantages for users, even the App store was there before.

Snap is a cure that is worse than the disease.

I install Ubuntu biannually, so I’m sufficiently up-to-date anyway. Snap is just bloat, hogging bandwidth and disk space. I do not want snap automatically updating my packages. As far as I am concerned, dependency management is already a “solved” problem: it’s called a distro.

The fact that Ubuntu is the only distro to have adopted it means that it doesn’t actually solve anyone’s problem. It’s also a walled garden, and consequently doomed to fail.

I’m now evaluating Debian Stable. I’ve Firefox, Zoom and Skype working. Everything else is relatively trivial. My gameplan is to use Debian Stable, and compile the few apps that I particularly want to be fresh, like video editors or compilers.

Snap is a terrible solution to a stupid problem. Bloated, inelegant and unnecessary. If software doesn’t run because of diverging libs there are real solutions that make a lot more sense:

1. Reduce dependencies.

2. Static linking (Unused functions are trimmed, people!)

3. Fix the source and recompile (is it not Open Source???)

If this is not enough, then simply delete the unmaintainable garbage and don’t look back.

Snap doesn’t make any sense on a server.

Just realized that docker was installed with snap by default on Ubuntu 20.04.

Just found out about the mess: I had installed docker from the official repo with apt.

Never realized that snap had installed it.

The snap service isn’t properly supported by apparmor.

I ended up with a bunch of odd security related behaviors.

From my point of view with the support work I have to do, snap sucks. Period.

This thing looks half-baked. I have much more pressing issues than debugging an incomplete ‘craft’.

Now I still have 7 servers with a creepy configuration that I need to straighten up.

For today. It sucks. ‘good work’ Canonical.

Either you commit to a package manager or you don’t, and having two different ones at the same time is a bad idea.

Docker in snap? How can even people think that putting a container in a container makes sense. Next time, someone will run program in snap under docker in snap and brag about it as if it’s the next best thing.

Also, there is this hip thing to install a package manager via a package manager that is installed… via a package manager! All those npm’s, pip’s, (ana)condas and whatnots.

I found this article while I was banging my head against the wall trying to install Adafruit Feather support in Arduino. Oh, I need to install this little extra Python tool (via pip… ehm…). Fine, done, the tool works. Oh, wait, how the heck can’t Arduino find it? It’s in the $PATH. Oh, Arduino is installed as a snap. Facepalm.

After an hour of messing around… why the heck is the Arduino snap in strict mode? It cannot run anything from the outside. Nor can you put anything inside (without diving into snap’s guts).

I downloaded the Arduino tarball, unpacked it somewhere… and it just runs fine. No snap needed.

My $DAYJOB is full of handling compilation, linking, hw etc. problems, solving the unsolvable, issues that could be described as putting a round peg in a square hole while standing on my head. I really like that. But this? THIS? When I finally find some time for my hobby? There goes anger management.

I really wanted to like snaps. They make so much sense to me as an end user of Linux. I don’t have time to mess with my system (or, rather, wished I didn’t have to mess with it). Dealing with broken dependencies is a nightmare for someone whose primary work is NOT in information technology. I just want my system to work and I want to be able to install my apps (both open source and commercial) without having to track down why this or that doesn’t work (or worrying about how an update to the dependencies for App A will wind up breaking App B).

apt has worked for me for the pre-packaged items I need, but I find it annoying, confusing, and frustrating when it breaks (on dependencies), which happens more often than I have time to deal with.

I freely admit that I am not a sysadmin and my knowledge is fairly limited so my view comes from an end user perspective. But I have never understood why applications are EVER allowed to modify the system.

As far as I am concerned, installing software is the Achilles heel of desktop Linux, and every time I switch back to my Mac it is because something I was trying to accomplish on my much beefier Linux box turned out to be so difficult that I wound up finishing the work on my much, much slower 2011 Mac Mini.

So snaps seemed like a perfect solution. Until I started using them.

Now, I don’t care that they take up a lot of space (hard drives are stupidly cheap these days, and if I could save even half a day of frustration dealing with installs I would not think twice about dropping $250 on a 2TB NVMe SSD). The slow startup times are frustrating, but I can live with that as well, even though I wish there were improvements there. I hate that I cannot install them to a location of my choosing, but that is also something I can overcome.

But the fact that these apps cannot see my whole filesystem is a deal breaker. I have a setup that has worked for me for years (mounts, extra directories in places that make sense for my work), and snaps simply cannot access those locations. I have to have a directory structure that matches what the snap system expects, and if any files are outside of that… well… they just don’t exist as far as my snap apps are concerned.

I finally switched to Pop!_OS, and it uses Flatpak. So much better in every way (from an end user’s perspective). Installation is stupidly simple. I have yet to find a Flatpak that does not work on my system. I have not had to deal with any dependencies at all – I just install an app and use it… just like $DEITY intended. I can open up parts of my filesystem to be visible to each Flatpak on an individual level.

I only wish more apps were available (especially commercial apps for content creation).

I demand 100% control over my system, and that includes the ability to completely disable any kind of updates or update notifications; this is absolutely non-negotiable and not up for discussion. This push toward Snap is exactly why I dumped Ubuntu after 12+ years and moved to Mint. I won’t be looking back.