The SD card first burst onto the scene in 1999, with cards boasting storage capacities up to 64 MB hitting store shelves in the first quarter of 2000. Over the years, sizes slowly crept up as our thirst for more storage continued to grow. Fast forward to today, and the biggest microSD cards pack up to a whopping 1 TB into a package smaller than the average postage stamp.

However, getting to this point has required many subtle changes over the years. This can cause havoc for users trying to use the latest cards in older devices. To find out why, we need to take a look under the hood at how SD cards deal with storage capacity.

Initial Problems

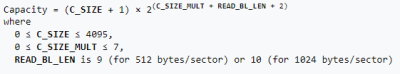

The first issues began to appear with the SD standard as cards crossed the 1 GB barrier. Storage in an SD card is determined by the number of clusters, how many blocks there are per cluster, and how many bytes there are per block. The host reads this data out of the Card Specific Data (CSD) register. The CSD contains two fields, C_SIZE and C_SIZE_MULT, which designate the number of clusters and the number of blocks per cluster, respectively.

The 1.00 standard allowed a maximum of 4096 clusters and up to 512 blocks per cluster, while assuming block size was 512 bytes per block. 4096 clusters multiplied by 512 blocks per cluster, multiplied by 512 bytes per block, gives a card with 1 GB storage. At this level, there were no major compatibility issues.
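Here is that arithmetic as a minimal Python sketch. One detail the simplified description above skips: as we read the SD Physical Layer specification, the fields aren't stored as plain counts. C_SIZE holds the number of clusters minus one, and C_SIZE_MULT is an exponent, giving 2^(C_SIZE_MULT+2) blocks per cluster. The function name `csd_v1_capacity` is our own invention for illustration and doesn't come from any real driver.

```python
def csd_v1_capacity(c_size: int, c_size_mult: int, read_bl_len: int = 9) -> int:
    """Capacity in bytes described by a version 1.x (standard capacity) CSD.

    Illustrative helper only; not taken from any real driver.
    """
    if not 0 <= c_size < (1 << 12):
        raise ValueError("C_SIZE is a 12-bit field")
    if not 0 <= c_size_mult < (1 << 3):
        raise ValueError("C_SIZE_MULT is a 3-bit field")
    clusters = c_size + 1                        # stored as (count - 1)
    blocks_per_cluster = 1 << (c_size_mult + 2)  # 4 .. 512
    block_len = 1 << read_bl_len                 # 2 ** READ_BL_LEN bytes; 9 -> 512
    return clusters * blocks_per_cluster * block_len


# Largest values the 1.00 fields can hold, with the fixed 512-byte block:
biggest = csd_v1_capacity(c_size=4095, c_size_mult=7)
print(biggest, biggest == 1 << 30)  # 1073741824 True
```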

The 1.01 standard made a seemingly minor change, allowing the block size to be 512, 1024, or even 2048 bytes per block. An additional field was added to designate the maximum block length in the CSD. The maximum length, designated by READ_BL_LEN and WRITE_BL_LEN in the official standard, could be set to 9, 10, or 11, with the block size being 2 raised to that power: 512 bytes (default), 1024 bytes, or 2048 bytes. This allowed for maximum card sizes of 1 GB, 2 GB, or 4 GB respectively. Despite the standard occasionally mentioning maximum block sizes of 2048 bytes, officially, the original SD standard tops out at 2 GB. This may have been largely due to the SD card primarily being intended for use with the FAT16 file system, which itself topped out at a 2 GB limit in conventional use.
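Plugging the three permitted READ_BL_LEN values into the same formula reproduces the 1, 2, and 4 GB ceilings. This sketch assumes the maximum field values (4096 clusters, 512 blocks per cluster) and reports binary gigabytes; `max_capacity` is a hypothetical helper.

```python
GIB = 1 << 30

MAX_CLUSTERS = 1 << 12           # C_SIZE = 4095 -> 4096 clusters
MAX_BLOCKS_PER_CLUSTER = 1 << 9  # C_SIZE_MULT = 7 -> 2 ** (7 + 2) = 512


def max_capacity(read_bl_len: int) -> int:
    """Largest capacity (bytes) a 1.01-style CSD can describe for a given READ_BL_LEN."""
    return MAX_CLUSTERS * MAX_BLOCKS_PER_CLUSTER * (1 << read_bl_len)


for bl_len in (9, 10, 11):
    print(f"READ_BL_LEN={bl_len}: {1 << bl_len}-byte blocks, up to {max_capacity(bl_len) // GIB} GiB")
```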

Suddenly, compatibility issues existed. Earlier hosts that weren’t aware of variable block lengths would not recognise the greater storage available on the newer cards. This was especially problematic with some edge cases that tried to make 4 GB cards work. Often, very early readers that ignored the READ_BL_LEN field would report 2 GB and rare 4 GB cards as 1 GB, noting the correct number of clusters and blocks but unable to recognise the extended block lengths.
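The failure mode is easy to model. In this hypothetical sketch, a "legacy" host computes capacity from C_SIZE and C_SIZE_MULT but hard-codes 512-byte blocks, while an "aware" host honours READ_BL_LEN. Both helpers are illustrative and not lifted from any real reader firmware.

```python
GIB = 1 << 30


def capacity(c_size: int, c_size_mult: int, block_len: int) -> int:
    """(C_SIZE + 1) clusters x 2 ** (C_SIZE_MULT + 2) blocks x block_len bytes."""
    return (c_size + 1) * (1 << (c_size_mult + 2)) * block_len


def legacy_host_capacity(c_size: int, c_size_mult: int, read_bl_len: int) -> int:
    # Hypothetical pre-1.01 host: ignores READ_BL_LEN and assumes 512-byte blocks.
    return capacity(c_size, c_size_mult, 512)


def aware_host_capacity(c_size: int, c_size_mult: int, read_bl_len: int) -> int:
    # A host that honours READ_BL_LEN (block length = 2 ** READ_BL_LEN).
    return capacity(c_size, c_size_mult, 1 << read_bl_len)


for read_bl_len in (10, 11):  # a 2 GB card and a (rare) 4 GB card
    card = (4095, 7, read_bl_len)
    print(legacy_host_capacity(*card) // GIB, "GiB vs", aware_host_capacity(*card) // GIB, "GiB")
```

Same clusters, same blocks per cluster: the only difference is whether the host bothers to read one extra field.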

Thankfully, this was still early in the SD card era, and larger cards weren’t in mainstream use just yet. However, with the standard hitting a solid size barrier at 4 GB due to its 32-bit byte-addressing scheme, further changes were around the corner.
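The 4 GB wall comes from how read and write commands carry their address. As far as we understand the spec, the command argument is 32 bits wide; standard-capacity cards interpret it as a byte address, whereas the approach later taken for SDHC counts in 512-byte blocks instead. This sketch, built around a hypothetical `command_address` helper, shows the difference and how far each scheme can reach.

```python
ARG_BITS = 32  # read/write commands carry a 32-bit address argument
BLOCK_LEN = 512


def command_address(byte_offset: int, high_capacity: bool) -> int:
    """Address a host would place in a read/write command argument (illustrative).

    Standard-capacity cards take a byte address; SDHC/SDXC take a 512-byte block number.
    """
    if high_capacity:
        if byte_offset % BLOCK_LEN:
            raise ValueError("high-capacity cards are addressed in whole 512-byte blocks")
        address = byte_offset // BLOCK_LEN
    else:
        address = byte_offset
    if address >= 1 << ARG_BITS:
        raise ValueError("offset does not fit in a 32-bit argument")
    return address


print(((1 << ARG_BITS) * 1) >> 30, "GiB reachable with byte addresses")
print(((1 << ARG_BITS) * BLOCK_LEN) >> 40, "TiB reachable with block addresses")
```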

Later Barriers

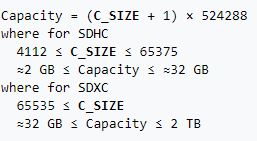

2006 brought about version 2.00 of the SD specification. Ushering in the SDHC standard, it promised card sizes up to a full 32 GB. Technically, SDHC was treated as a new standard, meaning all cards above 2 GB should be SDHC, while cards of 2 GB and below should remain implemented as per the existing SD standard.

To achieve these larger sizes, the CSD register was entirely reworked for SDHC cards. The main C_SIZE field was expanded to 22 bits, indicating the number of clusters, while C_SIZE_MULT was dropped, with the standard assuming a size of 1024 blocks per cluster. The field indicating block length, READ_BL_LEN, is kept, but locked to a value of 9, mandating a fixed size of 512 bytes per block. The two-bit CSD_STRUCTURE field at the top of the register tells the host which layout to expect, with standard SD cards using a value of 0 and a 1 indicating SDHC format (or later, SDXC). Sharp readers will note that this could allow for capacities up to 2 TB: 2^22 clusters of 1024 blocks of 512 bytes each. However, the SDHC standard officially stops at 32 GB. SDHC also mandates the use of FAT32 by default, giving the cards a hard 4 GB size limit per file. In practice, this is readily noticeable when shooting long videos at high quality on an SDHC card. Cameras will either create multiple files, or stop recording entirely when hitting the 4 GB file limit.
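Here's a rough sketch of how a host might branch on CSD_STRUCTURE and decode either layout from the raw 128-bit register. The bit positions are our reading of the SD Physical Layer Simplified Specification and are worth double-checking against it before relying on them. `bits`, `field`, and `card_capacity` are hypothetical helpers, and the example registers contain only the fields needed here.

```python
def bits(csd: int, hi: int, lo: int) -> int:
    """Return bits [hi:lo] of a 128-bit CSD held as a Python int."""
    return (csd >> lo) & ((1 << (hi - lo + 1)) - 1)


def field(value: int, hi: int, lo: int) -> int:
    """Place value into bits [hi:lo]; used here only to build example registers."""
    if value >> (hi - lo + 1):
        raise ValueError("value does not fit in the field")
    return value << lo


def card_capacity(csd: int) -> int:
    """Capacity in bytes, branching on CSD_STRUCTURE (bits 127:126, assumed)."""
    structure = bits(csd, 127, 126)
    if structure == 0:  # version 1.0 layout: standard-capacity SD
        read_bl_len = bits(csd, 83, 80)
        c_size = bits(csd, 73, 62)       # 12 bits
        c_size_mult = bits(csd, 49, 47)  # 3 bits
        return (c_size + 1) * (1 << (c_size_mult + 2)) * (1 << read_bl_len)
    if structure == 1:  # version 2.0 layout: SDHC/SDXC
        c_size = bits(csd, 69, 48)       # 22 bits, each unit = 1024 blocks of 512 bytes
        return (c_size + 1) * 1024 * 512
    raise ValueError(f"CSD_STRUCTURE {structure} not handled in this sketch")


sdhc_32g = field(1, 127, 126) | field(65535, 69, 48)
sdxc_max = field(1, 127, 126) | field((1 << 22) - 1, 69, 48)
print(card_capacity(sdhc_32g) >> 30, "GiB")  # 32 GiB
print(card_capacity(sdxc_max) >> 40, "TiB")  # 2 TiB
```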

To go beyond this level, the SDXC standard accounts for cards greater than 32 GB, up to 2 TB in size. The CSD register was already capable of dealing with capacities up to this level, so no changes were required. Instead, the main change was the use of the exFAT file system. Created by Microsoft especially for flash memory devices, it sidesteps the restrictive 4 GB file size limit of FAT32 while avoiding the higher overheads of file systems like NTFS.
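To put numbers on the FAT32 file size problem mentioned in the SDHC discussion, this sketch counts how many files a recording needs if none may exceed FAT32's limit of 2^32 - 1 bytes. The constant-bitrate assumption and the 100 Mbit/s example are ours, and real cameras may choose their own split points. Under exFAT, the same recording could live in a single file.

```python
import math

FAT32_MAX_FILE = (1 << 32) - 1  # FAT32 stores a file's size in 32 bits


def fat32_segments(bitrate_bps: float, seconds: float) -> int:
    """Files needed for a constant-bitrate recording under FAT32's size limit."""
    total_bytes = bitrate_bps / 8 * seconds
    return max(1, math.ceil(total_bytes / FAT32_MAX_FILE))


# One hour at a hypothetical 100 Mbit/s is 45 GB of video.
print(fat32_segments(100e6, 3600))  # 11
```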

Current cards are available up to 1 TB, close to maxing out the SDXC specification. When the spec was announced 11 years ago, Wired reported that 2 TB cards were “coming soon”, which in hindsight may have been jumping the gun a bit. Regardless, the next standard, SDUC, will support cards up to 128 TB in size. It’s highly likely that another break in compatibility will be required, as the current capacity registers in the SDXC spec are already maxed out. We may not find out until the specification is made available to the wider public in coming years.
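A quick back-of-the-envelope check on why SDUC probably needs a compatibility break: count the bits needed to number every 512-byte block. Assuming block addressing and binary units for simplicity, SDXC's 2 TiB ceiling uses every bit of a 32-bit address, while 128 TiB needs six more. How SDUC actually resolves this isn't covered here; `block_address_bits` is just an illustrative helper.

```python
def block_address_bits(capacity_bytes: int, block_len: int = 512) -> int:
    """Bits needed to give every block on a card of this capacity a unique number."""
    blocks = capacity_bytes // block_len
    return (blocks - 1).bit_length()


for name, cap in (("SDHC 32 GiB", 32 << 30), ("SDXC 2 TiB", 2 << 40), ("SDUC 128 TiB", 128 << 40)):
    print(name, block_address_bits(cap), "bits")
```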

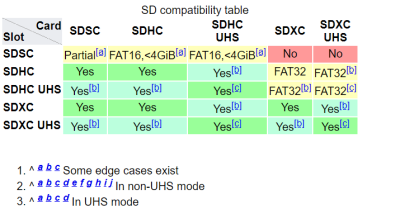

Where The Problems Lie

The most obvious compatibility problems lie at the major barriers between generations of SD cards: the 1 GB barrier between 1.00 and 1.01, the 2 GB barrier between SD and SDHC, and the 32 GB barrier between SDHC and SDXC. In most embedded hardware, these are hard barriers that simply require the correct size card or else they won’t work at all. However, in the case of desktop computers, there is sometimes more leeway. As an example, SanDisk claim that PC card readers designed to handle SDHC should be able to read SDXC cards as well, as long as the hosting OS also supports exFAT. This is unsurprising, given the similar nature of the standards at the low level.
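The hard barriers can be modelled as a simple lookup: classify a card by capacity, then apply the conservative rule that a host handles its own family and anything older. The boundaries follow the limits described in this article (in binary units), and the function names are hypothetical. This strict rule deliberately ignores the leeway SanDisk describes for PC card readers.

```python
GIB = 1 << 30
TIB = 1 << 40

# Upper capacity bound for each family (binary units used as a simplification).
FAMILIES = [("SD", 2 * GIB), ("SDHC", 32 * GIB), ("SDXC", 2 * TIB), ("SDUC", 128 * TIB)]
ORDER = [name for name, _ in FAMILIES]


def card_family(capacity: int) -> str:
    for name, upper in FAMILIES:
        if capacity <= upper:
            return name
    raise ValueError("larger than any SD family")


def strict_host_accepts(host_family: str, capacity: int) -> bool:
    """Conservative rule: a host handles its own family and older ones, nothing newer."""
    return ORDER.index(card_family(capacity)) <= ORDER.index(host_family)


print(strict_host_accepts("SDHC", 1 * GIB))   # True: newer host, older card
print(strict_host_accepts("SDHC", 64 * GIB))  # False: SDXC card in an SDHC-only host
```

The SDHC-reader-plus-exFAT case is exactly where this rule is too pessimistic; a fuller model would also track which filesystems the host OS understands.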

Thankfully, newer readers are backwards compatible with older cards, but the reverse is rarely true. However, workarounds do exist that can allow power users to make odd combinations play nice. For example, formatting SDXC cards with FAT32 generally allows them to be used in place of SDHC cards. Additionally, formatting SDHC cards with FAT16 may allow them to be used in place of standard SD cards, albeit without access to their full storage capacity.
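As a rough illustration of "without access to their full storage capacity": a FAT16 volume is capped at fewer than 65,525 clusters, and with 32 KiB clusters (the largest size in conventional use) that works out to just under 2 GiB of data area. This is a simplified upper bound that ignores reserved sectors and FAT overhead; `fat16_usable` is a hypothetical helper.

```python
FAT16_MAX_CLUSTERS = 65524           # FAT16 volumes have fewer than 65,525 clusters
FAT16_MAX_CLUSTER_BYTES = 32 * 1024  # largest cluster size in conventional use


def fat16_usable(card_bytes: int) -> int:
    """Upper bound on data area a single FAT16 volume can expose on a card."""
    return min(card_bytes, FAT16_MAX_CLUSTERS * FAT16_MAX_CLUSTER_BYTES)


print(fat16_usable(16 * 2**30))  # a 16 GiB SDHC card formatted as FAT16
```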

These workarounds are far from a sure thing, though. Many devices exist that have their own baked-in limits, from quirks in their own hardware and software. A particularly relevant example is the Raspberry Pi. All models except for the 3A+, 3B+ and Compute Module 3+ are limited to SD cards below 256 GB, due to a bug in the System-on-Chip of earlier models. Fundamentally, for some hardware, the best approach can be to research what works and what doesn’t, and be led by the knowledge of the community. Failing that, buy a bunch of cards, write down what works and what doesn’t, and share the results for others to see.

Unfortunately, the problem isn’t going away anytime soon. If anything, as card sizes continue to increase, older hardware may be left behind: the smaller capacities it needs are no longer economical for companies to make, so compatible cards become hard or impossible to source. Already, it’s difficult to impossible to source new cards of 2 GB and below. Expect complicated emulated solutions to emerge for important edge-case hardware, in the same way we use SD cards to emulate defunct SCSI disks today.

In other words, this is what happens when one doesn’t design for trends. How many people design for a future in which hardware becomes less capable over time?

Yeah of course we can predict the future, what will get faster and bigger and what won’t.

Would you like to purchase some used steel? The Eiffel tower is only a temporary structure, I can get you a great deal if you send me your money now. After all we can predict the future!

Designing “for the future” is more expensive, and in a competitive market, looking too far ahead too early can kill a product as easily as failing to look far enough ahead. SCSI was designed to be future-proof, compared to IDE. That’s why it’s still possible to adapt modern SD cards to legacy SCSI interfaces… but it’s also why it’s easier to get an SD-to-SCSI interface adapter for old hardware than it is to get *new* SCSI hardware. SCSI lost in the consumer market despite its superiority; in fact, in some ways it lost BECAUSE OF that superiority, which made it complicated and difficult to work with, in addition to being much more expensive.

These interfaces were originally specified at a time when every byte was precious, and nobody could justify “wasting” extra kilobytes of storage on excessive possible future expansion options. Especially since they couldn’t possibly know what the needs of future designs would be. Both the cluster size multiplier in the original SD standard and the variable block length of SD 1.01 *WERE* attempts at future-proofing — ones that seemed to make sense at the time, because the filesystems they had available to them were similarly limited as to the maximum number of logical blocks they could address.

As it turned out, though, both excessively large block counts per cluster and variable-length blocks proved to be the wrong approach, so later generations of storage interfaces were redesigned to eliminate the multiplier fields in favor of enlarging the ones used to store basic parameters like block count. Instead, storage capacity has been increased by simply adding more and more small, fixed-size blocks, with filesystems themselves redesigned the same way.

Expecting the designers of SD 1.0 to have first correctly predicted that, then designed their systems to support it, is like complaining that an old stereo from the 1970s is missing an optical digital input or 7.1-channel surround processing.

I have a few devices (dash cams, digital photo frames) that accept SD cards of at most 16 GB. Where does that fit into this?

Product segmentation. The more expensive model reads the full 32 GB.

Every time I see these restrictions that say max 16 or 32 GB, the device almost certainly reads 64 GB and sometimes 128 GB. I find that it seems to be only the really cheap devices that even state a limit in the first place, and let’s be honest, the technical specs for those are already highly suspect.

I’m sure. Too often, also, the limit is the largest capacity that was available at the time the product was released, simply because the manufacturer was never able to do any real-world testing with anything larger.

PC builders have been doing the same thing with RAM slot capacities for decades. I feel like it’s only in the past, oh, 5-10 years or so, that motherboard specs have started stating their maximum RAM capacity in terms other than the module sizes that already existed at the board’s debut.

The elephant in the room is the inverse relationships between robustness, performance, and storage density.

For cameras, I treat cheap TLC cards as consumables, since they hold a backup of what I consider personally important. So I tend to use low-cost 32 GB SanDisk cards for their compatibility and their reliability over the battery life. I won’t trust a large card’s filesystem when camera writes hammer a single area, as they do with FAT, and I don’t reuse the same cards to transfer and erase data on every use.

For a Pi: the SanDisk Extreme Pro will do cell replacement/wear leveling internally, if I recall, but these cards still tend to benefit from a log-structured filesystem like F2FS (usually extending card life by 1.7 times or more, with a higher ratio of empty space). However, higher capacity (above 32 GB) almost certainly means MLC flash, so you could start hitting your cell write-count limits 5 times sooner than expected on a 64 GB card. Note that if your disk layout is wrong, write performance can also be slower than expected… some cards were designed for FAT32 and only expect FAT32 in the hardware (some of the disk areas don’t actually exist in some brands). SanDisk and ADATA are by far the most compatible cards I have used.

I have piles of different cards I have tested over the years… some counterfeits too (serial number all 0’s).

The lack of labeling standards means reliability is often card model-number specific, so community documentation can get less reliable over time. This is one product class I will always buy retail at a large chain supplier.

F3 scanning can help ID bogus/broken cards if you can afford to wait around an hour:

https://github.com/AltraMayor/f3
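The basic idea behind tools like F3 can be sketched in a few lines: write data to every block that is unique to that block's address, then read it all back. A counterfeit that wraps writes past its real capacity will overwrite earlier blocks, so only the real capacity verifies. This is a toy model, not F3's implementation; the hypothetical `WrappingFakeCard` class simulates a fake card in memory rather than touching real hardware.

```python
class WrappingFakeCard:
    """Hypothetical counterfeit: claims claimed_blocks but stores only real_blocks,
    silently wrapping any address past the end back to the start."""

    def __init__(self, claimed_blocks: int, real_blocks: int):
        self.claimed_blocks = claimed_blocks
        self.real_blocks = real_blocks
        self.store: dict[int, bytes] = {}

    def write(self, lba: int, data: bytes) -> None:
        self.store[lba % self.real_blocks] = data

    def read(self, lba: int) -> bytes:
        return self.store.get(lba % self.real_blocks, bytes(512))


def block_pattern(lba: int) -> bytes:
    # 512 bytes derived from the block's own address, so an aliased block reads back wrong.
    return lba.to_bytes(8, "little") * 64


def count_good_blocks(card) -> int:
    """Write every claimed block, then count how many read back intact."""
    for lba in range(card.claimed_blocks):
        card.write(lba, block_pattern(lba))
    return sum(card.read(lba) == block_pattern(lba) for lba in range(card.claimed_blocks))


print(count_good_blocks(WrappingFakeCard(1024, 256)), "of 1024 blocks verified")
```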

Best of luck,

=)

Thanks for the article. I learned a lot of the whys and wherefores!

Speaking of Pis, I only recall using SanDisk and PNY cards for my Pis. I’ve only had to flash a new OS on one so far, due to a corrupted disk. It was a 16 GB SanDisk SD card.

I am experimenting with a headless Pi 4 (4 GB) using a USB Samsung T5 500 GB as its boot drive (no SD card installed). It has been working fine and it is very responsive; I like it. I just cannot execute an fstrim command on it for some reason. I have also set up a powered USB 3.0 hub for an additional external USB 3.0 4 TB HDD. So far all is working well as a server. It’s not my primary home data server yet. It does seem more than responsive enough for a file/media server, though I am still seeing whether I can trust the configuration over time. So far so good. A ‘lot’ less space taken up than the current mid-tower case for the primary server!

F2FS defers auto-erasing areas as a low priority background task, supports trim just fine, and treats “hot” files listed during the format differently than less likely to be changed “cold” files.

On a laptop SSD, I usually use initrd + OverlayFS for a read-only ext4 root /, F2FS for /home, and tweak the kernel parameters to supersize low-write-out-priority block caching. A good-sized $34 240 GB ADATA SSD will last a long time if you have enough RAM to get away with this method.

Some USB-to-SATA drive adapters can cause all sorts of issues, and some even block S.M.A.R.T. access to the drive to hide bugs, etc. If the drive model says it supports the mode, then I would start there if your kernel is recent.

If your files are at all critical, I’d encourage you to look at a commercial NAS running a RAID system. These are designed to run solidly, and recover reliably.

If you’re just using it for data you’ve got backup of or can re-rip/torrent, then fine. But don’t use it for your only copies of photos, etc.

Source: I’ve had DIY, cheap-brand, or Pi-based systems die and corrupt the drive too many times, versus two perfect and quick recoveries with Synology systems.

Problem with many of those is that the proprietary formats effectively ransomware your data if the unit breaks down. Just build your own based on a low cost PC platform.

Synology is Linux underneath.

In my case, I have backups. Several backups going back years, plus off-site backups. Not too worried about it. I have a mid-tower Ryzen-based system (Linux of course) currently as my home server. The problem with it is that it does take up space, even running headless. Hence the experiment with the RPi 4 (no SD card)… The whole setup takes up very little desk space and less power, which is the point. BTW, I had tried this with an RPi 3… but USB 2.0 and slow network access killed that idea. The RPi 4 though… it is doable. USB 3.0 and Gigabit Ethernet made all the difference.

FYI, I use my file server for more than just NAS duty. It is also my home Redis server for IoT projects, the DHCP server for the local network, and probably a few other things I’ve forgotten. Nice and flexible! For file serving, I mostly use NFS … and Samba for the one Windows 7 VM that needs access to it.

exFAT seems like a bad plan. I wish they had used something with better data protection, like F2FS.

What were you expecting from Microsoft? Intelligence? Shame on you.

I guess it was a precedent for Windows 8 and up.

Actually, I’m one of the few who love FAT for not supporting permissions and other *nix nonsense. They always get in the way if you want to work with embedded/headless devices. You can’t simply inject files into an ext filesystem.

Instead, you have to boot up the whole *nix system and copy each single file tediously by hand. Before this, you have to find the mount point, find out the name of the USB pen drive, specify the file system, cluster size, etc. Ugh. 🙄

In reality, you can put any file system you want on an SD card, as long as the host system supports it. All this exFAT stuff is only necessary if you want to use a dumb device like a camera or a Windows box.

The problem is that the card’s internal structure and controller are optimized for the FAT layout that the card is formatted with. It knows that the FAT itself will be rewritten frequently and in small areas, whereas the file contents will be written infrequently, and in large chunks.

Reformatting the card will impact the lifespan.

Regarding the second elephant in the room: exFAT, until MS provided GPL drivers for Linux, was NOT supported by Linux. I’m not sure people quite understand or appreciate that.

The reality is that exFAT is neither FREE nor OPEN. FAT32 wasn’t either; only when the company recognized it could no longer profit from it (and only then) was it freed from litigation. So implement exFAT at your own peril. FatFs has some notes regarding these issues (http://elm-chan.org/fsw/ff/00index_e.html), and exFAT requires a license of some sort to use. See

https://www.microsoft.com/en-us/legal/intellectualproperty/tech-licensing/programs?activetab=pivot1:primaryr5

And click exFAT tape (oh I mean ribbon) if you don’t see information regarding it.

Read

“Our exFAT licensees”

For your own use, you can likely get away without licensing. Anything beyond that could land you in litigation.

https://cloudblogs.microsoft.com/opensource/2019/08/28/exfat-linux-kernel/

“We also support the eventual inclusion of a Linux kernel with exFAT support in a future revision of the Open Invention Network’s Linux System Definition, where, once accepted, the code will benefit from the defensive patent commitments of OIN’s 3040+ members and licensees.”

Now Linux usage is made conditional by patents and OIN membership. No, thank you.

“Now Linux usage is made conditional by patents and OIN membership. No, thank you.”

I see. But what alternatives do exist?

Did the open source sect* community provide any simple to use drop-in replacements so far?

FAT32 is quite limited by today’s standards.

The 4 GB max file size is a no-go for HD/UHD video files, ISO/UDF images, HDD images, etc.

So it is only natural that users seek out a different filesystem, such as NTFS for external backup HDDs.

Unfortunately, NTFS is not the most friendly filesystem in respect to flash media.

On the other hand, FAT/FAT32/exFAT are supported by macOS and Windows since XP (via update). And Android, even. This makes exFAT an easy to use alternative. For the moment.

(*just kidding 😜)

Mostly fair, but…

Of course they did, F2FS. But it doesn’t matter when universal adoption of any such filesystem is effectively contingent on it being supported by Windows.

Microsoft has never once included a driver for any third-party filesystem in their consumer OS releases, at least (I think some Windows Server releases, maybe), so it’s effectively useless to publish one and attempt to have it gain widespread adoption.

Even when paying lip service to open standards, Microsoft’s walled garden effectively means they hold all the cards.

I was hoping this article would be about the physical size of these things. As a mostly amateur PCB designer, it’s nice to get some storage with a card that only has a few pins, instead of the eMMC chips, which cost more and require 64 pins and some clever firmware.

The biggest drawback of SD cards is that there is nothing smaller than micro (there needs to be a nano at this point), and that they don’t have just a few more copper pads further back so you could just SMT them onto your board without glue.

I know plenty of people who are bumblefucks with inserting/ejecting micro-sd’s, so there’s a reason they stopped there.

And like the USB-A connector, there is very little indication which way is “up” when inserting one.

Sure there is, label side out, pins down, towards the board.

Would be nice to know which cards still support the SPI interface mode.

Rule of thumb: cards conforming to the “classic” SD card standard.

Basically anything 2GB or less.

But there are SPI-to-USB adapter chips now, so you can read FAT32 off a small USB drive.

If you *really* need small cards, you can still get them but they are marked as industrial or high endurance and come in SLC or hybrid-slc variants.

Cactus memory is a good example, but beware before you even start, these things are painfully expensive and are not a really good solution for your cheap photo picture frame or random battery powered widget. :(

I had some really sad sticker-shock when I started looking into these.

The article on the Raspberry Pi site was written before the release of the Raspberry Pi 4. That model will also boot from larger SD cards.

Until recently, no Raspberry Pi could claim SDXC compatibility despite the fact that the hardware has been in place for many years, because the Linux OS did not support the required exFAT file system. Larger cards would work, but only if they were reformatted with a supported file system such as ext4. Microsoft required payment of royalties to implement exFAT. Microsoft now has a program that allows open source software to provide exFAT with no royalty payments, so exFAT has been officially added to Linux and specifically to the Raspberry Pi OS distribution.

Pis just need hardware support for the low-level parts, so they can see the 256 to 512 MB boot partition needed to boot.

Rest is software and fair game, exFAT being completely irrelevant.

It’d be nice if the next standard had an SLC mode as part of the spec. Most eMMC flash can be switched to pseudo-SLC mode, which cuts the storage to 25% or so but makes the bit lifetime and write endurance significantly better. As we go to TLC and QLC for these cards, being able to take a 1 TB card and drop it back to 256 GB with real endurance would be a nice option.
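The capacity side of that trade-off is simple arithmetic, assuming the card runs every cell at one bit instead of its native count. This hypothetical helper ignores overprovisioning and spare blocks, so treat its output as a rough ceiling; actual pSLC modes are vendor-specific.

```python
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def pslc_capacity(native_capacity: int, cell_type: str) -> int:
    """Rough capacity after running every cell at one bit instead of its native count.

    Ignores overprovisioning, spare blocks and any vendor-specific pSLC behaviour.
    """
    return native_capacity // BITS_PER_CELL[cell_type]


for cell_type in ("TLC", "QLC"):
    print(cell_type, pslc_capacity(1 << 40, cell_type) >> 30, "GiB from a 1 TiB card")
```

A QLC card lands right on the 25% figure; a TLC card keeps roughly a third of its capacity.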

Why keep making these little incremental expansions every time the industry starts bumping into the standard’s limits? Just make it a 64-bit field and just let maximum size be a solved problem for a good long while.

That was my thought too. It couldn’t ‘cost’ that much to make 64/128-bit registers available to read from the very beginning, and then you’d never have to keep making special cases as new technology comes along. Of course, we saw the same type of problem with seconds since 1970 (the Epoch)…. Where was the foresight :) . Of course, I remember putting my foot in my mouth by saying “man o’ man, this 486 at 100 MHz is all I’ll ever need” …. Yeah right! Now I think my Ryzen 3900X ‘may’ be around for a while…. But never say never!

When a technology is new, it really can’t afford the extra cost of supporting theoretical standards compliance for devices that potentially won’t exist for decades. In many cases, trying would only degrade performance too, as you are asking the computer to work with a larger address space, bigger packets, etc. than it can easily handle.

There is only so much ‘future proofing’ you can reasonably do. Though now that everything is getting so powerful, adding just one extra bit gives a much bigger increase in scope than in the past, so I’d hope the next increase would last a long time. With the rate of data generation and consumption, though, I’d be surprised (at least if massive space rocks or wars don’t slow us down).

Yeah, such as the xD cards my old digital camera used.

They disappeared about 15 years ago.

It’s about money.

Each time the tech behind it changes, they know most consumers will buy new hardware as well.

The companies making those ‘chips’ also make many other ‘chips’ as well, so…

They also deliver ‘chips’ and components to third parties who make and sell consumer products.

They wouldn’t be able to grow their business as much if existing hardware could just read the cards ‘natively’, the way you read an external hard drive.

But by changing systems, they force those companies to build the new tech into their new products (for example, a laptop that can read/write bigger cards, etc.).

So now the consumer thinks their ‘laptop’ (whatever product it is) is obsolete and they need to buy a new one, simply because most people don’t know how to work around or modify their existing one.

The way I read ‘big’ SD cards and microSD cards on my laptop (which can’t read anything above 4 GB with its built-in card reader) is to use an external converter that connects over USB as a hard drive, and not use the internal reader for anything other than small cards.

Now the size of the card doesn’t matter; it just reads/writes fine, and I can format it with any filesystem I want to use…

Problem solved.

(For now, until they do something “new”.)

This is my thought as well, and the obvious counter is that if it’s fundamentally a serial protocol then more bits in your address takes time and energy. But I think the remedy is obvious, the initial handshake should include a specification of the number of bits in your addresses. Then small devices can use small addresses and big devices can use big addresses, and both be compatible to the standard.

when SDXC came out, it was capable of addressing 4x as much data as the previous version. That should be enough for everyone. Right?

I can not believe “industry” is stupid enough to not realize this.

So what’s left is that they do it on purpose.

If for nothing else, lots of slight incompatibilities makes users more inclined to buy a new card if the old does not work, or buy a new gadget (photo camera, whatever) because the newer, bigger cards do not fit.

Ka-ching, you’ve just been manipulated into spending a few hundred EUR on a newer gadget.

“Industry” has no interest whatsoever in making good and reliable products, or even making the products that people want to have. Their only interest is to extract as much money from customers in as many ways as they can get away with.

With small incremental changes, they can extract much more money from customers than with a universal and flexible standard.

I totally agree, the ‘greed’ behind it…

It’s such extremely big business: no, no, “we do not make products to last longer than the law says”.

Money … the only answer that is correct.

The curious case of the GPX Model: MW3836 SUFFIX NO.: E1 MP3 player. It has 1 gig internal storage and out of the box maxes at 8 gig SD cards. But in mine I’ve used cards up to 64 gig, when formatted FAT32.

I don’t want to donate mine to a teardown to find out how it was made so dramatically forward compatible. It’s tiny yet has a display with a good amount of information and runs off a single AAA cell.

This is actually explained in the “SD compatibility table” in this article: any SDHC host can support SDXC cards, as long as you ignore the filesystem part of the spec.

And I just finished reading … https://www.bunniestudios.com/blog/?p=3554 On Hacking MicroSD Cards from 2013.

And what would be a time-proof solution? A register which reads as “how many next bytes describe the card capacity”, and then the reader reads the next n bytes.
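For what it's worth, the length-prefixed register proposed here is easy to sketch: the first byte says how many bytes of capacity follow. This is a hypothetical encoding (a big-endian block count), not anything from an SD specification, and `encode_capacity`/`decode_capacity` are illustrative names.

```python
def encode_capacity(blocks: int) -> bytes:
    """Hypothetical register: 1 length byte, then the block count in big-endian bytes."""
    if blocks < 0:
        raise ValueError("block count cannot be negative")
    body = blocks.to_bytes(max(1, (blocks.bit_length() + 7) // 8), "big")
    if len(body) > 255:
        raise ValueError("too large even for a one-byte length prefix")
    return bytes([len(body)]) + body


def decode_capacity(register: bytes) -> int:
    """Read the length byte, then exactly that many capacity bytes."""
    n = register[0]
    if len(register) < 1 + n:
        raise ValueError("truncated register")
    return int.from_bytes(register[1:1 + n], "big")


reg = encode_capacity(2**38)  # 128 TiB worth of 512-byte blocks
print(reg.hex(), decode_capacity(reg))
```

As the reply below points out, describing the capacity is the easy part; every command that carries an address would need a similarly extensible format.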

It’s not that simple. With larger capacities you need a larger address space, or you won’t be able to reference the blocks at the end of the drive. That’s why filesystems like FAT32 bump up against limits, because the storage for addresses maxes out for address values beyond 2TB. And it’s very difficult to work around that kind of thing incrementally, but even more difficult to design a system without any such limitations that isn’t far too complex to be implemented correctly, quickly, or cheaply.

You can say, “Oh, just give everything lots of extra room,” but (for example) having to set aside 32 bits of memory for _every_ block address when you only need 8 bits right now does cause real problems, and any such system would get crucified for its inefficiency.

The reality is, systems like that are designed, frequently — and they almost invariably end up losing in the market to a simpler, cheaper, less flexible, less complex, worse-is-better alternative full of limitations that won’t matter until later.

I think that around 2000, engineers could count on massive growth in address space and think about the future. Not simple, but awesome solutions were never simple :) As bunnie wrote in his 2013 post about Kingston SDs, there are smart controllers, which are cheap.

Yes, this would be a perfect solution.

Makes a bootable OS on an SD card, to prevent OS BSODs and to keep the computer running if the mainframe crashes.

My Android specs say external storage: 128 GB or 1 TB exFAT. I only have a 512 GB microSD. Can I use this?

What about adding RAM via a ramdisk, like on the PC, for smartphones, or L2 or L3 CPU cache for smartphones too? I’m just asking because I’m looking at the Intel Optane SSD for adding CPU cache for integrated graphics, and for GPUs on motherboards as well.

> All models except for the 3A+, 3B+ and Compute Module 3+ are limited to SD cards below 256 GB, due to a bug in the System-on-Chip of earlier models.

What about later models? At the time this article was written, the Pi 4 had already been available for at least six months, if not longer. And now the Pi Zero 2 is available, which I’ve read uses the Pi 3 chip. Thanks for an informative article.