Ethernet was first commercially introduced on September 30th, 1980, forty years ago this year. It was first described in a patent filed by Xerox in 1975, brought to market in 1980 as a 10 Mb/s networking protocol, and standardized by the IEEE in 1983 as IEEE 802.3. Over the next thirty-seven years, this standard would see numerous updates and revisions.

The present Ethernet standard includes not just the different speed grades, from the original 10 Mb/s to today’s maximum of 400 Gb/s, but also the countless changes to the core protocol that enable these ever higher data rates, not to mention new applications of Ethernet such as power delivery and backplane routing. The reliability and cost-effectiveness of Ethernet would result in the 1990 10BASE-T Ethernet standard (802.3i-1990), which gradually found itself implemented on desktop PCs.

With Ethernet these days being as present as the presumed luminiferous aether that it was named after, this seems like a good point to look at what made Ethernet so different from other solutions, and what changes it had to undergo to keep up with the demands of an ever-more interconnected world.

The novelty of connecting computers

These days, most computers and computerized gadgets are little more than expensive paperweights whenever they find themselves disconnected from the global Internet. Back in the 1980s, people were just beginning to catch on to the things one could do with a so-called ‘local area network’, or LAN. Unlike the mainframes and terminal systems of the 1960s and 1970s, a LAN entailed connecting microcomputers (IBM PCs, workstations, etc.) at, for example, an office or laboratory.

During this transition from sneakernet to Ethernet, office networks would soon involve thousands of nodes, leading to the wonderful world of the centrally managed office network. With any document available via the network, the world seemed ready for the paperless office. Although that never happened, the ability to communicate and share files via networks (LAN and WAN) has now become a staple of everyday life.

Passing the token

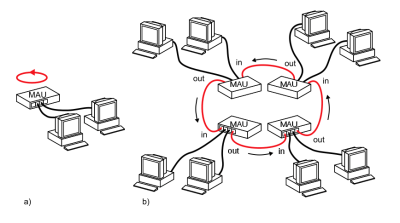

What did change was the landscape of commodity network technology. Ethernet’s early competition was a loose collection of smaller network protocols, including IBM’s Token Ring. Although many myths formed about the presumed weaknesses of Ethernet in the 1980s, summarized by this document (PDF) from the 1988 SIGCOMM Symposium, ultimately Ethernet turned out to be more than sufficient.

Token Ring’s primary point of presumed superiority was its determinism, in contrast to Ethernet’s carrier-sense multiple access with collision detection (CSMA/CD) approach. This led to the most persistent myth: that Ethernet couldn’t sustain saturation beyond 37% of its bandwidth.
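The 37% figure is usually traced to the textbook analysis of slotted ALOHA-style random access, whose peak throughput is 1/e (about 36.8%), rather than to measurements of real Ethernet networks. As a rough sketch of that model (the function name and the range of loads swept are chosen here purely for illustration): with an offered load of G frames per slot, a slot carries exactly one frame with probability G·e^(−G).

```python
import math


def slotted_aloha_throughput(offered_load: float) -> float:
    """Fraction of slots carrying exactly one frame under Poisson load G."""
    return offered_load * math.exp(-offered_load)


# Sweep the offered load from 0.01 to 3.00 and find where the model peaks.
loads = [g / 100 for g in range(1, 301)]
best_load = max(loads, key=slotted_aloha_throughput)
peak = slotted_aloha_throughput(best_load)

print(f"peak throughput {peak:.3f} at G = {best_load:.2f}")
# prints: peak throughput 0.368 at G = 1.00
```

The model assumes every station transmits blindly in fixed slots. Ethernet adapters listen first and stop transmitting as soon as a collision is detected, so this ceiling does not carry over to them.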

For cost reasons, the early years of Ethernet were dominated by dumb hubs instead of smarter switches. This meant that the Ethernet adapters had to sort out the collisions. And as anyone who has used Ethernet hubs probably knows, the surest way to spot a busy Ethernet network was to glance over at the ‘collision’ LED on the hub(s). As Ethernet switches became more affordable, hubs quickly vanished. Because switches establish routes between two distinct nodes instead of relying on CSMA/CD to sort things out, this prevented the whole collision issue that made hubs (and Ethernet along with it) the target of many jokes, and the myth was busted.
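The difference between the two devices can be sketched in a few lines. This is a deliberately simplified model, not how any particular product works: the class names are made up for illustration, addresses are shortened to strings like "aa", and real switches also age out table entries and handle broadcast frames.

```python
from typing import Dict, List


class Hub:
    """Repeats every frame out of every port except the one it arrived on."""

    def __init__(self, port_count: int) -> None:
        self.port_count = port_count

    def forward(self, in_port: int, src: str, dst: str) -> List[int]:
        return [p for p in range(self.port_count) if p != in_port]


class LearningSwitch:
    """Remembers which port each source address was last seen on."""

    def __init__(self, port_count: int) -> None:
        self.port_count = port_count
        self.mac_table: Dict[str, int] = {}

    def forward(self, in_port: int, src: str, dst: str) -> List[int]:
        self.mac_table[src] = in_port  # learn where the sender lives
        if dst in self.mac_table:
            out_port = self.mac_table[dst]
            # Never send a frame back out of the port it came in on.
            return [] if out_port == in_port else [out_port]
        # Unknown destination: flood like a hub until it is learned.
        return [p for p in range(self.port_count) if p != in_port]


hub = Hub(4)
switch = LearningSwitch(4)
print(hub.forward(0, "aa", "bb"))     # [1, 2, 3]
print(switch.forward(0, "aa", "bb"))  # [1, 2, 3] ("bb" not yet known)
print(switch.forward(1, "bb", "aa"))  # [0]
print(switch.forward(0, "aa", "bb"))  # [1]
```

Once both addresses have been seen, traffic between them no longer reaches the other ports, which is why the shared collision domain disappears.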

Once Ethernet began to allow for the use of cheaper Cat. 3 (UTP) for 10BASE-T and Cat. 5(e) UTP cables for 100BASE-TX (and related) standards, Ethernet emerged as the dominant networking technology for everything from homes and offices to industrial and automotive applications.

A tree of choices

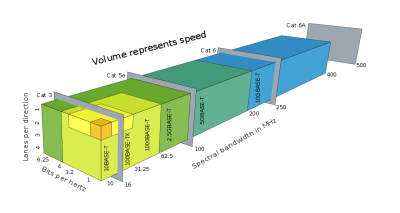

While the list of standards under IEEE 802.3 may seem rather intimidating, a more abbreviated list for the average person can be found on Wikipedia as well. Of these, the ones most people are likely to have encountered at some point are:

- 10BASE-T (10 Mb/s, Cat. 3).

- 100BASE-TX (100 Mb/s, Cat. 5).

- 1000BASE-T (1 Gb/s, Cat. 5).

- 2.5GBASE-T (2.5 Gb/s, Cat. 5e).

While the 5GBASE-T and 10GBASE-T standards have also been in use for a few years now, the 25 Gb/s and 40 Gb/s versions are definitely reserved for data centers at this point, as they require Cat. 8 cables and only allow for runs of up to 30 meters. The remaining standards in the list are primarily aimed at automotive and industrial applications, some of which are fine with 100 Mb/s connections.

Still, the time is now slowly arriving where a whole gigabit is no longer enough, as some parts of the world actually have Internet connections that match or exceed this rate. Who knew that at some point a gigabit LAN could become the bottleneck for one’s Internet connection?

ALOHA

Back in 1972, a handful of engineers over at Xerox’s Palo Alto Research Center (PARC) including Robert “Bob” Metcalfe and David Boggs were assigned the task of creating a LAN technology to provide a way for the Xerox Alto workstation to hook up to the laser printer, which had also been developed at Xerox.

This new network technology would have to allow for hundreds of individual computers to connect simultaneously and feed data to the printer quickly enough. During the design process, Metcalfe used his experience with ALOHAnet, a wireless packet data network developed at the University of Hawaii.

The primary concept behind ALOHAnet was the use of a shared medium for client transmissions. Its stations simply transmitted whenever they had data and retransmitted after a random delay if no acknowledgement came back. Refining this with a ‘listen before send’ rule gave what would become known as ‘carrier sense multiple access’ (CSMA). This would not only go on to inspire Ethernet, but also WiFi and many other technologies. In the case of Ethernet, the aforementioned CSMA/CD formed an integral part of the early standards.
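The ‘CD’ half of CSMA/CD comes down to what an adapter does after it detects a collision: it waits a random number of slot times before trying again, and the range it picks from doubles with each successive collision. A minimal sketch of this truncated binary exponential backoff, using the classic 10 Mb/s parameters (the function and constant names are illustrative, not taken from any driver):

```python
import random

SLOT_TIME_US = 51.2   # 512 bit times at 10 Mb/s
MAX_ATTEMPTS = 16     # the frame is dropped after this many tries
BACKOFF_LIMIT = 10    # the random range stops doubling here


def backoff_slots(collision_count: int, rng: random.Random) -> int:
    """Pick how many slot times to wait after the nth collision."""
    if collision_count >= MAX_ATTEMPTS:
        raise RuntimeError("excessive collisions, frame dropped")
    exponent = min(collision_count, BACKOFF_LIMIT)
    return rng.randrange(2 ** exponent)  # uniform in 0 .. 2^exponent - 1


rng = random.Random(1980)  # fixed seed so the run is repeatable
for n in range(1, 6):
    slots = backoff_slots(n, rng)
    print(f"collision {n}: wait {slots} slots ({slots * SLOT_TIME_US:.1f} us)")
```

Because two colliding stations are unlikely to draw the same delay, one of them usually gets the wire to itself on the next attempt, and the widening range spreads retries out further as the network gets busier.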

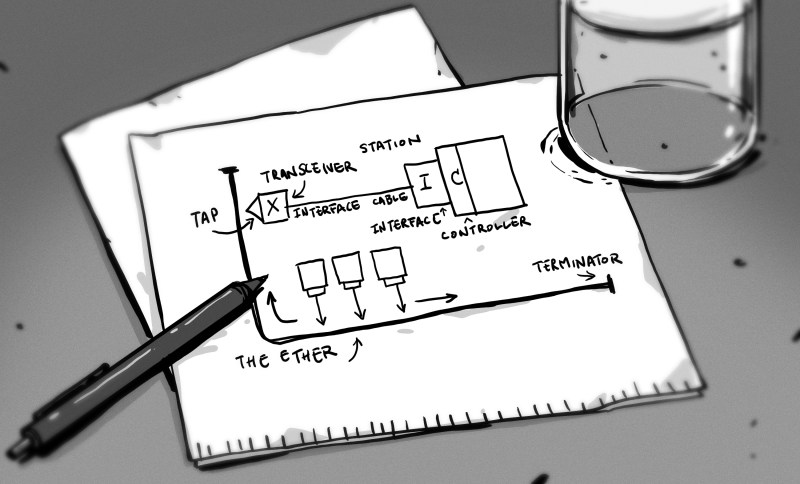

Coaxial cabling was used for the common medium, which required the use of the cherished terminators at the end of every cable. Adding nodes required the use of taps, which allowed the BNC connector on the Ethernet network interface card to be attached to the bus. This first version of Ethernet is also called ‘thicknet’ (10BASE5) due to the rather unwieldy 9.5 mm thick coax cables used. A second version (10BASE2) used much thinner coax cables (RG-58A/U) and was therefore affectionately called ‘thinnet’.
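Why the terminators matter can be shown with the standard transmission-line reflection formula, Γ = (Z_load − Z_line) / (Z_load + Z_line). The sketch below assumes the 50 Ω coax used by 10BASE5 and 10BASE2; the function name is made up for illustration.

```python
import math

COAX_IMPEDANCE_OHMS = 50.0  # characteristic impedance of 10BASE5/10BASE2 coax


def reflection_coefficient(load_ohms: float,
                           line_ohms: float = COAX_IMPEDANCE_OHMS) -> float:
    """Fraction of the incident voltage wave reflected at the cable end."""
    if math.isinf(load_ohms):
        return 1.0  # open end: the whole wave bounces back
    return (load_ohms - line_ohms) / (load_ohms + line_ohms)


print(reflection_coefficient(50.0))      # matched terminator -> 0.0
print(reflection_coefficient(math.inf))  # missing terminator -> 1.0
print(reflection_coefficient(75.0))      # 75 ohm terminator  -> 0.2
```

A matched terminator absorbs the signal, while a missing one sends it back down the bus, where it interferes with everything else on the segment.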

The best plot twist

In the end, it was the use of unshielded, twisted-pair cabling that made Ethernet more attractive than Token Ring. Along with cheaper interface cards, it turned into a no-brainer for people who wanted a LAN at home or the office.

As anyone who has ever installed or managed a 10BASE5 or 10BASE2 network probably knows, interference on the bus, or issues with a tap or AWOL terminator can really ruin a day. Not that figuring out where the token dropped off the Token Ring network is a happy occasion, mind you. Although the common-medium, ‘aether’ part of Ethernet has long been replaced by networks of switches, I’m sure many IT professionals are much happier with the star architecture.

Thus it is that we come from the sunny islands of Hawaii to the technology that powers our home LANs and data centers. Maybe something else would have come along to do what Ethernet does today, but personally I’m quite happy with how things worked out. I remember the first LAN that got put in place at my house during the late 90s when I was a kid, first to allow my younger brother and me to share files (i.e. LAN gaming), then later to share the cable internet connection. It allowed me to get up to speed with this world of IPX/SPX, TCP/IP and much more network-related stuff, in addition to the joys of LAN parties and being the system administrator for the entire family.

Happy birthday, Ethernet. Here is to another forty innovative, revolutionary years.

Lovely article

I remember the special drill bit – for drilling the holes in the big yellow cable!

Those were the days.

Still have some thinnet lurking around the house.

We quickly decided that N connectors were far more reliable than taps. 3Com transceivers were the most desirable.

AUI cable was a right PITA. Along with the stupid slide latch connectors which were shoe-horned into the standard by AMP, but which were totally not up to the stresses introduced by the thick and inflexible AUI cable.

My first Ethernet experience was connecting several VAX 11/780 systems at work with DECnet

Forgot to mention, that was DECnet over 10base5. My first network experience, which predates Ethernet, was a TDM system connecting Collins 8500C computers in the Air Canada reservation system. That system had 2 networks, 2 Mb over RG-58 and 8 Mb over tri-axial cable (You think 10base5 is fun to work with?). The various devices, such as disk & tape drives, card punch/reader, printer and more were connected to the CPUs over these networks. There were also a couple of dozen PDP-11s, with several serial ports, connected to modems to connect the terminals located around the world.

Good old days of multi drop busses.

Back when switches were way too resource intensive to build for smaller networks.

And honestly, even today a fair few “switches” are just simple hubs that do little else than mirror the package contents onto all ports…

Rather crude to be fair, a simple hub is a handful of transistors in comparison to an actual switch that needs a bit of processing for package handling and potential routing, then it needs enough memory to buffer at least a few packages, not to mention its own internal buses for moving data between the ports and memory….

So even today, a switch can still be a very resource intensive thing, which is why some Gigabit switches can’t actually reach more than a handful of Gb/s of traffic at once due to internal bottlenecks.

“And honestly, even today a fair few “switches” are just simple hubs that do little else than mirror the package contents onto all ports”

Do you have a model number?

I often need these kind of “dumb” switches for use with WireShark.

It’s a pain to set up port mirroring and port forwarding on “smart” switches.

A lot of them are called “hubs”.

So there are plenty of them on the market, though most don’t do gigabit, so expect fast Ethernet or slower…

They are also the bane of nice network setups, since package latency can be rather arbitrary due to other devices on the same hub sending their own packages…

Also be sure to connect a true hub back to itself for full nostalgia, if missing terminators was the bane of the thinnet age then surely packet storming one’s self with a network loop was the joy of the later star topology ;)

Well I guess, it wasn’t truly a “star” yet then now that I think about it!

Ah, that is indeed a wonderful thing to do….

Though, if one has 2 or more actual switches.

Then connecting them up in a circle can be a rather nifty way to increase overall bandwidth, since the switches can then practically handle two packages in parallel.

Though, parallel/serial behavior over multiple ports is also an interesting topic.

Like if we have 2 cables going from switch A over to B, then should we multiplex our data over it, or should we use them as two independent cables?

One solution gives us 2x bandwidth for any package.

While the other gives us 1x bandwidth for a single package, but if we send a small package, then it can use the free link instead of needing to wait for a larger package to squeeze through both links… (It becomes a latency-consistency vs peak-bandwidth argument.)

You can find network taps (purpose built for passively capturing traffic) for pretty reasonable prices on ebay. The search terms “network tap” and “gigabit tap” both find a wide variety.

You can make your own with a cheap managed switch.

https://forum.netgate.com/topic/144521/creating-a-data-tap?_=1602895609553

My first gen LinkSys DSL router (BEFSR81 and probably the 41). It is really slow and good for like 5Mbps. The ARM controller, like a couple of my old Cisco consumer grade junk, died of corrupted FLASH. I have turned the main board into an unmanaged switch; it needs a clock and EEPROM (which were on the controller card).

The 8 port one uses the Zarlink MDS108 Unmanaged 9-Port 10 100 Mbps Ethernet Switch chip. There might be a similar smaller version of the chip?

Obviously you would need to use the I2C management interface to poke it.

I have not seen that in the switch datasheets for the 4 port (10/100) routers I have from other manufacturers.

How does this port mirroring actually work when the mirrored port is saturated in both directions (full duplex)?

Does the switch artificially limit the bandwidth so the mirror port can keep up in what is essentially half duplex mode?

Related to that dilemma I always wanted to try this: Take two 100MBit/s hubs and put one each between the separated RX and TX pairs.

Device1-RX—–TX–Hub1–RX—–TX-Device2

Device1-TX—–RX–Hub2–TX—–RX-Device2

And connect a sniffer to any other ports on the Hubs but “Sniffer-RX—–TX-HubX” only, not the TX line.

Would both devices still work in full duplex mode?

You’d set up 2 monitoring ports, 1 for each direction. You’d then need a computer with 2 NICs, to run Wireshark on. Wireshark supports multiple interfaces. The other way would be to use a switch and NIC that are capable of greater than that traffic, for example a 1G switch & NIC to monitor 100Mb connections.

I’ll soon see how it does with a higher bandwidth connection. Tomorrow, I’m upgrading my Internet connection from 75/10 Mb to 500/20.

“actual switch that needs a bit of processing for package handling and potential routing”

A layer 3 switch will have processing for setting up L3 routes, a layer 2 switch won’t need this, but might have CPU and memory for the user interface. However the layer 2 switching is all done in hardware, at line rate – no CPU or buffering required.

Unless several incoming packets need to go to one destination port… They have some hardware buffers per port for that reason.

Yes, a layer 2 switch is rather simple.

But ask someone in the early 80’s to buy a bunch of RAM for a buffer and they will potentially faint.

Especially all the added logic for handling that buffering as well.

So hubs were much much simpler and cheaper in comparison.

But yes, an L2 switch is very simple and rather dumb by today’s standards.

Though a hub can be built out of “a few” 74 series logic chips rather easily.

Back in 1989, I hand wired a couple of Ethernet controllers on Data General Eclipse prototyping boards.

Uhm, afaik a true OSI layer 2 switch needs a cache for the MAC address to port translation table and some way to manage that.

Don’t you need at least some kind of CPU for that?

Can’t imagine that being solved just with discrete logic…

Yep, it needs a CPU, though the switching fabric can be set up with tables to determine where a frame goes, instead of relying on the CPU to examine the MAC of every frame. However, that still requires a CPU to manage it.

Great article :-)

No, I don’t miss having to set terminal addresses on twinax attached devices

This is a great article. I’m curious, given the “countless changes to the core protocol” over the years, would a 10BaseT device from 1990 still work on my 2020 home LAN? My guess is that 10BaseT == 10BaseT, but maybe something’s changed?

“would a 10BaseT device from 1990 still work on my 2020 home LAN?”

Maybe. The 10BaseT devices in the early 1990’s were still half duplex, everything is full duplex these days. In theory, autonegotiation should take care of that, but I have encountered some hardware that would only support half duplex if explicitly configured to do so.

Yes, switches are backwards compatible.

Yes, can confirm. See my other posting below. Ethernet is backwards compatible.

You might find that the testing that 10Base-T support receives in modern chipsets leaves a lot to be desired.

I do ethernet tapping/interception for a living. 100Base-T remains relatively common in ICS, military and even some specialist areas of finance (my most recent and surprising experience: a fault tolerant credit card processing mainframe only supported 100Base-T). I’ve not seen 10Base-T in the wild for many years.

Previously covered here https://hackaday.com/2015/06/12/retro-edition-the-lan-before-time/ was a blog writeup with gems like a DIY Thicknet USB adapter: “Building a 10BASE5 “Thick Ethernet” Network” (2012) http://tech.mattmillman.com/projects/10base5/

Video recreating mixed thicknet/thinnet/10baseT setup, including windows 3.11 laptop :o : “Retro 10base5 Thicknet and 10base2 Thinnet network”

https://www.youtube.com/watch?v=bAcSnwmB_4Q

I notice his 10base2 cable was much thinner than the typical RG-58 cable.

Thicknet? I always thought that “Frozen Yellow Garden Hose” was a much more accurate name.

How many times did we have to type these lines into the CONFIG.SYS to get the PC to connect to the network??

DEVICE=DXMA0MOD.SYS (interrupt arbitrator)

DEVICE=DXME0MOD.SYS (Ethernet driver)

DEVICE=DXMT0MOD.SYS (NetBIOS driver)

Here’s a link to the original “Blue Book” spec.

https://gordonbell.azurewebsites.net/Ethernet_Blue_Book_1980.pdf

Oddly enough I came across a couple of BICC branded 10Base5 transceivers complete with N-type adaptors whilst rummaging through my collection of bits yesterday evening. This article brings back memories of that yellow cable snaking around and up through the buildings, MAU cables attached to hubs, bridges and even gateways between different network technologies. I liked ‘Thinnet’, especially with those (expensive) make-before-break connectors which allowed live insertion or removal of PCs. I implemented FDDI at a then blistering rate of 100Mbps, but without doubt the biggest change was the introduction of ‘Structured wiring’, which allowed us to deploy terminals and PCs from different systems anywhere in a building and, importantly, to move them without rewiring. My first twisted pair Ethernet installation used a pre-10Base-T standard implementation from Synoptics (Lattisnet, remember that?). Now LANs are commoditised and almost anyone can put together a working 1, 10 or 40 Gbps network without even thinking about it. But every now and then you still need to sniff a few packets to find out what’s going on; that’s still fun and a welcome distraction!

At the sugar mill where I did my apprenticeship we had a few coax switches, and many of the LAN cards had a termination selector on them. When I talk to most current IT people they say it never existed, but we had many unusual communications systems throughout the factory, with different process control brands doing their own thing.

Our company network guy shared a problem with me once.

He had been called in because the company accountant could no longer access the file server. “Have you changed anything?” “No.” So he visited the office and found that the desk had been moved across the room. As it was now away from the T-piece on the coaxial cable loop there was now a length of RG-58 (correctly terminated with BNC at both ends) from there to the network card in the PC. (For the uninitiated, the T-piece was supposed to connect directly to the network card as otherwise it would be an impedance discontinuity and cause signal reflections).

I worked on Token Ring products for Data General and 3Com. Once 100mbit Ethernet arrived, Token Ring was doomed.

Token Ring had a number of issues, but the main problem with it was that the Type I shared media cabling was even more cumbersome and finicky than “thick wire” Ethernet. When we got to building 16mbit twisted pair Token Ring switches, they were always harder to get through radiated emissions testing than 10/100 ethernet. This was because the TR waveforms were not tailored to the CAT3 cable as had been done for ethernet (due to the rigid clock edge stability requirements for TR). And TR was never going to go faster than 16Mbit/s

Another issue with Token Ring, was the more complex MAC firmware. Unlike with Ethernet, where the MAC was self-contained and the upper layer code was run on the attached host, TR used licensed firmware which had to be loaded into the MAC on powerup (this was because the media access process on TR couldn’t tolerate the latency introduced when the code ran on the host). More expensive MACs and license fees meant a TR interface card cost ~3x what a 10/100 Ethernet card cost, for a slower and more complex network. Once PC manufacturers started putting Ethernet chips on motherboards, TR was done.

I, too, have a couple of lengths of half-inch Ethernet cable lying around. It is actually pretty close to RG-213 50 ohm coax, and is eminently usable for HF ham radio feedline. I also have a reel of “thin net” coax and some crimpable BNCs, which come in quite handy for making RF patch cables. I have also successfully connected an old SGI desktop with a 10mbit AUI connector to a modern 10/100 ethernet network. Finding the little AUI-to-10/100 RJ adapter was a bit of a challenge, but you can still find them on eBay and from surplus dealers. I used a Linux system as a TFTP server to load an operating system update onto the SGI.

Thanks for the memories…

Does anyone remember (or have a link to) the Xerox TV commercial where the guy is explaining how Ethernet can connect computers, printers, scanners and copy machines? He’s in an office, and as he talks, he’s drawing an Ethernet network on his office wall. At the end of the scene, a couple of coworkers walk by his office door and one says to the other, “Is he drawing on his walls again?”

Only saw it once, but it’s such a typical “engineer” commercial…

It’s been a while, but I think ALOHA actually went to two different channels, one for upstream and one for downstream. They eventually went to “slotted ALOHA” due to the “hidden transmitter problem”: you can’t do CSMA-CD if you cannot reliably do the “CD” collision detection part, and the transmitters on the different islands could not always detect a colliding transmission.

Alohanet (sorta) lives on in amateur and commercial “packet radio”.

great article!

I really liked the idea of ARCNET. It could use either coax or telephone wire and worked in both star and bus topologies, or even as a mix. I never really worked with it much though as the one place I worked that had it was phasing it out for ethernet when I got there.

Anyway, back when I was exposed to ARCNET (late 90s) ethernet cable and hubs were sooo expensive and switches were just unobtainable. Or at least they seemed to be on younger me’s budget, I don’t know which. Maybe I’ll go check archive.org later and find out. I wanted to wire my home and my parents homes with ARCNET.

In those days every building was already wired with telephone wires that were already starting to fall out of use so ARCNET could have just been plugged in.

I imagined one hub per house, using star topology to run a line to each room but then each room could have any number of devices by just daisy chaining them. There would be no need to buy a new hub just because one more port was needed for a new device. Detached garages and barns could each have their own hub connected back to the house hub via coax. I forget if it took 50 or 75 ohm but I had stashes of both.

People I talked to told me they didn’t miss ARCNET because it required terminators and you had to flip dip switches to configure each card with a unique id.

A terminator was just a resistor crimped into a telephone plug. I had already cut my networking teeth by crimping 100s of ethernet cables so I was hardly going to be dissuaded by something so trivial as a terminator.

As for assigning ids to cards via dip switches. No big deal. Those were the days when “Plug n Pray” was replacing dip switches on motherboards (but not yet doing so reliably) and after that awful experience I liked the idea of manually assigning anything and everything by dip switch. Besides, how many computers was I going to have? Later in life I tried assigning port forwards to computers on a LAN behind a crappy AT&T U-Verse modem. I still see value to being able to hard set IDs!

So, when a building was being torn down I managed to acquire a big stack of ARCNET hubs. But I never managed to get ahold of more than one ARCNET card so I couldn’t use them for anything. Years later I realized that the price had dropped and speed increased of ethernet to the extent there was no point in holding onto that old ARCNET stuff so I sold it all on Ebay.

Oh well, it could have been great.

There was another network technology called “StarLAN”, which evolved into 10baseT. It was designed to be used over existing telephone wiring.

https://en.wikipedia.org/wiki/StarLAN

Oh yes, StarLAN. “AT&T StarLan Sales Training”:

Warning: was recorded in ‘They Live’ universe, which you learn at 2:00 minute mark ;o)

https://www.youtube.com/watch?v=REFfvSIxEP0

Would love to see someone set up a small StarLAN network nowadays and walk through its quirks. Seems the only cards still documented/somewhat available on the internet are for Melco Embroidery Machines. They do look pretty simple: two RS-422 transceivers (AM26LS30), Altera CPLD, 8KB SRAM, Intel 8051 micro and some buffers/address decoders.

I seem to remember the main commercial advantage Ethernet had over token ring in the early days was that token ring was patent encumbered and any 3rd party implementation had to get a license and pay royalties to IBM. Ethernet was an open standard, which meant pretty well anyone could create an ethernet card. This meant that, despite the initial performance issues, it quickly overtook token ring and out innovated it.

The big lesson is companies trying to control protocols and standards rarely ends well.

On the other hand, Token Ring had significant performance advantages over Ethernet. With the collisions & retransmissions, it wasted a lot of bandwidth. With token ring, only the station holding the token could transmit, which meant the bandwidth could be almost fully used. TR was also deterministic, which meant there was a hard limit on how long a station had to wait to transmit.

BTW, I used to do 3rd level support, mostly OS/2, but also some Windows stuff, at IBM Canada back in the late 90s. The network there was token ring, with Ethernet just starting to appear when I left in 2000.

Yeah, along with that IBM’s Token Ring was at least 4x more money than any other solution. The licensing issues helped to keep it that way. And that helped it to die. Kind of ironic. Or just plain funny to me. Of course the wiring was also really inconvenient. I still remember I bought my first butane powered soldering iron so I could easily solder Token Ring cables in attics and crawl spaces. I still have one! :-)

Ethernet, yet another example of tech becoming dominant not based on technical merit. Back when Ethernet was on thinnet Arcnet had superior performance characteristics even though it was rated at near a tenth the speed. @James Knott does a good job of summing up Ethernet woes. Every other network medium I’ve worked with (other than WiFi which is Ethernet for the sky) has used a “token passing” architecture, even if it wasn’t named “Token Ring”. I often used to quip, “Which would you rather drive in: A heavily populated city with no traffic signals (Ethernet) or one with (everything else)?” Bottom line is: every blink of the collision light equates to a loss of bandwidth on two fronts multiplied by the number of injured parties and possible new injuries: 1. bandwidth lost in transmitting the headers that detected the collision. 2. the “randomish” back off, wait and hope for the best delay.

I have often wondered what would have happened if other network technologies could have afforded to stay in business and continue to improve themselves as Ethernet did. Cheap was really the only thing it had going for it. Well, until UTP, which was nice. But still… with hubs one node could poison the whole net, even in a star topology. Thank GOD for affordable switches: they put sanity into the insane!

We played Doom over a serial connection in the 90’s – the cable made from 3pcs of 230v extenders, 20m between two flats :D

My first home “network” was over a parallel port “LapLink” cable. I was running OS/2 at the time and it had a parallel port adapter. It was also possible to do the same over a serial port, though I didn’t try that. Of course we had dialers for accessing the Internet. With my first ISP, I used SLIP and had a static IP.