Al and I were talking about the IBM 9020 FAA Air Traffic Control computer system on the podcast. It’s a strange machine, made up of a bunch of IBM System/360 mainframes connected to a common memory unit, with all sorts of custom peripherals to support keeping track of airplanes in the sky. Absolutely go read the in-depth article on that machine if it sparks your curiosity.

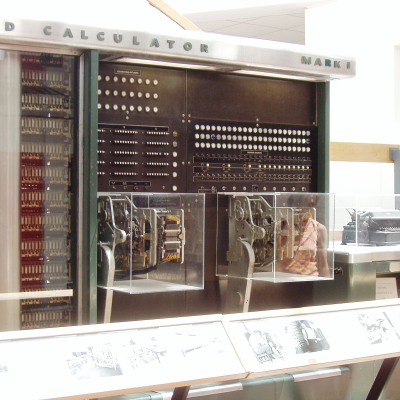

It got me thinking about how strange computers were in the early days, and how boringly similar they’ve all become. Just looking at the word sizes of old machines is a great example. Over the last, say, 50 years, things that do computing have had 4, 8, 16, 32, or even 64-bit words. You noticed the powers-of-two trend going on here, right? Basically starting with the lowly Intel 4004, it’s been powers of two ever since.

I wasn’t there, but the odd word sizes of those earlier machines give you the feeling that each computer was a unique, almost hand-crafted machine. Some must have made their odd architectural choices to suit particular functions, others because some designer had a clever idea. I’m not a computer historian, but I’m sure that the word lengths must tell a number of interesting stories.

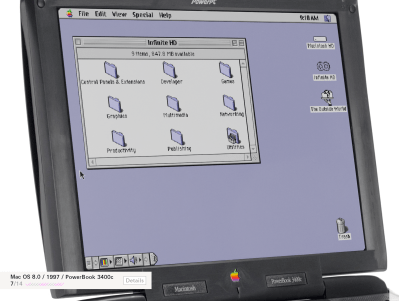

On the whole, though, it gives the impression of a time when each computer was its own unique machine, before the convergence of everything to roughly the same architectural ideas. A much more hackery time, for lack of a better word. We still see echoes of this in the people who make their own “retro” computers these days, either virtually, on a breadboard, or emulated in the fabric of an FPGA. It’s not just nostalgia, though, but a return to a time when there was more creative freedom: a time before 64 bits took over.