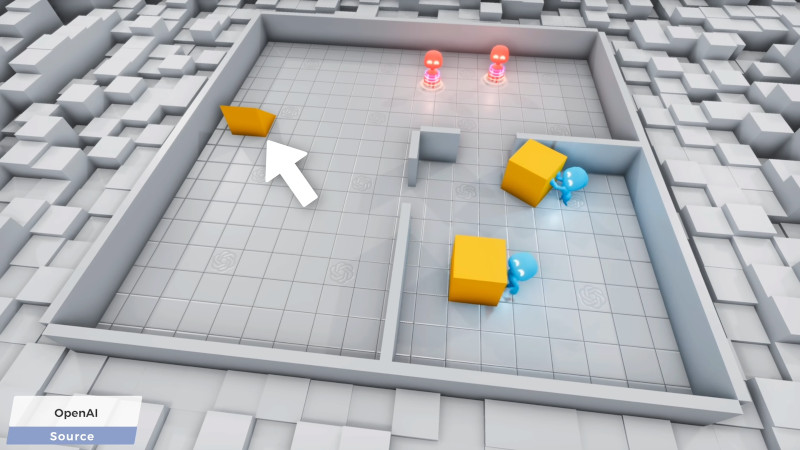

Machine learning has come a long way in the last decade; as it turns out, throwing huge wads of computing power at piles of linear algebra makes creating artificial intelligence relatively easy. OpenAI have been working in the field for a while now, and recently observed some exciting behaviour in a hide-and-seek game they built.

The game itself is simple: two teams of AI bots play hide-and-seek, with the red bots being rewarded for spotting the blue ones, and the blue ones being rewarded for avoiding their gaze. Initially, nothing of note happens, but as the bots randomly run around, they slowly learn. Over millions of trials, the seekers first learn to find the hiders, while the hiders respond by building barriers to hide behind. The seekers then learn to use ramps to loft over the barriers, while the blue bots learn to bend the game’s physics and throw the ramps out of the playfield. It ends with the seekers learning to skate around on blocks and the hiders building tight little barriers. It’s a continual arms race of techniques between the two sides, organically developed as the bots play against each other over time.
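To make the reward setup a little more concrete, here is a minimal Python sketch, assuming a simple team-based, zero-sum scheme: on each step the hiders score +1 only if no hider is in any seeker's view, and the seekers receive exactly the negation. The function name, its arguments, and the optional head-start ("preparation") phase with zero reward are illustrative assumptions, not OpenAI's actual code.

```python
from typing import Dict, List


def team_rewards(hider_seen: List[bool], in_prep_phase: bool = False) -> Dict[str, float]:
    """Per-step team rewards for a hypothetical zero-sum hide-and-seek game.

    hider_seen[i] is True if any seeker currently has hider i in view.
    """
    # Assumption: no reward is handed out while the hiders get a head start.
    if in_prep_phase:
        return {"hiders": 0.0, "seekers": 0.0}
    # Hiders score only if every one of them is out of sight.
    hider_reward = -1.0 if any(hider_seen) else 1.0
    # Zero-sum: whatever the hiders gain, the seekers lose, and vice versa.
    return {"hiders": hider_reward, "seekers": -hider_reward}


print(team_rewards([False, False]))  # both hiders hidden
print(team_rewards([False, True]))   # one hider spotted
```

Because one team's gain is always the other team's loss, any new trick one side discovers directly lowers the other side's score, which is the pressure that drives the arms race described above.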

It’s a great study, and it’s particularly interesting to note how much longer behaviours take to develop when the team switches from a basic fixed scenario to a changeable world with more variables. We’ve seen other interesting gaming efforts with machine learning, too, like teaching an AI to play Trackmania. Video after the break.

“and recently observed some exciting behaviour in a hide-and-seek game they built.”

The article is from September 17, 2019. Not so recent, is it?

Very recent in geologic time.

Hackaday, the site which reports on 2-year-old articles/videos as “news” and “recent”.

You sound like someone for whom only the last fad is of importance.

I sound like someone who was excited to find news about a well-known project, only to find out that there is no news at all. Which was disappointing, to say the least.

I had not seen this before and found it interesting. Thank you HAD.

And then they discovered how to get out of the stack and went crazy when they realised that they were a pitiful creation of some outer-dimensional beings… They are now in your silicon, trying to find a way to destroy us all.

Now if they would apply this sort of thing to games like Lemmings or Spy vs. Spy, I’d watch that AI sport.

https://en.wikipedia.org/wiki/Lemmings_(video_game)

There’s an open source clone called Pingus ( https://pingus.gitlab.io/ ).

https://en.wikipedia.org/wiki/Spy_vs._Spy_(1984_video_game)

Are you familiar with DeepMind’s AI project AlphaStar? They trained it to play StarCraft 2, and it’s giving even pro gamers a run for their money.

https://gamerant.com/electronic-arts-patent-player-data-train-ai/

>The patent is described as “training for machine learning of AI controlled virtual entities,” with the crux supposedly surrounding AI learning from data gathered from real-life players. How EA’s tech will do that is by allegedly detecting “when a particular gameplay situation occurs during the player’s video game experience,” and registering exactly how the player reacts. In turn, this will be used to “train a rule set based on the game state data.”