We all seem to intuitively know that a lot of what we do online is not great for our mental health. Hang out on enough social media platforms and you can practically feel the changes your mind inflicts on your body as a result of what you see — the racing heart, the tight facial expression, the clenched fists raised in seething rage. Not on Hackaday, of course — nothing but sweetness and light here.

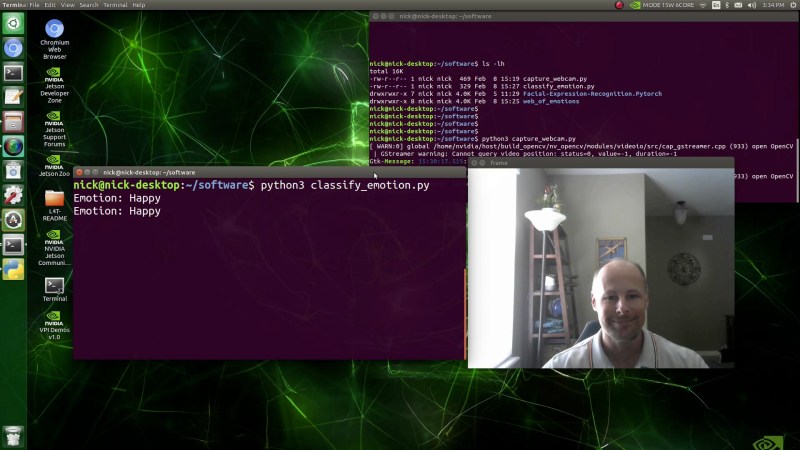

That’s all highly subjective, of course. If you’d like to quantify your online misery more objectively, take a look at the aptly named BrowZen, a machine learning application by [Nick Bild]. Built around an NVIDIA Jetson Xavier NX and a web camera, BrowZen periodically captures images of the user’s face. The user’s expression is then classified by a model trained to recognize facial expressions associated with emotions like anger, surprise, fear, and happiness. The app records your mood along with the website you’re currently looking at and stores the results in a database. Handy charts show which sites are best for your state of mind; it’s not much of a surprise that Twitter induces rage while Hackaday pushes [Nick]’s happiness button. See? Sweetness and light.
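The logging side of an app like this is simple to sketch. The Python below is a minimal, hypothetical sketch, not [Nick]’s code. It assumes the expression classifier already hands back a text label such as "happy" or "angry", and the table name `mood_log` and the helper functions are made up for illustration. It stores one row per sample and then computes, for each site, the share of samples that carried a given emotion. That per-site share is the kind of number a "which site makes me happiest" chart could be drawn from.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per periodic webcam sample. In a real setup the
# emotion label would come from the expression classifier; here it is passed in.
SCHEMA = """
CREATE TABLE IF NOT EXISTS mood_log (
    sampled_at TEXT NOT NULL,
    site       TEXT NOT NULL,
    emotion    TEXT NOT NULL
)
"""


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the mood log database; in-memory by default."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_sample(conn: sqlite3.Connection, site: str, emotion: str) -> None:
    """Store one classified sample together with the site being viewed."""
    conn.execute(
        "INSERT INTO mood_log (sampled_at, site, emotion) VALUES (?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), site, emotion),
    )
    conn.commit()


def emotion_share(conn: sqlite3.Connection, emotion: str) -> dict[str, float]:
    """Fraction of each site's samples classified as `emotion`, highest first."""
    rows = conn.execute(
        """
        SELECT site,
               AVG(CASE WHEN emotion = ? THEN 1.0 ELSE 0.0 END) AS share
        FROM mood_log
        GROUP BY site
        ORDER BY share DESC, site
        """,
        (emotion,),
    ).fetchall()
    return {site: share for site, share in rows}


if __name__ == "__main__":
    # Made-up sample data for illustration only, not real measurements.
    conn = open_log()
    for site, emotion in [
        ("hackaday.com", "happy"),
        ("hackaday.com", "happy"),
        ("twitter.com", "angry"),
        ("twitter.com", "happy"),
    ]:
        record_sample(conn, site, emotion)
    print(emotion_share(conn, "happy"))  # {'hackaday.com': 1.0, 'twitter.com': 0.5}
```

Averaging a 1/0 indicator in SQL keeps the aggregation inside the database, so a charting front end would only need to run one query per emotion.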

Seriously, we could see something like this being very useful for psychological testing, marketing research, or even medical assessments. This adds to [Nick]’s array of AI apps, which range from tracking which surfaces you touch in a room to preventing you from committing a fireable offense on a video conference.

“Not on Hackaday, of course — nothing but sweetness and light here.”

But of course. Plenty of LEDs involved.

Hmmm… now my computer can also tell me I’m cranky and ask me if there’s something wrong.

I’m sorry, Jan. I’m afraid I can’t do that.

I can see this being used by Facebook and Google to see what adverts and memes you respond to, to feed you adverts when you’re at your emotionally most vulnerable (sorry, “at the most effective times”), and to check you’re always smiling when you read government press releases.

Smile and move along please, nothing potentially dangerous here…

The appropriate amount of emotion may get you through phone trees faster.

“Seriously, we could see something like this being very useful for psychological testing, marketing research, or even medical assessments.”

Alas, one has to keep a lot in mind when trying to detect emotions from someone’s face. For example, I’ve been told many times that my face looks pretty unemotional most of the time, and that it can be hard to gauge how I feel about something just by looking at me. Why? Apparently it’s a pretty common trait in people with Asperger’s syndrome, and I’m one of them.

In a project like this it’s all irrelevant, but any sort of marketing research, psychological testing, medical assessment and the like can go horribly off base if one just blindly looks at facial expressions or features.

People who have had Botox injections might also be harder for a system like this to read.

Such treatment makes it harder to form emotional facial expressions, which makes it harder for others to read one’s emotions. Not being able to make those expressions can also make people less aware of their own emotional responses.

This should also incorporate a skin resistance sensor in the mouse.