If you snore, you’ll probably find out about it from someone. An elbow to the ribs courtesy of your sleepless bedmate, the kids making fun of you at breakfast, or even the lady downstairs calling the cops might give you the clear sign that you rattle the rafters, and that it’s time to do something about it. But what if your snores are a bit more subtle, or you don’t have someone to urge you to roll over? In that case, this AI-powered haptic snore detector might be worth building.

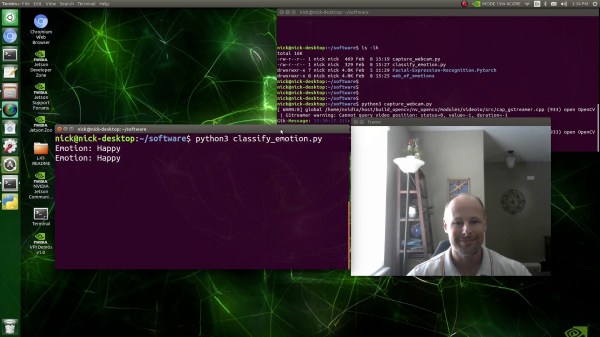

The most distinctive characteristic of snoring is, of course, its sound, and that’s exactly what [Naveen Kumar] chose as a trigger. To differentiate between snoring and other nighttime sounds, [Naveen] chose an Arduino Nicla Voice sensor board, which sports a Syntiant NDP120 deep-learning processor and a built-in MEMS microphone. To generate a model that adequately represents the full tapestry of human snores, a publicly available snoring dataset — because of course that’s a thing — was used for training. Importantly, the training data included samples of non-snoring sounds, like sirens and thunder, as well as clips of legit snoring mixed with these other sounds. The model is trained with an online tool and downloaded onto the board; when it detects the sweet sound of sawing wood three times in a row, a haptic driver board vibrates the pillow as a gentle reminder to reposition. Watch it in action in the brief video below.
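The "three times in a row" rule is simple enough to sketch in a few lines. What follows is not [Naveen]'s firmware, just a minimal Python illustration of the idea, assuming the classifier hands back one label per audio window. The `SnoreTrigger` class, the `"snore"` label string, and the sample label sequence are all hypothetical names made up for this example.

```python
class SnoreTrigger:
    """Fires once the classifier reports a snore several times in a row.

    Hypothetical helper for illustration; not taken from the project's code.
    """

    def __init__(self, required_hits=3):
        self.required_hits = required_hits  # consecutive detections needed
        self.streak = 0                     # current run of snore labels

    def update(self, label):
        """Feed one classification label; return True when the motor should buzz."""
        if label == "snore":
            self.streak += 1
        else:
            # Any non-snore sound (siren, thunder, silence) breaks the run.
            self.streak = 0
        if self.streak >= self.required_hits:
            self.streak = 0  # start counting again after firing
            return True
        return False


# Made-up label sequence: a siren interrupts the first run of snores.
labels = ["snore", "snore", "siren", "snore", "snore", "snore", "thunder"]
trigger = SnoreTrigger()
fired = [trigger.update(label) for label in labels]
print(fired)
```

Requiring a streak rather than a single hit means one stray misclassification won't shake the pillow, at the cost of a slightly slower response.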

Snoring is easy to make light of, but in all seriousness, it’s not something to be taken lightly. Hats off to [Naveen] for developing a tool like this, which just might let you know you’ve got a problem that bears a closer look by a professional, although it might work better as a wearable rather than a pillow-shaker.
