Last week I was sitting in a waiting room when the news came across my phone that Ingenuity, the helicopter that NASA put on Mars three years ago, would fly no more. The news hit me hard, and I moaned when I saw the headline; my wife, sitting next to me, thought for sure that my utterance meant someone had died. While she wasn’t quite right, she wasn’t wrong either, at least in my mind.

As soon as I got back to my desk I wrote up a short article on the end of Ingenuity’s tenure as the only off-Earth flying machine; we like to have our readers hear news like this from Hackaday first if at all possible. To my surprise, a fair number of the comments that the article generated seemed to decry the anthropomorphization of technology in general and Ingenuity in particular, with undue harshness directed at what some deemed the overly emotional response of certain NASA/JPL team members.

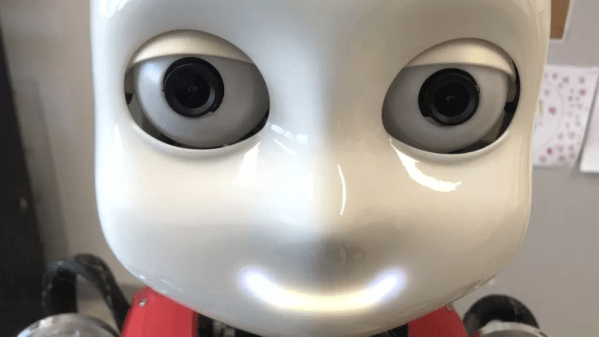

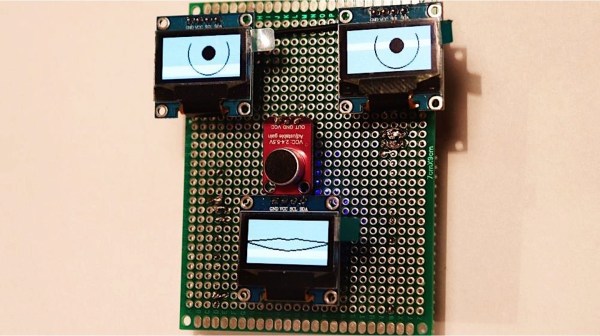

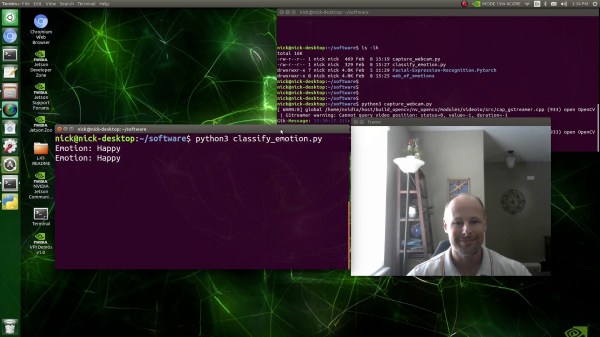

Granted, some of the goodbyes in that video are a little cringe, but still, as someone who seems to easily and eagerly form attachments to technology, I was perplexed by the disdain for an emotional response to the loss of Ingenuity. That got me thinking about what role anthropomorphization might play in our relationship with technology, and wondering whether there’s a reason, or at least a plausible excuse, for my emotional response to the demise of a machine.
