The much-anticipated video from the entry, descent, and landing (EDL) camera suite on the Perseverance rover has been downlinked to Earth, and it does not disappoint. Watch the video below and be amazed.

The video was played at the NASA press conference today, which is still ongoing as we write this. The brief video below has all the highlights, but the good stuff from an engineering perspective is in the full press conference. The level of detail captured by these cameras, and the bounty of engineering information revealed by these spectacular images, stands in somewhat stark contrast to the fact that they were included on the mission mainly as an afterthought. NASA isn’t often in the habit of adding “nice to have” features to a mission, what with the incredible cost-per-kilogram of delivering a package to Mars. But thankfully they did, using mainly off-the-shelf cameras.

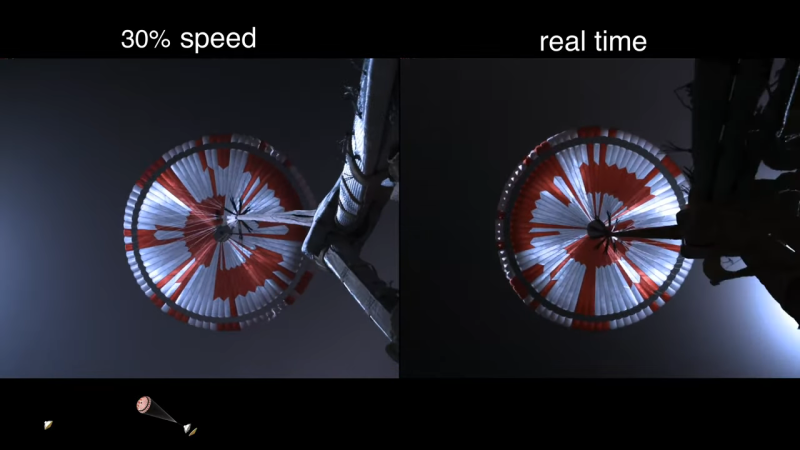

The camera suite covered nearly everything that happened during the “Seven Minutes of Terror” EDL phase of the mission. An up-looking camera saw the sudden and violent deployment of the supersonic parachute — we’re told there’s an Easter egg encoded into the red-and-white gores of the parachute — while a down-looking camera on the rover watched the heat shield separate and fall away. Other cameras on the rover and the descent stage captured the skycrane maneuver in stunning detail, both looking up from the rover and down from the descent stage. We were surprised by the amount of dust kicked up by the descent engines, which fully obscured the images just at the moment of “tango delta” — touchdown of the rover on the surface. Our only complaint is not seeing the descent stage’s “controlled disassembly” 700 meters away from the landing, but one can’t have everything.

Honestly, these are images we could pore over for days. The level of detail is breathtaking, and the degree to which they make Mars a real place instead of an abstract concept can’t be overstated. Hats off to the EDL Imaging team for making all this possible.

A special shoutout to MRO’s HiRISE imager too, which managed to capture a stunning image, from orbit, of the backshell and parachute impacting the surface.

Wild considering the level of tech onboard. FPGAs + a 200 MHz (max) PPC can be a potent combination.

Ingenuity is rocking a Qualcomm Snapdragon 801 processor!

Only because it’s not very important. Its only real job is to prove it can fly.

All good towards having provable space hardware.

All-stock cameras, except for the few components they had to change because they’d outgas in a vacuum and cloud the optics.

I would think the most important criteria would be environmental durability and energy efficiency.

The camera system is actually completely separate from the rover’s primary computer. One of the requirements for this project was that it had little to no impact on the rover’s critical systems during EDL, since it was already proven tech (from Curiosity) and NASA didn’t want to fiddle with it too much:

The EDLCAMs were developed with a minimal number of key requirements. The first requirement was that the EDLCAMs must not interfere with the flight system during the critical EDL phase of the mission. This requires that the EDLCAM imaging system have zero or near zero interaction with the MSL-heritage flight system elements during EDL.

If you’ve got some time to kill, the ~50 page whitepaper on the rover’s various camera systems is fascinating stuff:

https://link.springer.com/article/10.1007/s11214-020-00765-9

Right. Isn’t that what’s handled by the FPGAs?

Where’s the hack? The hardware is doing exactly what it was designed to do.

If putting hardware on other planets does not count as a hack then nothing does.

Many hacks work perfectly, this only makes them better hacks.

Many things are hacks, putting hardware on other planets is pure engineering.

Using off the shelf cameras by replacing a few components so they don’t outgas and filling voids with epoxy (or whatever they used) seems like the best kind of hack to me.

I don’t remember anything in the data sheets saying “suitable for interplanetary exploration” so it is clearly a hack. :)

The hack is dubbing the applause and cheering at mission control over the video just after touchdown occurred, as it clearly happened 3.44 minutes later ;^)

And why the performing seal act whenever something goes according to plan?

Yay dehumanizing language!

That’s fine, you can stay here when the rest of us leave for space and we won’t bother you anymore.

I did notice that while watching. Not exactly the old icy Apollo era guys. The one thing that bugs me more is when they dumb down the press briefings and get into the “feelings” everyone had. I prefer the more scientific level press conferences. I get that they are excited about it but really I am interested in the science and engineering of it. All the talk about “capturing an image to inspire people” does nothing for me either.

Don’t be naive. Nobody cares what you and I want, because we’re already sold. What pays for our fun is the muggles who care about the “human” aspects of exploration. The performing seal act sells tickets, and we get OUR entertainment paid for. Sure, it bugs me when the official feed talks about pounds and miles per hour, because it makes us look like yokels, but it keeps things from looking elitist to the actual yokels.

What is this? Reverse schadenfreude? Disappointment that it wasn’t a disappointment?

In the briefing the camera/imaging lead mentioned they were COTS cameras but had some slight modifications such as (IIRC) extra internal padding and also the ability to outgas. So, hacked.

Considering the semi-autonomous nature I can see why cameras weren’t an afterthought. Never mind a lot of “never tried before” needed to be verified.

I would think being able to have something like 30 cameras for the weight of one on a Viking mission might have helped.

Goosebumps!

The parachute color coding is an easter egg.

Anybody care to crack the code?

Converted to hex it is:

EC4D 5444 444C 645C

4000 DD92 D111 11EE

E991 1111 2D5E 1111

1169 A699 8000 29AD

1111 111A 59E9 2000

MSB is outermost of the 4 rings, LSB is the innermost

80 nybbles begins after one of the 3-nybble blanks.

Any of the bit order or start position assumptions might be wrong. It may well be four 80-bit words or 40 bytes, or morse, I have no idea.
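For anyone who wants to poke at it, here is a minimal Python sketch that splits the hex dump above into one bit string per ring. It takes the stated assumptions at face value (one nybble per radial line, MSB on the outermost ring, LSB on the innermost), and the function name `split_rings` is made up for illustration. If the bit order or start position is wrong, the output will be wrong too.

```python
# Hex words as posted above: 80 nybbles, one per radial line (assumption).
HEX_WORDS = """
EC4D 5444 444C 645C
4000 DD92 D111 11EE
E991 1111 2D5E 1111
1169 A699 8000 29AD
1111 111A 59E9 2000
"""

def split_rings(hex_text):
    """Return four bit strings, outermost ring first (MSB = outer, LSB = inner)."""
    nybbles = [int(ch, 16) for ch in hex_text if ch in "0123456789abcdefABCDEF"]
    rings = []
    for bit in (3, 2, 1, 0):  # bit 3 is the MSB, assumed to be the outer ring
        rings.append("".join(str((n >> bit) & 1) for n in nybbles))
    return rings

rings = split_rings(HEX_WORDS)
for name, ring in zip(("outer", "ring 2", "ring 3", "inner"), rings):
    print(f"{name:>6}: {ring}")
```

Each ring comes out 80 bits long, so from here you can try different start positions, reading directions, or word widths on one ring at a time.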

Hm. I thought it was just over-thinking a way to make it easier to determine the spin rate, in case of failure.

At the press conference Al Chen said there is a message to decode in it and “We invite you all to give it a shot — and show your work.”

I’m sure someone over on reddit probably has already cracked it.

Admittedly, my first thought was to try and see what it comes out as when converted to 8080 instructions. :D

And endianness is assumed too. I got nothing on it atm, but I’m no hex/bin hacker

Isn’t it DARE MIGHTY THINGS? Someone claimed it is a 10-bit encoding.

Yeah. nth letter of the alphabet. Lamest encoding ever. I totally overthought it.
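A quick Python sketch of that "nth letter" idea, assuming fixed 10-bit words where 1 means A, 2 means B, and so on. The helper names are hypothetical, and the bits are generated by the script itself for a round trip; they are not read off the parachute.

```python
def encode_letters(text, width=10):
    """Encode A-Z as fixed-width binary words, A=1 ... Z=26 (illustrative)."""
    return "".join(format(ord(c) - ord("A") + 1, f"0{width}b") for c in text)

def decode_letters(bits, width=10):
    """Decode fixed-width words back to letters; anything outside 1..26 becomes '?'."""
    out = []
    for i in range(0, len(bits) - width + 1, width):
        n = int(bits[i:i + width], 2)
        out.append(chr(ord("A") + n - 1) if 1 <= n <= 26 else "?")
    return "".join(out)

bits = encode_letters("DARE")
print(bits)                  # four 10-bit words, 40 bits total
print(decode_letters(bits))  # round-trips back to the input
```

Values outside 1..26 are shown as `?` here, which is just a choice for the sketch; any padding or separator symbols in a real pattern would need their own handling.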

And nice touch with the geographic coordinates on the outermost ring.

Guess they want the grade schoolers to figure it out.

Are you treating each panel as a bit? Is it 1 (red) and 0 (white)?

I’m not seeing the 3 nib “blanks” – that would be 12 panels in a row, yeah? I only see the inner 3 rings with red panels. How are you getting the outside ring start/stop location? Do you read clockwise or counter-clockwise?

If it’s \0 terminated, you can work back from the end. It may also be \0 started, as well. If it really is a “10 bit encoding”, it could be 8 bits, 2 stop bits, 0 parity, ASCII encoded.

Could also be $-terminated, like CP/M and PC BIOS. :)

Do you think it’s RZ or NRZ?

The strings of repeating ones argue against NRZ, since this would result from alternating 0s and 1s in the original (unencoded RZ) string, which seems unlikely. Also, without any clear syncing method, you couldn’t tell which state to start in.

Also, doesn’t look like asynchronous serial after all – I tried this and got numerous framing errors.
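If you want to repeat the framing test, here is a rough Python sketch. Note the swap: it uses the common 8N1 layout (one start bit of 0, eight data bits LSB first, one stop bit of 1), which also comes to 10 bits per character, rather than the 8-data-plus-2-stop layout floated above. The function names are made up for illustration.

```python
def frame_8n1(data: bytes) -> str:
    """Wrap each byte as start(0) + 8 data bits LSB-first + stop(1)."""
    bits = []
    for byte in data:
        bits.append("0")
        bits.extend(str((byte >> i) & 1) for i in range(8))
        bits.append("1")
    return "".join(bits)

def decode_8n1(bits: str):
    """Decode 10-bit frames; return (payload, number of framing errors)."""
    out = bytearray()
    errors = 0
    for i in range(0, len(bits) - 9, 10):
        frame = bits[i:i + 10]
        if frame[0] != "0" or frame[9] != "1":
            errors += 1  # bad start or stop bit: skip this frame
            continue
        out.append(sum(int(b) << k for k, b in enumerate(frame[1:9])))
    return bytes(out), errors

payload, errors = decode_8n1(frame_8n1(b"Hi"))
print(payload, errors)
```

Feeding it a ring's bit string at each possible start offset and counting errors is a cheap way to rule async serial in or out.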

I don’t know whether this is done as nybbles on each radial line, or each ring serial, possibly not synced with each other. Nybbles don’t look likely, since the high nybbles look pretty much the same as the low nybbles.

It’s not a balanced code – the ratio of reds to whites is different for each ring.

If each ring is separately clocked, it’s worth noting that each ring has exactly one sequence of w-r-w-r-w. Aligning the rings with these made it look like it almost wanted to split into 10 bit characters, but that didn’t pan out.
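The alignment trick is easy to script. A small Python sketch, assuming white is 0 and red is 1 so that w-r-w-r-w becomes `01010`, and treating each ring as circular; the ring string below is a made-up example, not parachute data, and the helper names are hypothetical.

```python
def find_circular(ring: str, pattern: str):
    """Return every start index where pattern occurs in ring, allowing wrap-around."""
    doubled = ring + ring[:len(pattern) - 1]
    return [i for i in range(len(ring)) if doubled.startswith(pattern, i)]

def rotate(ring: str, start: int) -> str:
    """Rotate the ring so that index `start` becomes position 0."""
    return ring[start:] + ring[:start]

ring = "1000000010"  # toy ring; the marker wraps past the end
hits = find_circular(ring, "01010")
print(hits)
print(rotate(ring, hits[0]))
```

If a ring really has exactly one such marker, `hits` has a single entry and the rotated string gives a repeatable starting point for chopping it into fixed-width words.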

ROT13’d spoiler: rnpu bs gur sbhe evatf pbagnvaf rvtug gra ovg flzobyf

vaare guerr evatf ner whfg bar vf n, gjb vf o, naq fb ba

bhgre evat fubhyq or ernq nf ahzoref znexvat yngvghgr naq ybatvghqr
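For anyone who does want the spoiler, ROT13 is built into Python's standard `codecs` module. A minimal sketch (the sample string is a harmless placeholder, not the spoiler):

```python
import codecs

def rot13(text: str) -> str:
    """Apply ROT13; running it twice returns the original text."""
    return codecs.decode(text, "rot_13")

print(rot13("uryyb, znef"))  # hello, mars
```

Paste the lines above into `rot13()` when you are ready to give up.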

Thanks! But Scott Manley already spoiled it for me.

You’re assuming that the code is RZ (return to zero). Could also be an NRZ code.

Look at that: an article with “stunning” in its title, that ISN’T clickbait.

That was my first thought, too. An article that actually had stunning footage, oh my.

There was a part of the presser where they were struggling to convey just how meaningful it was to the engineering teams to see their decade of work function on Mars.

If I had to guess, the single most compelling selling point for management to approve the additional cameras and data upload was getting quality footage of a supersonic parachute deploying in the Martian atmosphere. Everything else they can infer from sensor data, but if you look through NASA’s backlog, you’ll see parachute development is a major ongoing engineering effort, and that 70fps video was something they would pay dearly for.

It’s the second time we’ve done this maneuver. It still feels like the stuff of science fiction.

It is! When you can’t go out and give it a ‘go’ here on Earth. That is what makes this landing so … awesome. All those little steps ‘had’ to function in that short period of time, at the right time, without any trial runs (other than many simulations on computers back home). Miss one and it’s mission over. Let alone getting to the planet at the right time to enter the atmosphere precisely enough to get in the vicinity of your landing spot….. There is no “let’s get in orbit first”, then pick your time to deorbit for landing….

Friend-of-Hackaday Arko worked on the landing visualization system, and did a ton of helicopter flights over the Mojave Desert to get it dialed in. And dialed in it was — he claims they set Perseverance down within 5 meters of their target!

https://twitter.com/arkorobotics/status/1363948858036785152

Skycrane seemed to stir up a lot of dust for a procedure that was supposed to avoid stirring up a lot of dust.

It did its job of not completely sandblasting the rover with supersonic grit. Some distance between the rockets and the ground allows the exhaust to slow down and be less destructive, but putting it far enough up to not blow the dust around at all would be quite impractical.

Especially since Mars has pretty regular dust storms on its own.

I was hoping to see the impact of the heat shield.

But the rest was cool enough.

Here you go: https://i.imgur.com/VZwtaU7.gif

Genuinely impressed by this. It looks so realistic.

I was particularly impressed at the dust modeling on landing. Very impressive.

Also the fact they finally fixed the fake rocket exhaust in the previous versions, and replaced it with the correct clear exhaust.

Are you implying that this was faked? If so, don’t weasel, just say so.

I’d guess your sarcasm detector might need a bit of tuning.

I really don’t think they could have done that with a 555.

You win the internet today. Although now I’m on a mission: I need to find out if there’s a 555 somewhere on the spacecraft.

I think your mission should be to see how many 555s it would take to get to Mars.

Depends: their Isp would vary with operating voltage. :-)

Arduino.

Let’s start here:

“A 555-Based Computer”

http://www.paleotechnologist.net/?p=548

Thankfully it wasn’t shot in vertical mode.

I’m going to use the term “uncontrolled disassembly” from now on for any accidents I see

Some of us call it engineering.

One easy and foolproof extra “instrument” that could have been added at virtually no cost is a piece of wool or other lightweight fibre attached to say the top of the shadow gnomon on the colour circle. This would be light enough to blow about and be captured on camera, thus indicating velocity and direction, especially when the microphone has already picked up some wind noise. Rather like the wool tufts placed on prototype aircraft wings in the past.

I wish they would drive the rover over to the descent vehicle’s “controlled disassembly” site to see the wreckage. :-)

Martian sky is obviously blue, as it should be.

I like when NASA forgets to fake Martian colors to make pictures look lifeless and alien.

The colors from that video could be used for more exact restoration of real Martian colors on all Martian photos previously published by NASA.

I think this is the main hack. Definitely worth a HAD article.

Congratulations to NASA and the USA.

More of this, please.

“…supersonic parachute deployment…”

Was that supersonic in the context of Earth’s atmosphere (speed of sound in breathable air) or in terms of Mars’ atmosphere (speed of sound in what is there)?
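A back-of-the-envelope way to compare the two is the ideal-gas formula c = sqrt(γ·R·T / M). The Python sketch below is only a rough estimate: the heat-capacity ratios, molar masses, and temperatures are assumed round numbers (dry air at about 288 K for Earth, pure CO2 at about 210 K for Mars), not mission data.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol*K)

def speed_of_sound(gamma, molar_mass_kg, temperature_k):
    """Ideal-gas speed of sound in m/s."""
    return math.sqrt(gamma * R * temperature_k / molar_mass_kg)

# Assumed values, for illustration only.
earth = speed_of_sound(1.40, 0.02897, 288.0)  # dry air near sea level
mars = speed_of_sound(1.30, 0.04401, 210.0)   # CO2, cold thin atmosphere

print(f"Earth: {earth:.0f} m/s, Mars: {mars:.0f} m/s")
```

With numbers in this range the speed of sound on Mars comes out noticeably lower than on Earth, so a given velocity corresponds to a higher Mach number there.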