Raise your hand if you remember when PulseAudio was famous for breaking audio on Linux for everyone. For quite a few years, the standard answer for any audio problem on Linux was to uninstall PulseAudio, and just use ALSA. It’s probably the case that a number of distros switched to Pulse before it was quite ready. My experience was that after a couple years of fixing bugs, the experience got to be quite stable and useful. PulseAudio brought some really nice features to Linux, like moving sound streams between devices and dynamically resampling streams as needed.

The other side of the Linux audio coin is JACK. If you’ve used Ardour, or done much with Firewire audio interfaces, you’re probably familiar with the JACK Audio Connection Kit — recursive acronyms are fun. JACK lets you almost arbitrarily route audio streams, and is very much intended for a professional audio audience.

You may wonder if there is any way to use PulseAudio and JACK together. Yes, but getting the PulseAudio plugin to work with JACK is a bit of a pain. For example, all of the Pulse streams get mixed together and show up as a single device on the JACK graph, so you can’t route them around or treat them separately.

It’s the conundrum faced by Linux users for years now. PulseAudio isn’t usable for pro audio, and JACK is too complicated for everything else. I’m loath to suggest that yet another audio system could solve the world’s audio problems, but it would be nice to have the best of both worlds. To let the cat out of the bag, PipeWire is that new system, and it has the potential to be the solution for nearly everyone.

The Audio Video Chosen One

The story told is that [Wim Taymans] was contemplating applications distributed as Flatpaks, and realized that this would be problematic for video input and output. He had been working on making PulseAudio play nicely with containerized applications, and started thinking about how to solve the problem for video streams, too. While doing the initial protocol design, it became apparent that AV synchronization would be a devilish problem if audio and video were routed over separate systems, so it was decided that PipeWire would include audio handling as well. Once that decision was made, it became obvious that PipeWire could replace PulseAudio altogether. To make this a seamless transition, PipeWire was built to be fully compatible with the PulseAudio server. In other words, any application that can talk to PulseAudio automatically has PipeWire support.

If PulseAudio was going to be rebuilt from the ground up, then it might as well address pro audio. If PipeWire could satisfy the needs of pro audio users, it could feasibly replace JACK as the go-to audio backend for digital audio workstations like Ardour. Convincing every project to add support for yet another Linux audio server was going to be an uphill battle, so they cheated. PipeWire would just implement the JACK API. That may sound like a whole lot of feature creep, starting from a simple video transport system, and ending up re-implementing both JACK and PulseAudio.

Let’s make that point again. PipeWire is a drop-in replacement for PulseAudio and JACK at the same time. Any application that supports Pulse now supports PipeWire, and at the same time it can pull all the clever tricks that JACK can. So far I’ve found two killer hacks that PipeWire makes possible, which we’ll get to in a moment. Pretty much all of the major distros now support running PipeWire as the primary audio server, and Fedora 34 made it the default solution. There were a few bugs to work out in the first couple weeks of using it, but PipeWire now seems to be playing nicely with all the apps I’ve thrown at it.

The Latency Question

JACK gets used for all sorts of hijinks, and one of its killer features is that it can achieve latencies low enough to be imperceptible. Playing audio back with a noticeable delay can throw music or talkers off badly. The effect is so strong, researchers have built a speech jammer that uses the principle to silence speakers from afar. Let’s just say that you really don’t want that effect on your conference call. A 200 ms delay shuts down speech, but even lower latencies can be distracting, especially for musicians.

For PipeWire to really replace JACK, how low do we need the latency to go? The Ardour guide suggests five milliseconds to really be imperceptible, but it depends on what exactly you’re doing. With just a bit of work, on a modern machine, I’ve gotten my latency down to just under 18 ms, over a USB audio device. To put that another way, that’s about the delay you get from standing 18 feet away from an audio source. It’s just enough for your brain to notice, particularly if you’re trying to play music, but not a deal breaker. Need to go lower? You’re probably going to need a real-time kernel.

To get started tuning your latency, copy alsa-monitor.conf and jack.conf into their places in /etc/pipewire. There are a few tweaks here, but the main knobs to turn are api.alsa.period-size in alsa-monitor.conf and node.latency in jack.conf. To really get low latencies, there is a series of system tweaks that can help, essentially the same tweaks needed for low latency on JACK.
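To get a feel for what those numbers mean, the delay contributed by one buffer is just frames divided by sample rate. Here is a minimal sketch, assuming node.latency is written in the `frames/rate` form (for example `256/48000`). The helper name is made up for illustration, and real round-trip latency will be higher, since device and driver buffering add to it.

```python
from fractions import Fraction


def quantum_latency_ms(setting: str) -> float:
    """Convert a 'frames/rate' latency string into milliseconds per buffer."""
    frames, rate = (int(part) for part in setting.split("/"))
    return float(Fraction(frames, rate) * 1000)


if __name__ == "__main__":
    # A few illustrative settings, not recommendations for any given hardware.
    for setting in ("64/48000", "256/48000", "1024/48000"):
        print(f"{setting:>11} -> {quantum_latency_ms(setting):.2f} ms")
```

Halving the frame count halves the per-buffer delay, but it also halves the time the machine has to fill each buffer, which is why the system tweaks matter.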

Oh The Fun We Can Have

Now on to those tricks. While JACK can handle multiple streams coming from a single soundcard, it doesn’t handle multiple external sources or sinks easily. You have a pair of USB mics and want to record both of them? Sorry, no can do with JACK or ALSA. Get a bigger, more expensive audio interface.

PipeWire doesn’t have this limitation. It sees all of your audio devices, and can mix and match channels even into Ardour. It’s now trivial to make a three-track recording with multiple soundcards.

OK, I hear you saying now, “I’m not an audio engineer, I don’t need to do multi-track recording. What can PipeWire do for me?” Let’s look at a couple other tools, and I suspect you will see the possibilities. First is Calf Studio Gear, an audio plugin collection. Among the plugins are a compressor, parametric EQ, and a limiter. These three make for a decent mastering toolkit, where “mastering” here refers to the last step an album goes through to put the final polish on the sound. That step is sorely lacking on some of the YouTube videos we consume. Inconsistent audio levels are the problem that drives me crazy most often.

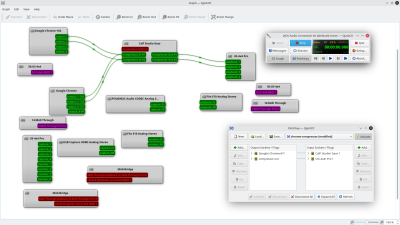

A second tool, qjackctl, helps put Calf to use. Not only does it allow you to see and manipulate the graph of signal flow, it has the ability to design simple rules to route audio automatically. Those rules use Perl regex matching, and it’s easy enough to set them up to automatically direct audio streams from Chrome into the Calf compressor, and from there to your speakers. Add Calf and qjackctl to your desktop’s autorun list, and you have an automated solution for bad YouTube mastering. Why stop there? Going to be on a Zoom call? Route your mic through Calf, and do a bit of EQ, or add a gate to cut down on background noise. Since you’re at it, record your audio and the call audio to Ardour.
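The idea behind those rules can be sketched outside of qjackctl. The snippet below is only an illustration of regex-driven routing, not qjackctl’s own rule format. The client names and patterns are hypothetical, and it uses Python’s `re` syntax, which is close to but not identical to Perl’s.

```python
import re

# Hypothetical routing rules: (source client pattern, sink client pattern).
RULES = [
    (re.compile(r"^(Google )?Chrom(e|ium)"), re.compile(r"^Calf Studio Gear")),
    (re.compile(r"^Calf Studio Gear"), re.compile(r"^Built-in Audio")),
]


def plan_connections(sources, sinks, rules=RULES):
    """Return (source, sink) pairs for every rule whose patterns both match."""
    plan = []
    for src_pat, sink_pat in rules:
        for src in sources:
            if not src_pat.search(src):
                continue
            for sink in sinks:
                if sink_pat.search(sink):
                    plan.append((src, sink))
    return plan


if __name__ == "__main__":
    # Made-up client names standing in for whatever the graph reports.
    sources = ["Google Chrome", "Firefox", "Calf Studio Gear"]
    sinks = ["Calf Studio Gear", "Built-in Audio Analog Stereo"]
    for src, sink in plan_connections(sources, sinks):
        print(f"{src} -> {sink}")
```

Anything that matches no rule, like Firefox here, is simply left alone. That is the behavior you want from an automatic patchbay.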

Work Left To Do

PipeWire was originally intended to shuffle video around. That part works too. Browsers have added PipeWire support for video capture, and if you happen to be running Wayland, desktop capture is a PipeWire affair now, too. OBS has added support for PipeWire video inputs, but output to PipeWire is still unimplemented. And on that topic, while the JACK tools work great for audio, the video control and plugin selection is noticeably lacking.

There is one thing that JACK supports that PipeWire currently can’t touch. JACK supports the FFADO drivers to talk to FireWire audio interfaces, and PipeWire can’t support them at all. (OK, yes, ALSA has a FireWire stack, but it’s not in great shape, and only supports a handful of devices.) USB3 has certainly replaced FireWire as the preferred connection for new devices, but there are plenty of quality interfaces still at work that are FireWire only. Very recently, a new TODO item has appeared on the official list: FireWire backend based on FFADO or fix up ALSA drivers.

So where does that leave us? PipeWire has already changed what I can do with Linux audio. If the video ecosystem develops, it has the potential to make some new things possible, or at least easier, there too. The future is bright for multimedia on Linux.

Damn that is cool, I’ve hated pulse ever since I first started trying to get it to behave. It has got better since, but its still a tremendous pain for many many things compared to without it… But it does do a few things ALSA can’t, or at least can’t do half as conveniently so I did stick with it.

But a single tool that unifies basically all of the Linux audio stack really makes sense to me, should be much less confusing to decipher how the audio works when its all one tool, and the documentation and polish catch up. Shocked I’d never heard of it till now (not that I am in any way a sound professional/ musician to really be looking for more features than bare ALSA – While I’ve stuck with Pulse because it can do some things easier than ALSA, I don’t actually need or use most if not all of those features in general..).

And being able to handle video as well is of course very useful.

For a decade now JACK has been my main audio system, and Pulseaudio outputs via jack_sink to JACK. No problems so far. Not sure if something should be fixed that ain’t broken.

It is mentioned in the article it is for “containerized applications” so say that your web browser will never have access to the files for that new PCB you are designing or …

Containerizing apps on a desktop computer is a problem IMHO, not a solution. I had to laugh when I read that there are “solutions” that take a “common” container with the base libs and stuff. So they copy the dependencies of a non-container system to the container world. Funny, really.

My first and only try to get a flatpack’d app ended with “missing symbol on glibc”. So … nay.

To get back to the problem “containerized application”: Run a netjack instance there and connect it to the base system running jack.

BTW that’s how I get high quality audio from a Windows VM to my Linux host.

Why do they bother to fix the roads and bridges that I don’t drive on?

Hey, I fixed a table yesterday. You probably shouldn’t drive on that either. Will need to find another box of screws if you do.

In the US…. they don’t.

Can you use that setup to move application streams around individually? Or support multiple outputs or inputs at once?

Pulseaudio is for entertainment. All I need is to feed it into jack if I want to record my browser’s sound. Or to give Linphone a microphone that’s available in jack.

i do not need Pulse to record my browser (Firefox) only jack

If you mean “multiple interfaces” with “multiple outputs” then there are some solutions in jack. With a recent jack use:

jack_load zalsa_out -i "-d hw:"

There is zalsa_in as well. For older jack versions there are standalone programs alsa_in and alsa_out.

Nice article. I have been running PipeWire for about 2 months on ArchLinux and had no issues so far. I have in fact jumped from ALSA directly to PipeWire. Yes, I had to do some ugly hacks to avoid installing PulseAudio, like running some applications using apulse (PulseAudio Emulation for ALSA), fortunately that problem is gone.

Oh. I forgot. There is a huge pile of pro audio hw that uses firewire that can be got very cheap as driver support for current mac or windows is falling away. I bought a Mackie onyx 1220 desk with a built in 18 in, 2 out firewire audio card. Just cost me 100 euro.

Unfortunately after an update of my ubuntu 21.04 distro, jack will not start with that card as the audio interface. FFADO support is important.

I’ve gotten used to jack especially since I can connect multiple instances of rakarack to split and combine, and output to all 6 channels. That bass channel is usually full range as well. My gripe is the pulse audio defaults to mute when booted up and sometimes that’s the forgotten setting that keeps me from taking off.

I often run 2 jackd with different latencies at the same time, each with its own interface: One with big buffers/latency for my DAW project, one with small buffers/latency for Guitarix and software synths.

Not sure if Pipewire will be able to do this.

Not a problem. PipeWire can do this in a single server or separate servers.

I remember when OSS was the new kid on the block. Later, ALSA was a God-send. Pulse was integrated into distros only a few years too early. It’s recently hit my definition of stable, finally. Choosing JACK over Pulse wasn’t a choice that I’ve ever needed to make. Honestly, I’d had my fill of fun configuring Linux audio by the time I got my Soundblaster AWE64 Gold working – today I want plug-n-play audio to save my time to be used on something more important.

I’m excited about Pipewire. It appears to be very well thought out.

It’s pretty awesome. I’ve been using pipewire for about 6 or 8 months now.

If you want to play with it, the latest version of EndeavourOS has pipewire as its default with pipewire-jack and pipewire-pulse bridges already set up. So you could try out the live disc and see how it works with your interfaces.

I love it. It has the depth and breadth for audiophiles and sound engineers, but it’s also fisher price “plug the green wire into the big box” simple for just connecting things.

Great it’s not part of systemd 🙂

+1,000,000,000,000,000

…yet!

B^)

These are not the distros you’re looking for..

Any project to replace anything by Lennart Poettering is a project I am 100% in support of.

This! So much this!

Indeed.

Can we get rid of all the “automatically switch things around” garbage?

I use the sound card on my PC as part of various electronics experiments, generating and measuring all kinds of signals.

Every time I change connections, the inputs and outputs switch and change all over the place.

Even in those cases where I just use a voice chat program, I don’t want the damned thing flip-flopping all over the place. I want to be able to unplug a headset, then plug it back in without the system trying to guess where to get or send audio.

The operating system should keep its paws off. I’ll tell it where things should go. It isn’t smart enough to know what I want done.

I usually end up having to disable PulseAudio to get things to work how I want them.

Absolutely, but it’s not just the OS. Certain apps *cough*discord*cough* love to think they’re clever and select a different audio output device of their own choosing. I don’t care that the dumb HDMI to VGA adapter claims it offers audio, my old Dell LCD is not a speaker!

“my old Dell LCD is not a speaker!”

Have you tried increasing the voltage?

surely that means it becomes a smoke machine rather than a speaker

Idk, I think there would be *some* sound, at least briefly ..

Sounds like you didn’t increase the voltage enough. Never heard of plasma tweeters? lol

Oh, good heavens, yes. Discord is an absolute pain.

How would any program guess that I want to send audio out to the HDMI going to the TV and that it should use the extra plugged in USB audio adapter for microphone in? I use an external adapter for the microphone because it is better than the built in one. I use the HDMI output so that the sound comes from the big screen TV where I see the people I’m talking to.

No matter how clever the software, it has no way of knowing why I hook things up the way I do. It could never guess why I don’t want to use the built in audio adapter.

Re: call recording, make sure you’re aware of the regulations for the locales of the participants, or get in the habit of giving a blanket notice, not every place is a one-party notification state.

That’s true, very good point. I’m using this for podcasting, where the whole point is recording the call, but YMMV.

this is NOT legal advice: For personal calls as long as the recording party is in a one-party state it doesn’t matter what the other party state laws are.(assuming that you’re not also a resident of that state) I’m not sure for business how that works.

What if you’re phoning someone on a party line?

That only applies to *telephone* calls. AFAIK, those laws do not apply to ANY internet based chat/voice/video application.

I don’t think that’s accurate. At least one law firm’s take: https://www.barley.com/legal-risks-of-recording-video-conference-calls

Oh man. I have been struggling with audio on mint and ubuntu for the last couple of months. One day it works, the next day none of it. The whole hodgepodge of paperclips and chewing gum solutions to have a route from apps to sound cards and vice versa never had a good architect. Hence the pile of half baked tools. Pulseaudio was the limit, not even trying to streamline things, but just introducing yet another set of settings that can be wrong. Since i’ve switched from qjackctl to cadence, things have been going smoother, but it is still a far cry from what it should be.

I haven’t had problems with getting audio to work in Mint but getting it to work how I want is impossible. Using the audio kits like qjackctl and cadence type of setup almost gets there but too much restriction in how things can be connected. It’s like someone used kxMixer on an older SB Live card and tried to recreate that in an environment that is actively hostile towards that type of control from the user. It’s easier to just splice audio devices together to form a rack style of audio handling to avoid the PC having any control over it at all lately.

Excited to see FireWire is on the todo list. There are plenty of prosumer or pro FireWire interfaces that are very capable. USB is usually slightly worse latency-wise, and Thunderbolt interfaces are still pretty expensive. I’ve tinkered with a PipeWire/Jack setup, but the documentation wasn’t quite there yet, and I found it hard to understand how to re-route everything via Jack (I made a hackish workaround that sort of works, but is a bit brute force by blacklisting the module for the integrated sound chip).

My biggest use case with JACK was that it allowed me to link different applications together.

For a long time, I was using JACK to link Twinkle (a SIP telephony client) to Zoom for a member of our radio club who didn’t have Internet access. (Yes, Zoom has dial-in numbers, but we didn’t have access to those at the time.) I can pull the same stunt with Jitsi, Slack, Mumble … anything that can talk to ALSA.

The latency was good enough for my purposes. That said, I’ll have to have a look and see if there’s a delay tool there, I could have some fun with the telephone scammers when they ring from “the technical department of Telstra” about “my internet connection”. Just play their own verbal diarrhoea back to them with a delay.

i’m putting pipewire on my todo list because i run my browser in a container and would like to be able to get audio out of it. and the idea of streaming video might have some use to me as well.

and, like others, i’ve ‘fixed’ my system a couple times by nuking pulseaudio :)

but i’m pretty allergic to the newness of it all. when i first started trying to use alsa, probably some time between 2000 and 2002, it was mature enough that it was starting to become oppressive — there was real pressure to ditch OSS — but it was also so immature that literally all of the documentation was for an outdated version of the API. it wasn’t just bad documentation, it was for a significantly different API. that was perplexing to me, given that they were actively evangelizing the product to developers. i don’t know if anyone remembers GGI (kind of a precursor to wayland, in a sense), but they evangelized the documentation long before they had a product and i always thought that was the model to follow.

now that obsolete documentation is long-gone but i looked recently and found, now it’s just ‘bad documentation’. the reference-style documentation is awful, and the tutorials are all over the map. and it’s all 10+ years old. everyone had their struggle in the 2000s, and different blogs and tutorials were published then, and now everyone’s lost interest. ALSA is still the standard, and still no one has properly documented it. kind of disappointing, but workable….except that the ALSA interface is actually extremely dependent on the hardware driver…without documentation you’re left with trial and error, but then what works at home won’t work elsewhere.

i’ve learned a few different APIs over the years, gstreamer, portaudio, CoreAudio, some others. gstreamer changed underneath me and none of that works anymore. ‘libao’ drifted enough to break mpg321, haven’t decided who to blame for that one. i’d like to know how much CoreAudio has drifted, but macos yuck.

as a user, i might find a use for pipewire…but the idea of developing for it gives me bad feelings. the new thing always seems to get evangelized at the exact worst time.

pulse-dlna explicitly NOT supported, non-starter. I have 3 workstations, pulse allows me to use 1 set of speakers (or my tv or dlna capable stereo for audio)

hopefully this can be addressed soon

Any reason this has to be handled at the sound server level? Isn’t there another project that implements this as a separate service?

I haven’t played with it myself, but apparently an option would be to use already existing Jack network audio software like Zita njbridge or NetJack. https://www.reddit.com/r/linuxaudio/comments/q4bcx1/pipewire_audio_over_network/hgfhs62/

“PipeWire doesn’t have this limitation. It sees all of your audio devices, and can mix and match channels even into Ardour. It’s now trivial to make a three-track recording with multiple soundcards.”

There is a reason JACK doesn’t do this – It’s clock drift. Multiple independent sources will all have a different idea of the sampling rate. After a few minutes/hours things WILL drift apart.

You could just resample the signal continuously to adjust for the drifting clocks (pulseaudio does this for synchronizing simultaneous outputs), but this introduces more latency and doesn’t fit well in a “professional audio” stack.

PipeWire uses a very accurate and stable adaptive resampler when multiple clocks are in use. It adds around 48 samples of latency. So, it will work reasonably well for most uses. If you want a pro setup you can link the cards to a word clock and PipeWire will not resample.
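For a rough sense of scale on both points in this thread, here is a back-of-the-envelope sketch. The 50 ppm clock mismatch is an assumed, illustrative figure, not a measurement of any particular card. The 48-sample figure is the one quoted above, and 48 kHz is an assumed sample rate.

```python
def drift_samples(ppm_difference: float, rate_hz: int, seconds: float) -> float:
    """Samples by which two free-running clocks diverge after `seconds`."""
    return ppm_difference * 1e-6 * rate_hz * seconds


def samples_to_ms(samples: float, rate_hz: int) -> float:
    """Express a sample count as milliseconds at the given sample rate."""
    return samples / rate_hz * 1000.0


if __name__ == "__main__":
    rate = 48000
    # Assumed 50 ppm mismatch between two cards, over a ten-minute take.
    drift = drift_samples(50, rate, 600)
    print(f"drift: {drift:.0f} samples = {samples_to_ms(drift, rate):.1f} ms")
    # Latency quoted for the adaptive resampler.
    print(f"resampler: {samples_to_ms(48, rate):.1f} ms")
```

Under those assumptions, uncorrected drift reaches tens of milliseconds within minutes, while the resampler costs about one millisecond. That is the trade being described.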

Will it support webcam streams?

I’m waiting years for an offset function where i can sync A/V with a specific audio delay. My software DSP needs some time to process audio. I dont see anything in this direction coming. Until then: no FIR convolution in Netflix and Youtube.. No revolutions in sight imho.

Is this going to be the audio server that finally makes it simple to get audio in a freerdp, vnc or other remote desktop situation?

Yes, I know you can do it with pulse but anything that requires typing half a dozen commands on both the client and the server each time one connects and requires the client, which may not even be Linux have pulse or other normally Linux only software installed isn’t really a usable solution.

When I started using Linux one had to spend hundreds of dollars on third party software to get a remote desktop on Windows or Mac. Ssh, remote x and vnc made Linux seem like magic. But Windows has had an easy to use remote desktop with audio since when, 2000 maybe? In Linux development seems to have been in exporting audio to networked stereo equipment and advanced stuff that while completely forgetting the remote user.

I went to install it on Ubuntu Studio and was surprised to find it already installed.

I guess thats why Ubuntu Studio audio has been working so smoothly?

This is wonderful. I remember when I first encountered PulseAudio and basically abandoned Linux for a while because a computer with no sound is not much of a computer for doing the things I do on them. It has since improved a ton as all have noticed and commented, but trying to do anything “fancy” ends in frustration. I’m going to check this out. Thanks for the tip!

I have personally broken my PulseAudio setup on a recent distro by trying to set up JACK on a by-program basis; I was never able to fix it, it didn’t work until I started fresh. I can verify that even now, trying to get JACK to work alongside PulseAudio so you can have precise audio streams for Ardour or some other programs is a beast to handle.

Not a single comment on the awesome picture in this article? I’m disappointed.

I think we have gotten used to the great illustrations that it’s not even at a point of doubt whether anyone admires it.

This blog is not for any clickbait-headlines, its worth scrolling through and selecting the articles based on the spot-on header illustrations, even when it is just the perfect still of the video [[https://hackaday.com/tag/ornithopter/]]

As of a couple days ago, FFADO support is no longer necessary! The Linux kernel has had native ALSA support for most FireWire audio interfaces for a while, but it was too broken to use (clocking issues causing glitches). That has now been fixed, and it should be released in Linux 5.14. I’ve been using PipeWire with my Focusrite Saffire 26 FireWire interface for a week (snd-dice driver on a testing kernel), and it works great.

I actually had a prototype FFADO plug-in for PipeWire half-written, but I don’t intend to continue that work, now that ALSA support finally works properly. The FFADO package itself is still useful, though, as it has ffado-mixer which is required to control the hardware mixers on many FireWire interfaces properly. It can be used together with the kernel ALSA drivers, so no issues there.

OK, that’s interesting. I have a Saffire 40 in my desktop rack, been playing with it connected to pipewire. I was a bit surprised when it worked without much fuss, but… I’ve been getting weird audio crackling that sounded like a bunch of xruns, but nothing was showing xruns. Is that the sort of issue the patch fixes? The next question is whether this fixes the latency problem, allowing for reasonable latencies. Very interesting and thanks for the update!

Yup, that kind of crackling is exactly it. It *is* xruns, but at the audio interface level, due to the bad clocking.

I’m not sure what you mean with the latency problem. If you mean better latencies than PA, then yes; the PW folks have a bunch of comparisons with JACK and even sometimes PW comes out on top. If you mean something specific to FireWire, I’m not sure; I’m currently testing at buffer size 512 with a rather heavy Ardour project (can’t run at 256, not JACK, not PW). I can try how low I can go on lighter workloads later. I’d hope moving the driver into the kernel improves latencies if anything, though.

Oh, also, one very cool thing about PW is that the latency is dynamic, so you can be using normal apps at high latency/low CPU, then spin up some proaudio stuff (there’s an environment variable to tell the JACK shim what buffer size to request) and everything seamlessly switches over to the lower latency. JACK2 kind of supports this, but not well, not automatically, and not seamlessly in my experience.

I may need to figure this out in further detail, and write a followup article once 5.14 is released… There seems to be two latency knobs to turn for Pipewire. PIPEWIRE_LATENCY is dynamic, and can be set per application, which is great. There is also a latency setting in alsa-monitor.conf, api.alsa.period-size, and that one is not dynamic. I know that second setting is useful for getting lower latency on USB devices, but I haven’t yet played with it on Firewire hardware.

The latency problem I mentioned is a reference to this: https://gitlab.freedesktop.org/pipewire/pipewire/-/issues/326#note_705678 I understand that to mean that the alsa.period-size can’t be set any lower than 256 for some Firewire hardware.
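As a sketch of the per-application knob: the snippet below builds an environment with PIPEWIRE_LATENCY set and starts a client with it. It assumes the variable takes a `frames/rate` value such as `256/48000`. The helper names are made up for illustration, and the command to launch is left to the caller.

```python
import os
import subprocess


def latency_env(frames: int, rate: int, base=None) -> dict:
    """Copy an environment and set PIPEWIRE_LATENCY to 'frames/rate'."""
    env = dict(os.environ if base is None else base)
    env["PIPEWIRE_LATENCY"] = f"{frames}/{rate}"
    return env


def launch(command, frames=256, rate=48000):
    """Start `command` (a list of arguments) with the requested latency."""
    return subprocess.Popen(command, env=latency_env(frames, rate))


if __name__ == "__main__":
    # Only prints the setting; call launch([...]) with a real client to use it.
    print(latency_env(256, 48000, base={})["PIPEWIRE_LATENCY"])
```

Because the variable is set only in the child’s environment, other applications keep whatever latency they already had.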

Well, that tears it.

USE="${USE} -pulseaudio screencast"

emerge --ask --verbose --changed-use --update --deep @world

See you on the other side!

No jack bridge that pulse has, constant issues with random muting of audio, 0 support when submitting issues. For months I tried to make it work, now I just blacklist it.

[all of the Pulse streams get mixed together,]

Actually it is possible to get more pulse-audio-jack-sinks

pactl load-module module-jack-source client_name=pulse_source_2 connect=no

and in pavucontrol you can split up the applications

True, and obvious in retrospect. Thanks!