It’s quite a while since any of us unpacked a brand new VGA monitor, but since so many machines still have the ability to drive them even through an inexpensive adaptor they’re still something that finds a use. With so many old VGA flat panel monitors being tossed away they even come at the low low price of free, which can’t be argued with. CNXSoft’s [Jean-Luc Aufranc] was tasked with fixing a dead one, and wrote an account of his progress.

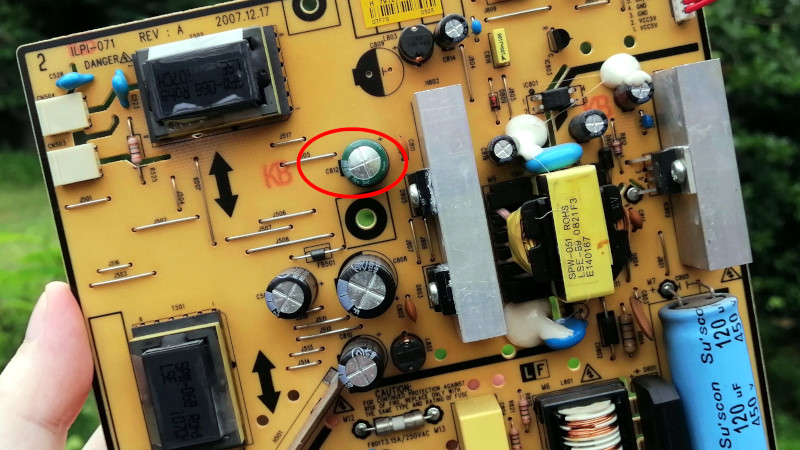

Seasoned readers will no doubt be guessing where this story will lead, as when he cracked it open and exposed the PSU board there was the tell-tale puffiness of a failed electrolytic capacitor. For relative pennies a replacement was secured, and the monitor was fixed. As repair hacks go it’s a straightforward one, but still worth remarking because a free monitor is a free monitor.

We called the demise of VGA back in 2016, and have seen no reason to go back on that. But for those of us left with a few legacy monitors it’s worth remembering that DVI and thus the DVI compatibility mode of HDMI is little more than a digitised version of the R, G, and B channels you’d find on that trusty blue connector. Maybe that little dongle doesn’t make such a bad purchase, and of course you can also use it as an SDR if you want.

Been there, done that. With Samsung screens an additional fuse often blows, too.

All in all that 10 or 15eur repair cost many many years ago was a good deal for me, instead of buying a new monitor!

You’re making up the cost in electricity used.

The CCFLs don’t use that much more power compared to LED backlights.
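For a rough sense of scale, the running-cost gap can be sketched with a little arithmetic. The wattages, daily hours and tariff below are purely illustrative assumptions, not measurements of any monitor mentioned here:

```python
def extra_cost_per_year(old_watts, new_watts, hours_per_day, price_per_kwh):
    """Yearly cost of the extra power drawn by the older monitor."""
    extra_kwh = (old_watts - new_watts) * hours_per_day * 365 / 1000
    return extra_kwh * price_per_kwh


# Hypothetical figures: a 40 W CCFL panel vs a 20 W LED panel,
# used 8 hours a day, with electricity at 0.30 per kWh.
print(round(extra_cost_per_year(40, 20, 8, 0.30), 2))
```

Plug in your own numbers; with a small wattage gap it can take a long time before the extra electricity outweighs the price of a new monitor.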

If you really want LED backlighting on an old CCFL monitor it’s not that hard. On eBay, search for CCFL LED conversion kits. You get an LED driver board and two strips of LEDs to go in the edge channels where the CCFL tubes are. The driver board needs 12 V DC power so you’ll need to poke around with a DMM to find a source. I believe the board also has brightness control but I didn’t use it for my project.

I wanted a 19″ 4:3 VGA LCD with LED backlighting and didn’t want to pay 200USD for one of the arcade models.

And you make up that used electricity with less wasted resources and energy required for a new monitor…

…which is produced anyway I guess… I just love to open stuff and try to repair it, that’s about it :D

By this logic it’s impossible to reduce power consumption, but of course if demand does not increase as fast over time, the need to generate power will not be as great.

Just a little note: the DVI port also supported analog as well as digital. DVI-I did both, DVI-A only did analog (obsolete, I would imagine) and DVI-D is digital only. DVI covers all types. HDMI and DP adapters generally work only with DVI-D or DVI-I and wouldn’t work on a video card that is old enough to be DVI-A only.

Don’t think I ever saw a DVI-A port, not sure what the point would have been when there is/was VGA for analog. DVI-I and DVI-D of course were common. The whole point of DVI was to go digital, but they designed DVI-I so that a legacy monitor could be plugged in with an adapter without the video card makers having to add a VGA port alongside the DVI port. Though some did for dual head cards, your primary monitor would be DVI and the secondary VGA.

The only place I’ve seen a DVI-A was an input to an old 14″ LCD. The VGA cord went inside the monitor case and the connection to the board was made with a DVI-A end.

Essentially one can buy a compact HDMI female to DVI-D male adapter for 1-2 bucks. Also don’t forget: for resolutions above 1080p, like 2560 x 1440 WQHD at 60 Hz, you need dual link DVI cables too.
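The dual link requirement comes down to pixel clock: a single DVI link is limited to 165 MHz. Here is a minimal sketch of the check, assuming typical total frame sizes including blanking (the function names and the timing figures chosen are illustrative, and your monitor's actual timings may differ):

```python
# Single-link DVI tops out at a 165 MHz pixel clock.
SINGLE_LINK_MAX_HZ = 165_000_000


def pixel_clock_hz(h_total, v_total, refresh_hz):
    """Pixel clock = total pixels per frame (including blanking) x refresh rate."""
    return h_total * v_total * refresh_hz


def needs_dual_link(h_total, v_total, refresh_hz):
    """True when the mode exceeds what one DVI link can carry."""
    return pixel_clock_hz(h_total, v_total, refresh_hz) > SINGLE_LINK_MAX_HZ


# Assumed totals: 2200 x 1125 for 1920x1080 at 60 Hz (CEA-861 style timing),
# 2720 x 1481 for 2560x1440 at 60 Hz (CVT reduced blanking).
modes = {"1080p60": (2200, 1125, 60), "1440p60": (2720, 1481, 60)}
for name, timing in modes.items():
    mhz = pixel_clock_hz(*timing) / 1e6
    print(name, round(mhz, 1), "MHz, dual link needed:", needs_dual_link(*timing))
```

Modes only slightly above 1080p, such as 1920x1200 at 60 Hz with reduced blanking, can still squeeze under the single link limit.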

Summary: replace all the electrolytic capacitors (they typically have a 20 year shelf/active life).

So I am more of a lurker here and feel like I should know this anyway, but I’m going to ask.

Is there a reason other than cost per “farad” that makes people use electrolytic caps over something more “robust”? Most of the projects I’ve played with would specify particular types of caps for RF, etc., but for most general jobs electrolytics were only chosen because they were big and cheap. I’m sure I’m missing some obvious reasons why you would select an electrolytic other than “big and cheap”, right?

An electrolytic is the way to get high capacitance in a reasonable size. It’s not that “an electrolytic” is needed but that higher capacitance is needed, and electrolytics are the only way.

A 10,000uF 16v electrolytic I got in 1974 was the size of a Coke can. I have some oil filled 2uF high voltage capacitors, thinner but the same height.

In the tube era, high voltage and low current, few high value capacitors were needed. The switch to solid state, low voltage at higher current, meant electrolytics became more needed. So they are more common. Worse, they are often used where higher frequencies reside, which strains them more than 60Hz in the tube days.

In fifty years, demand has made electrolytics smaller. That 10,000uF is now the size of a short thumb, with a better voltage rating.

Other types of capacitors have improved in size versus capacitance, but they’ve not replaced electrolytics.

It’s even worse than that, their rated lifetimes are typically much less.
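Datasheets usually quote life in hours at the maximum rated temperature, and a common rule of thumb is that life roughly doubles for every 10 °C the capacitor runs below that. A minimal sketch of that estimate, where the rule itself is an approximation and the example figures are hypothetical rather than taken from any particular part:

```python
def estimated_life_hours(rated_hours, rated_temp_c, actual_temp_c):
    """Rule-of-thumb estimate: life doubles per 10 degrees C below the rated temperature."""
    return rated_hours * 2 ** ((rated_temp_c - actual_temp_c) / 10)


# Hypothetical part: rated 2000 hours at 105 C, running at 65 C inside a PSU.
hours = estimated_life_hours(2000, 105, 65)
print(hours, "hours, about", round(hours / (24 * 365), 1), "years powered on")
```

A hot spot next to a heatsink shortens that figure quickly, which fits with PSU capacitors being the usual first failure.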

“Maybe that little dongle doesn’t make such a bad purchase, and of course you can also use it as an SDR if you want.”

No, you can’t. Your link goes to an article about a USB to VGA adapter. A DVI-I or DVI-A to VGA adapter is just a re-arrangement of the analog video signal connections to a different connector.

Do a search for “OSMO-FL2K”

Sorry, I see what you are saying one is VGA to USB capture device not linked to anywhere above and the other, linked above, is a USB to VGA device which is not helpful.

It’s better to not fix these because CRTs use a huge amount of power compared to today’s displays which are LED based. I’m normally not a fan of scrapping things that can be repaired but CRT technology is extremely wasteful.

I think you’ve missed something @Gravis. The OP was about fixing a LG Flatron W1934S which is a Liquid Crystal Display (LCD) likely back lit with a drinking-straw-sized Cold Cathode Fluorescent Lamp (CCFL). Not a (really old, large & heavy) Cathode-ray tube (CRT) monitor.

That said, newer LCDs might use slightly less power than old ones, as a new LCD is more likely to use LEDs instead of a power inverter and CCFL to backlight the panel.

Then the title should be changed from

> Save That Old VGA Monitor From The Trash

to

> Save That Old LCD/TFT/CCFL Monitor From The Trash

“VGA monitor” hints strongly at CRT for many people I assume (me included) because no one in their right mind calls a TFT/LCD “VGA monitor” even if its only input is VGA.

It’d still primarily be a TFT/LCD monitor; the “only VGA” part could be used as a qualifier.

well, just no.

no explanation/reason?

Is it an age thing?

Only people old enough to have used CRTs extensively make the “VGA monitor” -> CRT association?

“VGA monitor” in itself is a stupid term imho.

We (as in hackers, nerds, techies etc.) know plasma/CRT/LCD/LED/TFT/whatever screens/displays/monitors/TVs and they can have inputs from C-/S-Video, component/RGB, Scart, VGA over SDI to DVI, HDMI, DP, USB-C and what-not.

How does “VGA monitor” describe what type of monitor it is?

Personally, I used to use the term “VGA monitor” for both 640×480 panels/old fixed-frequency monitors and RGBHV monitors/displays with a DE15 cord.

But maybe it’s the term “monitor” that causes memories of CRT’s?

(I’ve been there, despite being still young. In my childhood I had a 14″ IBM PS/2 “VGA” monitor on a 286 PC with on-board VGA in the late 90s.)

Btw, a “video monitor” historically was a tuner-less CRT monitor that could handle composite (cvbs), separate chroma/luma (now “s-video”) or the original monochrome video (vbs) via rca/cinch or so-239 sockets.

By that logic mentioned above, no LCD screen ever can be a video monitor, though.

And likewise, an RGB capable monitor never can be a “video monitor” also.

PS: The term monitor was not only used for screens. Audio equipment had a “monitor” jack for headphones etc. And early computers had a “monitor” (monitor program), an early type of interactive firmware, in ROM. Like my Sharp MZ-80K.

Agree, VGA monitor for older folks meant the resolution. This seems to mean “VGA port only monitor”.

Quote [limroh]: ‘“VGA monitor” hints strongly at CRT for many people’

Glass tube (CRT) monitors that were actual VGA (640×480) were commonplace in the late 80s. So if someone were 10 years old then, they would be over 40 years old now. So you are correct to say “many” people but that is not “most” people.

For most people the term VGA is used to describe every extension to the VGA standard (SVGA-xxxXGA) and not the specific VGA standard (640×480). And therefore relates strongly to the HD-15 (DE-15) connection type that remained constant for all the extensions to the original VGA standard.

And you’d be silly to toss a good high resolution crt monitor now. People *love* them for emulator and arcade builds.

I recently turned down a widescreen Sony “flat” CRT, which were amazing and would be epic for an arcade build. The problem is I can’t possibly lift it, so I’d have to plan out my build around dragging friends over to help mount it in the cabinet and then need a dolly to move the thing around. I’ll pass :(

Depends, I think. Many monitors from the 80s could display 640×480 because that was the maximum resolution of NTSC devices, both TVs and video monitors.

However, they ran at 15 kHz and 30 frames/second interlaced (60 half-frames/second).

A VGA monitor may run at 31 kHz and 60 full frames/second.

Amigas used to use flicker fixers to get VGA monitors working.
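Both line rates fall out of total lines per frame multiplied by frames per second. A quick sketch, assuming 525 total lines (visible plus blanking) for both NTSC and standard 640×480 VGA timing:

```python
def line_rate_khz(total_lines_per_frame, frames_per_second):
    """Horizontal scan rate = lines per frame x frames per second."""
    return total_lines_per_frame * frames_per_second / 1000


# NTSC: 525 lines per interlaced frame at 30000/1001 (about 29.97) frames/s.
ntsc = line_rate_khz(525, 30000 / 1001)
# VGA 640x480: 525 total lines, progressive, at 60000/1001 (about 59.94) frames/s.
vga = line_rate_khz(525, 60000 / 1001)

print(round(ntsc, 3), "kHz vs", round(vga, 3), "kHz")
```

The VGA rate is double the NTSC one, which is why a 15 kHz source needs its line rate doubled before a VGA monitor will sync to it.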

“For most people the term VGA is used to describe every extension to the VGA standard (SVGA-xxxXGA) and not the specific VGA standard (640×480). ”

Yes and no. PC gamers from the early 90s will say that Super VGA is anything that has 256 colours at a resolution higher than 320×2**. So 640×400 (lowest VESA VBE mode) and higher is SVGA.

Yes, 640×480 in 256c is not “VGA” to them, but SVGA!

That’s the same folks that think that 320×200 @256c is usually VGA.

Others would call that CGA, MCGA etc, because that’s the default resolution of said standards (CGA/EGA can do 640 by something too) .

By contrast, PC professionals used “Super VGA” term especially for 800×600 resolution, which was the original SVGA mode in 16c, which many ISA VGA cards from 1987 onwards could handle. Vesa VBE even had two mode numbers reserved for it. Higher resolutions were called XGA, etc by these.

So no, I think SVGA isn’t “anything beyond 640×480” for everyone. That’s a stereotype and oversimplified.

I used to own a domestic electronics repair center in the era of CRT TVs. I would often see three people carry in one large screen CRT.

I used to call it “inbuilt theft prevention”.

I have to agree here. When someone says VGA I’m inclined to assume they’re talking about a CRT. (or maybe an old PLC HMI box or something esoteric).

Yeah, I’ll give you that VGA is both the name of the standard and the name of the port. It doesn’t explicitly mean the kind of device attached to it, but I feel we’re arguing denotation vs connotation.

That may be so. But if you can squeeze another 10 or 15 years out of older hardware, I’m going to guess that it would still end up using far, far less energy overall than producing new, more efficient hardware from raw minerals.

e.g. a 24″ CRT would be about 120 watts, no one would use a 24″ LED/OLED/LCD/Plasma these days, so you are probably going to be comparing it to a 50″ device which would be about 72/89/150/300 watts respectively.

I’ve never bought a new monitor, and that goes back forty years. A surplus Ball Brothers open frame monitor that I used with my OSI Superboard.

I was given a 19″ monitor about 2003. It barely fit on the desk. When I found my first LCD monitor over ten years ago, just lying on the sidewalk when the students moved out, I gave up the 19″. I was glad to not feel crowded by the monitor. The monitor I use now is another one found on the sidewalk, a 19″, and it’s just so much more convenient than a 19″ CRT.

heh, bought my last and only display ~15 years ago (19″ TFT).

but that doesn’t mean it’s my current monitor – in my time at a university I rescued and repaired ~50 to 100 LCDs/TFTs from the e-garbage container. Sizes range from 17 to 24″.

From the five 24″ I still have, 2-3 are in active use…

There was a period between about 1995 and 2005 where UNIX workstation CRTs were almost always tossed out when the system was upgraded thus flooding the surplus market. The first wave of these were fixed-frequency that were specific to the workstation, such as older Sun or IBM monitors with 13W3 or component BNC. Those could be made to work with Linux if you could figure out the magic Xfree modeline. Later workstation monitors were multisync, and either had standard VGA or 13W3, with the latter working fine as standard PC monitors using a $30 adapter.

I can’t say that I haven’t bought a new monitor, but there was a while where I was picking up high quality 19″-21″ monitors made by Sony and Hitachi from auctions and Goodwill for just a few bucks each, while mediocre high res PC monitors like NEC Multisync were retailing for $500+.

Don’t you mean compared to yesterday’s LED TVs? A new OLED is going to use quite a bit more power than a standard LED lit LCD panel and honestly it’s not that much different from a CRT (unless we’re talking the same display size but that’s basically only in a laptop).

The main and best thing about old VGA monitors is the loud noise you can make

when you heave them into a dumpster!

I just chuck them in the lake

And all the fish you get out of the lake will have high lead levels because you threw that CRT away irresponsibly. A CRT contains a lot of lead and other hazards; take it to be properly scrapped or recycled.

The sound of plastic? You might be put off by the VGA, this is not about CRTs (that could make a nice bang when the vacuum tube is compromised). Nevertheless I strongly advise you not to break those and to look for their current retail prices on second hand websites…keep hoarding!

As far as I am concerned, if it doesn’t have a CRT tube, it ain’t a VGA monitor.

second!

Even VGA-only TFTs or LCDs should not be called “VGA monitor” imho.

While there were CRT monitors, there were NTSC, CGA, EGA, and other standards, including the Atari and Macs. So it was important to specify.

LCD monitors came after all that. Nothing to be specific about, even my TV set does VGA.

These old monitors are often thrown away because they are obsolete, not because they are not repairable. Any monitor with a max resolution below 1080p, is garbage, and even 1080p is rapidly slipping into the garbage category. Also, if a monitor doesn’t have at least 1 HDMI 2.0 and at least 1 DP connector, it’s garbage. Leave the trash monitors in the trash. They’re there for a reason.

My 1080p, VGA-input LCD with CCFL backlight, on my lab spectrometer, has begged to differ for at least 15 years.

That makes a great editing monitor for photos (though it’s fairly low res) but editing or color grading 4K footage is going to be iffy. Or playing modern PC games at 60 Hz when you could play at 144 Hz with G-Sync or other frame sync solutions. You can’t do any of that over a VGA cable (or watch Netflix or a Blu-ray, I believe).

They’re not garbage, especially a color accurate “studio monitor” class panel, but they are definitely not “great”. A modern IPS or S-IPS with a full LED backlight, FreeSync, 1440p, 98% color palette (or whatever they call it now) and 144 Hz refresh can be bought for $300-600 and easily be calibrated and stay calibrated.

They’re there for a reason: people are slaves to consumerism and, instead of trying to extend the life of the objects they use, they prefer, like bored toddlers, to throw them away. I’m more happy to people that think that CDs and DVD are garbage, like people that 40 years ago were thinking that LP and cassettes were trash. With the change to DVB-T2 I expect that perfectly working LED LCD TV sets will be thrown out in the trash just because, and people will buy inferior quality smart TVs with more cumbersome interfaces and spyware preinstalled.

“I’m more happy to people that think that CDs and DVD are garbage, like people that 40 years ago were thinking that LP and cassettes were trash”

I’m not. I love audio CDs, Laserdiscs.. And cassettes, even VHS to some extent.

The first two are based on futuristic, elegant, noble, laser technology. By comparison, records are stone age technology. A needle that scratches on the surface of a circle of vinyl/shellac like a dry piece of chalk on a chalkboard.. Yikes. That being said.. If someone uses a laser to scan the record, then that’s acceptable too. ^^

“DVI compatibility mode of HDMI is little more than a digitised version of the R, G, and B channels”

So does that mean it should be possible to make pretty decent and clean HDMI to VGA (or RGB SCART) converter? Asking for a friend ;)

(Those cheap HDMI to VGA converters you find on Amazon are rubbish)

There is nothing wrong with the cheapo HDMI converter. The only thing they could mess up is the power supply.

As for designing it from scratch, you can pretty much use an FPGA with SERDES support for the conversion and a proper video DAC to VGA. Getting HDCP (DRM) to work is another problem.

Why in the hell doesn’t someone make a capacitor that lasts forever?

They did. Now they’re dead. They succeeded.

Ask yourself the same question about a battery and you have your answer.

Well.. There’s a story of an engineer from former east Germany which once developed a very long lived light bulb.. His plane crashed.

As long as we have capitalism in mind, aggression and greed are a driving force. IMHO. Maybe after WW3 or if resources have become short, we will rethink that mindset.

They exist, they’re just a lot bigger than we want.

Got my old Acer monitor up and running after replacing some bad caps.

Noting the hate on CRT’s — there is one thing CRT’s could do that LCD’s can’t, and that’s multiscan. LCD’s have a native pixel resolution and while they can interpolate other resolutions they look like crap when they try. A CRT can do every VGA mode from 640×480 through 1280×1024 and look good at all of them. They also have no lag and some other timing advantages over LCD. So not for everybody, but for some purposes you actually do need CRT’s. (And yes I realize OP is not a CRT, just looking over the comments and going hrrrm.)

There’s more than “one thing” CRTs can do better than LCDs. There’s also color accuracy and accurate black levels. For picture quality CRTs are still superior in these regards and likely always will be. Also you don’t have as many issues with off axis viewing angles. Yes, CRTs were big and bulky and because of that manufacturers were eager to ditch them, but CRTs were always king when it came to image quality. We definitely lost something when the CRT died.

Mentioning composite video I suppose will show my age. I still love these.

The correct term is CVBS! CVBS! 😜

Anyway, I always silently cry (in my mind) when people describe pure grayscale video signals as “Composite” or NTSC/PAL video.. 🙄

The correct term is VBS – maybe AV or just ‘Video’, too.

While tossing a CRT in a lake is bad practice, the lead is almost entirely a part of the glass and will take centuries to dissolve.

Solder from the CRT era was 40% lead and would easily oxidize or leach in water.

It’ll find good company with all the old lead fishing weights.

Please don’t just replace one capacitor; replace them all. That way you will eliminate the ripple from the old capacitors and give a second life to the rest of the electronics… it will last longer… 😉

I watched many YouTube videos after my monitor burned out. I tried everything I saw in them, but it was thanks to your article that I managed to bring my monitor back to life. Some blown, swollen capacitors were visible, but I also had to replace some others that were near those bad capacitors. Thanks to you I got my monitor working again.