It isn’t news that [s0lly] likes to do ray tracing using Microsoft Excel. However, he recently updated his setup to use functions in a C XLL (really just a DLL that Excel loads as an add-in) to accelerate the Excel rendering. Even if ray tracing isn’t your thing, the technique of creating custom high-performance Excel functions might come in handy somewhere else.

We’ve seen [s0lly’s] efforts before, and you can certainly see that the new technique speeds things up and produces a better result, which isn’t especially surprising. In addition to being faster, the new routines produce more detail.

The Microsoft documentation on doing this is pretty clear if you want to give it a go. In your C code you can also use tools such as multiple threads to get better performance, which [s0lly] shows in his example.
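To make the threading idea concrete, here is a minimal sketch, not [s0lly]’s code and not the XLL registration glue, of splitting a render across worker threads in plain C. It assumes POSIX threads for portability (a Windows build for Excel would more likely use the Win32 thread API), and `shade()` is a hypothetical stand-in for the real per-pixel ray tracing work.

```c
#include <pthread.h>
#include <stddef.h>

#define WIDTH 64
#define HEIGHT 48
#define NUM_THREADS 4

/* Hypothetical stand-in for the real per-pixel ray tracing work. */
static double shade(int x, int y)
{
    return (double)(x + y) / (WIDTH + HEIGHT - 2);
}

typedef struct {
    double *image;  /* shared output buffer of WIDTH * HEIGHT values */
    int first_row;  /* worker handles rows first_row, first_row + NUM_THREADS, ... */
} job_t;

static void *render_rows(void *arg)
{
    job_t *job = arg;
    /* Interleaving rows spreads expensive regions of the image across workers. */
    for (int y = job->first_row; y < HEIGHT; y += NUM_THREADS)
        for (int x = 0; x < WIDTH; x++)
            job->image[y * WIDTH + x] = shade(x, y);
    return NULL;
}

/* Fills image (WIDTH * HEIGHT doubles). Threads write disjoint rows, so no
 * locking is needed. Returns 0 on success, -1 if a thread could not start. */
int render(double *image)
{
    pthread_t threads[NUM_THREADS];
    job_t jobs[NUM_THREADS];
    int started = 0;

    for (; started < NUM_THREADS; started++) {
        jobs[started].image = image;
        jobs[started].first_row = started;
        if (pthread_create(&threads[started], NULL, render_rows, &jobs[started]) != 0)
            break;
    }
    /* Always join whatever was started before returning. */
    for (int t = 0; t < started; t++)
        pthread_join(threads[t], NULL);
    return started == NUM_THREADS ? 0 : -1;
}
```

Call `render()` with a buffer of `WIDTH * HEIGHT` doubles and compile with `-pthread`. Handing out interleaved rows instead of one contiguous band per thread is a cheap way to keep the threads evenly loaded when some parts of the scene cost more to trace.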

Of course, you could argue that you don’t need Excel here, but what fun would that be? Besides, you’d then have to handle all the data input and output yourself, which would be a pain in its own right.

If you need a simple explanation of ray tracing, we just covered that. We aren’t above abusing spreadsheets, ourselves.

Kudos for the work that’s gone into this.

Last time I even looked at ray tracing, POV-Ray was the program everyone was using. I modelled a top-down view of a beam of white light passing through a prism. There was no dispersion, no splitting of the light into a spectrum, so I dubbed it “Ray Tracers Ain’t Real”.

People these days look to Blender, though I’m not sure whether Blender is a ray tracer in the strictest sense. Maybe I should load it up and try that prism scene again.

It didn’t split the colours? Let’s troubleshoot. Possibilities: A) POV-Ray is junk. B) There may be a parameter you need to set.

C) A library you need to include

https://www.lilysoft.org/CGI/SR/Spectral%20Render.htm

Blender won’t do it either. If you want the prism effect to work, look at the more advanced renderers like Maxwell or Octane.

Footnote: I once wrote a raytracer using a programming language not remotely designed for raytracing. It too was lots of fun!

LuxCoreRender is supposed to get pretty close: https://luxcorerender.org/

The POV-Ray documentation is pretty clear that a default light is a single color. Sure, single-color ‘white’ light doesn’t work in the real world, but the code doesn’t care.

I used LightSys4 way back when my computer spent most of its day just thrashing RAM. VGA wasn’t the best way to view a CIE color image; even after setting the gamma that POV-Ray and the early GeForce cards used, I was never sure what gamma the monitor was using.

It is truly a surprise that a free bit of software would not duplicate the chromatic dispersion that was then available in $50,000-and-up optical analysis software. Wasn’t calling it a ray tracer rather than a photonic physics simulation a clue? You calling it “white light” is a giveaway, as there is no “white light.”

As mentioned, there were options to simulate that behavior, but duplicating a continuous spectrum would take infinite time, which, for some reason, no one does. /s

LuxCoreRender does that: https://luxcorerender.org/gallery/

“It isn’t news that [s0lly] likes to do ray tracing using Microsoft Excel.”

Gee, I wonder if [s0lly] also likes to hammer 6-inch nails in with a screwdriver.

Some time ago I wrote my own distributed ray tracer as a homage to the original “1984” paper that described the technique (“distributed” not in the sense of several processors, but “distributed in space and time”, which lets the same extra rays already used for antialiasing also produce soft shadows, motion blur and depth of field at no extra cost).

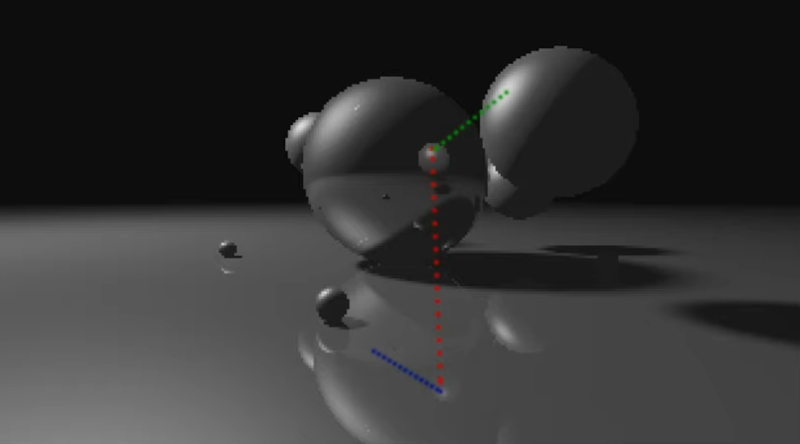

I first wrote it in Python, but it was extremely slow (about 18 hours to render a 1920×1080 picture using all four cores of my processor), so I ported it to C++, and then it needed less than 7 minutes. It renders the same “1984” picture of the four billiard balls, with motion blur, soft shadows and reflections, but in widescreen format.

If anybody wants to check it out, it’s in my GitLab repo: https://gitlab.com/rastersoft/1984

It’s a nice project; thank you for sharing it.

Here is a link to a library I wrote for creating xll add-ins: https://github.com/xlladdins/xll.