This year, we’ve already seen sizeable leaks of NVIDIA source code and a release of open-source drivers for NVIDIA Tegra. It seems NVIDIA decided to amp it up: it has just released open-source GPU kernel modules for Linux. The GitHub repository, named open-gpu-kernel-modules, has people rejoicing, and we are already testing the code, making memes and speculating about the future. NVIDIA currently describes this driver as experimental and only “production-ready” for datacenter cards, but you can already try it out!

The Driver’s Present State

Of course, there’s nuance. This is new code, unrelated to the well-known proprietary driver. It only works on cards from the RTX 2000 and Quadro RTX series onward (aka Turing and newer). The good news is that performance is comparable to the closed-source driver, even at this point! A peculiarity of this project: a good portion of the features that AMD and Intel drivers implement in the Linux kernel are instead provided by a binary blob running inside the GPU. This blob runs on the GSP (GPU System Processor), a RISC-V core that’s only available on Turing and newer GPUs, hence the series limitation. Now, every GPU loads a piece of firmware, but this one’s hefty!

That aside, this driver already integrates more coherently with the Linux kernel, and the benefits will only grow from here. Not everything’s open yet: NVIDIA’s userspace libraries and its OpenGL, Vulkan, OpenCL and CUDA drivers remain closed, for now. The same goes for the old proprietary NVIDIA driver, which, I’d guess, will be left to rot. That’s fitting, since “leaving to rot” is what that driver has done to generations of old but perfectly usable cards.

The Future Potential

Upstreaming this driver will certainly be a gigantic effort, but that is definitely the goal, and the benefits will be sizeable. Even as-is, this driver has way more potential. Not unlike a British policeman, the Linux kernel checks the license of every kernel module it loads, and limits the APIs it can use if it isn’t GPL-licensed. The previous NVIDIA driver wasn’t, as its open parts were essentially a thin layer between the kernel and the binary driver, and thus couldn’t be GPL-licensed. Because this driver is MIT/GPL dual-licensed, NVIDIA now has a larger set of kernel interfaces at its disposal, and could integrate the driver better into the Linux ecosystem instead of relying on a set of proprietary tools.
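To make that license check a bit more concrete, here’s a toy model of it in Python. It’s a sketch of the logic under a few assumptions, not kernel code: the set of accepted license strings mirrors `license_is_gpl_compatible()` in the kernel’s `include/linux/license.h`, and symbols exported with `EXPORT_SYMBOL_GPL` simply fail to resolve for a module without a GPL-compatible license. The symbol names `plain_helper` and `gpl_only_helper` are hypothetical, and we’re assuming the open modules declare `Dual MIT/GPL` while the proprietary one declares `NVIDIA`.

```python
"""Toy userspace model of how the Linux module loader treats licenses.

Not kernel code: a simplified sketch for illustration only.
"""
from dataclasses import dataclass, field

# License strings accepted as GPL-compatible; mirrors the list in
# license_is_gpl_compatible() from include/linux/license.h.
GPL_COMPATIBLE = {
    "GPL",
    "GPL v2",
    "GPL and additional rights",
    "Dual BSD/GPL",
    "Dual MIT/GPL",
    "Dual MPL/GPL",
}

# Bit 0 of the kernel taint mask, shown as 'P' (proprietary module loaded).
TAINT_PROPRIETARY_MODULE = 1 << 0


def license_is_gpl_compatible(module_license: str) -> bool:
    return module_license in GPL_COMPATIBLE


@dataclass
class LoadResult:
    ok: bool   # did every needed symbol resolve?
    taint: int  # taint bits added because of the module's license
    unresolved: list[str] = field(default_factory=list)


def load_module(module_license: str, needed: list[str],
                exports: dict[str, bool]) -> LoadResult:
    """Model loading a module that imports the symbols in `needed`.

    `exports` is a hypothetical symbol table mapping a name to True if it
    was exported with EXPORT_SYMBOL_GPL, or False for plain EXPORT_SYMBOL.
    """
    gpl_ok = license_is_gpl_compatible(module_license)
    taint = 0 if gpl_ok else TAINT_PROPRIETARY_MODULE
    # A symbol fails to resolve if nobody exports it, or if it is GPL-only
    # and the module's license is not GPL-compatible.
    unresolved = [
        sym for sym in needed
        if sym not in exports or (exports[sym] and not gpl_ok)
    ]
    return LoadResult(ok=not unresolved, taint=taint, unresolved=unresolved)


if __name__ == "__main__":
    # Hypothetical exports: one plain, one GPL-only.
    exports = {"plain_helper": False, "gpl_only_helper": True}
    wanted = ["plain_helper", "gpl_only_helper"]
    print(load_module("NVIDIA", wanted, exports))
    # LoadResult(ok=False, taint=1, unresolved=['gpl_only_helper'])
    print(load_module("Dual MIT/GPL", wanted, exports))
    # LoadResult(ok=True, taint=0, unresolved=[])
```

The model leaves out plenty: for instance, a real out-of-tree build of the open modules would still set the separate “O” (out-of-tree module) taint bit, even though its license avoids the “P” one.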

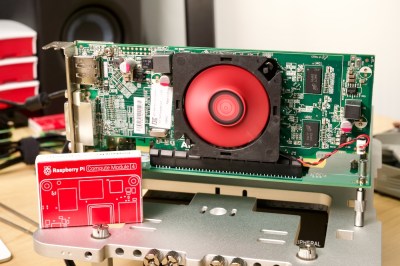

Debugging, security, and overall integration should all improve. On top of that, a slew of new possibilities opens up. For a start, it opens the door to porting the driver to other OSes like FreeBSD and OpenBSD, and could even help libre computing. NVIDIA GPU support on ARM should become easier, and we could see more cool projects that pair a GPU with an ARM SBC, for everything from exciting videogames to powerful machine learning. The Red Hat release says there’s more to come in terms of properly integrating NVIDIA products into the Linux ecosystem, leaving no stone unturned.

You’ll see nearly everyone hail this, and for good reasons. Traditionally, we celebrate such radical moves from big companies, even imperfect ones, and rightfully so, given the benefits I just listed and the future potential. As we see more such moves from big players, we will have plenty to rejoice about, and a myriad of problems will be left in the past. However, when it comes to the kind of openness we actually value, the situation gets kind of weird and hard to grapple with.

Wait, What Does Openness Mean?

Openness helps us add features we need, fix problems we encounter, learn new things from others’ work and explore the limits, as we interact with technology that defines more and more of our lives. If all the exciting sci-fi we read as kids is to be believed, we are indeed meant to work in tandem with technology. This driver is, in many ways, not the kind of openness that helps our hardware help us, but it certainly checks many of the boxes for what we perceive as “open”. How did we get here?

It’s well-known that opening every single part of the code is not what large companies do – you gotta hide the DRM bits and the patent violations somewhere. Here, a lot of the code that used to live in the proprietary driver now runs on a different CPU instead, and is as opaque as before. Few drivers lean on binary blob code as heavily as this one, and yet, only semi-ironically, it’s not that far from being technically eligible for RYF certification. It’s just that the objectionable binary blobs are now “firmware” instead of “software”.

The RYF (Respects Your Freedom) certification from the Free Software Foundation, while well-intentioned, has lately drawn heat for being counterproductive to its own goals and for making hardware needlessly complex, and even the Libreboot project leader says that its principles leave a lot to be desired. We have been implicitly treating RYF certification as the openness guideline to strive towards, but the Novena laptop chose not to adhere to it and is certainly better off for it. We have a lot to learn from RYF, and it’s quite clear that we need more help.

So, from here: what do we count as “open”? And who can help us keep track of what “open” means, specifically the kind of openness that moves us towards a more utopian, yet realistic world where our relationship with technology is healthy and loving? Guidelines and principles help us check whether we are staying on the right path, but the world has changed enough that old ideas don’t always apply, as with the cloud-hosted software loophole that is proving tricky to close.

But still, a lot more code just got opened, and that’s a win on some fronts. At the same time, we won’t get where we want to be if other companies follow this example, and as hackers, we won’t achieve many of the groundbreaking things we can reach with open-source tools in our hands. And if we don’t exercise caution, we might mistake this for the kind of openness that we all come here to learn from. So it’s a mixed bag.

Still Haunting Our Past A Bit

As mentioned, this driver is for the RTX 2000 series and beyond. Older cards are still limited to either the proprietary driver or Nouveau, which has a history of being hamstrung by NVIDIA. Case in point: in recent years, NVIDIA has reimplemented vital features like clock control in a way that’s only accessible through a signed firmware shim with a closed API that’s tricky to reverse engineer, and has been uncooperative ever since, which has hurt the Nouveau project with no remedy in sight. Unlike with AMD, which helped overhaul the code for cards released before its open driver dropped, this problem is here to stay.

Nouveau will live on, however. In part, it will still be useful for older cards that aren’t going anywhere, and in part, it seems it could help replace the aforementioned userspace libraries that remain closed-source. The official NVIDIA release page says it’s not impossible that the Nouveau efforts and the NVIDIA open driver efforts could be merged into one: a victory for all, even if a tad bittersweet.

Due to shortages, you might not be able to get a GPU to run this driver on anyway. That said, we will recover from the shortages and the mining-induced craze, prices will drop, and this driver will make our systems work better: maybe not your MX150-equipped laptop, but certainly a whole lot of powerful systems we have yet to build. NVIDIA is not yet where AMD and Intel stand, but it’s getting there.

[Tux penguin image © Larry Ewing, coincidentally remixed using GIMP.]

Anybody still use Tegra? Maybe they should’ve done it 5 years ago when there was a chance it might mean something.

The Nintendo Switch uses a Tegra IIRC

The Switch uses a Tegra X1, the same processor Nvidia used in its Shield devices. It’s a very powerful and power efficient ARM processor with custom Nvidia GPU cores.

There’s loads of decent hardware with Tegras that could easily avoid landfill for another few years if Nvidia had provided the sources for their drivers, which is such a shame. There is the linux-grate project that’s helping, but it’s still a way off.

Jetson Nano

I still see this in many DIY and course-level projects.

“It’s well-known that opening every single part of the code is not what large companies do – you gotta hide the DRM bits and the patent violations somewhere.”

Whew! Good thing for leaks. ;-)

I’ve never cared enough to track video card model numbers and performance, and I suspect I’m not alone in that. That said, can anyone expand on what video cards this new ‘more open’ driver currently supports? I’d appreciate it.

The current generation is the RTX 3000 series, and this driver supports the RTX 2000 series and newer, which basically means it covers RTX GPUs.

Previous families of Nvidia GPUs were called GTX, up until the GTX 1000 series. The RTX generations go a bit beyond incremental performance increases, with a broader architectural shift towards generalized computation, much as the GTX family had moved away from fixed-function pipelines. The R in RTX highlights the hardware raytracing features in the newer GPUs, since that’s probably the most consumer-understandable feature of the newer cards.

But it’s all a pretty moot point because, frankly, if you’re not following these things, then you probably don’t have much need for a $1000+ GPU. I work with CGI and physics simulation, so I’m eyeballing an RTX 3070 myself, but unless you’re doing something like that or playing games, transcoding video, or something else that requires a beefy GPU, integrated graphics on your CPU will do everything you need. Some people will insist that everyone needs maximum performance in every category, but it really isn’t true. Most people don’t need discrete GPUs, or at least not very powerful ones, just as most people don’t need 64-core CPUs or 32+ GB of RAM or multiple PCIe SSDs.

A lot of people, especially around here, can do everything they need on a 2010 Thinkpad running a recent Linux distro.

“A lot of people, especially around here, can do everything they need on a 2010 Thinkpad running a recent Linux distro.”

Amen to that. But I’d greatly appreciate it if they would get the ball moving. Intel is hot on their heels in the GPU space, and it’s only a matter of time before laptops with discrete GPUs from Nvidia go the way of the dodo. Laptop engineers will be able to just use the current-gen Intel or AMD design and get an integrated GPU that’s capable of fair AAA gaming (and so good SolidWorks/Fusion performance) without the power draw of a discrete card.

I like the comparison of Nvidia with a bird that couldn’t fly.

The irony is that there are plenty of laptops out there with hybrid graphics, but all AMD: one low-spec integrated GPU and one higher-power “discrete” one. As somebody who works in VR/XR, what annoys me most is that the topology of these hybrid systems is simultaneously really important (in general, you can’t use a VR headset plugged into HDMI/DP if your discrete graphics card isn’t connected to that connector; same thing for USB-C DP alt mode; no idea what the Quest does in Link mode) and totally opaque: there’s no real way to find out before purchase, and it’s sometimes confusingly routed through a mux that’s configurable in the BIOS…

Would this release be related in any way to the Lapsus$ hack? 🧐

No, the Red Hat and GNOME people involved in the Nvidia negotiations have explained that this had been in talks for some months before the hack even occurred.

Now, given the risks of not having a FLOSS plan as a major corporation, it would be interesting to see if this is an effort to mitigate possible losses from such a leak, but it’s pretty late for that.

If this really all hinges on the availability of an extra core on the GPU to take over responsibilities, I expect it has been in the queue for a really long time. Silicon takes a long time.

It has its pros and cons. Yes, we can make it better and see how it works, but it also opens the door to people who might want to exploit it for nefarious gains.

Not really, that door has been open for a very long time now.

At most it changes from the door standing wide open, to the door standing wide open with a post-it note saying “Welcome!”

One could say that about any variety of open-source software, and closed-source software has plenty of vulnerabilities that are exploited in high-profile ways every week.

Regardless, a graphics driver is, generally speaking, a far less tempting attack surface than any of the countless other open-source utilities often running on a Linux box.

WebGL.

So now mister Torvalds can finally put his middle finger down.

Not really. Imagine all those old cards before RTX…

And the whole userspace driver…

I applaud Nvidia for their move. Hopefully the API docs are comprehensive and accurate. For-profit companies need to protect their hardware designs and low-level firmware/software to continue funding future development.

WRT Intel ME, I think that Intel is moving away from ‘secret ME code’ for future generations.

Disclosure: Former TI employee and ME accessible firmware developer.

At this point, the main reason I generally avoid Nvidia is that, some time back, their proprietary Linux driver had a backdoor “bug” that they were aware of and didn’t fix for over 2 years. When you let it sit for 2 years, it’s not a bug anymore, even if it started that way. It’s a deliberate choice to leave a backdoor for that long.

It’s nice to see Nvidia release a small portion of their driver as open source, and maybe having an open source driver between the firmware and the kernel will provide the necessary transparency to make sure they don’t “accidentally” add another backdoor “bug”, but moving most of the driver into an opaque blob so they can open source a thin shim isn’t going to restore my trust.

They might be happy to know that I did purchase one of their cards a few years ago while building a machine learning rig (that I’m using for mining currently, because why not make some money between AI projects?), though they might not be _so_ happy, given that the primary video card in that machine is still an AMD card and the Nvidia card is purely used for its compute powers. Better than nothing I suppose. I still kind of wish AMD had some better machine learning options (and that most of the available programming libraries didn’t exclusively support CUDA), but at least Nvidia has something going for it.

Maybe this is their first step in restoring my trust, though again, if they have _that much_ to hide, maybe they still aren’t trustworthy. If that’s the case, then actually becoming trustworthy would be the real first step.

Moving most driver parts to firmware and making calls from the kernel…

Really great openness.

Broadcom style

>> Not unlike a British policeman, the Linux kernel checks the license of every kernel module it loads, and limits the APIs it can use if it isn’t GPL-licensed.

Can someone clarify this for me? Loading non-GPL modules sets a “tainted” flag in the kernel, but I’ve written a handful of drivers and I’ve never seen anything that suggests you can’t call certain APIs. Anyone have a reference?

EXPORT_SYMBOL_GPL has been in the kernel since 2001. As always, LWN has a few excellent articles on the subject: https://lwn.net/Kernel/Index/#EXPORT_SYMBOL_GPL

“Power efficient” and “ARM processor” shouldn’t be used in the same sentence. ;P