The tech news channels were recently abuzz with stories about strange signals coming back from Voyager 1. While the usual suspects jumped to the usual conclusions — aliens!! — in the absence of a firm explanation for the anomaly, some of us looked at this event as an opportunity to marvel at the fact that the two Voyager spacecraft, now in excess of 40 years old, are still in constant contact with those of us back on Earth, and this despite having covered around 20 billion kilometers in one of the most hostile environments imaginable.

Like many NASA programs, Voyager has far exceeded its original design goals, and is still reporting back useful science data to this day. But how is that even possible? What 1970s-era radio technology made it onto the twin space probes that allowed them not only to fulfill their primary mission of exploring the outer planets, but also to go on to an extended mission in interstellar space and still remain in two-way contact? As it turns out, there’s nothing magical about Voyager’s radio, just solid engineering seasoned with a healthy dash of redundancy, and a fair bit of good luck over the years.

The Big Dish

For a program that in many ways defined the post-Apollo age of planetary exploration, Voyager was conceived surprisingly early. The complex mission profile had its origins in the “Planetary Grand Tour” concept of the mid-1960s, which was planned to take advantage of an alignment of the outer planets that would occur in the late 1970s. If launched at just the right time, a probe would be able to reach Jupiter, Saturn, Uranus, and Neptune using only gravitational assists after its initial powered boost, before being flung out on a course that would eventually take it out into interstellar space.

The idea of visiting all the outer planets was too enticing to pass up, and with the success of the Pioneer missions to Jupiter serving as dress rehearsals, the Voyager program was designed. Like all NASA programs, Voyager had certain primary mission goals, a minimum set of planetary science experiments that project managers were reasonably sure they could accomplish. The Voyager spacecraft were designed to meet these core mission goals, but planners also hoped that the vehicles would survive past their final planetary encounters and provide valuable data as they crossed the void. And so the hardware, both in the spacecraft and on the ground, reflects that hope.

The most prominent physical feature of both the ground stations of the Deep Space Network (DSN), which we’ve covered in-depth already, and the Voyager spacecraft themselves is the parabolic dish antenna. The scale differs, with the DSN sporting dishes up to 70 meters across, but the Voyager twins were each launched with the largest dish that could fit into the fairing of the Titan IIIE launch vehicle.

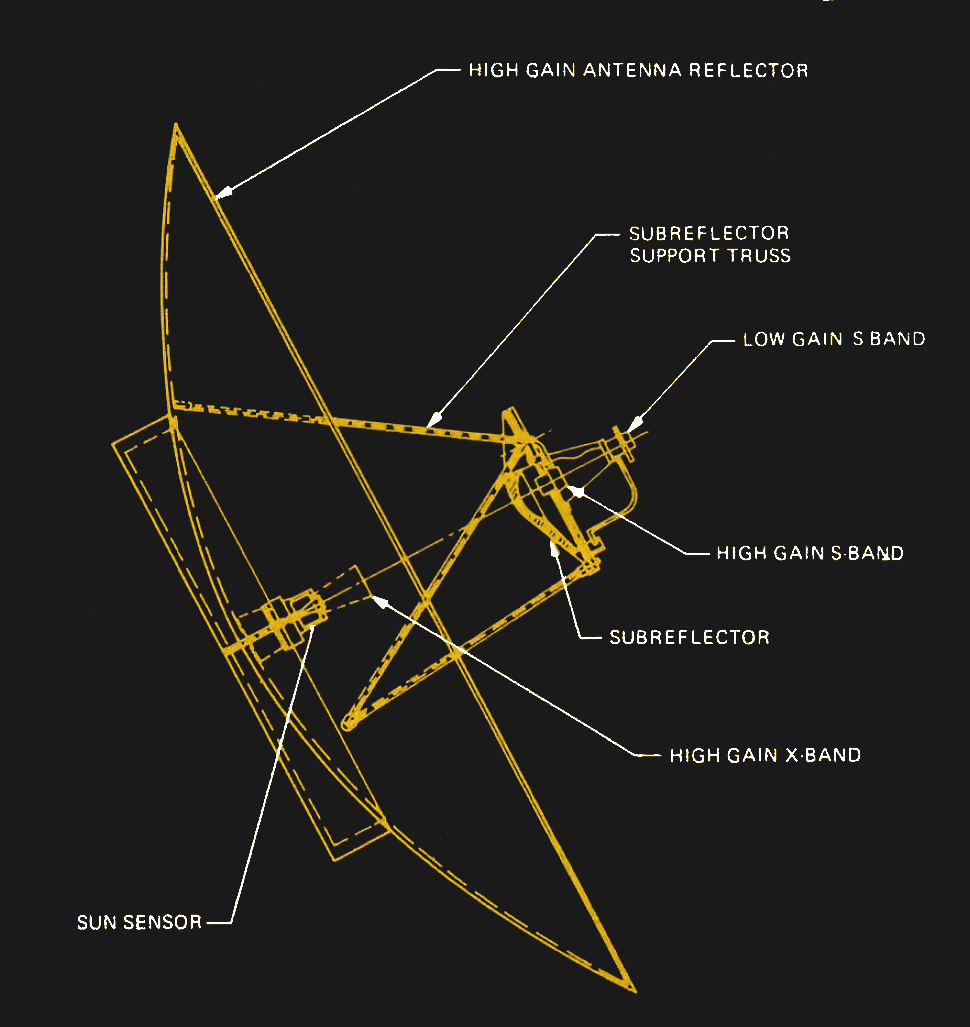

The primary reflector of the High Gain Antenna (HGA) on each Voyager spacecraft is a parabolic dish 3.7 meters in diameter. The dish is made from honeycomb aluminum that’s covered with a graphite-impregnated epoxy laminate skin. The surface of the reflector is finished to a high degree of smoothness, with a surface precision of 250 μm, which is needed for use in both the S-band (2.3 GHz), used for uplink and downlink, and X-band (8.4 GHz), which is downlink only.
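To put the 250 μm figure in perspective, here is a minimal sketch that compares it with the wavelengths involved and estimates the resulting gain loss using Ruze's equation. It assumes the quoted precision can be treated as an RMS surface error, which the source does not state; the function names are illustrative only.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength for a given frequency."""
    return C / freq_hz


def ruze_loss_db(rms_error_m: float, freq_hz: float) -> float:
    """Gain loss (positive dB) from Ruze's equation, G/G0 = exp(-(4*pi*eps/lambda)**2)."""
    phase_error = 4.0 * math.pi * rms_error_m / wavelength_m(freq_hz)
    return 10.0 * math.log10(math.e) * phase_error ** 2


# Assumption: the quoted 250 um precision is treated as an RMS surface error.
SURFACE_RMS_M = 250e-6

for band, freq_hz in (("S-band", 2.3e9), ("X-band", 8.4e9)):
    lam = wavelength_m(freq_hz)
    print(f"{band}: wavelength {lam * 100:.1f} cm, "
          f"surface error is 1/{lam / SURFACE_RMS_M:.0f} of a wavelength, "
          f"Ruze loss {ruze_loss_db(SURFACE_RMS_M, freq_hz):.3f} dB")
```

Under that assumption the surface error is well under a hundredth of a wavelength even at X-band, so the loss from surface roughness is a small fraction of a decibel.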

Like their Earth-bound counterparts in the DSN, the Voyager antennas are a Cassegrain reflector design, which uses a Frequency Selective Subreflector (FSS) at the focus of the primary reflector. The subreflector focuses and corrects incoming X-band waves back down toward the center of the primary dish, where the X-band feed horn is located. This arrangement provides about 48 dBi of gain and a beamwidth of 0.5° on the X-band. The S-band arrangement is a little different, with the feed horn located inside the subreflector. The frequency-selective nature of the subreflector material allows S-band signals to pass right through it and illuminate the primary reflector directly. This gives about 36 dBi of gain in the S-band, with a beamwidth of 2.3°. There’s also a low-gain S-band antenna with a more-or-less cardioid radiation pattern located on the Earth-facing side of the subreflector assembly, but that was only used for the first 80 days of the mission.
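The gain and beamwidth figures can be roughly cross-checked with the textbook formulas for a circular aperture. This is only a sketch: the aperture efficiencies (0.60 for X-band, 0.55 for S-band) and the beamwidth constant of 70 are assumed rule-of-thumb values, not numbers from the Voyager documentation.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def dish_gain_dbi(diameter_m: float, freq_hz: float, efficiency: float) -> float:
    """Gain of a circular aperture: G = efficiency * (pi * D / lambda)**2, in dBi."""
    lam = C / freq_hz
    return 10.0 * math.log10(efficiency * (math.pi * diameter_m / lam) ** 2)


def beamwidth_deg(diameter_m: float, freq_hz: float, k: float = 70.0) -> float:
    """Rule-of-thumb half-power beamwidth, roughly k * lambda / D degrees."""
    return k * (C / freq_hz) / diameter_m


HGA_DIAMETER_M = 3.7  # from the text

# Assumed efficiencies; chosen as plausible values, not taken from the source.
for band, freq_hz, eff in (("X-band", 8.4e9, 0.60), ("S-band", 2.3e9, 0.55)):
    print(f"{band}: ~{dish_gain_dbi(HGA_DIAMETER_M, freq_hz, eff):.1f} dBi, "
          f"beamwidth ~{beamwidth_deg(HGA_DIAMETER_M, freq_hz):.2f} deg")
```

With those assumptions the estimated gains land close to the quoted 48 dBi and 36 dBi. The rule-of-thumb beamwidths come out somewhat wider than the quoted values, which is expected since the real figure depends on how the feed illuminates the dish.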

Two Is One

Three of the ten bays on each Voyager’s bus are dedicated to the transmitters, receivers, amplifiers, and modulators of the Radio Frequency Subsystem, or RFS. As with all high-risk space missions, redundancy is the name of the game — almost every potential single point of failure in the RFS has some sort of backup, an engineering design decision that has proven mission-saving in more than one instance on both spacecraft over the last 40 years.

On the uplink side, each Voyager has two S-band double-conversion superhet receivers. In April of 1978, barely a year before its scheduled encounter with Jupiter, the primary S-band receiver on Voyager 2 was shut down by fault-protection algorithms on the spacecraft that failed to pick up any commands from Earth for an extended period. The backup receiver was switched on, but that was found to have a bad capacitor in the phase-locked loop circuit intended to adjust for Doppler-shift changes in frequency due primarily to the movement of the Earth. Mission controllers commanded the spacecraft to switch back to the primary receiver, but that failed again, leaving Voyager 2 without any way to be commanded from the ground.

Luckily, the fault-protection routines switched the backup receiver back on after a week of no communication, but this left controllers in a jam. To continue the mission, they needed to find a way to use the wonky backup receiver to command the spacecraft. They came up with a complex scheme where DSN controllers take a guess at what the uplink frequency will be based on the predicted Doppler shift. The trouble is, thanks to the bad capacitor, the signal needs to be within 100 Hz of the lock frequency of the receiver, and that frequency changes with the temperature of the receiver, by about 400 Hz per degree. This means controllers need to perform tests twice a week to determine the current lock frequency, and also let the spacecraft stabilize thermally for three days after uplinking any commands that might change the temperature on the spacecraft.
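A quick sketch shows why this is such a tight constraint. It uses the 100 Hz window and 400 Hz per degree drift quoted above; the carrier frequency and Earth velocities are rough assumptions for illustration, and the Doppler values are upper bounds that assume the motion lies entirely along the line of sight.

```python
C = 299_792_458.0  # speed of light in m/s

# Figures quoted in the text above.
LOCK_WINDOW_HZ = 100.0      # uplink must land within this of the lock frequency
DRIFT_HZ_PER_DEG = 400.0    # lock frequency drift per degree of receiver temperature

# Assumptions for illustration only.
UPLINK_HZ = 2.3e9           # the article's S-band figure, not the exact uplink channel
EARTH_ORBIT_M_S = 29_800.0  # approximate orbital speed of the Earth
EARTH_SPIN_M_S = 465.0      # approximate equatorial rotation speed


def doppler_shift_hz(freq_hz: float, radial_velocity_m_s: float) -> float:
    """First-order (non-relativistic) Doppler shift for a line-of-sight speed."""
    return freq_hz * radial_velocity_m_s / C


def max_temperature_error_deg(window_hz: float, drift_hz_per_deg: float) -> float:
    """Largest receiver temperature uncertainty that keeps the uplink in the window."""
    return window_hz / drift_hz_per_deg


print(f"Orbital Doppler, upper bound: {doppler_shift_hz(UPLINK_HZ, EARTH_ORBIT_M_S) / 1e3:.0f} kHz")
print(f"Rotational Doppler, upper bound: {doppler_shift_hz(UPLINK_HZ, EARTH_SPIN_M_S) / 1e3:.1f} kHz")
print(f"Tolerable temperature error: {max_temperature_error_deg(LOCK_WINDOW_HZ, DRIFT_HZ_PER_DEG)} deg")
```

In other words, a Doppler shift that can run to hundreds of kilohertz has to be predicted to within 100 Hz, while the receiver temperature has to be known to about a quarter of a degree.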

Double Downlinks

On the transmit side, both the X-band and S-band transmitters use separate exciters and amplifiers, and again, there is more than one of each for redundancy. Although downlink is primarily via the X-band transmitter, either of the two S-band exciters can be fed into either of two different power amplifiers. A Solid State Amplifier (SSA) provides a selectable power output of either 6 W or 15 W to the feedhorn, while a separate traveling-wave tube amplifier (TWTA) provides either 6.5 W or 19 W. The dual X-band exciters, which use the S-band exciters as their frequency reference, use one of two dedicated TWTAs, each of which can send either 12 W or 18 W to the high-gain antenna.

The redundancy built into the downlink side of the radio system would play a role in saving the primary mission on both spacecraft. In October of 1987, Voyager 1 suffered a failure in one of the X-band TWTAs. A little more than a year later, Voyager 2 experienced the same issue. Both spacecraft were able to switch to the other TWTA, allowing Voyager 1 to send back the famous “Family Portrait” of the Solar System, including the Pale Blue Dot picture of Earth, and Voyager 2 to send data back from its flyby of Neptune in 1989.

Slower and Slower

The radio systems on the Voyager spacecraft were primarily designed to support the planetary flybys, and so were optimized to stream as much science data as possible back to the DSN. The close approaches to each of the outer planets meant each spacecraft accelerated dramatically during the flybys, right at the moment of maximum data production from the ten science instruments onboard. To avoid bottlenecks, each Voyager included a Digital Tape Recorder (DTR), which was essentially a fancy 8-track tape deck, to buffer science data for later downlink.

Also, the increasing distance to each Voyager has drastically decreased the bandwidth available to downlink science data. When the spacecraft made their first flybys of Jupiter, data streamed at a relatively peppy 115,200 bits per second. Now, with the spacecraft each approaching a full light-day away, data drips in at only 160 bps. Uplinked commands are even slower, a mere 16 bps, and are blasted across space from the DSN’s 70-meter dish antennas using 18 kW of power. The uplink path loss over the current 23 billion kilometer distance to Voyager 1 exceeds 200 dB; on the downlink side, the DSN telescopes have to dig a signal that has faded to the attowatt (10⁻¹⁸ W) range.
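The path loss and received power can be sanity-checked with a bare-bones link budget. This is a sketch under stated assumptions: it uses the standard free-space path loss formula, the 18 W X-band transmitter and 48 dBi antenna gain from the text, and an assumed 70% aperture efficiency for a 70-meter ground dish. Pointing, atmospheric, and circuit losses are ignored.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss: 20 * log10(4 * pi * d / lambda), in dB."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def dish_gain_dbi(diameter_m: float, freq_hz: float, efficiency: float) -> float:
    """Gain of a circular aperture in dBi."""
    lam = C / freq_hz
    return 10.0 * math.log10(efficiency * (math.pi * diameter_m / lam) ** 2)


DISTANCE_M = 23e9 * 1e3   # 23 billion km, from the text
X_BAND_HZ = 8.4e9         # from the text
S_BAND_HZ = 2.3e9         # from the text
TX_POWER_W = 18.0         # X-band TWTA high-power setting, from the text
HGA_GAIN_DBI = 48.0       # from the text
DSN_EFFICIENCY = 0.70     # assumption, not a published figure

light_time_h = DISTANCE_M / C / 3600.0
rx_dbw = (10.0 * math.log10(TX_POWER_W) + HGA_GAIN_DBI
          - fspl_db(DISTANCE_M, X_BAND_HZ)
          + dish_gain_dbi(70.0, X_BAND_HZ, DSN_EFFICIENCY))
rx_w = 10.0 ** (rx_dbw / 10.0)

print(f"One-way light time: {light_time_h:.1f} hours")
print(f"S-band path loss: {fspl_db(DISTANCE_M, S_BAND_HZ):.0f} dB")
print(f"X-band path loss: {fspl_db(DISTANCE_M, X_BAND_HZ):.0f} dB")
print(f"Received X-band power: {rx_w:.1e} W")
```

Under these assumptions the received power comes out at a fraction of an attowatt, consistent in order of magnitude with the figure quoted above, and the path loss sits well beyond 200 dB on either band.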

That the radio systems of Voyager 1 and Voyager 2 worked at all while they were still in the main part of their planetary mission is a technical achievement worth celebrating. The fact that both spacecraft are still communicating, despite the challenges of four decades in space and multiple system failures, is nearly a miracle.

“To avoid bottlenecks, each Voyager included a Digital Tape Recorder (DTR), which was essentially a fancy 8-track tape deck, to buffer science data for later downlink.”

Ah, 8-track we miss you. Good thing they didn’t go with the more popular cassettes. :-)

“The uplink path loss over the current 23 billion kilometer distance to Voyager 1 exceeds 200 dB; on the downlink side, the DSN telescopes have to dig a signal that has faded to the attowatt (10⁻¹⁸ W) range.”

Stick a dish in space and we might be able to extend that.

“Stick a dish in space and we might be able to extend that.”

It’s radio, the path loss through the atmosphere’s relatively negligible. And extending the ability to talk to them past the mid-2030s is a little silly – they’ll be unable to do any science other than kinematics past 2025.

It’s actually a bit of a race to see what happens first (signal fade beyond detection vs power loss at spacecraft). By 2036 (the DSN range limit) both of the spacecraft should be well under the power limit: they lose around 1.6% per year, were at ~250W in ’16, and need ~200W minimum. They *should* run out of power right around 2030 but my instincts tell me that there’s probably some margin in that “minimum” (the ‘not enough power for science’ date keeps moving back).
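The "right around 2030" estimate follows from simple compound decay. A minimal sketch, using only the figures in the comment above (about 250 W in 2016, roughly 1.6% lost per year, about 200 W minimum) and assuming the loss rate stays constant:

```python
import math


def year_power_reaches(p0_w: float, year0: float, loss_per_year: float, p_min_w: float) -> float:
    """Year at which p0 * (1 - loss)**(t - year0) falls to p_min."""
    return year0 + math.log(p_min_w / p0_w) / math.log(1.0 - loss_per_year)


# Figures from the comment above; the constant loss rate is an assumption.
cutoff_year = year_power_reaches(p0_w=250.0, year0=2016.0, loss_per_year=0.016, p_min_w=200.0)
print(f"Power drops to 200 W around {cutoff_year:.1f}")
```

Any slack in the 200 W minimum shifts the date noticeably: at this decay rate, each 1.6% of margin buys roughly one more year.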

It’s a gamble, yes. However, I assume, or rather hope, that the creators of the Voyagers were a bit conservative on paper, so they could justify the demand for a better, longer-lasting power source. If that turns out to be true, the real minimum might be a bit lower.

Oh, God no, we’re way past what the Voyager designers envisioned! The RTGs were 470W at launch – they’re basically at 50% of their original power output currently.

The two spacecraft are in *terrible* shape overall. Both Voyagers are on their backup TWTA, as the article noted, and the backup TWTAs have *way* exceeded any life expectancy. V1 and V2’s star trackers are both degrading rapidly and V1 “might or might not” have a backup (other components in the same subsystem failed). The clocking system on V1 acts weird and has to be reset frequently. V2’s power command decoder acts weird and occasionally just shuts stuff off or on. Both V1 and V2 are on backup thrusters and system memory on both V1 and V2 has failures.

The only reason I think there’s a *chance* that they could get into the 2030s is because I think the scientists and engineers currently operating it are pulling a Montgomery Scott and intentionally overstating/overestimating the rate at which the power’s dropping – all research papers tend to report “minimum powers” as originating from “private communication.”

Anyway, hoping for the best and expecting the worst isn’t exactly wrong to do, I suppose. That’s what I meant to express. If you design something, you normally use “safe” (higher) specs as a countermeasure for unpredictable situations, so the mission isn’t doomed.

Unfortunately, laymen like me don’t know much about those details. Very few people likely know about all the defects these probes have accumulated over the years.

Besides, wouldn’t it be possible to simulate behavior of the Voyagers under certain conditions by using one of the spare models? One is hanging from the ceiling in some museum, if memory serves. Sure, the RTGs are missing. But they could be simulated by batteries or power supplies. Or maybe, replicas of the instruments can be made for testing? A crowdfunding campaign could help financially, I guess.

The Voyager Interstellar Mission papers are pretty readable.

“Besides, wouldn’t it be possible to simulate behavior of the Voyagers under certain conditions by using one of the spare models?”

You can’t model Voyager’s behavior at this point. They’ve been in space 45 years. The models aren’t even remotely close.

I don’t think it’s a lack of information that stops them from saying, to the month, when V1/V2 will go offline permanently. That they will is beyond doubt; it’s physically impossible for it to be like 2050, for instance. I just don’t think they want to admit it because then if they *don’t* get to that point, it’s viewed as a failure. There’s sooo much that has to work just for the spacecraft to even talk to Earth that from an engineering perspective you’d have to guess that they’re more likely to experience a catastrophic failure due to aging rather than run out of power.

2036 is probably the range limit for a single 34-m antenna at 160 bps. There’s a JPL paper (Descanso 4) that puts the range limit at 2050/2057 for 40 bps on a single 34-m antenna. Arraying DSN antennas would extend that further, and arraying with the VLA increases the range beyond that.

That makes sense, but what about the receiver/antenna technology on earth?

Won’t developments in science help to compensate, too?

Or is the reception equipment not updated for the Voyagers and only a few remaining parabolic dishes can receive them?

Please forgive my arrogance. I do vaguely remember that generally archaic equipment must be kept operational to stay in contact with old missions. That has to do with old/outdated modulation schemes, frequency ranges etc.

But maybe that information is incorrect by now? SDR technology (software defined radio) has made huge progress in the past 10 years, for example.

Or, how about cooling with liquid hydrogen? That should reduce the noise floor on the reception side. If transistors are cooled, their own noise will decrease, which increases sensitivity of a receiver. Things like that.

*ignorance. But if you can forgive my arrogance, then that’s great, too! 😁

Equipment on the ground has been updated since the launch of the Voyagers. In 2020, NASA overhauled DSS-43, the only antenna that can send commands to Voyager 2, including a new transmitter.

The Descanso paper is from 2002. The receivers are cooled to 4K.

There’s a trend for newer missions to use higher frequencies. Voyager uses S-band and X-band (2 and 8 GHz, IIRC). X-band is still common for newer missions.

I don’t know if they have to keep around old equipment for the radio side of things.

23 billion is a ridiculous number. I personally prefer 21 light-hours: less precise, but considering that the Moon is roughly one light-second away, it puts the distance into a whole new perspective.

It really is a testament to the quality of engineering that went into this project when we see how long such “old school” designs like the DTRs and TWTs have lasted.

I worked on ECM systems in the USAF back in the 80’s and many of our systems used these exotic vacuum tubes like TWTs. We also had weird things like “backward-wave oscillators.”

We also had an obsolete system in tech training that used a slug-tuned magnetron.

They certainly did not have the same MTBF, though it was also a different environment.

A few more details:

– Each Voyager gets at least 8 hours of downlink time per day. This is live playback of instrument data, at 160 bit/s, to one of the 34 m DSN antennas.

– On Voyager 1, the tape recorder is still operational. Twice a year, data from the recorder is played back at 1400 bit/s. To receive this, all 5 antennas of a DSN station (1x 70 m diameter, 4x 34 m) are used in an array.

– Because of the capacitor failure on Voyager 2, uplink is done not at the usual 18 kW, but at 75 kW.
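The arraying and uplink numbers in the list above hang together nicely. As a rough sketch, assuming ideal arraying where the achievable data rate scales with total collecting area (and ignoring differences in efficiency and receiver noise between antennas):

```python
import math


def collecting_area_ratio(array_diameters_m, reference_diameter_m: float) -> float:
    """Total dish area of an array relative to a single reference dish."""
    return sum(d ** 2 for d in array_diameters_m) / reference_diameter_m ** 2


def power_ratio_db(p_high_w: float, p_low_w: float) -> float:
    """Ratio of two powers expressed in decibels."""
    return 10.0 * math.log10(p_high_w / p_low_w)


# One 70 m and four 34 m antennas versus a single 34 m antenna, per the list above.
area_gain = collecting_area_ratio([70.0, 34.0, 34.0, 34.0, 34.0], 34.0)
rate_gain = 1400.0 / 160.0          # playback rate versus live rate, from the list
uplink_boost_db = power_ratio_db(75e3, 18e3)

print(f"Array collecting area: {area_gain:.1f}x a single 34 m dish")
print(f"Data rate increase: {rate_gain:.2f}x")
print(f"Voyager 2 uplink boost: {uplink_boost_db:.1f} dB")
```

The full array offers a bit over eight times the collecting area of one 34 m dish, which is in the same ballpark as the jump from 160 to 1400 bit/s, and the higher uplink power for Voyager 2 amounts to about 6 dB.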

Thanks for these details!

Is there any kind of authentication for uplinked commands to stop someone with a spare radio telescope and too much time on their hands from playing silly buggers with the thruster system?

Pffft, who needs a radio telescope, if you’re a real hacker, what you need is to just wait until the night side of the earth with the more reflective Heaviside layer is expanded and extra shiny, and take over a dozen Ham repeaters to create a phased wavefront that appears to have an origin at the focal point, and use that as your death ray*cough*radio telescope.

* and pointing in the right direction

was meant to be in there somewhere.

Don’t know of any hams that have free access to a 70 m dish and a 75 kW transmitter.

Security is built into the requirements. Maybe not absolutely safe, but very close.

About 1971, two hams heard Apollo from the moon at about 2.4 GHz. Esoteric stuff at that point. I think both used parametric amplifiers, which I think was the last time I read about them being used by hams. And that was close, the moon.

And don’t forget the software. Joe Taylor developed the encoding system. In use today by radio amateurs.

https://www.physics.princeton.edu/pulsar/k1jt/wspr.html

https://phy.princeton.edu/people/joseph-taylor

Able to get data around the world with only a few watts of power. Amazing stuff…

Joe Taylor is a legend. I tried to get him on for a Hack Chat, but I never heard back from him. If anyone can set up an introduction, let me know — I’d love to have him on for a chat.

You might ask – joe@princeton.edu

I live north of Seattle and used to work for MSFT. He gave a presentation to their amateur radio group. Alas, I was not able to attend but he does give public presentations. He was also at the SeaPac radio convention in Oregon a couple years ago.

“When the spacecraft made their first flybys of Jupiter, data streamed at a relatively peppy 115,200 bits per second. ”

For comparison, 115200 Baud was the maximum symbol rate of the old RS-232/V.24 serial port found on PCs with i8250, 16450 or 16550A UART FiFos.

Came here to post that!

And to add: “only 160 bps” is half of what an early home computer’s modem could do. I still have a Commodore Vic Modem that does 300.

I used a teletype with a 110 baud acoustic coupled modem to talk to my dad’s company mainframe in the early 70’s to play Lunar Lander. 300 baud was fast (For a short time). My first PC had a 1200 baud modem circa 1986.

Hats off to the NASA teams and what they have done over the years – I stand in awe of their accomplishments and how many of their projects are still working so many years later

Given the hardware available at the time the skill and expertise of the engineers who designed and built Voyager is mind blowing.

I think the same. It’s amazing what they accomplished.

Now let’s imagine if the Voyager were actually designed for a long voyage.

What’s often forgotten – these probes were primarily designed for the Grand Tour, not for interstellar travel.

However, “designed” might be not the right term, even.

The Voyagers were perhaps rather improvised, a makeshift solution.

They were built with knowledge gained from the Pioneer and Mariner probes (hence the original name, “Mariner-Jupiter-Saturn”, MJS).

But maybe that’s exactly why they lived so long.

“Necessity is the mother of invention”, people used to say.

Maybe that’s why JPL/Nasa unintentionally created such a masterpiece.

The Voyager series wasn’t planned for years, after all. The Voyagers were a low-cost substitute, rather. In comparison to the original plan, I mean. They were made in a rather short time.

If there hadn’t been a few individuals (Michael Minovitch, Gary Flandro, and likely some more I don’t know of) who discovered the alignment and calculated a flight path, the whole opportunity would have been missed for another 176 years.

There’s something that I’ve always wondered, also, by the way.

With today’s mindset and technology, would it have been possible, still?

I’m saying “still” because I think we lost something.

Back in the 1970s, before my time, people knew of the limits of technology.

The 70s were the time when all of our world’s digital components saw the light of day. 555, 74xx series, LM386, the EPROM, COSMAC 1802 CPU, shift registers, glass terminals, the CP/M OS, first home computers..

Back then, people noticed both the limitations, but also the capabilities and chances of the then new technology.

They had a deep respect for the humble technology of the day, without arrogance.

They handwired PCBs and dutifully checked every bit multiple times. They knew that a single error of theirs could mess things up.

Now let’s compare this with today.

Seriously, how often have you guys read or written lines like “wow, technology has come a long way” or “If I could go back in time, I’d show people my super duper smartphone XD” or “now my smartphone can do all of this”?

Personally, I think that is very arrogant.

Firstly, old technology as such isn’t obsolete if it performs its task perfectly fine.

Maybe it looks obsolete to us, though.

But that’s not true from a physical/functional point of view.

Secondly, all the modern technology isn’t “our” work. We did contribute zero to it. We’re merely consumers.

Not so the hobbyists of the 1970s, when the Voyagers were built.

The majority of users were also programmers, tinkerers, hackers.

Operating an old machine simply required a certain set of skills. Users also built their machines from kits at the time. They learned to solder, to read hex and use assembly language, and to read schematics. Some were radio amateurs, too.

Lastly, 1970s technology was made from discrete components, with feature sizes measured in microns (micrometers), not nanometers. The 70s technology was generally way more rugged than our wimpy sunshine technology of today. The sheer dimensions of the manufacturing process made the parts more robust against internal defects caused by material fatigue and radiation. DRAMs and early CMOS (the early 4000 series) were the exception; they were very fragile. The NMOS and TTL chips had a higher tolerance for voltage fluctuations, too. They could withstand shorts in some cases without immediately dying. The list goes on.

Anyway, that being said, I’m not saying that 70s tech was superior.

Manufacturing processes have evolved for sure, even though miniaturization alone is no solution. Bigger structures are less efficient, as long as we stick to old silicon, but they also cause less noise and crosstalk. That’s one of the “analogue” problems of our day that people tend to forget or simply ignore. Things like radio interference (RFI) and proper electromagnetic shielding are almost completely ignored in our day. We use sunshine technology that fails easily, because it’s built for sheer performance rather than with wisdom and balance.

What we need is an advancement in mentality, rather than technology. The higher than normal human factor is what made the Voyager mission possible. All the excellent people at JPL and the Voyager’s control center who fought through old documents and used their imagination and skills to keep things running. The sheer dedication of these people made it possible. Purely rational, commercial thinking would never have achieved that.

“Now let’s imagine if the Voyager were actually designed for a long voyage.

What’s often forgotten – these probes were primarily designed for the Grand Tour, not for interstellar travel.”

Well, yes and no. Officially they were designed for the Grand Tour. Unofficially, the engineers did everything they could to sneak long-lived hardware into the design, and were careful not to make choices that would end up limiting the life of the spacecraft.

Fair point about the scale of the semiconductors. I was doing some figuring that gate doping in modern chips might get wrecked by diffusion and cosmic rays at the Earth’s surface within a decade or two. Whereas 0.6 micron may be fine for in excess of 75 years.

Relevant xkcd ..

https://xkcd.com/2624/

A 250 micrometer surface finish is extremely coarse. Is that meant to be .025?

No, we’re talking about GHz signals with wavelengths of the order of cm. 250 microns accuracy is plenty fine for that.

That would be coarse for light, but the shortest wavelength the dish needs to reflect is about 3 cm or so (X-band, 8-GHz). So it’s pretty darn smooth at that wavelength.

FYI, I got the figure from the Deep Space Communications and Navigation book series. Chapter 8 concentrates on the mechanical aspects of antenna designs, and makes for pretty fascinating reading – the whole series does, in fact. The 250um figure comes from table 8-1 on page 437.

The spacecraft transmitter is also a science instrument. The Deep Space Network receiver is used to “sample” the planetary atmosphere as the virtual image of the spacecraft passes behind the planet. The science data are the amplitude and polarization of the S- and X-Band carrier waves (telemetry is off during a science measurement), and, importantly, the differences between the two signals. This is not the reason there is no X-Band uplink to the Voyagers; rather, it is the reason there is an X-Band downlink at all.

The discussion of the “incoming X-Band waves” should probably be removed from the article.

best tape decks in the known universe.

What do you say we start a Kickstarter campaign to lure some of these legendary engineers out of retirement long enough to design a few key devices, like a decent audio amp and an AM/FM radio that works? You know, something where the schematic wasn’t just lifted from a chip manufacturer’s application data sheet. Oh and something without an AC hum in the audio, if it’s not too much to ask.

It’s not so much that the current generation of engineers doesn’t know how to design things, it’s more that there’s no way to make money mass marketing something that’s built to last. The Voyagers, Curiosity and Perseverance, Ingenuity –all were built to survive as one-offs (or two-offs), not to make a profit.

if people were not spending all their time/energy/money replacing things as they go bad, they might be able to afford other things which they also manufacture. not to mention how much it costs to figure out what corners they can cut so the thing fails a week after its warranty period. apple spends a fortune to make sure you can’t repair their stuff.

“decent audio amp” – just buy a TPA3255 board (official TI is the best, generic ones are cheaper and just need a few part swaps to perform excellently) and call it done. Use differential audio input and output for best results.

The fact that TI still has the best single chip audio amplifier is a pretty good indication the engineers there are still hard at work. (600W from a single chip is especially impressive when it’s all on a single piece of silicon – power and control. IPMs can do even more but they do that with separate dies for power handling and control logic.)

I am amazed at the longevity of NASA’s machines. Rovers on Mars (and elsewhere) have consistently exceeded their original predicted lifespan, from a few weeks / months to years. It truly was an emotional moment when Opportunity “died”. Its last words will remain famous for sure.

Hardware on Mars could run even longer if they’d take advantage of the Martian atmosphere and put a dust blower on the end of the mechanical arms. Then they could blow the dust off the solar panels. The blower could also be run in reverse to vacuum up samples of regolith and what’s ground off of rocks and out of drill holes.

They’ve looked into panel cleaning technology for years. It’s a much harder problem than you’d think, mainly because since the atmosphere’s so thin, the dust tends to be statically charged and *exceptionally* fine. They actually cleaned off InSight’s panels by dropping sand on them.

They got a bit ‘greedy’ in expectations for InSight, for instance, because the winds periodically cleaned off Spirit/Opportunity’s panels. Apparently InSight’s region of Mars doesn’t get similar types of wind events.

Thank you both for your answers, very interesting topic. I’ve heard that yes, dust is so thin it would be too hard to get rid of it all. I suppose wipers would also ruin the panels ?!

Have you ever used a fan to remove dust? It doesn’t work very well: in no time, the dust starts to circulate back to the fan intake side instead of moving in a straight line. Using high pressure improves the situation, but that’s a lot more hardware-intensive, and the rovers are severely mass-limited.

I bet no-one at NASA thought of that and there can be no good reasons other than laziness or ignorance that they haven’t implemented this.

“Rovers on Mars (and elsewhere) have consistently exceeded their original predicted lifespan,”

Good portion of that is the Montgomery Scott effect (multiply your original estimates by a factor of 4 so you look smart).

Pathfinder, Opportunity and Spirit genuinely outlasted “real” expectations by a huge amount. Those rovers were *not* expected to last that long. Curiosity and Perseverance would’ve been huge disappointments if they didn’t make it to at least 5 years, and I’d bet internally the expectations are at least 10 on both.

While NASA engineers indeed do excellent work, long living machines aren’t that uncommon. Think e.g. of historic cars, houses, water power plant turbines, and a lot more.

Once every possible location of wear is identified and eliminated or made sturdy enough to last more than a million load cycles, it pretty much lasts forever. Careful engineering.

My iPhone 3 didn’t stop working either. It just happens that the modern world requires at least TLS 1.2 and there’s no software supporting this kind of protocol on this phone.