There are about one million known species of insects – more than for any other group of living organisms. If you need to determine which species an insect belongs to, things get complicated quickly. In fact, to distinguish between certain species, you might need a well-trained expert in that particular group, and experts’ time is often better spent on something else. This is where CNNs (convolutional neural networks) come in nowadays, and this paper describes a CNN doing just as well as, if not better than, human experts.

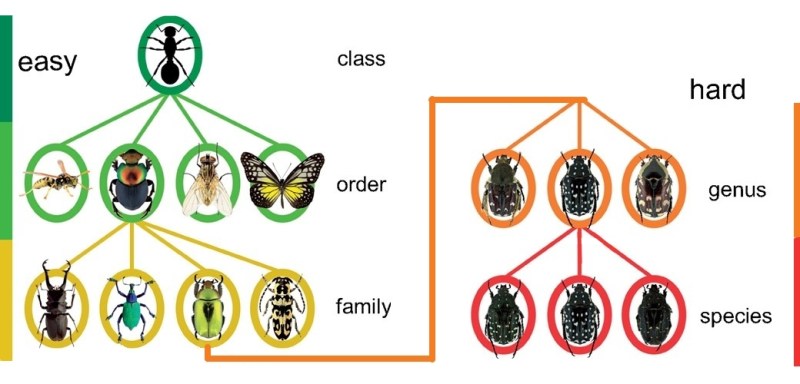

There are two particularly challenging tasks in insect taxonomy – dealing with visually similar species that are hard for non-experts to tell apart, and reliably determining which family an insect belongs to from just its pictures. The paper describes quite well how the CNN technologies they’re using work, and how they narrowed their technology choice down to the method of feature transfer. Feature transfer uses a general-purpose image recognition network, and builds upon that to form a more application-tailored machine learning system – saving computational power, reducing the amount of training data required, and largely avoiding problems like overfitting.
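To make the idea of feature transfer concrete, here is a minimal sketch in plain Python. It is not the paper's pipeline: `frozen_features` is a hypothetical stand-in for a pretrained general-purpose network, the tiny "images" and the labels are made up, and the task-specific part is a simple nearest-centroid classifier chosen only for brevity. The point is that the feature extractor is never retrained; only the small part on top learns from the new data.

```python
from collections import defaultdict
from math import dist


def frozen_features(image):
    """Hypothetical stand-in for a pretrained network: fixed, never retrained.

    Maps a grayscale image (list of rows) to (brightness, contrast).
    """
    pixels = [p for row in image for p in row]
    brightness = sum(pixels) / len(pixels)
    # Mean absolute difference between horizontally neighbouring pixels.
    steps = [abs(row[i] - row[i + 1]) for row in image for i in range(len(row) - 1)]
    contrast = sum(steps) / len(steps)
    return (brightness, contrast)


def train_head(images, labels):
    """Train only the task-specific part: one centroid per label in feature space."""
    grouped = defaultdict(list)
    for image, label in zip(images, labels):
        grouped[label].append(frozen_features(image))
    return {
        label: tuple(sum(axis) / len(axis) for axis in zip(*feats))
        for label, feats in grouped.items()
    }


def predict(head, image):
    """Return the label whose centroid is closest to the image's features."""
    feats = frozen_features(image)
    return min(head, key=lambda label: dist(head[label], feats))


# Made-up training data: two striped and two plain 2x4 "images".
training_images = [
    [[0, 1, 0, 1], [0, 1, 0, 1]],
    [[1, 0, 1, 0], [1, 0, 1, 0]],
    [[0.2] * 4, [0.2] * 4],
    [[0.8] * 4, [0.8] * 4],
]
training_labels = ["striped", "striped", "plain", "plain"]
head = train_head(training_images, training_labels)
```

Because only the centroids are fitted, four examples are enough here, which mirrors, in miniature, why reusing an existing network cuts the training data and compute needed.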

The resulting network has outperformed both experts and more traditional automated recognition methods, and shows promise for accelerating scientific discovery. We encourage you to check this paper out – the research story is coherent, and the paper provides good insights into the abilities and limitations of CNNs, save for heavy terminology here and there. (The webpage view of the paper has mangled characters, but the PDF download doesn’t have such problems.) We’re seeing neural networks used more and more for pattern recognition tasks everywhere, and while the results aren’t as miraculous as some say, hackers like us have used a CNN to teach a dog to stop barking when the owner’s not home, and a research team has developed a toolkit enabling anyone to recognize birds from their songs.

We thank [Anonymous] for sharing this with us!

apart from “where’s the hack”, you could substitute any tool into this type of news line. “hand drill outperforms humans in drilling holes”. “Car outperforms humans in covering distance in most conditions”.

The danger with these neural network type of things is that it’s GIGO, garbage in, garbage out. Also known as overfitting. It’s only ever as good as the training data, and if there’s a new family line of insects, it’s likely it will claim it’s something it already knows.

Read the “calling bullshit” book for more details on this.

one example from the book: there was a neural network which was 99% accurate in predicting criminals from photographs. Only the researchers used mugshots for the criminal data in which people were not smiling, and regular social network pictures for the “non-criminals”, in which people are typically smiling.

Another one: researchers used data from a lung scan device to predict future death by lung disease. Only the field MRI scanners, which were used in case of a very serious call-out, had the words “mobile MRI” in the image, whereas the hospital MRI did not, and a field MRI would be more likely to target very ill patients, since in less severe situations they could just come to the hospital. And the neural network detected which images were field MRI images by that label “mobile MRI” written in the top right of the image, rather than from any actual MRI data.

Overfitting…

yeep. there’s a story of training an AI to distinguish between real and fake (mockup) tanks. Except that pictures of fake tanks were all taken at the same proving ground on the same day, and it was cloudy, so the AI actually learned to recognize clouds in the sky on the pictures.

..did you read the article? I do mention overfitting, and the paper talks about overfitting, and how they’re using technologies and methods less prone to that.

No I didn’t. I only read further if it looks like it’s about hacking.

https://knowyourmeme.com/memes/baton-roue

so the problem appears to be which features are being extracted

the paper is unclear as to which features of the images are being used, or it could be that I have trouble understanding the winding and unclear language being used?

so perhaps the neural network needs to be able to identify which features are being used and present that information to the user along with the conclusions being drawn; just presenting images without knowing which features are being used appears to be the lazy approach

clearly any labeling of the images needs to be excluded

It would be nice if, in the insect taxonomy case, the ANN could draw an abstract representation or outline of the insect and show which dimensions and colours are being used

hmm. is it practical for us to get such information out of ANNs nowadays? My (pieced together) understanding is that lack of transparency is +- the MO, since the mathematical complexity of internal layers makes it tricky to translate them into human-understood (and relatable) features.
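One partial answer is occlusion sensitivity: blank out one part of the image at a time and see how much the model's score drops. A rough sketch in plain Python follows, under loud assumptions: `toy_model` is a made-up scoring function (not a real CNN) that secretly leans on the top-right pixel, a bit like the “mobile MRI” label story above. Any function that maps an image to a score could be plugged in instead.

```python
def occlusion_map(model, image, fill=0.0):
    """Score drop caused by blanking each pixel in turn.

    A larger value means the model relied more on that pixel.
    """
    baseline = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(x) for x in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(baseline - model(occluded))
        heat.append(heat_row)
    return heat


def toy_model(image):
    """Hypothetical model that mostly looks at the top-right pixel."""
    pixels = [p for row in image for p in row]
    return 0.9 * image[0][-1] + 0.1 * sum(pixels) / len(pixels)


image = [[1.0] * 3 for _ in range(3)]
heat = occlusion_map(toy_model, image)
```

In this toy case the map lights up in the top-right corner and stays near zero elsewhere, which is the kind of hint that would flag a model keying on a label instead of the subject. It shows where the model looks, not why, so it only goes part of the way.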

My main issue with NN-based AI is that the entire thing is a black box. You have no way to know what that thing is seeing, and there are few ways to change what is being returned, and re-training the entire network usually isn’t feasible.

Statistical analysis is way more difficult than just “shove a bunch of examples in and let the computer figure it out,” but at least you can control what is being analysed and see what is not working.

You could have the NN produce a list of the closest matches and then check them yourself.
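As a sketch of what that could look like, assuming the network exposes one raw score per candidate label: convert the scores to probabilities with a softmax and return the top few for a human to check. The family names are real beetle families, but the scores below are invented purely for illustration.

```python
from math import exp


def top_k_matches(scores, k=3):
    """Return the k most likely (label, probability) pairs, best first.

    `scores` maps each label to a raw score; softmax turns them into probabilities.
    """
    peak = max(scores.values())  # subtract the max for numerical stability
    weights = {label: exp(s - peak) for label, s in scores.items()}
    total = sum(weights.values())
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    return [(label, weight / total) for label, weight in ranked[:k]]


# Invented scores, for illustration only.
example_scores = {
    "Carabidae": 2.0,
    "Tenebrionidae": 1.5,
    "Chrysomelidae": 0.1,
    "Coccinellidae": -1.0,
}
shortlist = top_k_matches(example_scores, k=2)
```

When the top two probabilities are close, as they are with these made-up numbers, that is a cue to look at the specimen yourself instead of trusting the first answer.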

You certainly can. The problem is to know what the AI did to select the closest matches, and to understand why the heck the AI thought that a plastic turtle looks almost exactly like an AR-15 (and vice versa).

AI Adversarial Attacks are a thing, and a scary one. If the attacker gets to know how the NN is behaving, he can easily construct the input to achieve the desired output. For example, this mom cheated the school AI so her son got straight 10 on each test: https://www.theverge.com/2020/9/2/21419012/edgenuity-online-class-ai-grading-keyword-mashing-students-school-cheating-algorithm-glitch.

Now all we need to do is install the smarts in a roomba with an electric zapper so it can kill off the insects that outperform humans the instant it identifies them.

The problem I see with this is that this network can only analyse morphology (“how the insect looks”). We have been doing this for ages, but now that we can do gene sequencing relatively easily, we have found that just because two animals look similar, they do not have to be closely related. This has led to animals being reclassified over the years.

(sorry, can’t cite anything. It’s just something I overheard insect researchers talk about)

I’m far more worried about an AI that casually performs random gene sequencing on anything it perceives as an insect.

I’ll let researchers worry about misclassified bugs so long as I don’t have to keep rescuing my dog from my cell phone.

Check out as well:

– Classifying the Unknown: Identification of Insects by Deep Zero-shot Bayesian Learning — https://www.researchgate.net/publication/356706523_Classifying_the_Unknown_Identification_of_Insects_by_Deep_Zero-shot_Bayesian_Learning

– Plant identification? There’s an app for that—actually several! — https://www.canr.msu.edu/news/plant-identification-theres-an-app-for-that-actually-several

– An Analysis of the Accuracy of Photo-Based Plant Identification Applications on Fifty-Five Tree Species — https://joa.isa-arbor.com/article_detail.asp?JournalID=1&VolumeID=48&IssueID=1&ArticleID=5537