When it comes to an engineering marvel like the James Webb Space Telescope, the technology involved is so specialized that there’s precious little the average person can truly relate to. We’re talking about an infrared observatory that cost $10 billion to build and operates at a temperature of 50 K (−223 °C; −370 °F), 1.5 million kilometers (930,000 mi) from Earth — you wouldn’t exactly expect it to share any parts with your run-of-the-mill laptop.

But it would be a lot easier for the public to understand if it did. So it’s really no surprise that this week we saw several tech sites running headlines about the “tiny solid state drive” inside the James Webb Space Telescope. They marveled at the observatory’s ability to deliver such incredible images with only 68 gigabytes of onboard storage, a figure below what you’d expect to see on a mid-tier smartphone these days. Focusing on the solid state drive (SSD) and its relatively meager capacity gave these articles a touchstone that was easy for a mainstream audience to grasp. Even if it was a flawed comparison, readers came away with a fun fact for the water cooler: “My computer’s got a bigger drive than the James Webb.”

Of course, we know that NASA didn’t hit up eBay for an outdated Samsung EVO SSD to slap into their next-generation space observatory. The reality is that the solid state drive, known officially as the Solid State Recorder (SSR), was custom built to meet the exact requirements of the JWST’s mission, just like every other component on the spacecraft. Likewise, its somewhat unusual 68 GB capacity isn’t just some arbitrary number; it was precisely calculated given the needs of the scientific instruments onboard.

With so much buzz about the James Webb Space Telescope’s storage capacity, or lack thereof, in the news, it seemed like an excellent time to dive a bit deeper into this particular subsystem of the observatory. How is the SSR utilized, how did engineers land on that specific capacity, and how does its design compare to previous space telescopes such as the Hubble?

High Speed in Deep Space

The communication needs of the James Webb Space Telescope provided engineers with a particularly daunting challenge. To accomplish its scientific goals the spacecraft must be located far away from the Earth, but at the same time, a considerable amount of bandwidth is required to return all of the collected data in a timely manner.

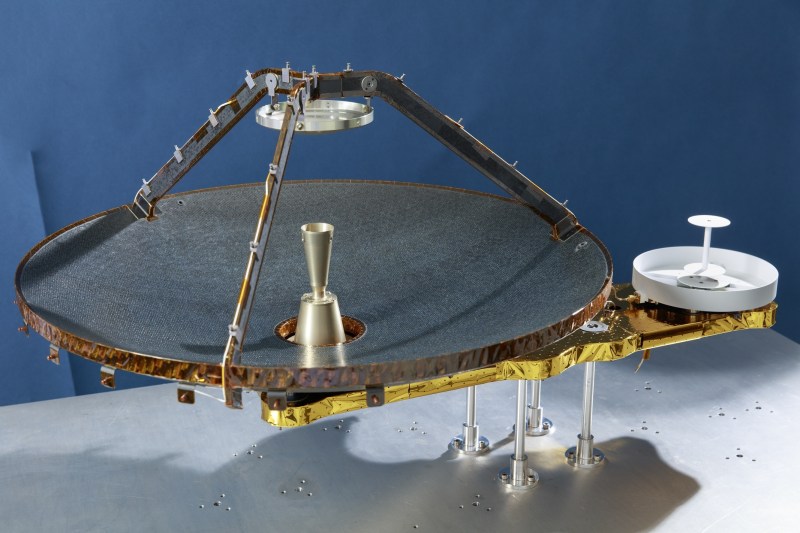

To facilitate this data transfer, the JWST has a 0.6 meter (2 foot) diameter Ka-band high-gain antenna (HGA) on an articulated mount that allows it to be pointed back to Earth regardless of the observatory’s current orientation in space. This Ka-band link provides a theoretical maximum bandwidth of 3.5 MBps through NASA’s Deep Space Network (DSN), though the actual achievable data rate is dependent on many factors.

Unfortunately, this high-speed link back to Earth isn’t always available, as the DSN needs to juggle communications with many far-flung spacecraft. With the network’s current utilization, the JWST has been allocated two four-hour windows each day for data transmission. On paper, that means the spacecraft should be able to transmit just over 100 GB of data back to Earth in a 24-hour period, but in practice there are other issues to consider.
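That on-paper figure is easy to check. The sketch below multiplies the quoted 3.5 MBps theoretical maximum by the two four-hour windows; the function and constant names are only illustrative, and decimal units (1 GB = 1000 MB) are assumed.

```python
# Back-of-the-envelope daily downlink budget using the figures quoted above.
# Names are illustrative; decimal units (1 GB = 1000 MB) are assumed.
LINK_RATE_MB_PER_S = 3.5   # theoretical Ka-band maximum, megabytes per second
WINDOWS_PER_DAY = 2        # DSN contacts allocated per day
WINDOW_HOURS = 4           # length of each contact

def daily_downlink_gb(rate_mb_per_s, windows, hours_per_window):
    """Return the best-case data volume per day in decimal gigabytes."""
    seconds_on_link = windows * hours_per_window * 3600
    return rate_mb_per_s * seconds_on_link / 1000

total_gb = daily_downlink_gb(LINK_RATE_MB_PER_S, WINDOWS_PER_DAY, WINDOW_HOURS)
print(f"Best-case downlink: {total_gb:.1f} GB per day")  # 100.8 GB
```

This is the ceiling only; it ignores protocol overhead, antenna repositioning, and any lost contact time.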

For one thing, the high-gain antenna can’t constantly track the Earth, as its movement produces slight vibrations that could ruin delicate observations. Instead, it’s moved every 2.7 hours to keep the planet within the beam width of the antenna. Observations are to be scheduled around this whenever possible, but inevitably, a conflict will eventually arise. Either high-speed data transmission will have to be cut short, or long-duration observations will need to be put on pause while the antenna is realigned. Mission planners will have to carefully weigh their options, with the deciding factor likely to be the scientific importance of the observation in question.

There’s also downtime to consider, on both ends of the link. The DSN could temporarily be unable to receive transmissions, or there could be an issue aboard the spacecraft that prevents it from making its regularly scheduled broadcast. Between the logistical challenges associated with the observatory’s standard downlink and the possibility of unforeseen communication delays, the only way the James Webb could ever hope to make round-the-clock observations is with a sizable onboard data cache.

Flight Tested Technology

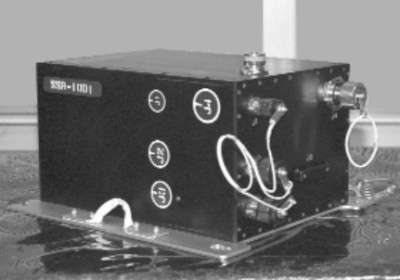

In the context of personal computing, solid state drives are a relatively new development. But NASA has been well aware of the advantages, namely lighter weight and a lack of moving parts, for decades. The space agency isn’t known for fielding untested concepts on flagship missions, and this is no different. They’ve been using a similar approach on the Hubble Space Telescope since 1999, when astronauts on the third servicing mission replaced the spacecraft’s original tape-based storage with a 1.5 GB SSR.

Naturally the lower capacity of Hubble’s SSR is due, at least in part, to the era. But even still, this was a considerable upgrade, as the tape recorders the SSR replaced could only hold around 150 MB. Remember that the resolution of the images captured by Hubble is considerably lower than that of the JWST, and that communications with spacecraft in Earth orbit are naturally far more reliable than those in deep space.

Store and Forward

All told, NASA estimates the James Webb should be able to transmit a little over 28 GB through the DSN during each of its twice-daily windows. To provide a full 24-hour buffer, the spacecraft therefore needs about 60 GB of onboard storage. So why is the SSR 68 GB? Partly because some of the space is reserved for the observatory’s own use, but also because, as explained by flight systems engineer Alex Hunter to IEEE Spectrum, the extra capacity gives the system some breathing room as wear and radiation whittle away at the SSR’s flash memory over the next decade.
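The sizing argument can be sketched in a few lines. This is a rough illustration rather than NASA's actual calculation: the per-window figure is rounded down to 28 GB (the text says "a little over"), and the fill-time estimate assumes the instruments generate data at exactly the rate the downlink can drain it, which is a hypothetical worst case.

```python
# Rough sizing sketch for a 24-hour store-and-forward buffer.
# Assumptions: 28 GB per window is a rounded-down figure, and data is
# generated at the same average rate the two daily windows can drain it.
PER_WINDOW_GB = 28.0      # approximate downlink volume per DSN window
WINDOWS_PER_DAY = 2
SSR_CAPACITY_GB = 68.0    # quoted recorder capacity

daily_downlink_gb = PER_WINDOW_GB * WINDOWS_PER_DAY       # data moved per day
margin_gb = SSR_CAPACITY_GB - daily_downlink_gb            # headroom above one day
margin_fraction = margin_gb / SSR_CAPACITY_GB
hours_to_fill = SSR_CAPACITY_GB / (daily_downlink_gb / 24) # with no downlink at all

print(f"One day of data:  {daily_downlink_gb:.0f} GB")
print(f"Headroom:         {margin_gb:.0f} GB ({margin_fraction:.1%} of the SSR)")
print(f"Time to fill SSR: {hours_to_fill:.1f} hours without a contact")
```

Under these assumptions the headroom covers the space reserved for the observatory plus some allowance for degradation, and a completely missed day of contacts would still leave a few hours before the recorder filled.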

It might not seem like 24 hours is much of a safety net, but there are several provisos attached to that number. Depending on which scientific instruments are actually being used on the James Webb, the actual amount of data generated each day could be considerably lower. If high-speed communications are hindered, ground controllers would likely put the more data-intensive observations on hold until the issue is resolved. If necessary, NASA could also allocate extra DSN time to work through the backlog. In short, there are enough contingencies in place that the capacity of the SSR should never become a problem.

So while you could certainly find a bigger solid state drive in a mid-range Chromebook than the one NASA recently sent on a decade-long mission aboard the James Webb Space Telescope, careful planning and a healthy dose of the best engineering that money can buy mean that size isn’t everything.

[Editor’s note: Yeah, we know that the graphic shows the JWST radiating from the telescope focus. I’ll take the blame for an insufficiently specific art request on this one. But you do have to admit that they look kinda superficially similar if you’re an artist and not a radio guy.]

Nice article, thanks!

“They’ve been using a similar approach on the Hubble Space Telescope since 1999, when astronauts on the third servicing mission replaced the spacecraft’s original tape-based storage with a 1.5 GB SSR.

Naturally the lower capacity of Hubble’s SSR is due, at least in part, to the era. But even still, this was a considerable upgrade, as the tape recorders the SSR replaced could only hold around 150 MB.”

Cool. Back in the early 90s, my father had a SCSI-based QIC streamer for fixed-drive backup. The backup software was similar to SyTOS, I think. The capacity of the normal tape (almost VHS size) was around 150 MB, too.

That was huge at the time, when ordinary 386 PCs still had 40 MB AT-Bus HDDs.. Remember, a typical DOS/Windows 3.x installation was 10MB in size. And Win95 RTM was ~40MB in size. Anyway, it was apparently sufficient back then.

In a few decades people will make fun of tomorrow’s specs.

In a few decades (at the rate we’re going) mankind will rediscover that a small sharp reed will allow clay tablets to store information about food barters.

Ah yes, reminds me of the old saying from a popular German scientist/physicist..

He didn’t know what, um, ‘tools’ would be used in WW3, but thought it would be sticks and stones in WW4.. ;)

But that was a rather misanthropic vision from the cold war era, I think. As a modern German, I think we won’t start WW3. After two WW’s the mindset has changed forever, I think. There’s so much more in life. The stars are the limit.. ✨

I don’t think that anyone believes Germany would start WWIII.

Mr Putin is trying really hard to provoke WW3 right now, thankfully no-one is falling for his BS.

And last forever too!

Tape seemed quite ahead on the storage capacity curve until mid 90s when it all fell apart, what do you mean I need 4 tapes to back up my shiny new 8GB HDD, I used to get 4 backups to a single tape???

I think it was that areal density wasn’t that hot, but a lot of area could be had in a thin tape tightly wound. But then thinner was the enemy of reliability and/or denser coatings to get more bits per inch.

The other consideration is that the very dense SSDs we typically use have to deal with a lot of error correction and processing which they probably wouldn’t want at all for Webb, so it’s not likely the exact same technology. Can’t find info about the SSR design itself though.

An interesting note I saw is that files on the SSR for JWST are pretty large (1 Gb) because the latency is so large (12 seconds) and smaller files would require much more accounting. Basically with smaller files there would be more “in flight” at any given time.

Can I just say a BIG THANKS for admitting your mistake on commissioning the (very nice!) art. So often the artist gets blamed for not knowing obscure domain-specific details of a technology / procedure / etc.

So often no one bothers to tell the artist anything helpful and just gives them a bunch of internal industry acronyms to draw from. Or the “sketches” that they provide for you to work from are themselves inaccurate because “everyone knows” what you meant to draw.

Particularly frustrating is when as the artist you do research, and Google/etc gives you the wrong information.

To be clear – this complaint isn’t about HAD, but our clients, who span from healthcare to networking to mechanical to aerospace, and expect us to know everything about their niche of their industries.

The takeaway for HAD readers commissioning artwork / diagrams / slide decks / brochures is:

Please be really clear about what you want. Be clear what symbols / features are distinctive for each item. E.G. what could identify this 2U rack unit as a firewall instead of a router or NAS? What type of connectors do your cables have (photos!)? What do these TLAs mean in your industry? etc.

If you want to be especially nice to your artists, draw a rough sketch of what you want. It doesn’t have to be good, it just has to be understandable. The equivalent of stick figures and smiley faces will do.

Yeah! I usually include images of whatever when I can. Here, I actually had trouble finding an image of the high gain antenna on the JWST. Tom found that one after I put in the art request.

It _really_ does seem like the radio backlink just doesn’t get any play in the press, even though it’s interesting enough that it can only send in a couple windows per day because any movement of the antenna risks messing up the science imaging, etc.

Whenever you think you kind of understand a simple space telescope, it turns out that there are even more layers of interesting material there if you poke hard enough. :)

>its somewhat unusual 68 GB capacity isn’t just some arbitrary number, it was precisely calculated given the needs of the scientific instruments onboard.

Does that mean it has no over-provisioning, or it’s included in the 68GB?

If I were sending a $10bn thing into space for a multi-year mission, I’d include at least 100% over-provisioning! In which case the drive would really be 34GB?

Let’s not forget about redundancy. In the past, space vehicles had multiple duplicates of the same components installed, often three. So is there really just one SSR installed in the telescope?

There is just a single SSR, however as you indicated there are redundant copies of every PCB (input, output, power supply, etc.) to ensure it is always up and running. For the memory boards, there is a fair amount of EDAC (error detection and correction) implemented as well to deal with the effects of radiation particles flipping bits in the memory, though because it’s technically not a “critical component” (having a couple of pixels off in the images won’t bring the whole telescope down) more attention is paid to other parts of the system in dealing with radiation.

The integrated circuits (ASICs) used in the recorder have redundancy built into the critical datapaths as well (i.e. 3 copies of every reset signal, so a particle flipping one won’t actually reset the box). Of course we can’t make the whole chip that redundant, so we have to assess which parts are most important in terms of the impact a radiation event would have (bring the telescope down, require a reboot, require ground intervention, etc.). For example, a telescope like Webb (or any satellite) has a requirement for how often during the course of a mission a ground intervention can be required, and the radiation tolerance and redundancy are incorporated based on that.
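The "3 copies of every reset signal" idea is commonly implemented as a majority vote across the copies. The snippet below is only a software illustration of that general technique, with hypothetical names; it is not the recorder's actual ASIC logic, which is not described here.

```python
# Illustrative majority voter for a triplicated signal (triple modular redundancy).
# This models the general technique only; the function name and the example
# values are hypothetical and do not describe the real SSR hardware.
def majority_vote(a: bool, b: bool, c: bool) -> bool:
    """Return the value that at least two of the three copies agree on."""
    return (a and b) or (a and c) or (b and c)

# One copy of an inactive reset line is flipped by a particle strike:
# the vote still says "no reset".
single_upset = majority_vote(False, True, False)

# A genuine reset drives all three copies, so the vote passes it through.
real_reset = majority_vote(True, True, True)

print(single_upset, real_reset)  # False True
```

A voter like this masks any single flipped copy; it takes two simultaneous upsets on the same signal to produce a wrong result.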

Great article! As an engineer who works at the company in Colorado that built the SSR for JWST (and many others), it’s been fun to see our work generate such lively discussion over the past week. The company, SEAKR Engineering, was started by an ex Air Force engineer and his sons. In working on spy programs in the 60s, he was very in tune with the issues related to tape and saw the need to migrate to solid state for space based storage.

One major difference between an SSR and “earthbound” SSD is that all of the memory elements on the SSR are actually DRAM and not flash. Because it’s a satellite we don’t have to worry about those pesky reboots or unplanned power cycles, so we don’t have to use true non-volatile storage elements. As previously indicated, the entire recorder is custom built and about the size of the Hubble box pictured, and has custom I/O interfaces to work with the particular imaging technology on the input side and the transmission system on the output side.

Hope that sheds a little more light on this, thanks again for the great article!

DRAM! Unexpected! Awesome to have the internal knowledge!

There was no mention of software in the article. I’m guessing all the software is stored elsewhere on an actual non-volatile storage? But is still upgradable? Or if it has a power outage is it dead? Or does it PXE boot from earth?!

Software is stored on non-volatile memory and is upgradeable. It’s actually pretty bare-bones in that all it does is respond to requests from the host system (store this file here, give me the file stored here, erase this file, etc.) so it doesn’t take up a lot of space. As has been discussed elsewhere, there is also a separate slow interface for telemetry data that is stored in the DRAM as well and transmitted back to earth during the windows.

Yeah! Super cool. Comparisons with a hard drive are all off base, then. That’s hilarious.

The thing has 68 GB of dedicated storage RAM drive. Take that, my desktop!

So what *is* the actual capacity of the SSR? JWST design documents all list 471 Gb (sometimes 470 Gb), which doesn’t equal 65 GB pretty much any way you slice it. The JWST user manual got (partially) updated with 65 GB capacity, but it still lists it as 58.8 GB (which is poorly-rounded 471 Gb) in a few places.
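For anyone who wants to check the "any way you slice it" claim, here is a quick sketch that converts 471 Gb to bytes under both decimal and binary readings of the prefixes. Which convention the design documents actually use is not stated, so all four combinations are shown; the variable names are just for illustration.

```python
# Convert the documented 471 Gb figure to gigabytes under each prefix convention.
# Which convention the JWST documents intend is unknown; all combinations are shown.
GIGA = 10**9   # decimal prefix (Gb, GB)
GIBI = 2**30   # binary prefix (Gib, GiB)
CAPACITY_GBITS = 471

conversions = {}
for bit_label, bit_unit in (("Gb", GIGA), ("Gib", GIBI)):
    total_bytes = CAPACITY_GBITS * bit_unit / 8   # 8 bits per byte
    for byte_label, byte_unit in (("GB", GIGA), ("GiB", GIBI)):
        conversions[f"471 {bit_label} in {byte_label}"] = total_bytes / byte_unit

for label, value in conversions.items():
    print(f"{label}: {value:.3f}")

largest = max(conversions.values())
```

The decimal-to-decimal reading gives 58.875, which truncates to the 58.8 figure, and even the most generous combination (binary gigabits read out as decimal gigabytes) lands a little over 63, still short of 65.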

So this part in the article “the extra capacity gives the system some breathing room as wear and radiation whittle away at the SSR’s flash memory over the next decade” is incorrect? That would be the first time writers here made a mistake :)

In fairness to the writers, if you follow the links you will find their source material is vague at best, contradictory at worst. Is it 58.8 GiB or 65 GiB?

I’ve got more questions than answers. Is it possible RAM is better than Optane technology for example? Is it kept cold so it doesn’t need refreshing as often? Does it have robust or redundant ECC?

That part comes from the IEEE interview with Alex Hunter, and I’d expect a flight engineer on the project to be a pretty reliable source.

Okay! That is a lot of DRAM to power and talk to! When I think of DRAM I think of DDR3 or 4, is this what you are using? This screams to me custom ASIC or very large FPGA/SoC.

It does seem like a lot, but this was designed probably a decade ago and space technology is always a fair bit behind what would be considered state-of-the-art consumer tech. It’s standard SDRAM, and the controller ASIC is probably running <100MHz (like I said, old tech a fair bit behind). You’d be surprised how little performance is required to power something as large as JWST, at least on the SSR side of things.

Ha, the SSR for JWST – at least one version of it, not sure if there were more – was actually *delivered* a decade ago, in 2012. So obviously designed even longer back than that.

Very informative article, thanks! Lots of stuff the average hacker (like you and me) wants to know, that hasn’t been put through the “marketing hazer” by people that say “noo co lar” instead of “new clear” 😀

Could they put a relay satellite close by so the telescope antenna wouldn’t need to be moved at all?

That will be 11 billion dollars

Waste of tax money, could have been spent on sustainable energy for humans on Earth.