Over the years, we’ve seen a good number of interfaces used for computer monitors, TVs, LCD panels and other all-things-display purposes. We’ve lived through VGA and the large variety of analog interfaces that preceded it, then DVI, HDMI, and at some point, we’ve started getting devices with DisplayPort support. So you might think it’s more of the same. However, I’d like to tell you that you probably should pay more attention to DisplayPort – it’s an interface powerful in a way that we haven’t seen before.

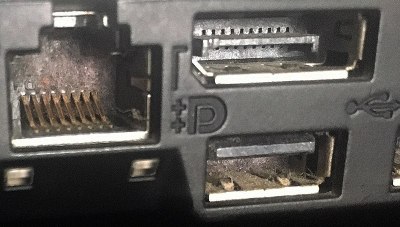

You could put it this way: DisplayPort has all the capabilities of interfaces like HDMI, but implemented in a better way, without legacy cruft, and with a number of features that take advantage of DisplayPort’s sturdier architecture. As a result, DisplayPort isn’t just in external monitors, but also in laptop internal displays, USB-C port display support, docking stations, and Thunderbolt of all flavors. If you own a display-capable docking station for your laptop, be it a classic-style multi-pin dock or USB-C, DisplayPort is highly likely to be involved, and even your smartphone might just support DisplayPort over USB-C these days.

Video Done Right

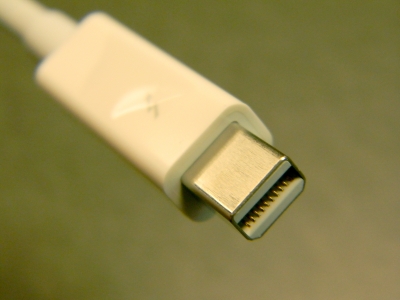

Just like most digital interfaces nowadays, DisplayPort sends its data in packets. This might sound like a reasonable expectation, but none of the other popular video-carrying interfaces use packets in a traditional sense. VGA, DVI, HDMI and laptop panel LVDS all work with a stream of pixels at a certain clock rate. HDMI is way more similar to VGA than it is to DP. With DisplayPort, the video is treated like any regular packetized data stream, making the interface all that much more flexible! Physically, desktop/laptop DisplayPort outputs come in two flavors: full-size DisplayPort, known for its latches that keep the cable in no matter what, and miniDisplayPort, which one would often encounter on MacBooks, ThinkPads, and port-space-constrained GPUs.

However, if you don’t need the full bandwidth that four lanes can give you, a DP link can consist of only one or two main lanes resulting in two and three diffpairs respectively, with less bandwidth but also less wiring. This is perfect for laptop and embedded applications, where DisplayPort has taken hold, and certainly beats HDMI’s “four diffpairs at all costs” requirement. The advantages don’t end here, but I’d like to note that DisplayPort is also way more hackable – of course, I’ll show you!
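To put rough numbers on the lane-count tradeoff, here is a small Python sketch. The per-lane link rates (1.62, 2.7, 5.4 and 8.1 Gbit/s) and the 8b/10b coding overhead are the commonly published DisplayPort 1.x figures; the function names and the 148.5 MHz pixel clock used for 1080p60 are illustrative assumptions, not something taken from this article, and the check ignores any protocol overhead beyond line coding.

```python
# Per-lane raw link rates in Gbit/s for the DisplayPort 1.x link modes.
LINK_RATES_GBPS = {"RBR": 1.62, "HBR": 2.7, "HBR2": 5.4, "HBR3": 8.1}

# 8b/10b line coding: every 8 payload bits cost 10 bits on the wire.
CODING_EFFICIENCY = 8 / 10


def diffpairs(lanes: int) -> int:
    """Differential pairs needed: the main-link lanes plus one AUX pair."""
    if lanes not in (1, 2, 4):
        raise ValueError("a DP main link has 1, 2 or 4 lanes")
    return lanes + 1


def payload_gbps(lanes: int, rate: str) -> float:
    """Usable main-link bandwidth after 8b/10b coding, in Gbit/s."""
    return lanes * LINK_RATES_GBPS[rate] * CODING_EFFICIENCY


def stream_gbps(pixel_clock_mhz: float, bits_per_pixel: int = 24) -> float:
    """Bandwidth of an uncompressed pixel stream, in Gbit/s."""
    return pixel_clock_mhz * 1e6 * bits_per_pixel / 1e9


def fits(pixel_clock_mhz: float, lanes: int, rate: str,
         bits_per_pixel: int = 24) -> bool:
    """Rough check: does the stream fit into the link payload bandwidth?"""
    return stream_gbps(pixel_clock_mhz, bits_per_pixel) <= payload_gbps(lanes, rate)


if __name__ == "__main__":
    for lanes in (1, 2, 4):
        print(f"{lanes} lane(s): {diffpairs(lanes)} diffpairs, "
              f"{payload_gbps(lanes, 'HBR'):.2f} Gbit/s at HBR, "
              f"1080p60 fits: {fits(148.5, lanes, 'HBR')}")
```

Under these assumptions, a 1080p60 stream at 24 bits per pixel does not fit into a single HBR lane but does fit into two, which is the kind of sizing decision a laptop or embedded designer gets to make.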

For more advantages, the DisplayPort sideband channel, AUX, is a great improvement over I2C, which we’ve been using as a sideband interface for VGA and HDMI. It’s a lower-speed bidirectional single-diffpair link that doesn’t just bring backwards compatibility with EDID (display identification) and DDC (display control), but is also used for DisplayPort link training communications, and gives developers control over a number of low-level aspects from the DP transmitter side. Audio, for its part, travels over the main link alongside the video, so AUX stays free for control traffic, and it’s future-proof. For instance, features like CEC can be implemented over the AUX layer, instead of the single-wire kludge that is HDMI CEC.
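As an illustration of the low-level information AUX exposes, the sketch below decodes the first three bytes of the DPCD, the register space a DP source reads over AUX before link training. The register layout used here (revision at address 0x000, maximum link rate at 0x001 in units of 0.27 Gbit/s, maximum lane count in the low five bits of 0x002) is my understanding of the DisplayPort 1.x layout as mirrored in the Linux kernel headers; the function name, the `SinkCaps` type and the sample bytes are made up for the example.

```python
from typing import NamedTuple


class SinkCaps(NamedTuple):
    dpcd_revision: str
    max_link_rate_gbps: float
    max_lanes: int


def decode_dpcd_caps(dpcd: bytes) -> SinkCaps:
    """Decode DPCD bytes 0x000..0x002 (assumed DisplayPort 1.x layout)."""
    if len(dpcd) < 3:
        raise ValueError("need at least the first three DPCD bytes")
    rev, rate_code, lane_byte = dpcd[0], dpcd[1], dpcd[2]
    # Revision: major version in the high nibble, minor in the low nibble.
    revision = f"{rev >> 4}.{rev & 0x0F}"
    # Link rate is stored as a multiple of 0.27 Gbit/s per lane.
    rate = round(rate_code * 0.27, 2)
    # Only the low five bits hold the lane count; upper bits are flags.
    lanes = lane_byte & 0x1F
    return SinkCaps(revision, rate, lanes)


if __name__ == "__main__":
    # Hypothetical sink: DPCD 1.2, HBR2 (5.4 Gbit/s per lane), four lanes.
    print(decode_dpcd_caps(bytes([0x12, 0x14, 0x84])))
```

On Linux systems built with the DP AUX character device, I believe the same bytes can be read from the start of a `/dev/drm_dp_auxN` node, though that depends on the kernel configuration and driver.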

All of these aspects have resulted in an interface that’s pretty friendly for modern-day tech. Thunderbolt has coexisted with DisplayPort in particular — TB1 and TB2 have been using miniDisplayPort connectors that you might’ve encountered on Apple laptops, bearing the lightning bolt symbol. When it comes to TB3 and TB4, those coexist with DisplayPort on USB-C connectors, and DisplayPort is a first-class guest in the Thunderbolt ecosystem, with DisplayPort tunneling being an omnipresent feature in Thunderbolt peripherals.

Embrace, Extend, Embed

DisplayPort isn’t just for consumer-use monitors, however – remember the flexibility in the number of lanes? This alone is a big win for DisplayPort when it comes to internal connectivity, and, considering the sheer number of features already making it embedding-friendly and low-power, the embedded DisplayPort standard (eDP) was developed, compatible with DP in almost every aspect. If you have a laptop or an iPad, it’s highly likely to be using eDP under the hood – in fact, the first time we mentioned eDP over on Hackaday was when someone reused high-res iPad displays by making a passthrough breakout board with a desktop DisplayPort socket. Yes, with DisplayPort, it’s this easy to reuse laptop and tablet displays – no converter chips, no bulky adapters, at most you will need a backlight driver.

eDP came in handy right as we started pushing against the limits of FPD-Link, which you might have heard called LVDS in the laptop panel space. Ever since the mid-2010s, you’ll be hard pressed to find any newly designed laptop that still has an LVDS panel, and nowadays it’s all eDP. The advantage is noticeable – what LVDS needs six or eight diffpairs for, eDP can do with two or three, letting designers use cheaper wiring and freeing up PCB space, and enabling extremely high resolution displays with just four pairs. Classic LVDS laptop designs aren’t required to wire up I2C for EDID purposes, whereas with eDP, the AUX channel provides EDID for free – which also means that your laptop might just work if you plug in a display with a higher or lower resolution than the factory one; something that isn’t a guarantee with LVDS.
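To show how little is needed to make use of that EDID data, here is a minimal Python sketch that validates a 128-byte EDID base block and pulls out the manufacturer ID, product code and version. The field offsets follow the EDID 1.3/1.4 base block layout as I understand it; the function name and the returned dictionary keys are assumptions made for the example.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def parse_edid_base(block: bytes) -> dict:
    """Validate an EDID base block and extract a few identification fields."""
    if len(block) < 128:
        raise ValueError("an EDID base block is 128 bytes")
    base = block[:128]
    if base[:8] != EDID_HEADER:
        raise ValueError("bad EDID header")
    # All 128 bytes, including the trailing checksum byte, must sum to 0 mod 256.
    if sum(base) % 256 != 0:
        raise ValueError("bad EDID checksum")
    # Manufacturer ID: 16 bits, big-endian, three 5-bit letters where 1 = 'A'.
    raw = (base[8] << 8) | base[9]
    vendor = "".join(
        chr(((raw >> shift) & 0x1F) + ord("A") - 1) for shift in (10, 5, 0)
    )
    # Product code is stored little-endian.
    product = base[10] | (base[11] << 8)
    return {
        "vendor": vendor,
        "product": product,
        "version": f"{base[18]}.{base[19]}",
        "extensions": base[126],
    }
```

On Linux, the raw EDID of a connected panel is typically readable from `/sys/class/drm/<connector>/edid`, so passing that file's contents to a function like this is one way to see what a salvaged laptop panel reports about itself.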

Oh, and all this flexibility didn’t just help with laptop displays – it also made DisplayPort a perfect match for the video backbone of USB-C, to the point where, if you see a USB-C dock with any kind of video output connector, you can basically be sure that the video output capability is powered by DisplayPort. We will talk about the DisplayPort altmode in way more depth in the coming weeks. Until then, I’ll note that all those strengths of DisplayPort undoubtedly contributed to the HDMI altmode dying out – especially the ability to run an extra USB3 link alongside DisplayPort through the same USB-C cable. I’d like to assure you, however, that the HDMI altmode dying out is a win for us all.

Plus, It’s Not HDMI

Another large advantage of DisplayPort is that it’s not HDMI. We’ve all gotten used to HDMI, but if you’ve been lurking in related tech discussions, you’ll know that HDMI has got a few nasty aspects to it. The reason is fundamental – HDMI is backed by media interests vested in things like home theater and TV, as opposed to the personal computing field where the VESA group comes from. The HDMI group claims to value interoperability when, say, protesting against the sale of certain products, but the recent HDMI 2.1 debacle has shown us that it isn’t truly a focus of theirs. My observation so far has been that the HDMI group is averse to competition, that marketing and branding considerations are significant driving forces in their decision-making, and that so is extracting per-device royalties from manufacturers – steep for players small and large alike. Bringing a good product appears to be secondary.

Neither the DP nor the HDMI standards are openly available – however, HDMI is restricted way more NDA-wise, to the point where AMD is unable to implement features like FreeSync and higher resolutions in their open-source Linux drivers specifically for HDMI; DisplayPort isn’t burdened to such an extent. When it comes to devices we buy, especially from a hacker viewpoint, certain HDMI certification requirements may bring us worse products when they’re included. A carefully shaped money-backed lever over the market is absolutely part of the reason you never see DisplayPort inputs on consumer TVs, where the HDMI group reigns supreme, even if the TV might use DisplayPort internally for the display panel connection.

Sure, the Raspberry Pi CM4 might have two HDMI links exposed, but if you aren’t careful while marketing your board or the product it goes in, you’re in legal limbo at best — DisplayPort has no such issue. That cool $10 open-source HDMI capture card we covered a few months ago? If you want to manufacture and sell them, you better talk to a competent lawyer first, and lawyers savvy in HDMI licensing issues aren’t quite commonplace – putting this idea out of reach for a hobbyist who just wants to order a few open-source boards and sell them to others.

If you see a DisplayPort interface on your board where you’d usually expect HDMI, that might just be because the company decided to avoid HDMI’s per-device royalties, and I do firmly believe that this is a good thing. Consider this – if your monitor has HDMI, but your GPU or laptop has DisplayPort, you’re way better off than you might expect, for a very interesting reason.

The Shapeshifting Interface

When it comes to DisplayPort connectors on our GPUs and laptops, there’s an even cooler thing you can do – switch it into HDMI compatibility mode, a feature called DP++. All you need is a dongle that has a logic level shifter inside so that the signals are re-shaped as needed, presents those signals on an HDMI connector, and shorts two pins on the DisplayPort connector, signaling the GPU to do a mode switch. Oh, and if you’re wondering how AUX could be compatible with I2C in case of DP++ – the GPU puts an I2C bus on the AUX pins instead. We buy these dongles as DisplayPort to HDMI converters, but in reality, the dongle doesn’t do much on its own, mostly telling the GPU to start outputting HDMI – as a side effect, it’s cheap, efficient and has low power consumption.

As you might see now, DisplayPort is a friendly interface, perhaps way friendlier than you might have expected! Since its introduction, Intel and AMD have made big bets on DisplayPort – and now, it’s basically a staple of portable devices, even if you don’t see a DisplayPort connector on the outside. Not even talking about eDP or the DP altmode – nowadays, even for a typical HDMI connector on your modern-day laptop, the backbone is DP, or a lower-level interface like Intel and AMD’s DDI interfaces tailored towards producing DP first and foremost, with HDMI being a bit of a second-class citizen. After all, DisplayPort has enough advantages to deserve such first-class treatment.

Now, these are mostly consumer-facing benefits of DisplayPort, but I hope that they can give you insights on what makes modern DisplayPort tick. Next time, I’d like to show you all the new things that a hacker can get out of DisplayPort – more in-depth about eDP aka embedded DisplayPort, a bit of insight into the AUX channel, designing our own PCBs with DisplayPort links, and some of the intricacies of cabling.

If you’re going to talk about the merits of the protocol then you probably shouldn’t use a pun on a saying used by a particularly large company famous for killing products after supposedly supporting them.

I don’t even know what you’re talking about, which means that whatever saying I accidentally made a pun on might’ve been psyop’d into my mind without my awareness. What’s the company, even? Google? Amazon? Samsung? 🥲

Guessing it’s the “Embrace, Extend, Embed” heading, which is similar to the “embrace, extend, extinguish” terminology used by Microsoft internally to describe their monopolistic practices.

ah, that? that was 100% intentional and I don’t see a problem. puns do work like that, last time I checked! also oh right how’d I forget stuff like Windows Phone and Skype, aye

[1]: https://en.wikipedia.org/wiki/Embrace,_extend,_and_extinguish

He’s talking about Microsoft’s infamous policy of embrace, extend, extinguish

HDMI vs DP like MCA vs EISA.

Or PCI vs VLB..

>DisplayPort sends its data in packets

>none of the other popular video-carrying interfaces use packets in a traditional sense

>video is treated like any regular packetized data stream

It’s not that simple. DisplayPort is packetized, but not in a 100% sane way. You would expect that the designers of a clean-slate, purely digital interface would at least optionally allow sending a whole screen update at a time. Alas, you are still sending the same VGA-formatted pixel stream (Hsync/Vsync/blanking), just chopped into TUs (32-64 symbol Transfer Units) with stuffing (aka never-used garbage) between them, even when connected to an eDP display supporting Panel Self-Refresh (its own frame buffer inside the display).

Are you sure that you aren’t mistaking DisplayPort for HDMI? I mean: in HDMI that’s the case: everything is sent, even the blanking intervals and hidden lines; but, according to this PDF, that’s not the case with DisplayPort, where only the useful data is sent, and those intervals can be used to send other data… https://www.vesa.org/wp-content/uploads/2011/01/ICCE-Presentation-on-VESA-DisplayPort.pdf

Hmmm… I like my data being sent over and over again simply because there are fewer things to go wrong. I like redundancy, especially in displayed info. Adding a form of compression only makes things worse: first you need to pack it, then you need to unpack it. If you have a cable that can handle the continuous load of the full 100% data traffic, why not use it? Fewer things can go wrong, it’s cheaper, and let’s be honest, if the costs are in the cable or the connector then there is something else seriously wrong.

I’ve been on this planet for almost 5 decades and I grew up with (according to modern standards) low resolution images (standard definition). Modern technology has given us much more resolution (but only as long as the image doesn’t change too much, which is a problem when the camera pans/tilts or things on the screen scroll or change too rapidly, or when somebody runs through the woods in an action flick; dark screens sometimes clearly show the lack of color depth: perfect contrast, sure, but now I can see the color compression). Also better sound (but it often happens that this “superior sound” is out of sync with the video, and no, this is not my TV set). Why is this even allowed to happen? Am I the only one who is bothered by this? Not to mention the screen lag, very annoying in gaming in various ways.

So please, do not do smart things when they are not needed, simply because there are just much more things that can go wrong and will go wrong and for what… a 20cent cheaper cable? More useless content over the same channel.

Personally, I think the 4K YouTube video craze is the perfect way to waste all sorts of resources, and for what? So that I can see the wrinkles on grandma’s face when she tumbles down the stairs because she didn’t see the cat sleeping on the second step from the top. Which then made a horrible noise, scared grandma who tumbled down, the cat jumped into the curtains, which tumbled down, but not before the cat tore half of them to pieces. Now this wouldn’t have been a problem if grandpa wasn’t closely behind grandma, with a dish full of the finest china. Luckily I was filming it all in glorious 4K, in vertical video mode, because I never learned the concept of filming, so everybody on YouTube can watch it on their 8K monitor, but with 2/3 of the screen blackened, since it is vertical video. Now this will take hours to load over the already too crowded WiFi connection, but that’s another story.

But in DisplayPort, the data IS sent over and over again. The only thing that isn’t sent is… the data that you don’t see! That is: the 20% of each line that is “blank” because the old TVs needed some time to move the cathode ray to the left to begin the next line, that’s sent in HDMI, but not in DP. Those extra lines at the top and bottom that you also don’t see, and that were added to allow the ray to go up again and to hide the fact that the picture in the old TV tubes shrinks with time, are also not sent in DP.

In other words: in DisplayPort only the picture itself is sent; in HDMI, the picture and all the quirks created in the 50s to “fix” the problems of vacuum tube electronics and cathode-ray tubes.
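For a sense of scale, the share of a classic raster that is blanking can be computed from the timing totals. The sketch below uses the standard CEA-861 1080p60 timing (2200 x 1125 total for 1920 x 1080 active); the function name is an assumption, and the result says nothing about how either interface actually encodes those intervals on the wire.

```python
def blanking_fraction(h_active: int, v_active: int,
                      h_total: int, v_total: int) -> float:
    """Fraction of a raster frame's pixel slots that carry no visible pixels."""
    if h_active > h_total or v_active > v_total:
        raise ValueError("active area cannot exceed the total raster")
    return 1 - (h_active * v_active) / (h_total * v_total)


if __name__ == "__main__":
    # CEA-861 1080p60: 1920x1080 visible inside a 2200x1125 raster.
    whole_frame = blanking_fraction(1920, 1080, 2200, 1125)
    # Horizontal blanking only: treat a single line as the whole raster.
    per_line = blanking_fraction(1920, 1, 2200, 1)
    print(f"blanking share of the frame: {whole_frame:.1%}")
    print(f"blanking share of each line: {per_line:.1%}")
```

With this particular timing, the per-line figure comes out noticeably below 20%, so the exact share depends heavily on which video mode is in use.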

> in DisplayPort only the picture itself is sent;

Sadly not so. What do you think “interspersed stuffing symbols” means? :)

Well, what I deduce from the document that I sent, is that “interspersed stuffing symbols” are packets inserted when there is no data to send during those blanking intervals, but they can be replaced by data packets like audio.

Those stuffing symbols are literally just there to maintain the clock between the two devices if the data is not changing.

Compare the two signals (in volts or logic-levels) and tell me what the clock is

110011001100110011

000000000000000

So the interstitial bit stuffing just allows you to send that second signal without loss of the clock. Keep in mind DP doesn’t have a dedicated clock pin. It relies on the changing of the data itself to maintain that clock. This is very common in serial busses.
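The point about clock recovery can be made concrete by counting edges in the two example signals above. This is only an illustrative sketch with made-up function names; real receivers recover the clock in hardware, and DisplayPort 1.x relies on 8b/10b line coding to keep runs of identical bits short.

```python
def transitions(bits: str) -> int:
    """Number of 0->1 or 1->0 edges a receiver could lock onto."""
    return sum(a != b for a, b in zip(bits, bits[1:]))


def longest_run(bits: str) -> int:
    """Longest stretch of identical bits, i.e. time spent without any edge."""
    longest = current = 0
    previous = None
    for bit in bits:
        current = current + 1 if bit == previous else 1
        longest = max(longest, current)
        previous = bit
    return longest


if __name__ == "__main__":
    for signal in ("110011001100110011", "000000000000000"):
        print(signal, "edges:", transitions(signal),
              "longest run:", longest_run(signal))
```

The first signal gives the receiver an edge every two bit times, while the second gives it none at all, which is why a link without a dedicated clock line cannot simply transmit long constant stretches as-is.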

Not entirely sure what you’re trying to say here.

Digital satellite TV generally has a bouquet of channels on a certain frequency/polarisation and it’s up to somebody to decide how to share that bandwidth between the channels. In the UK at least, the SD channels have been compressed to **** because it’s SD and nobody cares about quality, so current digital SD is indeed awful compared to analog SD.

As for Youtube, feel free to select a resolution that you’re comfortable with – if it’s a 4k video and you choose 480p then you’ll get a nice 480p, not a compressed to the edge of its life 480p.

Fully agree on vertical video though. Even worse when it’s displayed in a landscape format, with the black bars replaced by a stretched version of the video so as to make no black bars, but there’s no useful information contained where the black bars would have been so why not just keep the black bars and save bandwidth / energy – if it’s a vertical video then just keep it like that!

Sorry to tell you the 4K displays I see in people’s homes have the oversaturated showroom mode on as standard. And skin tones are reduced to ‘claymation’ levels. Facepalm.

I was (and still am) annoyed that despite data being continually sent during the blanking intervals, the HDMI organization chose not to send EIA 608 data (nor 708 nor teletext). I can almost buy into the reasoning that they use the vertical blanking interval to send other kinds of data, but for this inconvenient point: line 21 was chosen for Closed Captions specifically because it WAS in the visible portion of the frame.

>Are you sure that you aren’t mistaking DisplayPort with HDMI?

That appears to be exactly what rasz_pl has done, so the entire comment is invalid (unless we pivot to talking about HDMI).

ugh. is that true? is that why it still needs an aux lane, because even though it’s packeted, its primary lane is blocked by essentially timed traffic?

from the article, i was really envisioning something more like just a display-themed DMA engine. from my view, it seems reasonable to expect the display to have frame buffer ram and surplus too. i guess even in 2023 there is concern about how much of the logic to implement in the display?

DP graciously lets you send data in blanking periods. There are displays with their own framebuffer (eDP PSR), paradoxically standardized by the same VESA/DisplayPort people.

Great article, thanks! Especially appreciate the info on the ‘political’ stuff with hdmi

Thank you! Someone’s gotta have it compiled somewhere – I have a few more, that I’ll likely leave a reply comment about here later.

> A carefully shaped money-backed lever over the market is absolutely part of reason you never see DisplayPort inputs on consumer TVs, where HDMI group reigns supreme

What does that mean concretely?

They can give you discounts on licensing fees, etc. if you meet conditions, e.g. the fee is cheaper if you include the HDMI logo. I think it’s being implied that exclusion of DP is one of those conditions, though I don’t know where the “anti-competitive behavior” line is drawn.

Really like the deep dives Arya!

I have started mucking about with PCIe thanks to your previous articles and usb-c and displayport are next in my crosshairs to try and make an adapter board I have in my head. :)

Thank you! Well, you might be interested to hear that there’s more deep dives coming on PCIe, DisplayPort and USB-C – stay tuned! 🥰

Loved the PCIe series so far, and this is a great beginning with DP. Can’t wait to read more, thanks!

+1 on the deep dives!

As somebody that had to deal with HDMI internals, I can tell you that there are many other silly facts that are not mentioned. HDMI (non FRL) uses TMDS encoding that is not DC balanced, causing EMI. DP only was specified when the patent for 8B10B encoding was over 20 years old (same as 1GB Ethernet). HDMI had as an option 100Mbps Ethernet over auxiliary channels (not many products used that!). MHL is HDMI interleaved, placing three physical lanes into one interleaved lane (with clock as common mode!), used with USB micro connectors. China had their own standard DiiVa that went nowhere. HDMI (non FRL) is compatible with DVI – you can use a passive adapter. It is rare anybody buys a long DP cable, long HDMI cables are fitted into meeting rooms. PS3 was the first consumer device to include DeepColour, that is, more than 24 bits per pixel in RGB 4:4:4 – at the time display channels used a lot of dithering so they were more like a 15 bit per pixel display.

Gosh I hate not being able to buy TVs with Displayport. You can find them, rarely, usually marketed as being super-huge gaming monitors, but they’re rare.

I’ve only tried to work with HDMI in a technical capacity once, but once was enough, never again. Embedded DP and conversion to HDMI when absolutely necessary is the way to go.

It’s sad you can’t just buy a dumb large panel with a selection of inputs and no tuner. Display technologies age slowly, the ‘smart’ technologies evolve fast. Smart TVs don’t stay smart for long.

You mean…a monitor?

I’ve seen “tv sets” that, when you read the fine print, don’t receive over the air.

I had a monitor that could decode over the air antenna TV signals, and had ALL the ports. But it was of course marketed as a monitor, not as a TV..

Walmart.com

Sceptre house brand dumb 4K TVs.

The selection of inputs is HDMI and HDMI though.

Also has tuner.

Old US tariffs categorized a display with speakers as a TV. Without speakers, it was categorized as a monitor. There were different tariff rates (i.e. tax more or less at import time) for monitor vs TV. I don’t know if that is still true, but probably. Bicycles require pedals, which allowed a crop of mopeds with useless pedals to appear in the market to dodge tariffs for a few years (it was far easier to push mopeds than pedal them).

I used to do software and HDMI cert. There are a lot of problems with HDMI and it’s a great example of an inferior technology winning over the market. The ubiquity of HDMI is the real value add that overrides every technical flaw for consumers. Sadly it means adding features to HDMI is hard and at the whim of the HDMI Forum, Consumer Technology Association (was Consumer Electronics Association), and/or Digital Content Protection LLC. It’s a complicated dance and really involves main players pushing some technology through. Sadly I spent way too much time implementing, plugfesting, and certifying HDMI 3D for it to disappear from the market a year after I released my first product.

While eDP in embedded, just like MIPI, is definitely here to stay, I prefer raw RGB for short lengths, for the simple reason that displays tend to be a bit cheaper with RGB. After all, anything else requires a controller chip, which adds cost to your BOM.

This is a great glance into DP! Thank you for aggregating this info.

Your articles are always refreshingly upbeat, Arya! They are a reminder that new things are not always bad!

Having designed and shipped some niche products in the past which used HDMI, I was never bothered by the per-unit royalties. It was the annual HDMI and HDCP membership fees that were the killer on our projects.

Fantastic and insight laden post. I look forward to catching up with previous posts and keeping up with the future ones.

DP has always had a very basic issue in use for me, my graphics boards seem to suddenly stop communicating with my monitors over DP ports, whereas the HDMI ports seem consistently reliable, so I’ve formed a user bias.

The above has helped my bias no end. :o)

DP isn’t hot swappable, at least on the video cards and monitors I’ve had. Just turning off the monitor would “disconnect” the device.

This is bizarre. I’ve never seen (or heard of) any DisplayPort device which wasn’t hot-swappable.

I’ve seen both HDMI and DP behave both ways: turn the monitor off and it acts like it’s been unplugged. Or turn the monitor off and it acts like it is still plugged in. Both decisions suck, but in different ways. I don’t know which is better. If I had a choice, I actually want both. Monitor off, plug cable in, keep acting like it’s unplugged. Turn monitor on, perform hot plug, then keep acting like it’s plugged in, even after the monitor has turned back off. Note that different usb-c “docking stations” (monitor adapters) alter this behavior, so it’s not always the monitor. When I’m shopping for a new one, I buy one of several different brands, find the one that works the way I want, then buy 5 more to have my own supply over the next 5 years.

Sick VESA burn, calling EDID a small scale standard. Every display with VGA (for decades), or dvi, or HDMI, or dp, includes it… That said, EDID data is notoriously inaccurate: there’s not great authoring tools, and most vendors only check Windows apparently. But it’s way more widespread than any single connector.

Well… My understanding of this article is that DP is wonderful and HDMI is just a piece of s….

Ok, then why, on my two-monitor setup, when they go to sleep, does the HDMI one _really_ go dark while the DP one stays, well, “lit” (I mean the screen is black, but it is still lit, not totally off)?

But more importantly, when the computer resumes from sleep, the HDMI monitor simply “wakes up” while the DP (wonderful) monitor simply crashes. I mean, half the screen stays black while the other half flickers like crazy. The only way to get the screen back to normal is to disable that monitor and re-enable it.

Luckily I have two HDMI ports on that PC and will most definitely reinstall W11 using only those ports.

Apparently I can’t just switch cables because the BIOS (or Windows ?) “remembers” which one is 1 and the other is 2. Long story short, I have to reinstall the whole thing but I am procrastinating :(

Anyway, the standard may be great, HDMI may be a piece of crap and “the industry” can think whatever it wants, from a user’s standpoint, DP is totally useless if you’re dumb enough to have a computer that goes to sleep from time to time :(

Your computer or monitor has a serious issue, because none of that is normal. I’d bet a dollar your next monitor or next computer (maybe even just next GPU?) will have different behavior.

Well, the computer is new (a few months old) and his older brother had the exact same problem. (Compulab AirTop2 and now AirTop3 – https://fit-iot.com/web/products/airtop3/airtop3-specifications). The GPU is a GeForce 1660 TI and both monitors are 5 years old Samsung U24E590D… And I _always_ had that problem… Guess it’s the weather then ;)

Your particular tech support issues are not an indictment on one technology over another…put it this way: I once had a red car that had a faulty windshield wiper. Does that mean all red cars are junk, or that I had a problem with mine in particular?

However, if someone has a hundred cars and ten of them red, and all the red ones have faulty windshield wipers… either the red color is not from paint and the broken windshield wipers are collateral damage, or you might not be looking at a true coincidence….

I love when someone provides detailed information on an issue they’ve reproduced on two issues, and other people disregard it without providing any detailed information on their working configuration. Makes you wonder if they actually have a working configuration, or if they really just like the smell of someone else’s soup.

heh i am surprised you mentioned sleep mode behavior because i have come to believe that no one implements CEC? even my nVidia shield TV had a bug in its CEC that it took them several years to fix. it would put the tv to sleep after its 5 minute time out even if i had switched inputs to something else.

the thing that frustrates me about CEC is i have read the standard and worked with it and i think it is just an absolute darling of a protocol and everyone should be using it.

anyways, seems like DP has some room to improve!

Not a bug, it is a feature. It can turn itself off when you switch away, and can switch off the TV when it turns off. You do not want to have both those CEC features active at the same time.

no. a bug. CEC specifically sends a status report to the shield to say that it is no longer the foreground device. it should not be sending display power off commands while other devices have the user focus. nvidia agrees with me, they did eventually fix it.

this is caused by a bug in the way Windows multi-monitor support works. at a meeting at my prior job, I had a michaelsoft engineer tell me as much; wddm was never designed for multi-monitor support, and the fact it works at all is a minor miracle. it’s usually caused by mixing display technologies, or having different monitors on different GPUs, and I’ve run into it with DVI and HDMI displays, too.

As the article pointed out, every laptop made in the past 10 years uses DisplayPort internally. This is not some new and untested technology.

Which is more likely: you got a couple of bad samples, a third-party PC manufacturer screwed up its DisplayPort implementation, or nobody in the world has successfully woken their laptop from sleep since the Bush administration? I think if there was some inherent problem with DisplayPort and sleep modes, we would have found it by now.

SysATI isn’t discussing *inherent* problems, but rather *practical* ones.

people have worked out a lot of kinks already in the HDMI/DVI space, which remain to be worked out in DP because it hasn’t been as widely used. as Frank T Catte pointed out, it’s likely due to a bug in Windows support for multiple display standards on one computer. that’s not remotely an intrinsic flaw in DP, but it is definitely a practical concern of people buying their first DP hardware to introduce into a household that has already endured the cost of switching everything to HDMI or DVI.

the irony is, the problem may go away when all the HDMI hardware ages out, and your household becomes a single-display-standard environment again, even if that display is now DP.

>>All in all, if you have a DisplayPort output, you can easily get to HDMI; HDMI to DisplayPort isn’t as rosy.

I recently had to connect a desktop computer with DP output to a small DP monitor (USB-C connector) using an HDMI converter… I’m telling this story in the hopes someone will point out a simpler solution that I wasn’t smart enough to find.

They wanted a small folding monitor for a conference room table. The desktop had dual DP++ ports. We could not change the desktop (so adding a different video card was out of the question), but could do anything we wanted to as far as cabling and adapters went.

I searched and found the Dell C1422H 14″ folding display. It looked perfect. I figured all I would need is a DP (full size) to DP (USB-C) adapter and I could call it a day. I tried a few adapters and cables with no luck: even if the signals were being sent properly (I have no idea if they were), the monitor expected to be powered by the same USB-C port that the video signals were coming in on. The monitor has 2 USB-C ports (and no dedicated power port) so I tried hooking a USB-C power supply up to one of them with video going to the other. No good, it only detected the port with power.

I could not find a “regular” DisplayPort to USB-C cable that included power injection (though as I searched again while writing this I do think I see some now). The closest thing I could find was an HDMI to DP type C cable that included a USB power cable on the HDMI side. So the solution ended up being: (Computer) –> (DP++ to HDMI adapter) –> (HDMI + USB to DP type C cable) –> (monitor). Works great but I still feel stupid for doing it. On the other hand, maybe I didn’t go far enough… perhaps I should have tried to work DVI and VGA into the path?

I suspect that’s more to do with Dell not bothering to consider anything beyond their one specific use case (plugged straight into a USB-C DP output) and less to do with you wanting to do something unreasonable with your adapters. Because hey, if someone wanted to use ordinary DP outputs, they should just buy an ordinary Dell DP monitor, right?

It’s best to use a video card with USB-C output, but those can be expensive. The other route is to get a Dell PN2WG cheaply on eBay, preferably with a full-size bracket, but you can straighten out the small one.

Great stuff (I mean the article :).

I’m looking at a similar use case. An MSI laptop has dying USB ports (3.0 and Type-C) and an unused miniDisplayPort. Repair or any downtime is not possible at the moment. So I’m wondering if there is a way to use it to get an extra high-speed USB port to reliably connect USB devices? Some kind of miniDP to USB-C hub or converter (or at least USB-A at 3.0 speed).

It seems that even though DisplayPort has such great advantages and features, no hardware manufacturer implements them all by default; they only adopt the functions they think will be useful in their products (like only the basic function of video and sound transmission).

Does anyone know how to solve this? Or maybe there’s an easier way of connecting USB devices using the Ethernet port?

“We’ve lived through VGA and the large variety of analog interfaces that preceded it, then DVI, HDMI, and at some point, we’ve started getting devices with DisplayPort support.”

I didn’t. I still use VGA primarily. It’s open, compatible, has no copy protection. It’s timeless.

People in 1000 years could still understand it after a quick analysis.

If only we had moved on to digital RGBI with more intensity pins and had chosen a simple digital video port with TTL era tech.

Like digital EGA monitors had worked, but HD capable and with 65536 colours (or more).

Anyway, if I have learned one thing in IT, it is this: Stupidity wins.

“We’ve lived through VGA and the large variety of analog interfaces that preceded it”

Such as? MDA/Hercules, CGA, and EGA were digital. Quite low bitrate but still signals that were on or off.

Composite video was common on non-PC microcomputers and a small number could output video compatible with S-Video (Commodore 64) but without the 4 pin Mini DIN. European TI-99/4 and 4A output a sort of component video close enough for many TVs with component video in to work with.

But in PC clone land, other than the composite output on many CGA video cards it was all digital video signals prior to VGA.

VGA is not quite analog or digital. It makes a display produce a fixed color palette by shifting its red, green, and blue signals among a range of fixed voltage levels, with more colors requiring a higher number of levels.

Analog broadcast television uses the full peak to peak voltage range of its brightness and color signals, without discrete steps like VGA.

Drive a VGA monitor with 32bit video and it’s *close* to operating in a truly analog way but the signals still have discrete steps, there’s just more steps than with lower color depths.
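To put rough numbers on the "discrete steps" point above, here is a minimal sketch. It assumes the nominal 0.7 V full-scale swing per VGA color channel and compares a 6-bit-per-channel DAC (as on original VGA hardware) with an 8-bit-per-channel one (what "32bit video" typically drives, the extra 8 bits being padding or alpha). The function names are made up for illustration.

```python
# Nominal full-scale swing of each VGA color channel, in volts (assumed value).
FULL_SCALE_VOLTS = 0.7


def dac_levels(bits_per_channel: int) -> int:
    """Number of distinct voltage levels a DAC of this width can output."""
    return 2 ** bits_per_channel


def step_millivolts(bits_per_channel: int) -> float:
    """Voltage difference between two adjacent levels, in millivolts."""
    return FULL_SCALE_VOLTS / (dac_levels(bits_per_channel) - 1) * 1000


def palette_size(bits_per_channel: int) -> int:
    """Total colors available from three independent channels (R, G, B)."""
    return dac_levels(bits_per_channel) ** 3


if __name__ == "__main__":
    for bits in (6, 8):
        print(
            f"{bits} bits/channel: {dac_levels(bits)} levels, "
            f"{step_millivolts(bits):.2f} mV per step, "
            f"{palette_size(bits)} colors"
        )
```

Going from 6 to 8 bits per channel shrinks the step from roughly 11 mV to under 3 mV, so the signal on the wire looks closer to continuous, but the source still only ever emits one of a fixed set of levels.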

As an AV guy by trade I hate DisplayPort.

Agreed. It’s marginally better than HDMI, but as this article explains, that isn’t exactly a high bar! Neither of these holds a candle to SDI.

I’m supposed to be impressed you can send video over “just four pairs” of wires, for a couple of meters? With SDI, I can send UHD video over 100 meters with one coax line. And I’ll take the BNC locking mechanism over the tiny DP teeth any day of the week.

It seems to be a truism that the better the tech, the worse the marketing.

Really? And how much would a 24G SDI interface cost to implement? Far more than a multi lane interface like DP…

Bring back VGA analogue that FREAKING JUST WORKS!

Bloody crappy HDMI and DP only work every other Tuesday. Please tell me why DP works straight for six months, a year, two years, then one day just decides to not work. Please tell me why HP’s own DP ports, using HP-supplied DP cables into an HP monitor’s DP port, will work, then won’t work, then will work, then won’t work. GARBAGE technology.

Sure, if you have one computer and one monitor and one DP cable, ya know, fair enough, and it will probably just work for you. But try supporting 250 computers by yourself. DP just sux, along with HDMI. Their technology has a long way to go before being totally durable, idiot proof and bullet proof. Only had two DP issues today on two computers.

If I come across an older style HP laptop port replicator/hub, and if it has a VGA output on it for a second or third monitor, I’ll always instantly go and grab a VGA cable. VGA just works.

I’m pretty sure that it’s the HP displays that are crap and not an issue with DisplayPort. We got some new HP screens at the office and their firmware is horrifyingly bad. I never had a monitor *crash* before! Not even the power button would work anymore.

I am not fond of VGA. I remember screaming monitors after the computer was turned off; I’d walk around the office to turn them off too. Multisync was always broken, unsupported resolutions and refresh rates resulted in a blank screen (no message), and try configuring X11, where you had to specify the vertical and horizontal frequencies by hand?! Lastly, aligning an analog signal so all the pixels are on screen is super annoying, especially when you have to do that every time you plug a monitor in or switch inputs. No thank you, I will not go back to the dark ages.

This article is pretty one-sided and glosses over many important details:

1. HDMI is guaranteed to support HDCP in all “sink” devices (aka TVs and monitors), with the “HDMI” trademark used to control compliance, while DisplayPort isn’t. This is critical because set-top boxes will silently downscale to 480p or 720p if they discover your TV or monitor doesn’t support HDCP, and you’ll never know unless you run test patterns. This alone makes DisplayPort problematic in TVs since it’s hard to know that you don’t have a crippled HDCP-less port.

2. Converting DisplayPort to HDMI while maintaining HDR requires an active adapter, not a passive one.

3. HDMI has features like ARC and eARC that allow you to pass audio to a Dolby Atmos receiver/home theater system/soundbar; DisplayPort can’t do that at all.

Personally, I want HDMI ports on the TV (since the TV’s capabilities are built around the HDMI standard that it supports, so you aren’t gaining anything with DisplayPort), but a DisplayPort on an output device is always welcome (since I consider it a type of multiport) as long as an HDMI port also exists.

There is one exception though: Stereoscopic 3D. HDMI 1.2 supports only up to 1080p60, which means stereoscopic 3D only goes up to 1080p24 (not 1080p30 due to a blanking interval between the left and right eye frames which puts 1080p30 just above the data rate limit), and the HDMI people never got around to updating HDMI’s 3D mode. This means stereoscopic 3D gaming on 3D TVs is limited to 720p60. I have heard tales of tricking your GPU to think your TV is an Acer Predator monitor that supports an unofficial extension that allows for stereoscopic 3D 1080p60 and it might work with your TV, but that’s as far as I got.

A little bit of “create a problem and sell the solution” here with HDCP

Hollywood had made it clear they wouldn’t allow 1080p output of their content over an unencrypted digital cable (which can then be trivially recorded with a capture card), so the only other solution was Blu-Ray players embedded in TVs (think those TV/DVD-player combos, but with Blu-Ray-players). Even 1080p over component was transitional and eventually “sunsetted” (I am talking DRMed content obviously).

Of course, nothing prevents you from manufacturing a computer or set-top box that only outputs cleartext HDMI (the Raspberry Pi 4 comes to mind), but Hollywood won’t allow you to have licensed access to Blu-Ray playback or streaming services in 1080p resolution, which means you won’t be able to have such features as part of your product (and if you provide unlicensed access to those then you won’t be able to sell on mainstream marketplaces due to the DMCA’s “anti-circumvention provisions”).

Look, I hate DRM as much as the next person, but HDMI just keeps all this mess out of sight and out of mind by mandating HDCP support for TVs and monitors.

Grabbing video data off LVDS/eDP connection between LCD panel Scaler board and TCon is trivial.

No, it’s not. It involves cracking open your TV, finding the LVDS/eDP traces, tapping them (at the risk of damaging the TV), and then converting the signal to something your capture device can use. Doable? Probably. Trivial? Certainly not. What is trivial is plugging an HDMI cable into an HDMI capture card (or into the HDMI input that all Blu-Ray players would have if HDCP wasn’t a thing) and pressing “record”.

And you know what’s more trivial? Grabbing the content from the disc or streaming service using the right software (some software like AnyDVD HD is even zero-click, which means the DRM is removed transparently by a driver). But it doesn’t matter: Hollywood didn’t want their content going over an unencrypted digital cable such as DVI, so they got what they wanted (otherwise no soup, err… content for you). Don’t try to make sense of it; due to the DMCA’s “anti-circumvention provisions”, any terms they set for accessing their content are “word from God”.

What matters is that HDMI just keeps all this mess out of sight and out of mind by mandating HDCP support for TVs and monitors and hence allows me to go on with my life without constantly thinking about it.

Arya, you helped me out just in time! Thank you.

This sentence: “All you need is a dongle that has a logic level shifter inside so that the signals are re-shaped as needed, presents those signals on a HDMI connector, and shorts two pins on the DisplayPort connector, signaling the GPU to do a mode switch” just gave me an epiphany… You see, I had just bought a cheaper DP KVM switch, and attempted to use it with an old DVI monitor. I tried several well-working DP->HDMI->DVI adapters I had; even found a DP->DVI cable… No picture, but instead, weirdly, they all got warm, some more than others. Turns out, the KVM cooked them with a steady ~200 mA. I even looked inside, fully expecting to find a stupid solder bridge, but nothing. But now I tried a DP monitor, and what do you know, it just works… So my takeaway is: a) a DP-DVI converter is internally probably a DP-HDMI-DVI converter, or at least it depends on DP++ mode; b) my KVM is probably a little “unconventional”, since I would not expect full power on a signaling pair, but at least I don’t have to throw it out.

“More ubiquitous than you might realize.” And “they’re less ubiquitous.”

Good grief. A thing is ubiquitous, everywhere, or it’s not. It’s also a word that shouldn’t be used more than once in an article – let alone twice and misused.

“but none of the other popular video-carrying interfaces use packets in a traditional sense”.

MIPI DSI

HDMI is for TV, DisplayPort is for personal computing.

Are there DisplayPort-to-Aux adapters? What about DisplayPort-to-DVI or DisplayPort-to-VGA?