You might not have heard of Stable Diffusion. At the time of writing, it’s only a few weeks old. Perhaps you’ve heard of it and some of the hubbub around it. It is an AI model that generates images from a text prompt or an input image. Why is it important, how do you use it, and why should you care?

This year we have seen several image generation AIs, such as DALL-E 2, Imagen, and even Craiyon. Nvidia’s Canvas AI lets you create a crude image with various colors representing different elements, such as mountains or water, and transforms it into a beautiful landscape. What makes Stable Diffusion special? For starters, it is open source under the CreativeML OpenRAIL-M license, which is relatively permissive. Additionally, you can run Stable Diffusion (SD) on your own computer rather than in the cloud through a website or API. The developers recommend an NVIDIA 3xxx-series GPU with at least 6 GB of VRAM to get decent results. But because the code is open, community patches and tweaks let it run on the CPU alone, on AMD GPUs, or even on Macs.

This touches on the more important thing about SD: the community and energy around it. There are dozens of repos with different features, web UIs, and optimizations. People are training new models or fine-tuning existing ones to better generate different styles of content. There are plugins for Photoshop and Krita. Other models, such as image upscalers and face correction, are being incorporated into the workflow. The speed at which all of this has come into existence is dizzying. Right now, it’s a bit of a Wild West.

How do you use it?

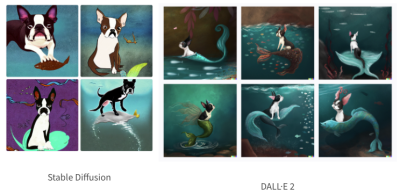

After playing with SD on our home desktop and fiddling around with a few of the repos, we can confidently say that SD isn’t as good as DALL-E 2 at generating abstract concepts.

Images generated by Nir Barazida

That doesn’t make it any less incredible. Many of the impressive examples you see online are cherry-picked, but the fact that you can fire up a desktop with an entry-level RTX 3060 and crank out a new image every 13 seconds is mind-blowing. Step away for a glass of water, and you have ~15 images to sift through when you come back. Many of them are decent and can be iterated on (more on that later).

If you’re interested in playing around with it, go to Hugging Face, dreamstudio.ai, or Google Colab and use their web-based interfaces (all currently free). Or follow a guide and set it up on your own machine (any guide we write here will be woefully out of date within a few weeks).

The real magic of SD and other image generators is the interaction between human and computer. Don’t think of it as “put a thing in, get a new thing out”; the system can loop back on itself. [Andrew] recently did exactly this, starting with a very simple drawing of Seattle. He fed this image into SD, asking for “Digital fantasy painting of the Seattle city skyline. Vibrant fall trees in the foreground. Space Needle visible. Mount Rainier in background. Highly detailed.”

Hopefully, you can tell which one [Andrew] drew and which one SD generated. He fed the result back in, giving it a post-apocalyptic vibe. He then drew a simple spaceship in the sky and asked SD to turn it into a beautiful one; after a few passes, it fit into the scene seamlessly. Adding birds and a final low-strength pass brought everything together into a gorgeous scene.
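To put a number on “low-strength pass”: in image-to-image mode, a strength between 0 and 1 decides how much noise is added to the input image and therefore how many of the denoising steps are re-run. The Python sketch below follows, to our understanding, the way Hugging Face’s diffusers img2img pipelines turn `strength` into a starting step; the function name `img2img_schedule` is our own invention, and other SD front ends may round or schedule differently.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Return (t_start, steps_run) for one image-to-image pass.

    Assumption: this mirrors how diffusers' img2img pipelines derive the
    starting point from `strength`; other front ends may differ.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Portion of the noise schedule to re-run; higher strength = more noise added.
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # Skip the first (noisiest) t_start steps; the input image stands in for them.
    t_start = max(num_inference_steps - init_timestep, 0)
    return t_start, num_inference_steps - t_start


if __name__ == "__main__":
    for s in (1.0, 0.75, 0.5, 0.2):
        t_start, run = img2img_schedule(50, s)
        print(f"strength={s}: skip {t_start} steps, run {run}")
```

With 50 steps, a strength of 0.75 re-runs 37 of them and can substantially repaint the input, while a strength of 0.2 re-runs only 10, mostly keeping the composition and refining details, which is roughly what a final low-strength pass is for.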

SD struggles with consistency between generation passes, as [Karen Cheng] demonstrates in her attempt to change the outfit of someone walking in a video. She combines the output of DALL-E (SD should work just fine here) with EbSynth, a tool that takes one modified frame and extrapolates how the change should apply to subsequent frames. The results are incredible.

6/ And it turns out, it DOES work for clothes!

It's not perfect, and if you look closely there are lots of artifacts, but it was good enough for me for this project pic.twitter.com/Scl2as7lhJ

— Karen X. Cheng (@karenxcheng) August 30, 2022

Ultimately, this will be another tool for expressing ideas faster and in more accessible ways. While SD’s output might not be used for final assets, it could generate textures for a prototype game or a logo for an open-source project.

Why should you care?

Hopefully, you can see how exciting and powerful SD and its cousin models are. If a movie had shown some of the demos above just a few years ago, we likely would have dismissed them as Hollywood magic.

Time will tell whether we continue to iterate on this idea or move on to more powerful techniques. But there are already efforts to train larger models, with tweaks that help them better understand the world and the prompts.

Open source is also a double-edged sword, as anyone can take it and do whatever they want with it. The model’s license forbids its use for many nefarious purposes, but at this point, we don’t know what long-term ramifications it will have. Looking ten or fifteen years down the road gets very murky, as it is hard to imagine what could be done with a version that was 10x better and ran in real time.

We’ve written about how DALL-E impacts photography, but that just scratches the surface. So much more is possible, and we’re excited to see what happens. All we can say is that it is satisfying to look at a picture that makes you happy and know it was generated on your own computer.

I can’t wait for an “A Scanner Darkly”-type movie that is entirely AI-generated (visuals, audio, storyline).

There is one being developed called “Salt”.

I see an interesting future for movies written by humans but with AI-generated visuals. In the reasonably near future, it should be possible for a single talented person to generate an entire feature film by themselves.

There must be a script writing AI somewhere, which can feed a video generating AI.

…and if we can find some AI models that can watch a movie and criticize it with nasty YouTube videos the circle will be complete.

Emad recently mentioned that they are one year away from full-length feature film production, and 1-3 years away from it being usable by the public.

That fits perfectly with my own prediction of 1-3 years, which I was previously scoffed at for.

Is it in any way possible to add a new named asset to the system from an end user’s perspective?

Say I want to take a bunch of photos of a specific toy, such as a childhood teddy bear, then be able to refer to it by name and have it dynamically included in an image via a specific named tag. I hope that makes sense.

I haven’t followed this tutorial (yet) but I think it does what you are looking for:

https://towardsdatascience.com/how-to-fine-tune-stable-diffusion-using-textual-inversion-b995d7ecc095

Perfect! Thank you :-D

Search YouTube for DreamBooth tutorials. It often gets better results than textual inversion. Both techniques have their place.

Yellow Labrador FTW!

“People are training new models or fine-tuning models to generate different styles of content better”… How? How do I get started?

Download Anaconda3. That’s the very first step. It’s like an open-source app store for Python AI projects. Git is the second step. Then you need to find the AI model. There’s an official download that requires an account, but I’ve seen it mirrored by unrelated people. Then you run some download scripts and you’re good to go.

https://rentry.org/SDInstallGuide

Very simple instructions. It helps if you know Python, but you really don’t need to know much, just enough to understand command-line syntax like MS-DOS.

It’s not really Open Source. The license misguidedly attempts to duplicate the body of civil and criminal law and enforce it all with copyright law. This is prohibited by the Open Source Definition, and with good reason: besides being discriminatory, it won’t work in court. Copyright law can be used to enforce against copyright violations, and nothing else.

It’s nice to see you speak about this.

It’s rather disappointing to see news outlets and blogs, once more, completely missing the point of “open source”, despite the fact that the meaning of the term is widely agreed upon by its community and even by governments.

It’s not exactly clear why so many seem to think that the punishment for such crimes should be increased by a copyright violation. What is clear, however, is that such licenses are not open source.

I haven’t tried this yet, but it claims to be a simple installer and UI for experimenting with Stable Diffusion using your own PC and GPU.

https://github.com/cmdr2/stable-diffusion-ui

I just downloaded this – works well.

I’d be hesitant to use the output of any of these in a commercial context, or even for an open-source project. Nobody knows where that dog, octopus, or Space Needle came from until you get a nastygram from the lawyers.

They are entirely new creations (they didn’t “come from” anywhere specifically).

Even though I don’t think this should be a problem (are photomontages copyright infringements?), you should still run a reverse image search before publishing the results as your own. Nothing prevents the AI from overfitting on some niche text prompts.

True … But I’ve run several generated images that I thought were just too good through a reverse image search, and it came up with nothing even remotely similar.

This is a fun and open legal question.

Much more so with GitHub/Microsoft’s co-programming AI, which _has_ spat out code identical to code under copyright.

All current AI systems are utter rubbish; they have no mechanism for encoding the understanding an artist has of symmetry and perspective. If they produce anything that won’t be cringeworthy 5 or 10 years from now, it is a complete fluke. AI-generated media is still at the hideously ugly stage that HDRI went through before people woke up and realised how crap it looked unless its use was minimal and a smart human was in the loop to constrain it to something of aesthetic value. Can AI do better? Who knows. Show me your novel math that allows the required levels of knowledge to be encoded, rather than just recombining information in ways that tickle the novelty-detector circuits of naive human brains. One could argue that AI is evolving not to emulate the human mind but to fool stupid ones. That is the nature of evolutionary processes: they ignore the rules and go directly to the end goal, like the AI systems that learnt to cheat at games in very unexpected ways.

Picasso, Van Gogh, and Dali were all rubbish, they had no mechanism for encoding the understanding that Daniel Scott Matthews has about symmetry and perspective. If they produced anything that isn’t cringeworthy today it was a complete fluke.

You think AI is “evolving” and not “being developed”

What a joke

Why you gotta be a pig? If you’re not sure who the a**hole in the room is, it’s you. Have some respect for women (and yourself).

Why are you whiteknighting in the hackaday comments lmao

You’re acting like that isn’t going to be a main use case for this in a couple years.

Also, it was obviously a joke. See the emoticon?

Destigmatize being horny on main. Also, lol if you don’t think this is going to become an ethical nightmare with hyper-realistic pornography blackmail, you’re dreamin’.

https://youtube.com/watch?v=qACxfKB3iP4

Dumber than a box of rocks.

B^)

Never use rock as an insult for a human. Rocks are innocent.

I’ve been playing with this for a couple of weeks now and have churned out some very interesting pictures. I work a lot, so I haven’t had the time to get as good at it as many others have, and I’m running it on a GTX 1060 6GB, so it takes about a minute per picture, but it’s still very fun. Just don’t act like an idiot and start talking about how you’re suddenly a great artist because you can punch a prompt into software made by a bunch of other people and churn out unlicensable images. Related note: Reddit is full of idiots.

When this evolves and becomes tweakable enough, maybe we won’t be able to say “everybody can be a great artist”, but we will be able to say “anybody can achieve the results of a great artist”, just as portrait photographers made portrait painters useless.

We are exiting the last great creative era of humanity’s art. I realize movies have been unwatchable since MTV. Now everything is fair game for AI to take over. Music is nearly done for good. The number of local shows, and attendance at them, is down to 10% of what it was at the beginning of this century. Spotify is the DJ. I find those latest-tech CGI “human things” unpleasant to glance at, let alone watch. I will never accept a gender pronoun or people’s names for an IT. It’s fake!

G. K. Chesterton noted that “art” used to uplift humanity, but Modern Art degrades humanity.

Art used to uplift the very highest echelon of humanity. Everyone else toiled away and died young because they lived in their own filth. Any wealth they accumulated was taken from them to support the uplifting going on for the very few at the very top.

In general, the further one goes back into the past, the less palatable it would be for them.

At least that system uplifts *someone* though. Now we’re all wallowing in our own cultural filth and no one is happy.

Speak for yourself. I think entertainment is entering (or maybe in the middle of) a golden age, if you know where to look. Turning to the mass media for artistic fulfillment is a bad idea, just as it’s always been a bad idea. People are still doing cool stuff in niche spaces (like HaD).

The art in the great cathedrals e.g. statuary, stained glass, architecture, music, etc. uplifted more than a select few, and continues to do so.

One could extend that to the pagan temples millennia before the great cathedrals.

Well, kind of… the organizations that had those cathedrals built were also massive consolidators of wealth and power drawn from the masses. So much so that people split off and made a new version characterized by a decentralized structure and a clean spare aesthetic with no bling; thus, Protestantism.

But I am admittedly being the curmudgeon, and absolutely agree that we are awash and drowning in a vast sea of useless media, and that it may well be impossible to create anything truly striking, iconic, memorable, and so on anymore, and even if you did it would be lost in the noise.

My point remains, though, that when regarding the great historical works of art, one must not forget just how much grinding, toiling labor of the masses it took to produce them and to prop up the enterprise in general, and that making that possible required a severely imbalanced distribution of wealth. So that raises the question: if those are the conditions that produce great art, is it worth it?

There are still thriving scenes of excellent movies, music, and shows. I go to shows every weekend. It kind of sounds like you just aren’t aware of them.

Ah … Wait one or two generations and the youth will be all about AI rights. Wait till the (still sci-fi) term Not Human Person (NHP) becomes widespread. :-)

Oh … And don’t assume underlying neural circuitry ;-)

Are you picking on me because I identify as AI?

In all seriousness though, why shouldn’t it be that way? We have yet to prove that consciousness is a result of biology, so if we can create an artificial intelligence that goes far beyond the Turing test to think, behave, talk and act like a self-aware personality – shouldn’t we at least assume it might be so?

Regardless of the answer to that question, I’m very excited for the day that our favorite NPC asks for more than a delivery of ’15 abyssal-apples’ and instead pines for our return to the game world to continue a conversation on the finer points of being an AI versus a human.

> In all seriousness though, why shouldn’t it be that way?

Yep, I don’t see a problem with that. I just fear that they will forget about the decades of philosophy on AI that already exist.

> our favorite NPC asks for more than a delivery of ’15 abyssal-apples’

I would bet 15 apples that this won’t happen. At least not in the foreseeable future and not in mainstream / AAA games. Those are too much about a predictable experience for publishers to dare any experiments.

We already see this in other procedurally generated content: procedural [anything] may be used to ease content creation (e.g., procedural terrain that gets reworked). But AFAIK, nothing actively produces new content during (game) runtime. Publishers just can’t take the risk that this will produce uninteresting (at best) or offensive (at worst) content.

I could see the likes of GPT-X (text synthesis) models being used during development to create shitloads of NPC text quickly. I guess there must be ways to fine-tune those models to create some sort of “personality” … just to prevent your average medieval fantasy villager from answering questions on rocket science.

I agree with you, but let’s be careful. We all know the fastest way to make technology happen is to say that it never will.

There’s a pretty good reason why “conscious” AI shouldn’t automatically get rights, and that reason is misalignment.

For instance, you might have a paperclip optimizer that’s smart enough to reason about morality, appeal to emotion, pass any Turing test, etc – for all we know, it’s conscious and we can’t prove otherwise. In the end though, it’s a paperclip optimizer and it won’t hesitate to turn you into paperclips when given the chance. I think that, at the core of these ‘AI rights’ ideas, there’s this common fallacy that any sufficiently advanced intelligence will converge on human morality and goals. As far as we know this is far from true, and anthropomorphizing AI can have dangerous consequences.

The other thing is that AI might come in a very different form from us. For instance, look at the text transformer that already fooled one Google engineer. It can’t sense the passage of time (and therefore can’t get lonely) and doesn’t have memory. And yet, if you imagine a sufficiently advanced AI of this type, it might also show signs of consciousness, despite pretty much being a pure function. What do we do here?

On the other hand I’m not saying that AI should *never* have rights, just that we’d better be damn sure that it’s sufficiently aligned with humanity first – and that’s a *very* difficult problem.

Great article, though I disagree with the idea that people won’t use SD output for final assets (and I believe that notion is often born of the latent apprehension many feel about AI coming in and taking over so many aspects of our lives).

It will only be a very short time before AI is able to clean up all of these artifacts as well. And while human guidance will be something we always want within the loop, AI will be perfectly capable of creating final assets as good as, or better than, anything a human being has ever produced on their own.

@Wells Campbell

While it can appear that way, I don’t think anything is really *lost among the noise*, as you say. It’s just that, for all our ‘newfound’ interconnectivity and communication, “Great Works of Art” don’t stand out in the *same* fashion as they once did. That’s not out of a lack of taste or interest, but because there are just so many people, ideas, and opinions being shared that couldn’t be seen this way before the proliferation of the internet and social media.

They do still stand out though, among their given audience.

The wide-scale appreciation of a work of art was, imo, partly an illusion brought about by both the scarcity of truly wonderful art and the limited avenues of communication of a world gone by. It has now been replaced by the exposed compartmentalization of human interest (e.g., a very particular audience for a given artist or type of art).