Few CPUs have had the long-lasting influence that the 8086 did. It is hard to believe that when your modern desktop computer boots, it probably thinks it is an 8086 from 1978 until some software gooses it into a more modern state. When [Ken] was examining an 8086 die, however, he noticed that part of the die didn’t look like the rest. Turns out, Intel had a bug in the original version of the 8086. In those days you couldn’t patch the microcode. It was more like a PC board — you had to change the layout and make a new one to fix it.

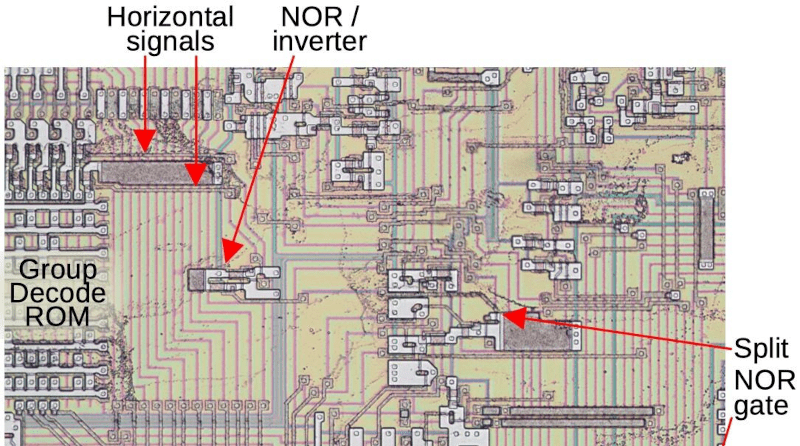

The affected area is the Group Decode ROM. The area is responsible for categorizing instructions based on the type of decoding they require. While it is marked as a ROM, it is more of a programmable logic array. The bug was pretty intense. If an interrupt followed either a MOV SS or POP SS instruction, havoc could ensue.

The bug was a simple mistake for a designer to make. Suppose you want to change the stack pointer register entirely. You have to load the stack segment register (SS) and the stack pointer (SP). The problem is, loading both of these isn’t an atomic operation. That is, it takes two different instructions, one for each register. If an interrupt occurs after loading SS but before loading SP, then the interrupt context will wind up at some incorrect memory location.

You could fix this in several ways. The way Intel did it was to make the two instructions that could modify SS hold off interrupts until the following instruction has executed. This would allow you to load SP before an interrupt could occur. Essentially, it makes the MOV SS or POP SS instructions protect the next instruction so you can code an atomic operation. The truth is, the “fix” stalls interrupts for any segment register load. [Ken] notes that this wasn’t necessary and later implementations on newer processors only stall the interrupt for SS.
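To make the failure and the fix concrete, here is a minimal Python sketch of the idea. It is not a model of the real 8086 circuitry: the function names, the starting stack values, and the two-instruction "program" are all made up for illustration. The only hardware fact it relies on is the real-mode address calculation (segment times 16 plus offset, wrapped to 20 bits).

```python
def physical(segment, offset):
    """Real-mode 8086 address: segment * 16 + offset, wrapped to 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


def stack_seen_by_interrupt(program, irq_after, delay_after_ss_load):
    """Run a list of (register, value) loads. An interrupt request arrives
    while instruction number `irq_after` executes. Return the (SS, SP)
    pair in effect when the interrupt is finally taken, or None."""
    regs = {"SS": 0x1000, "SP": 0x0100}  # old stack; values are made up
    pending = False
    for index, (reg, value) in enumerate(program):
        regs[reg] = value
        if index == irq_after:
            pending = True
        # Interrupts are taken between instructions. The modeled fix
        # skips that check right after an instruction that loaded SS.
        held_off = delay_after_ss_load and reg == "SS"
        if pending and not held_off:
            return regs["SS"], regs["SP"]
    return None


# Hypothetical stack switch: MOV SS, 2000h followed by MOV SP, 0200h.
switch_stack = [("SS", 0x2000), ("SP", 0x0200)]

buggy = stack_seen_by_interrupt(switch_stack, irq_after=0, delay_after_ss_load=False)
fixed = stack_seen_by_interrupt(switch_stack, irq_after=0, delay_after_ss_load=True)

print(hex(physical(*buggy)))  # 0x20100: new SS combined with the old SP
print(hex(physical(*fixed)))  # 0x20200: the intended new stack top
```

In the unfixed case the interrupt would push its context at an address that belongs to neither the old stack nor the new one, which is exactly the "incorrect memory location" described above.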

You might think you could have pushed this off to the programmer. You could, for example, insist that stack pointer changes occur with interrupts disabled. The problem is the 8086 has a nonmaskable interrupt that uses the stack, and software can’t stop it.

Failure analysis back in the 1970s and 1980s was fun. An optical microscope would do most of it. If you had a SEM with an EDS attachment, you could do nearly everything. An Auger and a SIMS would put you in the world-class lab status. These days, with device geometry several orders of magnitude smaller, dice upside down in the package, and dozens of layers, we aren’t sure how you’d do all this on a modern chip.

We love [Ken’s] CPU teardowns. He works on bigger things, too.

Don’t forget the missing opcodes in the original 6502. There were workarounds, but I’m not sure how many CPUs made it out.

I remember reading about that bug (fix). Intel reminded everyone to set SS first, then SP, so that an NMI wouldn’t be able to trash your stack because interrupts were delayed by one instruction when setting SS.

If only they’d provided an LSS instruction to mirror LDS and LES, the problem wouldn’t have arisen.

God bless the NEC V30.. 😇

Seriously, though. The 8086 was sorta okay.

It wasn’t crippled, at least, like another certain chip.

The STS used the 8086, too, I heard.

The 8086 is to the V30 what the 8080 is to the Z80, maybe.

It’s interesting to study or to look at, but it’s not something you’d want to ever work with anymore.

Both the Z80/V30 have a better architecture and an expanded instruction set.

In case of the V20/30 it’s the 80186 instruction set and the 8080 emulation mode (near useless in practice due to missing Z80 compatibility; merely used it to make a connection here to the 8080/Z80 references).

Go back to 1975, and the 8080 was fine.

You missed living through the time.

It always amazes me when people get down to a personal level when they don’t agree. 🙂

Okay. If the 8080 was fine, then why did its designers literally “escape” from Intel, found their own company straight away and create the Z80? And doesn’t this make the Z80 essentially the final, “finished” version of the 8080 and the 8080 itself an imperfect prototype/beta version?

Because Intel didn’t want to lower the cost of the 8080. The lead designer felt that it would be more useful if it was actually affordable, whereas Intel felt that it would piss off customers that work with them on minicomputer projects to sell a cheapo chip that makes their iron obsolete. Motorola went through something similar with the 6800.

So essentially, Zilog people believed in the 8080 and perfected it.

And Zilog, as a startup, was more user friendly / indirectly supported the young DIY scene, while intel had primarily money on the mind (despite being rather small and still flexible at the time)?

I’m not surprised that all those creative people or so called “computer enthusiasts” of the time departed from intel.

In the 70s, many of them were hippies still, I guess. So I assume empathy/antipathy wasn’t totally irrelevant to the tinkerers?

Personally, I wouldn’t want to have anything to do with a company like that – if I have the chance to/if I can afford it.

Especially in the early days, a dependable supplier was sought after, I assume. So developers used standard parts they knew were still available in the foreseeable future.

If I had lived in such an uncertain, changing period and had to build an 8-Bit system at the time, I would have used a serious Z80, too.

But only used the 8080 instruction set, if possible. Or had included two code paths for certain purposes. That way, 8080, Z80 or 8085 or compatible CPUs could be used if needed, to execute the software.

The V20 and V30 emulate the Z80. Plug one into an old PC, run the 22NICE emulator and it switches to Z80 mode to run CP/M. I had a V20 in a PCjr that would run CP/M faster than a Xerox 820-II, and faster than a 12 MHz 286 that software emulated the Z80 with 22NICE.

The V20 and V30 8088 and 8086 modes were faster than the genuine Intel and AMD copies.

> It is hard to believe that when your modern desktop computer boots, it probably thinks it is an 8086 from 1978 until some software gooses it into a more modern state.

In 2002 maybe but 2002 was 20 years ago.

Modern x86 CPUs still do use 8086 mode (“Real Address Mode” aka Real-Mode) after power-on. It’s necessary to communicate with PC/AT BIOS.

However, once CSM is finally removed from UEFI (thanks Intel! 😡), this 8086 compatibility will likely come to an end. Same goes for Option-ROMs, like VGA BIOS or VESA VBE BIOS.. 😔

I’m not absolutely sure this is still true.

For the longest time Windows Installer was Windows _3.x_ configured to run all hardware in compatibility mode.

A friend of mine got trapped in that part of MS. It was a nightmare.

I remember that Windows 9x/Me contained a minimal copy of Windows 3.1, stored in a mini.cab file.. It was used in the first half of the installation, if memory serves.

I’m not exactly sure in which mode that copy runs or ran, however.

Windows 3.1 has two kernels, krnl286.exe and krnl386.exe.

Krnl286 provides “Standard Mode” and uses the 80286’s 16-Bit Protected Mode.

Krnl386 provides “386 Enhanced-Mode” in addition, and uses 32-Bit Protected Mode.

Technically, Standard Mode and a VGA driver would have been enough to execute a graphical setup program.

My Visual Basic 3.0 programs ran on a 286/in Standard Mode, for example.

Here’s a thought experiment…

Load an entire segment with load stack segment register instructions and jump to it. You can’t ever get out.

Interrupts are delayed by one instruction which means that an interrupt could occur after the second stack segment load.

*compatibility mode*

I think the plural of die is also die?

Nope. At least not at every semi company I’ve ever worked at. You sometimes hear dies, but we always said dice.

I’m in the semi industry also and we usually say die, but everyone knows dice is technically correct. Common usage makes language weird.

It was very expensive to respin hardware back then. I saw boards with bug-fix PALs, dead-bugged with super glue and wire wrap. Yup, on production hardware.

I had a 6502 that wanted to be a 68000

I don’t think this is right, but if it is, “atomic operation” is a cool term!!! “The problem is, loading both of these isn’t an atomic operation. That is, it takes two different instructions, one for each register.”

An atomic operation is one that can’t be split further (back from the days when we couldn’t split the atom). So a single instruction that loaded a register pair would be said to be atomic. If you have to load each one separately, that isn’t atomic unless you do a hack like the Intel designers did and say “well, stop interrupts for one more cycle”.

A common place to see this is in test-and-set instructions made to create things like mutexes or semaphores. Your mutex is zero… you do a test and set…. it sets the flag to 1 and tells you if it was already 1 before you set it. Otherwise you have this:

while (flag != 0) { /* wait until the flag is clear */ }

// INTERRUPT OCCURS HERE: BAD NEWS, someone else can also see flag == 0

flag = 1;
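For comparison, here is a rough Python sketch of the test-and-set version. Python has no test-and-set instruction, so this stands in for it with a non-blocking `threading.Lock.acquire`, which does the "check and take" as one indivisible step. The class name `TestAndSetFlag` and the helper `run_workers` are invented for the example.

```python
import threading
import time


class TestAndSetFlag:
    """Stand-in for a hardware test-and-set flag (illustrative only)."""

    def __init__(self):
        self._lock = threading.Lock()

    def test_and_set(self):
        """Set the flag in one step; return True if it was already set."""
        return not self._lock.acquire(blocking=False)

    def clear(self):
        """Reset the flag to 0 so someone else can take it."""
        self._lock.release()


def run_workers(n_threads=4, n_iter=1000):
    """Increment a shared counter from several threads, guarded by the flag."""
    flag = TestAndSetFlag()
    total = [0]

    def worker():
        for _ in range(n_iter):
            while flag.test_and_set():  # already 1: somebody else holds it
                time.sleep(0)           # give other threads a chance to run
            total[0] += 1               # critical section
            flag.clear()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


print(run_workers())  # 4000: no increments are lost
```

Because the test and the set cannot be separated, there is no gap between them where an interrupt or another thread can sneak in.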

Thank you!! This is a term I’ve never heard before. By the way, Thank you!

Related to glitching.

A clock glitch is when you increase the clock speed for a specific clock (initially found by many reboots and waiting random # of clocks) in a boot sequence. e.g. when it tests a flag to conditionally branch (say after validating the firmware), you run the clock at 4x and it never branches.

Alternatively when it tries to load the Program counter as part of a jump, you glitch that.

Have to understand the microcode.

More than one way to drown a litter of kittens.

Very timing specific, defeated by random number of NOPs at startup. Which is defeated by finding where their ‘random’ # comes from.

Real fix is internal clock gen and cap for Vcc.

Another one that a fair number of people on Hackaday may use regularly: in an ATmega328P, like the one an Arduino UNO uses, Timer1 is a 16-bit timer whose registers are read and written as two separate bytes. Atmel did some fancy magic in the hardware to ensure that when you write to or read from those two bytes in the right order, you’ll always get the right data where it’s supposed to go, even if the count changes or an interrupt fires between the two accesses (as long as the interrupt handler doesn’t touch the timer’s 16-bit registers itself).
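A rough way to picture that hardware trick is a shared temporary byte: reading the low byte latches the high byte at the same moment, and writing the low byte commits a previously stored high byte along with it. The Python model below is a simplified sketch of that behavior, not real AVR code. The class `Timer16` and its method names are made up for illustration.

```python
class Timer16:
    """Simplified model of a 16-bit counter behind an 8-bit bus."""

    def __init__(self, count=0):
        self.count = count & 0xFFFF
        self.temp = 0  # the shared temporary high-byte latch

    def tick(self):
        self.count = (self.count + 1) & 0xFFFF

    def read_low(self):
        self.temp = self.count >> 8  # latch the high byte at the same moment
        return self.count & 0xFF

    def read_high(self):
        return self.temp             # returns the latched copy, not live data

    def write_high(self, value):
        self.temp = value & 0xFF     # held back until the low byte arrives

    def write_low(self, value):
        self.count = (self.temp << 8) | (value & 0xFF)  # both bytes at once


# Latched read: low byte first, then high byte, with a tick in between.
timer = Timer16(0x00FF)
low = timer.read_low()
timer.tick()                         # counter rolls over to 0x0100
latched = (timer.read_high() << 8) | low

# Naive read of the live counter, one byte at a time, for comparison.
naive_timer = Timer16(0x00FF)
naive_low = naive_timer.count & 0xFF
naive_timer.tick()
naive = ((naive_timer.count >> 8) << 8) | naive_low

print(hex(latched), hex(naive))      # 0xff 0x1ff
```

The naive read mixes the old low byte with the new high byte and returns a value the counter never held. The model also shows the catch: there is only one `temp`, so any other code that uses it between the two accesses would corrupt the result.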

Hm. Sentience itself can’t be scientifically proven, can it?

Same goes for decision making process, I suppose.

Does free will exist or is it just an illusion?

That we merely believe we are making decisions, I mean.

I prefer to think of it as anthropomorphism. Honestly, since I design CPUs, to me it is like they think. They just don’t think the way we do ;-)

Didn’t Cyrix have something like this? Interrupts are disabled whenever an XCHG is in the instruction pipeline.

I believe that would be “an ancient bug,” rather than “a ancient bug.”

Thanks for the bugfix!