It is interesting how, if you observe long enough, things tend to be cyclical. Back in the old days, some computers didn’t use binary; they used decimal. This was especially true of made-up educational computers like TUTAC or CARDIAC, but there was real decimal hardware out there, too. Then everyone decided that binary made much more sense, and now it’s very hard to find a computer that doesn’t use it.

But [Erik] has written a simulator, assembler, and debugger for Calcutron-33, a “decimal RISC” CPU. Why? The idea is to provide a teaching platform to explain assembly language concepts to people who might stumble on binary numbers. Once they understand Calcutron, they can move on to more conventional CPUs with some measure of confidence.

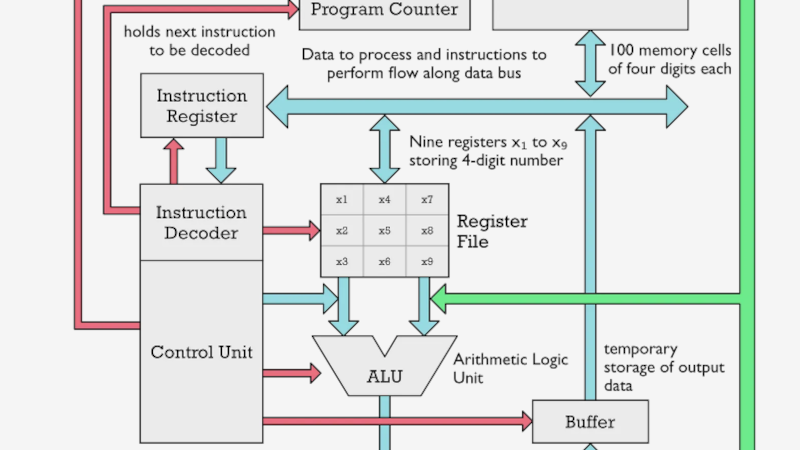

To that end, there are several articles covering the basic architecture, the instruction set, and how to write assembly for the machine. The CPU has much in common with modern microprocessors other than the use of decimal throughout.

There have been several versions of the virtual machine with various improvements and bug fixes. We’ll be honest: we admire the work and its scope. However, if you already know about binary, this might not be your best bet. What’s more, maybe you should understand binary before tackling assembly language programming, at least in modern times. Still, it does cover a lot of ground that applies regardless.

Made-up computers like TUTAC and CARDIAC were all the rage when computer time was too expensive to waste on mere students. There was also MIX from computer legend Donald Knuth.

The link to the Donald Knuth-related page is broken: there is extra stuff added at the end.

Perhaps if you can’t grasp binary maybe you shouldn’t be messing with assembly language. Though I’ll admit that I once had to debug a problem involving handing floating point numbers >= 1.0e9 from Fortran to assembler and then shipping them out as binary on a PDP11/23 parallel interface bus. Debugging code using large binary numbers proved to be a real PITA.

Exactly…

“Why? The idea is to provide a teaching platform to explain assembly language concepts to people who might stumble on binary numbers.”

If you can’t get binary numbers in the first place, you have no business trying to learn something like assembly language concepts – in any number base.

just some trivia to round it out…

there is a recent development, IEEE 754-2008. in addition to refining the regular “binary floating point” 32-bit (“float”) and 64-bit (“double”) to include 16-bit (“short float”??) and 128-bit (“long double”) types, it also adds a new set of “decimal floating point” types, in 32/64/128 bit sizes (“decimal32”, etc). the mantissa is encoded as a packed binary representation of a base 10 number, and the exponent is base 10 instead of base 2. i’m honestly not sure what the inspiration is, because the typical example (dollars and cents) really calls out for fixed-point math.
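To make the binary-versus-decimal floating point distinction concrete, here is a minimal sketch. It uses Python's standard `decimal` module purely as a stand-in for decimal floating point; that module is arbitrary-precision and is not the fixed-width decimal32/64/128 encoding described above, so treat it as an illustration of the arithmetic behavior only.

```python
from decimal import Decimal

# Binary floating point cannot represent 0.1 or 0.2 exactly,
# so their sum is not exactly the double closest to 0.3.
binary_sum = 0.1 + 0.2
binary_equal = (binary_sum == 0.3)

# Decimal floating point stores base-10 digits and a base-10 exponent,
# so the same sum is exact.
decimal_sum = Decimal("0.1") + Decimal("0.2")
decimal_equal = (decimal_sum == Decimal("0.3"))

print(binary_sum, binary_equal)    # 0.30000000000000004 False
print(decimal_sum, decimal_equal)  # 0.3 True
```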

our niche compiler of course supports all the variants (they’re even tested!) because it is a checklist item for us to be compatible with a competitor. i haven’t tested it much but i have the feeling that if you actually try to use decimal32 (or long double) with gcc or clang, you will run into rough edges. that’s the sign to me that no one is using it

That’s “BCD”, no? Perhaps for some sort of compatibility with ancient (perhaps IBM-mainframe-based) software?

From memory, calculators like the HP-41 had 64 bit internal registers, and used BCD. 4 bits mantissa sign, 12 digits of BCD mantissa, 4 bits exponent sign, 2 digits of BCD exponent.
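As a rough illustration of that kind of layout, the sketch below packs a number into a 64-bit word with one decimal digit per 4-bit nibble, using the field order recalled in the comment above (sign, 12 mantissa digits, sign, 2 exponent digits). The function names and the choice of 0 and 9 as sign markers are assumptions made for this example, not a description of any particular calculator's firmware.

```python
MANTISSA_DIGITS = 12
EXPONENT_DIGITS = 2
POSITIVE = 0x0  # assumed sign marker for this sketch
NEGATIVE = 0x9  # assumed sign marker for this sketch


def pack_bcd(mantissa, exponent):
    """Pack integer mantissa and exponent into 16 BCD nibbles (64 bits)."""
    nibbles = [NEGATIVE if mantissa < 0 else POSITIVE]
    nibbles += [int(d) for d in str(abs(mantissa)).zfill(MANTISSA_DIGITS)]
    nibbles.append(NEGATIVE if exponent < 0 else POSITIVE)
    nibbles += [int(d) for d in str(abs(exponent)).zfill(EXPONENT_DIGITS)]
    if len(nibbles) != 16:
        raise ValueError("mantissa or exponent has too many digits")
    word = 0
    for nibble in nibbles:
        word = (word << 4) | nibble
    return word


def unpack_bcd(word):
    """Reverse pack_bcd, returning (mantissa, exponent) as integers."""
    nibbles = [(word >> shift) & 0xF for shift in range(60, -4, -4)]
    mantissa = int("".join(str(n) for n in nibbles[1:13]))
    exponent = int("".join(str(n) for n in nibbles[14:16]))
    if nibbles[0] == NEGATIVE:
        mantissa = -mantissa
    if nibbles[13] == NEGATIVE:
        exponent = -exponent
    return mantissa, exponent


word = pack_bcd(314159265359, -2)
print(format(word, "016x"))  # 0314159265359902
```

One practical consequence of BCD shows up in the last line: a hex dump of the register reads directly as the decimal digits.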

It would be interesting to compare that to the IEEE standard.

The HP-41 with its Nut processor has 56-bit internal registers. The HP-71 with its Saturn processor has 64-bit internal registers, and was the first machine to adhere to the floating-point standard, before the standard was even officially adopted.

The first computer I worked on was a Burroughs medium system. It was a decimal machine. Its instruction set was also designed to run COBOL.

could you be a bit more specific?

i worked with a b-800 in high school. sadly, not much information about it floating around the net.

Here is a link to the wikipedia article about the systems.

https://en.wikipedia.org/wiki/Burroughs_Medium_Systems

Another link to an article by a person who actually did some of the design of these systems. Skip to the section “Third Generation”.

http://www.columbia.edu/cu/computinghistory/burroughs.html

The operating system on these systems was called the MCP. This was long before the movie TRON came out. A lot of the development staff took the afternoon off to go see TRON.

A link to some info on the B800

https://www.ricomputermuseum.org/collections-gallery/equipment/burroughs-b800

@Anachronda said: “could you be a bit more specific? i worked with a b-800 in high school. sadly, not much information about it floating around the net.”

* Burroughs B800

https://www.ricomputermuseum.org/collections-gallery/equipment/burroughs-b800

* Images Burroughs B800

https://duckduckgo.com/?t=ffab&q=Burroughs+b-800&iax=images&ia=images

* Index of /pdf/burroughs

http://www.bitsavers.org/pdf/burroughs/

+ Index of /pdf/burroughs/B800

1118452_B800_MCP_Dump_Analysis_Feb81.pdf, 2007-03-08 15:57, 2.2M

B700periphCtlrSchems.pdf, 2003-02-23 19:05, 29M

B800_CPUschematics.pdf, 2003-02-23 20:09, 23M

burroughsCassette.pdf, 2003-02-23 18:25, 2.6M

http://www.bitsavers.org/pdf/burroughs/B800/

* burroughs :: B800 :: B800 CPUschematics

https://archive.org/details/bitsavers_burroughsBs_24230302

The Burroughs (Unisys) Medium Systems computers were great to work with. They actually did all their math in decimal, and the instruction set was really easy to work with. Since it wasn’t constrained by hardware register size, most operators could handle operands of anywhere between 1 and 100 digits in length. (Operands could be either 4-bit digits, or 8-bit bytes, signed or unsigned.) The COBOL compiler worked extremely well with the instruction set, with a COBOL statement usually compiling to a single machine code instruction. While all math was done in decimal, the V Series successors to the B2x00/3x00/4x00 computers did implement Decimal-to-Binary and Binary-to-Decimal instructions to improve data interchange with the binary-based world. Code and data shared the same memory space, which had good and bad effects. On the one hand, you could have self-modifying code. On the other hand, you could inadvertently end up trying to execute a string of data if you branched incorrectly. Because all program addresses were relative to the task-specific Base and Limit registers, which were loaded each time a task got a processor time-slice, there was no risk of one task accessing the memory of another.
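Arithmetic that is not tied to a register width can be pictured as schoolbook addition over digit strings. The following is only a sketch of that idea in Python, with a hypothetical `add_decimal` helper working on unsigned operands; it is not the Burroughs instruction semantics, which are not spelled out here.

```python
def add_decimal(a_digits, b_digits):
    """Add two unsigned decimal operands given as digit lists, most significant first."""
    width = max(len(a_digits), len(b_digits))
    # Left-pad the shorter operand with zeros so the digits line up.
    a = [0] * (width - len(a_digits)) + list(a_digits)
    b = [0] * (width - len(b_digits)) + list(b_digits)

    result = []
    carry = 0
    # Walk from the least significant digit, carrying in base 10.
    for digit_a, digit_b in zip(reversed(a), reversed(b)):
        total = digit_a + digit_b + carry
        result.append(total % 10)
        carry = total // 10
    if carry:
        result.append(carry)
    return result[::-1]


print(add_decimal([9, 9, 9], [1]))  # [1, 0, 0, 0]
```

The same loop handles a 100-digit operand as easily as a 3-digit one, which is the point of not being bound to a register size.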

Binary is not hard to understand and it is essential for any digital system. Having a grasp of binary is needed for a lot of digital logic. Both binary numbers and binary logic. Binary is one of the simplest things you will need to understand when trying to understand and learn about how CPUs work. There is only so far you can get without understanding and using binary. How do you properly explain a bus without binary? Does something simple like a bus just become a black box that carries signals? You can’t explain how any of the components work without binary really. How do you explain splitting up busses? Do you just say, this part takes the numbers from 0 to 15 and this part takes the rest of the numbers?
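The bus-splitting question can be made concrete with a small sketch. The function names are made up for illustration. On a binary bus, "the numbers from 0 to 15" are simply the low four lines, peeled off with a mask and a shift; the nearest decimal equivalent splits off low digits with a division.

```python
def split_binary(value, low_bits=4):
    """Split a bus value into (high part, low part) by bit position."""
    low = value & ((1 << low_bits) - 1)  # low four lines carry 0..15
    high = value >> low_bits             # the remaining lines
    return high, low


def split_decimal(value, low_digits=1):
    """Split a decimal value into (high digits, low digits)."""
    high, low = divmod(value, 10 ** low_digits)
    return high, low


print(split_binary(0xA7))  # (10, 7)
print(split_decimal(237))  # (23, 7)
```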

You can maybe explain how a CPU works on a very high level but any digital system requires an understanding of binary so once you get down to talking about how any of it actually works you need to understand binary. Learning how it works in decimal and then trying to understand how it works in binary is probably harder than just learning it in binary in the first place, even from a high level.

I’m all for simplifying concepts, but a worthwhile simplification or abstraction needs to add something major or make things significantly easier, without many disadvantages. This doesn’t do any of that. A lot of abstractions are actually unnecessary and make things significantly worse; a good example of that is new HDLs or HDL alternatives.

“How do you properly explain a bus without binary?”

1:==Person under bus.

0:==Person NOT under bus.

Calcutron-33 author here. I think I should clarify that my intention was always to quickly move onto RISC-V after the basic concepts of a processor became clear. Indeed you need to learn binary sooner or later.

Having said that, my approach is by no means novel. It is an evolution of the Little Man Computer concept which has been quite popular. You can see it in several puzzle-like games built around this concept. My sons have tried these kinds of games and enjoyed them. If you want kids or teenagers to learn about processors it helps to lower the barrier.
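For readers who have not met the Little Man Computer, here is a minimal sketch of a simulator for its usual textbook instruction set (100 mailboxes, three-digit decimal instructions). This is the LMC, not the Calcutron-33 instruction set, and the handling of the negative flag is a simplifying assumption of this example.

```python
def run_lmc(program, inputs):
    """Run a Little Man Computer program and return the list of outputs."""
    memory = list(program) + [0] * (100 - len(program))
    pending = list(inputs)
    outputs = []
    accumulator = 0
    negative = False  # set when a subtraction goes below zero
    pc = 0
    while True:
        opcode, address = divmod(memory[pc], 100)
        pc += 1
        if opcode == 0:      # HLT
            break
        elif opcode == 1:    # ADD
            accumulator = (accumulator + memory[address]) % 1000
            negative = False
        elif opcode == 2:    # SUB
            difference = accumulator - memory[address]
            negative = difference < 0
            accumulator = difference % 1000
        elif opcode == 3:    # STA
            memory[address] = accumulator
        elif opcode == 5:    # LDA
            accumulator = memory[address]
            negative = False
        elif opcode == 6:    # BRA
            pc = address
        elif opcode == 7:    # BRZ
            if accumulator == 0 and not negative:
                pc = address
        elif opcode == 8:    # BRP
            if not negative:
                pc = address
        elif opcode == 9 and address == 1:  # INP
            accumulator = pending.pop(0)
            negative = False
        elif opcode == 9 and address == 2:  # OUT
            outputs.append(accumulator)
        else:
            raise ValueError(f"unknown instruction {memory[pc - 1]}")
    return outputs


# INP, STA 99, INP, ADD 99, OUT, HLT
print(run_lmc([901, 399, 901, 199, 902, 0], [12, 30]))  # [42]
```

Every opcode and address here is a plain decimal number, so a beginner can read a program listing without knowing hex or binary.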

Keep in mind that I wrote this to target both people who have not yet entered university as well as people with a casual interest in microprocessors. I have been writing about microprocessors now for several years and had numerous people give me feedback over the years. I think what would surprise a lot of us who are software developers or hardware guys by trade is just how many people are willing to read about this kind of geeky stuff.

I have had many financial analysts for instance read my articles about microprocessors, because they wanted to understand what Apple was doing with the M1. For that kind of crowd you cannot spend a lot of time on explaining binary number systems. It is doubtful that it will interest them. They are not interested in becoming professional software developers.

There are many ways of abstracting buses. For instance the LMC basically treats a bus as just a path for a guy to walk around with a box with a number. When we still had human computers, calculation tasks got split up and somebody had to create charts detailing how calculations and information flowed through what was essentially a human organization. That is the beauty of abstractions. Information flows can be anything from an electric cable to a person carrying sheets of paper with notes to a desk.

I first learned programming on an HP-25 programmable calculator. It was sort of like decimal assembler with a good math library linked in. Instructions like “ISZ and DSZ”, etc. were a lot like a DJNZ type assembly instruction. This got me up to speed programming 8085’s quickly. The binary/hex stuff was fairly easy later, but those machines taught me about initialization, branches, loops, flags, indirection, storage, error handling and general structure, which are essential concepts. I think the binary stuff creates a lot of confusion for a newcomer and is forcing him into unfamiliar territory immediately.

BTW, as mentioned above, the HP Calcs up through the HP-48 used a 56 bit BCD architecture, 10m,4e with signs stuffed in cracks; this included the 41. The HP-71 and HP-48, released around 1990, used a new processor, the Saturn, which extended this to 64 bits, 12m,4e,2s. Though these HP calculators still exist, as of a few years ago, the old processors are emulated on modern low power ARM processors rather than HP custom silicon.

Great project.

I had a similar experience, starting with the TI-58c calculator. In program mode, its non-alpha-capable display showed the address and the instruction number given as key row and column. Everything was decimal. It was very much like machine language.

http://wilsonminesco.com/AssyDefense/#calc

Later I got into the HP-41 (for its HP-IL) and then the 71. The 71 came out in ’83.

I can’t believe no one mentioned Motorola’s MC14560/MC14561 BCD math duo. That came out in the mid 70s (and who did they make it for?). Also, no mention of Intel’s old (& new?) math coprocessors that did BCD floating point in addition to binary. Why was it so important? Someone above mentioned COBOL. Well, lots of big financial companies refused to use anything but BCD since they never trusted rounding and guard bits. Why use binary when it cannot express integers? See: https://www.youtube.com/watch?v=WJgLKO-qac0