Many AI systems require huge training datasets in order to achieve their impressive feats. This applies whether you’re talking about an AI that works with images, natural language, or just about anything else. AI developers are starting to come under scrutiny over where they’re sourcing their datasets. Unsurprisingly, stock photo site Getty Images is at the forefront of this, and is now suing the creators of Stable Diffusion over the matter, as reported by The Verge.

Stability AI, the company behind Stable Diffusion, is the target of the lawsuit for one good reason: there’s compelling evidence the company used Getty Images content without permission. The Stable Diffusion AI has been seen to generate output images that actually include blurry approximations of the Getty Images watermark. This is somewhat of a smoking gun to suggest that Stability AI may have scraped Getty Images content for use as training material.

The copyright implications are unclear, but using any imagery from a stock photo database without permission is always asking for trouble. Various arguments will likely play out in court. Stability AI may claim that its activity falls under fair use guidelines, while Getty Images may claim that the appearance of distorted versions of its watermark breaks trademark rules. The lawsuit could have serious implications for AI image generators worldwide, and is sure to be watched closely by the nascent AI industry. As with any legal matter, just don’t expect a quick answer from the courts.

[Thanks to Dan for the tip!]

Ask for trouble and sometimes you get it.

FAFO, as experienced by crews of Russian T-72 and PT-91 tanks who learned the hard way that it’s not very wise to sit on a few hundred kilos of explosives placed under the turret.

Oh Getty images.

Nothing to love.

https://www.latimes.com/business/hiltzik/la-fi-hiltzik-getty-copyright-20160729-snap-story.html

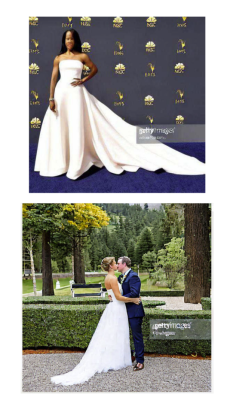

The arms and legs of the soccer player are freaky

And the bride is eating the groom’s nose

That was in the prompt.

Meat!…

At least he got 5 fingers!

Fair use is one thing; however, when they are profiting from others’ copyrighted work, that will get them into trouble. I have been waiting for someone with $$$ to get into this game against these types of generators, and even on the coding side. How exactly can you take GPL code, repackage it in a slick wrapper, and charge a monthly fee for it? It will be fun to watch how this plays out.

There is nothing in the GPL preventing that, you seem to be conflating free (as in freedom) with free (of charge), a common mistake, and the reason why the L in FLOSS comes from Spanish.

I’ll give you that with the GPL, as I’m not a lawyer, but there is far too much copyrighted material that these programs are just spitting right back out and charging for. They are using licensed material one way or another, taking it as their own at that point, and charging for it, be it pictures or something else. Again, this is going to be interesting in the long run.

Stable Diffusion isn’t charging for anything. I downloaded the files from the relevant websites and I’m using it completely free of charge on my laptop. I can even train it with my own images.

A lot of people with strong opinions, who have no clue about how any of this works.

Once again copyright interferes with technological progress. Not surprised.

This is actually good for technological progress since too many of these deep learning models are just copying and internalizing huge blocks of intellectual property and then obscuring it. This is a great example of how the AI fails, since, if you asked an artist to draw a picture of something, you wouldn’t want them to include a random watermark in it. So, this highlights a huge failure of current AI/DL which hopefully will be solved as a result of this.

From what I can make out, the de-noising algorithm can in principle reproduce the original images, and it sometimes does. The output usually looks like a badly compressed JPEG, but sometimes there will be exact renderings of the original images in it. The algorithm does contain a copy of the original images in a sense – it has “compressed” them with a bunch of other images to a common representation.

That makes trouble in terms of copyright, in the same sense as copying a ZIP file containing a movie is still piracy. Even if the file is encrypted, the right key can get it back out and you can reproduce a copy with it.

If you have an algorithm that IS fundamentally built to reproduce copies of images, the fact that the output is a scramble of a million different images doesn’t change the point: it is still violating copyright.

Proof:

https://arxiv.org/abs/2301.13188

“In this work, we show that diffusion models memorize individual images from their training data and emit them at generation time. With a generate-and-filter pipeline, we extract over a thousand training examples from state-of-the-art models, ranging from photographs of individual people to trademarked company logos.”

You might want to include the percentage of images that this happens to, because it’s a very small percentage of images and is caused by duplicate images in the initial dataset.

You also might want to include the conflict of interest statement from the end of the article that identifies the authors as having a vested interest in Stable Diffusion being maligned in the media due to them working for the commercial competition.

How is that any different from what every creator in any field does, which is to look at many examples of extant works? Every genre or style of work in any artistic field comes about by people looking at works in the field and consciously copying stylistic elements. Some works are even built from direct copies of things, such as the works of Andy Warhol.

People don’t just replicate/copy existing work essentially pixel by pixel. Even if you take an example or a reference, you have to deconstruct it in terms of geometry to then be able to re-draw the thing. Of course there are people who do trace other people’s stuff, but that is generally considered cheating and bad value.

What the computer is doing: it’s taking pieces of images and copying them, blending them together as-is without any “personal” input. The computer is making a collage of existing works through clever effects, which for any human artist would be considered cheating if they didn’t disclose their sources.

>Some works are even built from direct copies of things, such as the works of Andy Warhol

Except that was the whole point of the piece: Warhol wanted to be an “art factory”, a machine that repeats images. In any other context, we would consider silk prints of an image to be just copies and not art.

I would like to know the prompts put in stable diffusion to get those a.i. generated images.

I would also like to know why Stability AI trained on datasets with Getty images.

There is no provision in the GPL that forbids selling the software. The big deal is that you have to provide the source code when requested under the GPL. GPL v1 and v2 are old enough that selling tapes or CDs with the software was the only feasible way of distribution. Software as a service had also not been “invented” yet, so they have no provisions for that. I am not read up on GPL v3 and what it says, but I doubt that it forbids selling.

Also relevant in this case is that Getty have licensed their library to train other AIs.

The implications for AIs which write code (which appear to be trained on a mix of OSS projects and variable-quality Stack Exchange answers) will be interesting.

Hahaha, just yesterday I was commenting on ChatGPT’s code generation. The biggest difference between ChatGPT’s code and a software developer’s code is that the developer has no clue anymore where he picked up the knowledge and examples that help him develop his software, and *if* he knows, generally he will attribute his code because he knows we developers are all in the same boat.

While ChatGPT knows very damn well from whom it stole every line of code, and doesn’t attribute sh*t to anyone except its creators.

It’s basically stealing copyrighted stuff left and right.

I suspect it’s breaking close to 100% of open source licenses. Even if only because it takes code from everywhere, publishes it in modified form, and doesn’t contribute to improving the original where it took the snippets from.

Basically, ChatGPT is standing on the shoulders of giants. However, it’s disrespectful to not give those giants their due attribution.

It seems that the AIs are mainly so effective because they have no concept of respect and feel no remorse for stealing other people’s work.

One could argue that if an AI can learn to create complex images, complex dissertations, and complex code, it should also be possible for it to learn ‘respect’. And it seems to me that AIs can already learn that now, unless I myself misunderstand the concept of ‘respect’.

And following along that line, one could argue that any AI that does not properly understand the concept of ‘respect’ should be illegal and be forbidden. And I am not talking only about ‘respect’ for what humans produced, but also about ‘respect’ for what other AIs produced.

Before they can run, AIs need to learn to walk… Respect is one of the first things any human, or even animal, child learns. It should also be one of the first things any AI learns.

The problem is that “AIs” aren’t artificial intelligences. They are random number generators followed by some rules for picking data, with said rules being picked out of the training data. They do NOT understand anything. Not the content of the things they analyze, not the meaning of the words they spew out, and most certainly not the concept of “respect.”

The folks who write the programs understand those things. They appear to disregard them, however.

The courts will reacquaint the programmers and the companies with the concepts of “respect” and “copyright” – possibly along with “fines” and “jail time.”

Oh, that’s so much crap. Yeah it does spit out very small snippets unedited sometimes but I’ve asked it to create completely custom snippets for unique tasks and follow up conversations have edited, pruned and shaped that code to do additional things.

Do people leave a comment crediting the SO OP when pasting a 2-3 line snippet for something simple into code they’re working on? No. They don’t.

Getty Images has to be stopped. You realize they are vultures? They buy every image they can get their hands on and have actually created nothing. Look up the lawsuits against the ACTUAL photographers of the pics. They donate their work, Getty buys them and then sues the original photographers for trying to use them.

My friend is the mother of the late child star Corey Haim. She was threatened by Getty because a pic she owns of him was used in a charity event. She can’t use her own pic because these scumbags bought it and registered it.

Getty needs to be stopped

If the photographer sold the picture to Getty they own it. Your friend can’t own the same picture, it is impossible for two people to own the same thing.

You’d think an AI system would lend itself perfectly for recognition and removal of watermarks, but instead it adds them? Pfft.

I think the football player should sue Getty for using their images…

I mean, Getty has been caught multiple times either selling images it doesn’t have the rights to, or selling public domain images and then suing other people for using them without paying Getty. They are not a company that should be trusted on what they claim ownership of.

Right? Who gave Getty the right to every image? I’ve had them try to slap that dang watermark on some of my stuff before.

“I’m interested in plagiarism as an art form.”

Unironically yes. Originality is not a virtue.

All thought is plagiarism; to outlaw plagiarism is to outlaw thought. Even the greatest geniuses are merely plagiarizing God. The tricky part is to place the legal definition in the right spot. AI remixes data pretty close to how our minds do it (well at least relative to simply stealing an entire paragraph verbatim).

Getty has big muscles, let’s see what they do. They managed to get the “view image” button removed from Google Images.

…Those absolute twats

Part of the determination as to whether an image is an algorithmic modification of another image or an entirely new image is whether there is “creative intent”. This raises the question as to whether or not AI can have “creative intent”.

But AI is merely a tool, does the person describing the scene they want to see not count as providing “creative intent”? These AI tools are not actually intelligent, they are just weights and algorithms.

Copyright law needs reform. I’m an artist, but I saw this coming decades ago and decided to keep art as a hobby and learn to code instead. I want generative AI to take away all the drudge work, and I will gladly use it as much as I can to save time and effort.

Artists never get paid well for their works unless they are well-connected, and ruling in favor of Getty won’t change that. Sharing is caring, and those that don’t like to share are going to be the real losers in the coming AI races.

I prefer to download AI generated images from aifreeart.com, and it’s free, so…