In the rare case we listen to an audio CD these days, we typically rely on off-the-shelf hardware to decode the 1s and 0s into the dulcet tones of Weird Al Yankovic for our listening pleasure. [Lukas], however, was recently inspired to try decoding the pits and lands of a CD into audio for himself.

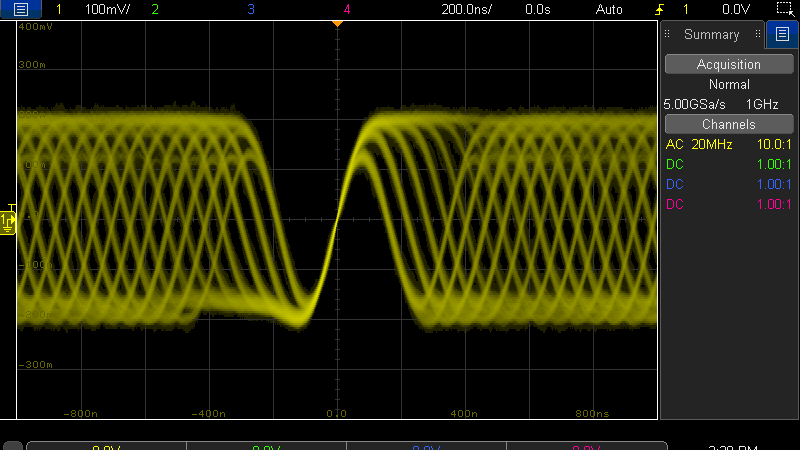

[Lukas] did the smart thing and headed straight to the official Red Book audio CD standard documents, freely available on archive.org. That’s a heck of a lot cheaper than the €345 some publishers want to charge. Not wanting to use a microscope to read the individual pits and lands of the disc, [Lukas] used a DVD player instead, capturing the electrical signals from its optical pickup with an oscilloscope. Four megasamples of the output were taken at 20 megasamples per second, and the data was then moved over to a PC for further analysis in Python.
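For a sense of scale, here is the capture arithmetic as a quick Python sketch. It assumes "4 megasamples" means 4,000,000 samples and uses the standard CD channel bit rate of 4.3218 Mbit/s at 1x. The article doesn't say how fast the DVD player spun the disc, so `speed` is a parameter here, not a known value.

```python
# Nominal CD channel bit rate at 1x: 588 channel bits per frame x 7350 frames/s.
CD_CHANNEL_BIT_RATE = 4_321_800  # bits per second


def capture_stats(n_samples, sample_rate, speed=1.0):
    """Return (capture duration in seconds, oscilloscope samples per channel bit T).

    `speed` is the disc speed multiple (1.0 = normal audio playback). It is an
    assumption, since the actual speed of the player isn't stated.
    """
    duration = n_samples / sample_rate
    samples_per_t = sample_rate / (CD_CHANNEL_BIT_RATE * speed)
    return duration, samples_per_t


# Assumed: 4 megasamples = 4,000,000 samples, captured at 20 MS/s.
duration, spt = capture_stats(4_000_000, 20_000_000)
print(f"{duration:.2f} s of signal, {spt:.2f} samples per T at 1x")
```

At 1x that works out to 0.2 s of signal, roughly 1470 EFM frames (0.2 s × 7350 frames/s), with only about 4.6 samples per channel bit. That is why the bit clock has to be recovered in software rather than read straight off the sample grid.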

[Lukas] steps us through the methodology of turning this raw data of pits and lands into real audio. It’s a lot of work, and there are some confusing missteps thanks to the DVD player’s quirks. However, [Lukas] gets there in the end and shows that he really understands how Red Book audio works.
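The first step of that process, turning an analogue pickup signal into channel bits, can be sketched roughly as follows. This is not [Lukas]’s code. It assumes an already AC-coupled signal, slices it at its mean, and uses a fixed, known number of samples per channel bit T instead of proper clock recovery. On a CD every pit or land is 3T to 11T long, and each pit/land edge is written as a 1 followed by zeros (NRZI), so a run of nT becomes "1" plus n-1 zeros.

```python
def slice_to_runs(samples, threshold=None):
    """Binarize the pickup signal and return the length, in samples, of each run."""
    if threshold is None:
        threshold = sum(samples) / len(samples)  # assumed: slice at the mean
    levels = [s > threshold for s in samples]
    runs = []
    count = 1
    for prev, cur in zip(levels, levels[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append(count)  # level changed: a pit/land edge
            count = 1
    runs.append(count)
    return runs


def runs_to_channel_bits(runs, samples_per_t):
    """Quantize each run to n channel bits T and emit NRZI-style channel bits.

    A pit/land edge is a '1', so a run of nT becomes '1' followed by n-1 zeros.
    Valid CD runs are 3T..11T; anything else points to noise or a bad T estimate.
    """
    bits = []
    for run in runs:
        n = max(1, round(run / samples_per_t))
        bits.append("1" + "0" * (n - 1))
    return "".join(bits)


# Synthetic signal: a 3T land, 4T pit and 11T land at exactly 5 samples per T.
signal = [1.0] * 15 + [-1.0] * 20 + [1.0] * 55
print(runs_to_channel_bits(slice_to_runs(signal), 5))
```

In a real capture the first and last runs are cut off by the capture window, so they should be thrown away before decoding.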

It’s always interesting to see older media explored at the bare level with logic analyzers and oscilloscopes. If you’ve been doing similar investigative work, don’t hesitate to drop us a line!

I just bought a set of the first five Lovin’ Spoonful albums, and a set of the first five (Young) Rascals albums. Forty dollars total, shipped from the UK.

So I may not be listening to much music, but I still buy and use CDs.

“I may not be listening to much music”

So, like the parents of teens during the 1960s, you have a hard time calling what they listen to “music”?

B^)

I’m not in the mood these days. But maybe if I listened, I’d feel better.

The only new acts I’ve bought were in 1990: 10,000 Maniacs and the Indigo Girls.

Here in South Africa many second-hand shops sell used CDs in good condition for ZAR2 – ZAR10 (about €0.10 to €0.52). Sure, you could download FLACs if you look around, but it’s nice to have the actual media in your hand, and you also stay on the right side of the law that way.

Yes, there’s a lot to be said for owning the recording.

Firstly, it won’t degrade; secondly, you can hand it down to your next of kin; and thirdly, you get to see the wonderful artwork on the album. They’re super easy to store and provide perfect sound, or as near perfect as we can discern.

They are not easy to store. I have CDs that are now unusable because of storage. Humidity, heat and the environment are the enemy.

To be fair, that’s the same environment in which books and furniture would start to rot, too. PCs may rust, their capacitors die, their fans bite the dust (pun intended). Living things will also get ill at some point: asthma, infections, etc.

I would like to buy all your jazz CDs. I’m based in Johannesburg, South Africa. I still play CDs and vinyl. I recently discovered Japanese jazz, and it’s not available on iTunes.

I listen to music on a daily basis through an audio system, and it is all CD. Why? Because it’s the best medium out there! It’s tactile, hands-on, and physical. Storage is easy and, best of all, you own it! Probably one of the cleverest inventions out there. What a shame so many people abandoned it just when standards of replay have become so good.

I’m not sure why [Lukas] reinvented the wheel here; was he unaware of the ld-decode project, which HaD has even covered in the past? The same method can be used to capture the signal off the laser pickup, and there’s quite a bit of groundwork already covered, given that digital audio and digital data on Laserdiscs can be encoded in the audio track using EFM, in a remarkably similar way to how CD-DA is encoded.
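The "remarkably similar" encoding is EFM, whose 14-bit channel words obey a run-length-limited rule: between two 1s there must be at least 2 and at most 10 zeros, which is exactly what keeps every pit and land between 3T and 11T. A minimal checker for that rule on an arbitrary string of channel bits might look like this; the function name is just illustrative, not something from ld-decode.

```python
def satisfies_efm_rll(bits, d=2, k=10):
    """Check the EFM run-length limit on a string of channel bits.

    Every pair of consecutive '1's must be separated by at least d and at most
    k zeros. Leading and trailing zeros are not checked, because across word
    boundaries it is the 3 merging bits that keep the limit satisfied.
    """
    ones = [i for i, bit in enumerate(bits) if bit == "1"]
    gaps = [b - a - 1 for a, b in zip(ones, ones[1:])]
    return all(d <= gap <= k for gap in gaps)


# The CD frame sync pattern: two consecutive 11T runs (a 1 and 10 zeros each).
SYNC = "1" + "0" * 10 + "1" + "0" * 10 + "10"
print(len(SYNC), satisfies_efm_rll(SYNC))        # 24 True
print(satisfies_efm_rll("1001"), satisfies_efm_rll("101"))  # True False
```

The sync pattern uses the longest legal run twice in a row, which never occurs in ordinary data and is what a decoder locks onto to find frame boundaries.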

A quick browse of the ld-decode project doesn’t show how to pick up the raw laser signal by sampling the laser diode almost directly. This project goes right into it.

(ld-decode contributor here…)

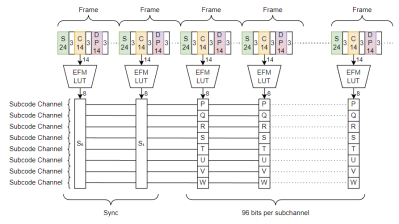

Decoding from the pickup output is exactly what ld-decode does. LaserDisc digital audio is based on the Red Book standards, so ld-process-efm works fine for CDs as well. It has a much more complete implementation of error correction, etc., although it’s neat to see how minimal an implementation you can get away with in Lukas’s decoder.
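Since ld-process-efm and a CD player both work on the same Red Book frames, it helps to see where the familiar numbers come from. The arithmetic below follows the standard CD frame layout: a sync pattern, then 33 EFM symbols, each followed by 3 merging bits. The constant names are only labels for this sketch.

```python
SYNC_BITS = 24          # frame sync pattern, built around two consecutive 11T runs
MERGING_BITS = 3        # inserted after the sync and after every 14-bit EFM word
EFM_WORD_BITS = 14      # each 8-bit symbol becomes a 14-bit channel word
SUBCODE_SYMBOLS = 1
AUDIO_SYMBOLS = 24      # 6 stereo sample pairs x 2 channels x 2 bytes
PARITY_SYMBOLS = 8      # CIRC: 4 C2 + 4 C1 parity bytes

symbols_per_frame = SUBCODE_SYMBOLS + AUDIO_SYMBOLS + PARITY_SYMBOLS
channel_bits_per_frame = (SYNC_BITS + MERGING_BITS
                          + symbols_per_frame * (EFM_WORD_BITS + MERGING_BITS))

audio_bytes_per_second = 44_100 * 2 * 2          # 44.1 kHz, stereo, 16-bit
frames_per_second = audio_bytes_per_second // AUDIO_SYMBOLS
channel_bit_rate = channel_bits_per_frame * frames_per_second

print(symbols_per_frame, channel_bits_per_frame, frames_per_second, channel_bit_rate)
```

This prints `33 588 7350 4321800`: 588 channel bits carry just 24 bytes of audio, and the rest is spent on sync, subcode, error correction and the run-length limit.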

The filtering and PLL approach you need will depend on the player and how you’ve digitised the signal, but there’s no particular reason that you couldn’t feed the output of Lukas’s PLL into ld-process-efm.
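To give an idea of what "the output of a PLL" means here, below is a generic second-order (proportional-integral) software clock-recovery loop working on edge timestamps. It is a sketch under stated assumptions, not the loop used by [Lukas] or by ld-decode: the edge times are assumed to come from something like interpolated zero crossings of the sliced signal, and the gains `kp` and `ki` are arbitrary, untuned values.

```python
def track_runs(edge_times, nominal_period, kp=0.1, ki=0.01):
    """Quantize intervals between pit/land edges into run lengths (in T)
    while tracking the bit clock with a simple proportional-integral loop.

    edge_times: edge timestamps in samples (e.g. interpolated zero crossings).
    nominal_period: initial guess for samples per channel bit T.
    kp, ki: loop gains; arbitrary, untuned assumptions for this sketch.
    """
    period = nominal_period
    clock_edge = edge_times[0]  # clock phase, aligned to the first edge
    runs = []
    for t in edge_times[1:]:
        n = max(1, round((t - clock_edge) / period))  # whole bit periods elapsed
        predicted = clock_edge + n * period
        error = t - predicted                # timing error in samples
        clock_edge = predicted + kp * error  # proportional path: pull the phase
        period += ki * error / n             # integral path: adjust the frequency
        runs.append(n)
    return runs, period


# Edges for runs of 3T, 11T, 4T, 3T, 7T at 5 samples per T, with a little jitter.
edges = [0.0, 15.4, 69.6, 90.3, 104.8, 140.1]
runs, period = track_runs(edges, 5.0)
print(runs)
```

The run lengths that come out of a loop like this are the same 3T to 11T values a slicer with a perfect clock would give, and are what would then be turned into channel bits for an EFM decoder.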

Are there any mentions in the ld-decode wiki about how it decodes *audio CDs*?

Like Lukas, I’ve played with decoding audio CDs from the laser output in the past, and ld-decode never showed up on my Google search radar.

An even more impressive project by Lukas keeps getting ignored by HaD:

HorizonEDA

Oh wow that looks great. A library-first EDA suite that tries to reuse what’s already great about KiCad. While I don’t hate KiCad’s library system as much as many, I’ll definitely give HorizonEDA a try on my next project.

I have no intention of using HorizonEDA (or LibrePCB). (Disclosure: I’m an active KiCad fanboy.) Both those projects seem to have a very small user base and few developers. Both also lean heavily on KiCad, from reusing the interactive router to converting KiCad’s libraries and other parts.

I’m quite certain that I can keep on using KiCad 10 or 20 years from now and that it will still be maintained and actively developed, while it’s questionable whether those other two programs will still get any maintenance by then. Looking at how vigorously KiCad is developed, and how quickly new features are added and old annoyances removed, it’s unlikely that those other projects can keep up. HorizonEDA claims to support Linux, but its website also states that the Linux packages may be outdated. Apparently there isn’t enough manpower for maintenance even now.

It’s not a contest, and there are also good points in having a choice, but personally I would much rather see a single great EDA suite than three “average” programs. Both seem to claim a better library organization system than KiCad’s. I don’t have any problem with the way KiCad organizes libraries, and KiCad V7 added support for database-driven libraries.

Also a literal quote from the HorizonEda website: “My biggest weakness is that i will eventually turn any arbitrary electronics project into an excuse to write EDA software (and vice versa).”

And I’ve read other references suggesting that the person behind Horizon is simply more interested in writing his own program than in collaborating with others.

Hackaday has a “Creating a PCB in Everything” series:

https://hackaday.com/tag/creating-a-pcb-in-everything/

and I’m a little bit curious how those two packages compare to the current KiCad. Both programs seem usable enough to warrant this attention from Hackaday. If you can convince Hackaday to do a “Creating a PCB in Horizon” (or LibrePCB), I’ll read it. The KiCad installment was written in 2016, back in the KiCad V4 days, and progress has been enormous since then.

And again, it’s not just the program and how well it works. It’s also the community and infrastructure around the whole project. I don’t know how many people are working on KiCad’s source code. I guess about 40 “regulars” and a hundred (maybe 200?) people who make an occasional commit or handle other tasks such as library and website maintenance, packaging for different OS flavors, writing documentation, making YouTube tutorials, blogging, etc. The KiCad forum has over 10,000 registered users, about 30 topics per day and a lot of regulars who answer questions, often within minutes or hours of posting. About 300 new KiCad issues are opened on GitLab each month, and about as many get closed. And many issues get fixed quickly. I have created about 70 myself, and several got fixed within half an hour of reporting, with a personal record of 15 minutes between creating an issue and “fix committed”. Another significant thing for KiCad is that commercial support is available, which can be important for companies that need answers quickly, or even custom development or priority bug fixes. I don’t see either HorizonEDA or LibrePCB coming even close to that.

One thing I’ve noticed over the last few days is that KiCad users can be quite territorial and a bit aggressive toward anybody who is not in their camp.

I’ve noticed that trend in other big open source projects too, and I find it problematic.

We as a society should support both small and big communities. Individual developers matter just as much as small companies do. If we are critical of huge commercial companies, there is no reason not to be critical of huge groups in open source projects as well, especially since many huge projects are now heavily influenced by huge companies.

The hacker mindset is mostly about allowing as much access as possible to anybody willing to invest a little effort. I think we should focus on that.

To add to that: individual developers matter, and they are far too often not rewarded sufficiently for the hard work they put into their projects. Many people working in big communities or companies forget that, and the community, or some “greater” good, ends up deciding what the rest should do.

So the momentum of big communities can be amazing, but it can also crush everything in its path and bully people into joining its cause (which almost always means less influence).

People might say you could fork a project, but as a single developer you can’t maintain a fork, so you have to focus on the key points that matter. And that’s not a bad thing at all; it’s how innovation works, and we should enable that, not hinder it.

When I saw “from scratch” in the title I thought it might be about recovering audio content from scratched CD.

My work on reverse engineering the Digital Compact Cassette doesn’t go quite as deep as that. So kudos! Very impressive.

I expect to eventually do the hard part of getting the bits directly off DCC tape and developing my own hardware to de-interlace, demodulate, error-correct and decode them, and I have all the necessary information to do that, but it will take a while. For now I’m at the stage of grabbing the hidden data such as text information that’s on every prerecorded tape but can’t be decoded by any recorder.

More info in my Hackaday project https://hackaday.io/project/20404 and in my presentation last November at the Hackaday Supercon (hopefully there will be an upcoming video).

The project linked above is on hold because of lack of space, but I’m currently working on a color graphic display replacement for the DCC 730 / 951 recorders. More information will follow in a new Hackaday project.

Years ago, at a different job, we had a software distribution on a copy protected tape (QIC-50?). One of my cow-orkers made a C program that copied a tape bit by bit. The copies were also “copy protected”.

B^)

Have a look at the ld-decode and vhs-decode projects. They have most of the building blocks done already. They have already decoded EFM from RF on LaserDiscs and AC3 audio from the QPSK signal, and they support decoding LaserDisc as well as VHS, Beta and many other tape formats. I’m sure a DCC sample would be a good addition to the list to work through.

I’m aware of those projects. DCC is a little different; it uses eight-to-ten modulation instead of eight-to-fourteen (both invented by the same guy, Kees Schouhamer Immink) and because there are 8 heads with music information, it uses interleaving of the bits so that the CIRC error correction gets enough information to completely decode all the data even if an entire track is missing continuously, or if all tracks are missing for a few millimeters. It’s all documented very well in the DCC System Description (https://digitalcompactcassette.github.io/Documentation/General/Philips%20DCC%20System%20Description%20Draft.pdf).
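Why interleaving makes a dead track survivable is easy to show with a toy example. The round-robin scheme below is only an illustration and is not the interleave the DCC System Description actually specifies. It spreads consecutive symbols over 8 hypothetical tracks, so that losing a whole track turns into isolated, evenly spaced erasures, which an error-correcting code like CIRC handles far more easily than one long burst.

```python
def distribute(symbols, n_tracks=8):
    """Toy round-robin interleave (not DCC's real scheme): symbol i -> track i % n."""
    return [symbols[track::n_tracks] for track in range(n_tracks)]


def collect(tracks, lost=()):
    """Reassemble the symbol stream, marking symbols from lost tracks as None."""
    n_tracks = len(tracks)
    out = [None] * sum(len(t) for t in tracks)
    for track_no, track in enumerate(tracks):
        if track_no in lost:
            continue  # whole track unreadable: its symbols become erasures
        for j, symbol in enumerate(track):
            out[track_no + j * n_tracks] = symbol
    return out


def longest_erasure_burst(stream):
    """Length of the longest run of consecutive erased (None) symbols."""
    longest = current = 0
    for symbol in stream:
        current = current + 1 if symbol is None else 0
        longest = max(longest, current)
    return longest


stream = collect(distribute(list(range(64))), lost={3})
print(stream.count(None), longest_erasure_burst(stream))  # 8 erasures, burst of 1
```

Surviving a dropout on all tracks at once, the other case mentioned above, additionally needs interleaving over time, which this toy leaves out.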

Fortunately there are not as many prerecorded DCC cassettes (a few thousand) as there are CDs, and decoding or playing them has not been a problem yet. For now, we can trust the hardware in existing DCC recorders/players to reproduce all the digital bits, and we theoretically know enough to make tape dumps and reproduce the data that nobody was ever able to see when the format was a thing.

In the future when the custom chips to recover the bits have all gone bad, we may need a project like this to replace those chips with something of our own (I’m thinking a couple of fast A/D converters and an FPGA or something). But that time hasn’t come yet.

Why the need to read/decode prerecorded DCCs, since I believe most, if not all, of them already exist in other formats with better quality? It seems to me that self-recorded ones are much more interesting, since they can contain the only existing recording of some events/contents.

Sure, the music from most prerecorded DCCs (if not all) is probably available in other forms. But something that’s also on prerecorded DCCs is text information in the form of ITTS (“Interactive Text Transmission Service”). And that was added specifically for the DCC release. Sometimes it includes interesting surprises, for example lyrics. No DCC recorders were ever released that could decode ITTS information (though prototypes are known to exist).

And yes, you could argue that even ITTS is not that interesting or important compared to the music recordings on the tapes. You could also argue that websites like archive.org are unimportant. I however like to think that preserving even the seemingly unimportant stuff is important if we want to learn from it in the future. And our task as the DCC Museum is to take care of that preservation.

Well, you learn something new every day! I didn’t know about ITTS, and it’s certainly interesting stuff to preserve. Thanks for this information!

I used to have a DCC900 but sold it some years ago, and kind of regret it.

Hm, food fort thought regarding the enthusiastic archive.org plugging:

Big fan of archive.org! In this age of media-owning and -revising billionaires, rapidly disappearing knowledge and misinformation, it’s an essential societal asset.

Maybe, just maybe, we shouldn’t slashdot it (hackaday it?) by abusing it as a CDN for pirated content on the front page.

“Hm, food fort thought”

Food fort?

Is that a place of refuge during a food fight?

B^)

As for the DVD player reading the disk at 4x speed…

Very old CD players read the data at 1x speed, which was a nuisance, especially for portable players: every bump could knock the laser off track, and the dropout would be audible. So a system was created to read audio in bursts and buffer it, so that even if the laser lost track because of a bump, it would resynchronize before the buffer ran empty. I guess the DVD player just read at its normal speed because reading data and outputting audio was common by that time.
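The anti-skip arithmetic is simple enough to sketch. Assuming the drive reads at `read_speed` times real time while playback drains the buffer at 1x, a full buffer of B seconds rides out a loss of tracking lasting up to B seconds, and refilling it afterwards takes B / (read_speed - 1) seconds. The buffer sizes and speeds below are made-up example values, not figures for the DVD player in the article or any other particular player.

```python
def refill_time(buffer_seconds, read_speed):
    """Seconds of reading needed to refill an empty anti-skip buffer.

    The buffer fills at read_speed x real time and drains at 1x, so the net
    fill rate is (read_speed - 1) seconds of audio per second.
    """
    if read_speed <= 1:
        raise ValueError("read_speed must exceed 1x for the buffer to refill")
    return buffer_seconds / (read_speed - 1)


def survives_dropout(buffer_seconds, dropout_seconds):
    """A full buffer covers any tracking loss no longer than its length."""
    return dropout_seconds <= buffer_seconds


# Example values only: a 3-second buffer filled at 4x.
print(refill_time(3, 4), survives_dropout(3, 2.5))  # 1.0 True
```

The same relationship explains the 1x problem: with no speed margin, `read_speed - 1` is zero and there is no way to build up a buffer at all.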

I still buy and use CDs; they’re much better than bulky, fragile, expensive vinyl. The only problem with CDs is that stingy modern car makers leave out CD players to save money, so you have to copy your discs to USB sticks.

Ten years ago(?) I bought portable CD players at garage sales, a few dollars each, even two that play MP3 files. So I’m set.

“In the rare case we listen to an audio CD these days”… Speak for yourself, I’m listening to one right now!

About 90% of the time I can tell it is a Day article simply by the tone-deaf (zing!) and needlessly judgy or assertive intro sentence/paragraph. But again I’ll say, if the point is to get clicks/comments, well played, Lewin. Well played.

Yeah, I guess that is his “style”.

I will read it anyway unless it turns out to be another “The sky is falling!” article.

Well, that would be a yesterday thing.

https://www.livescience.com/potentially-hazardous-asteroid-twice-the-size-of-the-world-trade-center-will-shoot-past-earth-tonight

The Domesday Duplicator and ld-decode projects have been decoding EFM from the pits on the disc since 2019. The first tests were with CDs before moving on to LaserDisc audio, which is the same but without a TOC.

The error correction gets pushed harder on LaserDisc than on audio/data CDs due to the other signals on the disc.

Great piece of work, and it’s not as simple as it looks.

https://github.com/happycube/ld-decode/