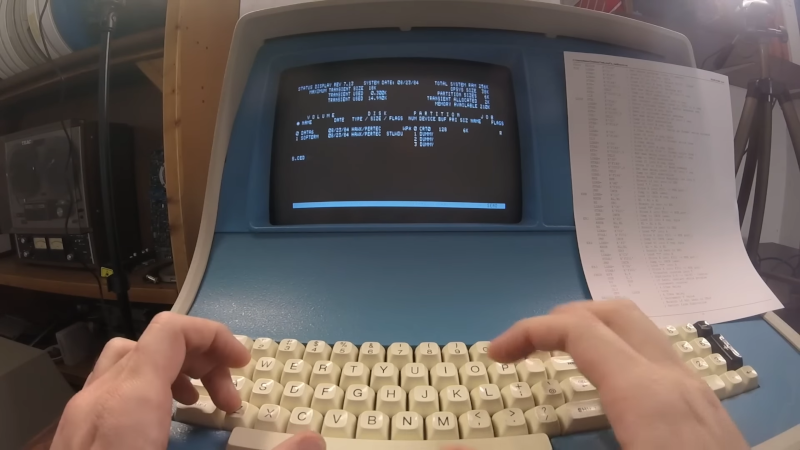

In the days before computers usually used off-the-shelf CPU chips, people who needed a CPU often used something called “bitslice.” The idea was to have a building block chip that needed some surrounding logic and could cascade with other identical building block chips to form a CPU of any bit width that could do whatever you wanted to do. It was still harder than using a CPU chip, but not as hard as rolling your own CPU from scratch. [Usagi Electric] has a Centurion, which is a 1980s-vintage minicomputer based on a bitslice processor. He wanted to use it to write assembly language programs targeting the same system (or an identical one). You can see the video below.
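For readers who haven’t met the idea, here is a rough Python sketch of what “cascading” means. Each slice is modeled as a 4-bit adder with a carry in and a carry out, and slices are chained by feeding each one’s carry out into the next one’s carry in. The word width is then just a matter of how many slices you wire up. This is a deliberately simplified model built on our own assumptions: a real slice such as the AMD 2901 also has a register file and several ALU functions, and faster designs used carry-lookahead logic instead of simple ripple carry.

```python
# Simplified model of bitslice cascading: each "slice" is a 4-bit adder with
# carry in and carry out. A real ALU slice (e.g. the AMD 2901) does far more;
# this model only adds, and only with ripple carry.

SLICE_BITS = 4
SLICE_MASK = (1 << SLICE_BITS) - 1


def slice_add(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add the low 4 bits of a and b plus carry; return (4-bit sum, carry_out)."""
    total = (a & SLICE_MASK) + (b & SLICE_MASK) + carry_in
    return total & SLICE_MASK, total >> SLICE_BITS


def cascaded_add(a: int, b: int, num_slices: int) -> tuple[int, int]:
    """Chain identical slices, rippling the carry from least to most significant."""
    result = 0
    carry = 0
    for i in range(num_slices):
        shift = i * SLICE_BITS
        nibble, carry = slice_add(a >> shift, b >> shift, carry)
        result |= nibble << shift
    return result, carry


# Four slices make a 16-bit adder; two would make an 8-bit one.
print(cascaded_add(0x1234, 0x0FFF, num_slices=4))  # (8755, 0), i.e. 0x2233
print(cascaded_add(0xFFFF, 0x0001, num_slices=4))  # (0, 1): carry out of the top slice
```

Wiring up two slices instead of four gives an 8-bit adder with no other change, which is the whole appeal of the approach.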

Truthfully, unless you have a Centurion yourself, the details of this are probably not interesting. But if you have wondered what it was like to code on an old machine like this, you’ll enjoy the video. Even so, the process isn’t quite authentic since he uses a more modern editor written for the Centurion. Most editors from those days were more like CP/M ed or DOS edlin, which were painful, indeed.

The target program is a hard drive test, so part of it isn’t just knowing assembly but understanding how to interface with the machine. That was pretty common, too. You didn’t have a lot of help from canned routines in those days. For example, it was common to read an entire block from a hard drive, tape, or drum and have to figure out what part of it you were actually interested in instead of, say, opening a file and reading a stream of characters.
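Here is a minimal Python sketch of that block-at-a-time style of I/O. The only primitive is “read one whole block,” so the caller has to work out which block holds the bytes it wants and where they sit inside it, even when a record straddles two blocks. The 256-byte block size, the `read_block` stand-in, and the fake disk image are illustrative assumptions, not details of the Centurion’s actual disk interface.

```python
# Sketch: pulling a record out of fixed-size disk blocks.
# BLOCK_SIZE, read_block and the fake disk image are hypothetical
# illustrations, not Centurion specifics.

BLOCK_SIZE = 256


def read_block(disk: bytes, block_number: int) -> bytes:
    """Stand-in for a raw device read: always returns one whole block."""
    start = block_number * BLOCK_SIZE
    return disk[start:start + BLOCK_SIZE]


def read_bytes(disk: bytes, offset: int, length: int) -> bytes:
    """Fetch `length` bytes at absolute `offset` by reading whole blocks and
    slicing out the part we care about (possibly across block boundaries)."""
    out = bytearray()
    while length > 0:
        block_number, within = divmod(offset, BLOCK_SIZE)
        chunk = read_block(disk, block_number)[within:within + length]
        if not chunk:
            break  # ran past the end of the disk image
        out += chunk
        offset += len(chunk)
        length -= len(chunk)
    return bytes(out)


disk = bytes(range(256)) * 4  # a fake 1 KiB "disk": four 256-byte blocks
print(read_bytes(disk, 250, 10).hex())  # fafbfcfdfeff00010203 (end of block 0, start of block 1)
```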

If nothing else, fast forward over to the 25-minute mark and see what a hard drive from that era looked like. Guess how much storage was on that monster? If you guessed more than 10 MB, you probably didn’t live through the 1980s. We won’t even guess what the price tag was, but you can bet it was spendy.

If you think entering programs like this is painful, try a front panel. That made paper tape seem like a great thing.

Ah yes, the joy of rolling your own instruction set – one of the fun projects I did for Q1 was designing and building a disk controller using the AMD 2900 series parts – it ran at a modest 5 million instructions per second, and did up to 4 separate functions per instruction. I had to design a microcode emulator for it, then write a compiler using the PL/1 language [which the Q1/Lite used]. I never did a design as fast again – from initial concept to delivered project [including the integration into a floppy disk system, with format & test utilities], it took only about 4 months. After I left Q1, I designed several other disk controllers using the 2900 series, both 8 and 16 bits wide, that also used the burst error correction chip later available from AMD.

Now THIS is bare metal.

I never heard of bitslice until Byte started publishing articles about it, on the 2900, I suspect.

And I did see some articles about building your home computer from scratch.

Did a serial terminal multiplexer board for Data General that used the 2901 bit-slice CPU from the NOVA 4 with a slightly modified instruction set. Which meant I got to write microcode. It takes a different mindset than writing assembly, because you don’t have a micro-accumulator or a micro-ALU (unless you design them in) just the basics, like single-bit logic operations and micro-PC branches.

Good on him for resurrecting this dinosaur. I understand the kids coming out of Computer Systems Engineering courses do all their CPU design now in FPGAs with HDLs. Back in the 70s, they had us doing that, too, but the hardware wasn’t available, so it was a paper and pencil exercise only.

Anyone else thinking “bowling alley”?

I have reverse engineered 3 different hard drive controllers of the same vintage that used “bit slice” components. This is where the whole concept of “microcode” seems to have originated. You had the bitslice engine with its microcode presenting itself as a processor with some ISA. Doing away with the microcode was a lot of what RISC was all about. A machine of that vintage that exposed the bitslice to the end user would be pretty cool indeed. In my endeavors the code was in several fast bipolar ROMs that I had to read out and then work up a disassembler for.
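Reading microcode out of ROMs usually means stitching several chips back together, because each ROM supplies only a slice of a much wider microword. The Python sketch below shows the general shape of such a disassembler. The three 8-bit ROMs, the 24-bit word, and the field names and bit positions in `FIELDS` are all hypothetical; on real hardware the layout has to be worked out by tracing where each ROM output goes on the board.

```python
# Sketch of a microcode disassembler for ROMs read in parallel, each ROM
# supplying one byte of a wide microword. The ROM count, word width and
# field layout below are hypothetical examples only.

FIELDS = [  # (name, high bit, low bit) within a 24-bit microword
    ("next_addr", 23, 16),
    ("alu_func", 15, 13),
    ("alu_src", 12, 10),
    ("alu_dest", 9, 7),
    ("branch_cond", 6, 4),
    ("misc", 3, 0),
]


def combine_roms(roms: list[bytes]) -> list[int]:
    """Build microword i from byte i of each ROM; roms[0] supplies the top byte."""
    if len({len(rom) for rom in roms}) != 1:
        raise ValueError("all ROM images must be the same size")
    words = []
    for i in range(len(roms[0])):
        word = 0
        for rom in roms:
            word = (word << 8) | rom[i]
        words.append(word)
    return words


def decode_fields(word: int) -> dict[str, int]:
    """Split one microword into its named bit fields."""
    return {name: (word >> lo) & ((1 << (hi - lo + 1)) - 1) for name, hi, lo in FIELDS}


def disassemble(roms: list[bytes]) -> None:
    """Print each micro-address, its raw word, and the decoded fields."""
    for addr, word in enumerate(combine_roms(roms)):
        fields = " ".join(f"{k}={v}" for k, v in decode_fields(word).items())
        print(f"{addr:03X}: {word:06X}  {fields}")


# Two-word example images standing in for real ROM dumps.
roms = [bytes([0x01, 0x00]), bytes([0x25, 0xFF]), bytes([0x80, 0x0F])]
disassemble(roms)
```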

Maurice Wilkes (possibly the worst lecturer I’ve ever sat through), who built the EDSAC, invented the concept of microcode in 1951…

IBM used microcode in the 360 series in 1964. There may well be earlier examples.

Indeed, I think the EDSAC II was microcoded – and would therefore have been the first. Here’s a paper on it: http://cva.stanford.edu/classes/cs99s/papers/wilkes-edsac2.pdf (PDF)

Please, tell us more about Maurice Wilkes’ lecturing style!

I never got to hear Dr. Wilkes lecture, but he was a very pleasant correspondent. I had communicated with him a few times about EDSAC. I wish I had done an FPGA EDSAC while he was alive to enjoy it. I wanted to do it with serial logic to work like the mercury delay lines, and he was kind enough to clear up a few design details for me.

My first exposure to microcode [and what gave me the confidence to do the controller for Q1] was the TI980A minicomputer – I went to a class on maintenance and programming it in the early 70’s.

It even used the microcode to read and write the internal registers and front panel LEDs and switches.

Microcode is still used today.

Infamously, there have been many side-channel attacks on desktop processor caches that break security.

AMD and Intel deliver (to processors out in customers machines) “ameliorations” by issuing updated microcode.

Microcode was invented in the 1940’s as part of the Whirlwind computer project. The concept was further improved by Maurice Wilkes in 1951, who added the notion of conditional branches depending on simple state information, allowing microcode to take different paths depending on a selection of state flip-flops.
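The conditional-branch idea can be sketched as a toy microsequencer in Python. Each microinstruction asserts some control action, then either falls through to its next-address field or jumps when a chosen status flip-flop is set. The microinstruction format, the `zero` flag, and the repeated-addition multiply routine are hypothetical illustrations, not Whirlwind’s or Wilkes’s actual design.

```python
# Toy microsequencer: each microinstruction asserts a control action, then
# either falls through to next_addr or, if it names a status flip-flop that
# is currently set, jumps to branch_addr. Format and routine are hypothetical.

from dataclasses import dataclass
from typing import Callable, Optional

END = -1  # pseudo-address meaning "microroutine finished"


@dataclass
class MicroOp:
    action: Callable[[dict], None]     # control signals asserted this cycle
    next_addr: int                     # default next micro-address
    branch_flag: Optional[str] = None  # status flip-flop to test, if any
    branch_addr: int = END             # taken when the tested flag is set


def run(program: list[MicroOp], state: dict, start: int = 0, max_cycles: int = 1000) -> dict:
    upc = start  # micro program counter
    for _ in range(max_cycles):
        if upc == END:
            break
        op = program[upc]
        op.action(state)
        if op.branch_flag is not None and state[op.branch_flag]:
            upc = op.branch_addr
        else:
            upc = op.next_addr
    return state


def clear_acc(s: dict) -> None:
    s["acc"] = 0
    s["zero"] = s["count"] == 0


def add_and_count(s: dict) -> None:
    s["acc"] += s["b"]
    s["count"] -= 1
    s["zero"] = s["count"] == 0


# Multiply by repeated addition (acc = b * count), looping on micro-address 1
# until the zero flip-flop is set.
MULTIPLY = [
    MicroOp(clear_acc, next_addr=1, branch_flag="zero", branch_addr=END),
    MicroOp(add_and_count, next_addr=1, branch_flag="zero", branch_addr=END),
]

result = run(MULTIPLY, {"acc": 0, "b": 7, "count": 3, "zero": False})
print(result["acc"])  # 21
```

The same two microwords handle every count, including zero, purely because of the flag test; without conditional branching, the microprogram would need a separate straight-line path for each case.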

The concept of microcoded engines for high-speed devices like disk and tape controllers made a lot of sense, but the concept of microcode came much earlier. Even some electronic calculators from the 1960’s utilized microcode to simplify the logic needed to implement their functionality (e.g., the HP 9100A and Wang 700). And of course, many of the minicomputers and larger-scale computers of the 1970’s utilized microcode engines at the core of their CPUs. AMD’s 2900 series, combined with fast ROM, made roll-your-own CPUs much less complex and became quite popular in the design of minicomputer systems. Other than the original PDP-11, all of the later PDP-11 models were microcoded designs. The concept of RISC reduced the need for microcode because instructions became much less complex, allowing them to be implemented with simple logic that was typically integrated into programmable logic arrays. Despite this, microcoded architectures are still found in a lot of places today, ranging from device controllers (such as high-speed disk) to GPUs with instructions that process large blocks of data in one instruction, and specialized CPUs in supercomputers that have a lot of embedded intelligence dedicated to interpreting the flow of instructions to optimize speed by scheduling and executing many instructions in parallel.

Thanks for jogging my memory. Yes, the AMD2900 chip(s) are what I encountered in the disk controllers I worked with. I didn’t mean to say that microcode originated with disk controllers. What I really meant to say was that microcode originated with the bitslice components, but that clearly isn’t true given what others have written. The bitslice parts just made putting together a microcoded design easier, whether for a CPU or a disk controller.

Forth’s interactive, incremental assemblers let you assemble machine-language modules.

And code fragments too.

I saw ‘the light’ when I studied and used the figForth 8080 assembler.

Assembler programming became fun because of interactive assembly/test cycles.

Batch assembly sucks, imo. 1960s assembler technology. :(

US National Security Agency funded my 8051 syntax-checking forth assembler. :)

“Even so, the process isn’t quite authentic since he uses a more modern editor written for the Centurion. Most editors from those days were more like CP/M ed or DOS edlin, which were painful, indeed.”

My mom used CP/M back in the late 70s, early 80s, so I asked her. [Usagi Electric]’s description of “Centurion Editor” sounds a lot like ed, so seems very 80s to me.

Given that my mom ported UC Berkeley’s se (screen editor) to her Apple ][, it seems reasonable that a port of ed to the Centurion could have existed back then. I don’t see not using Centurion compose as inauthentic, just 80s hacking ;)

I went from programming the HP2000E and various mainframes to buying my own PC XT in autumn 1986. Soon after bought a HDD. 30 MB for $325. All on my retail store salary while renting my own place to live. One wonders if the author “lived through the 80s.”

I really enjoyed this video, but I was curious about something with the assembly language instructions being used, and I couldn’t figure out the answer from this document, which is the best I’ve found so far: https://github.com/Nakazoto/CenturionComputer/wiki/Instructions

Anyway, I’m curious about the difference between the “LDAB=” instruction and the “XFR=” instruction. They both are being used to load a literal value into a register. The primary difference I see is that XFR is being used to load values into the X and Y registers whereas LDAB is used to load values into the A register. Is there a reason why you have to use one instruction for one register and another for others?

Well, to be absolutely fair, he was not being well employed: a second-year course on Numerical Analysis to Maths students in 1969/70 (we used, the horrors!, BASIC on a PDP-8).

But it was obvious that his heart was not in the job, he was basically reading aloud from his own textbook on NA, which was, to say the least, hardly a riveting lecture style.

I do clearly recall one time when he wrote up an example (directly from the book) on the blackboard, but mistranscribed one of the numbers.

One of the students called out “Professor Wilkes, shouldn’t that N (whatever number he had actually written) be M (the number in the book)?”

To which he replied: “yes, you’re right, thank you”.

It never seemed to occur to him to wonder just how a student would know that he had used the wrong number in a worked example *before* working it out.

Even then and as a callow youth, it struck me as sad that a man with his achievements was reduced to this.

While I haven’t watched the video, having more than one load instruction was fairly common on machines which had both accumulator and index registers.

On the old GE-600 (later Honeywell 6000, Level 66 and DPS-8) mainframes (36-bit machines) there were two 36-bit registers: A (accumulator) and Q (quotient) and eight 18-bit index registers: X0 thru X7.

The former were loaded with LDA and LDQ instructions (there was even an LDAQ to load the two from successive 36-bit words in memory) and the latter with LDXn instructions (8 of them). There were also corresponding store, add and subtract instructions for the different length registers. The register specifier was part of the opcode, not an operand.
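To make the “register specifier was part of the opcode” point concrete, here is a Python sketch of a decoder in which LDA, LDQ, LDAQ and LDX0 through LDX7 each get their own opcode, so the target register falls out of the opcode lookup with no separate register operand. The numeric opcode values are invented for the example and are not the real GE-600 encodings.

```python
# Sketch: the target register is implied by the opcode itself rather than
# named in a separate operand field. The numeric opcode values are invented
# for illustration; they are NOT real GE-600 encodings.

OPCODES = {
    "LDA": 0o100,   # load accumulator A (36-bit)
    "LDQ": 0o101,   # load quotient register Q (36-bit)
    "LDAQ": 0o102,  # load the A/Q pair from two successive words
    # LDX0..LDX7: one opcode per 18-bit index register
    **{f"LDX{n}": 0o110 + n for n in range(8)},
}

# Reverse table used by the decoder: opcode number -> mnemonic.
DECODE = {code: mnemonic for mnemonic, code in OPCODES.items()}


def target_register(opcode: int) -> str:
    """Return which register a load writes, determined solely by the opcode."""
    mnemonic = DECODE[opcode]
    return mnemonic[2:]  # "LDA" -> "A", "LDAQ" -> "AQ", "LDX3" -> "X3"


for name in ("LDA", "LDAQ", "LDX3"):
    code = OPCODES[name]
    print(f"{name:5} opcode {code:03o} -> writes {target_register(code)}")
```

The trade-off is opcode space: eight index registers cost eight load opcodes (and likewise for store, add and so on), in exchange for not needing a register field in the instruction word.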

Are the A, X and Y registers in the video all the same length?