The amateur astronomy world got a tremendous boost during the 1960s when John Dobson invented what is now called the Dobsonian telescope. Made from commonly sourced materials and mechanically much simpler than anything else available at the time, it dramatically lowered the barrier to entry for larger telescopes and made them far more portable and affordable.

For all their perks, though, Dobsonians have a major downside: building an automatic tracking system for them is considerably more complex. [brickbots] took a different approach to the problem: a plate solver.

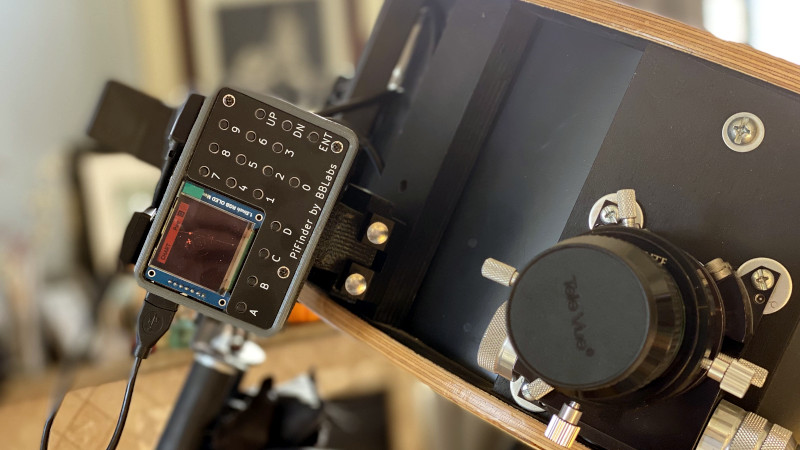

Plate solving compares the telescope’s field of view against known star charts to determine what it is currently looking at. A Raspberry Pi sits at the center of the build: a camera module pointed at the sky captures the star field for the Pi to solve, and a GPS module adds precise location data for a quick solution. A red-tinted screen rounds out the build and lets [brickbots] know exactly where the telescope is pointed at all times.
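
Plate solvers can search much faster when they know roughly how large the field of view is. As a sketch, the standard plate-scale relation (206.265 × pixel size in µm ÷ focal length in mm, giving arcseconds per pixel) gets that from the camera’s specs; the pixel size, sensor width, and focal length below are hypothetical, not the parts used in this build.

```python
import math


def plate_scale_arcsec_per_px(pixel_um: float, focal_mm: float) -> float:
    """Image scale in arcseconds per pixel (small-angle approximation).

    206.265 = 206265 arcsec per radian divided by 1000 um per mm.
    """
    return 206.265 * pixel_um / focal_mm


def field_of_view_deg(pixels: int, pixel_um: float, focal_mm: float) -> float:
    """Angular extent of one sensor dimension, in degrees (no small-angle approximation)."""
    size_mm = pixels * pixel_um / 1000.0
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * focal_mm)))


# Hypothetical camera: 1.55 um pixels, 4056 px across, behind a 25 mm lens
scale = plate_scale_arcsec_per_px(1.55, 25.0)
fov = field_of_view_deg(4056, 1.55, 25.0)
print(f"{scale:.2f} arcsec/px, field about {fov:.1f} deg wide")
```

A number like this is the kind of scale hint a solver can use to narrow its search before matching stars.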

While this doesn’t fully automate or control the telescope as a tracking system would, a plate solver is much simpler to build in this situation. That doesn’t mean computer-assisted pointing is impossible with a telescope like this, though; alt-azimuth-mounted telescopes like Dobsonians just need some extra equipment to get the job done. Here’s an example that controls a similar alt-azimuth telescope using an ESP32 and a few rotary encoders.
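
For the encoder approach, each axis usually reports a two-channel (A/B) quadrature signal, and the decoding logic is small enough to sketch. This is a minimal illustration in Python rather than ESP32 firmware, and it is not the linked project’s code; it assumes a hypothetical 600-line encoder read with 4x decoding (2400 counts per revolution) and that every state change gets sampled.

```python
# Quadrature states are encoded as (A << 1) | B.
# Turning one way steps 0 -> 1 -> 3 -> 2 -> 0; the other way reverses it.
FORWARD = {(0, 1), (1, 3), (3, 2), (2, 0)}
REVERSE = {(new, old) for (old, new) in FORWARD}


class QuadratureAxis:
    """Counts encoder steps on one telescope axis and converts them to an angle."""

    def __init__(self, counts_per_rev: int, a: int = 0, b: int = 0):
        self.counts_per_rev = counts_per_rev  # counts per full turn after 4x decoding
        self.count = 0
        self.state = (a << 1) | b             # initial pin levels

    def update(self, a: int, b: int) -> None:
        new = (a << 1) | b
        step = (self.state, new)
        if step in FORWARD:
            self.count += 1
        elif step in REVERSE:
            self.count -= 1
        # Unchanged state means no motion; a two-step jump (e.g. 0 -> 3) is
        # ambiguous (a missed sample) and is ignored here.
        self.state = new

    def angle_deg(self) -> float:
        return (self.count * 360.0 / self.counts_per_rev) % 360.0


# Hypothetical 600-line encoder on the azimuth axis: 2400 counts/rev with 4x decoding
az = QuadratureAxis(counts_per_rev=2400)
for a, b in [(0, 1), (1, 1), (1, 0), (0, 0)] * 100:   # 100 full cycles forward
    az.update(a, b)
print(az.count, az.angle_deg())   # 400 60.0
```

On a microcontroller the same transition table is typically driven from pin-change interrupts so no state change is missed.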

Great read!

This has me thinking: what would be the opposite of a plate solver? Something like an automated version of a low-tech “guided by stars positioning system”. There has probably been at least one hacker out there scanning the sky and comparing the resulting position with GPS, just for fun.

Well, it’s called celestial navigation. The US Army seems to have regained some interest in it as a fallback in case GPS fails.

I’ve also found this student project that solves it with deep learning: https://github.com/gregtozzi/deep_learning_celnav

Learning cel nav is super easy and can be 100% self-taught. I did it.

The history is obviously long, but cel nav was used on the Apollo missions, where it was intended to be the primary means of guidance and navigation until they realized ground-based radar was better. After that it was done only for data and backup purposes. Also, the SR-71 (I believe) had an automated cel nav system that could read something like 7 stars, even in daylight, and compute position to a 60 ft 3-D box. Something like that.

The military dropped cel nav requirements for Navy officers some time ago but is trialing them again, due to concerns not about GPS failure per se but about GPS spoofing. An officer on the foredeck with a sextant would be sexy, but considering that automated guidance has existed since at least the ’50s, the modern equivalent would almost certainly need close to zero human interaction.

Well, my telescope does it by itself, with a little add-on called StarSense.

A few weeks ago, I gave a talk on celestial navigation for our local astronomy club: https://www.youtube.com/watch?v=5kAqcZYmWjA

As a demonstration, I wrote a very crude webpage that lets you get a fix from two stars, mentioned at the start of the video. It would work a lot better as a native app, since at this point only Chrome on Android allows changing zoom and exposure on a webcam. But it’s just intended as an example of how to do it. I typically get within about 10 miles of my actual location.

https://www.celestialprogramming.com/apps/celestialfix/sextant.html
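
Under the hood, a fix like this comes from sight reduction: for each star, compute the altitude Hc and azimuth Zn it should have as seen from an assumed position, then compare them with the observed altitude Ho. The intercept (Ho minus Hc, in arcminutes) is roughly the distance in nautical miles to move toward the star, along a line of position perpendicular to Zn; two stars give two such lines, and the fix is where they cross. A minimal sketch of the standard formulas follows, assuming the star’s declination and local hour angle (LHA, measured westward) already come from an almanac; it is not taken from the celestialfix page.

```python
import math


def sight_reduction(lat_deg, dec_deg, lha_deg):
    """Computed altitude Hc and true azimuth Zn (degrees) for an assumed position.

    sin Hc = sin(lat) sin(dec) + cos(lat) cos(dec) cos(LHA); LHA is west-positive.
    """
    lat, dec, lha = map(math.radians, (lat_deg, dec_deg, lha_deg))
    sin_hc = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(lha)
    hc = math.asin(max(-1.0, min(1.0, sin_hc)))
    # East and north components of the star direction in the local horizon frame
    east = -math.cos(dec) * math.sin(lha)
    north = math.sin(dec) * math.cos(lat) - math.cos(dec) * math.sin(lat) * math.cos(lha)
    zn = math.degrees(math.atan2(east, north)) % 360.0
    return math.degrees(hc), zn


def intercept_nm(ho_deg, hc_deg):
    """Positive: observed higher than computed, so the true position is toward the star.

    Uses 1 arcminute of altitude = 1 nautical mile.
    """
    return (ho_deg - hc_deg) * 60.0


# Hypothetical assumed position and almanac values
hc, zn = sight_reduction(lat_deg=34.0, dec_deg=38.78, lha_deg=315.0)
print(f"Hc = {hc:.3f} deg, Zn = {zn:.1f} deg, intercept = {intercept_nm(hc + 0.05, hc):.1f} nm")
```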

Usually you should be able to get well within 1 nm on land or in moderate seas. I had a terrible time with this because of a cheap ($150-200) plastic sextant that was as worthless and dangerous as it was unreliable, so I saved up for a used Astra IIIB, which is a joy to use. With an artificial horizon (which doubles the accuracy) and about 5 good sights per session, I can pretty regularly get within about 0.01 nm of my GPS position.

Nighttime terrestrial sights are much, much harder, because an artificial horizon is very challenging to use with anything but the brightest planets and the Moon.

I use the StarPilot app ($50), which is incredibly full-featured and makes reduction trivial. That said, I don’t use it often; I prefer tables and paper, because if I really need to know exactly where I am, there are many better methods in 2023.

How do you get such fantastic accuracy? Don’t you need accurate time to get longitude? Since you’re zipping along at around 0.2 nm per second while sitting on Earth’s crust at mid-latitudes, I’d naively guess you’d need (0.01 nm / 0.2 nm/s) = 0.05 seconds of timing accuracy. Can you really achieve that? What am I missing?
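
The estimate in the question checks out with a quick calculation. Earth turns 15° per hour, i.e. 15 arcseconds of longitude per second of time, and an arcminute of longitude spans about one nautical mile at the equator, shrinking with the cosine of latitude. A sketch on a spherical Earth:

```python
import math

# 15 arcsec of longitude per second of time = 0.25 arcmin per second
ARCMIN_PER_SECOND_OF_TIME = 15.0 / 60.0


def nm_per_second(lat_deg: float) -> float:
    """East-west position error (nm) per second of clock error, spherical Earth."""
    return ARCMIN_PER_SECOND_OF_TIME * math.cos(math.radians(lat_deg))


def timing_needed_s(target_nm: float, lat_deg: float) -> float:
    """Clock accuracy (seconds) needed to keep longitude error under target_nm."""
    return target_nm / nm_per_second(lat_deg)


for lat in (0, 30, 45, 60):
    print(f"lat {lat:2d}: {nm_per_second(lat):.3f} nm/s, "
          f"0.01 nm needs {timing_needed_s(0.01, lat):.3f} s")
```

At 45° this gives about 0.18 nm/s and roughly 0.057 s, in line with the 0.2 nm/s and 0.05 s guessed above.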

Yes, accurate time is necessary, but taking many sights and averaging gets you to less than one second of error. Relating time error to position error is complex: my hand-waving argument is that in the worst case 1 second is 1 nm, but it is not a linear relationship, and by taking multiple sights per session and averaging, as well as taking multiple sessions, you iteratively improve your accuracy. There are also statistical tools (built into StarPilot and described in Burch’s book) to discard bad sight data. See below for some other reasons. I may be misremembering, but the SR-71 could, using automated instruments in real time, get a 3-D fix to a 60 ft box with 1950s tech; that gives a limit for comparison (0.01 nm ≈ 60 ft). So it is doable, but it takes work and is sloooooow for one dude in his backyard. I may also just be getting lucky, with all the errors canceling out just right, which is a real possibility, but I hope not, haha. Best

I too am intrigued by the 0.01 nm accuracy. The Astra IIIB is only marked to 0.1 arcmin on the vernier scale, and factors like atmospheric refraction would degrade that considerably. At the beginning of the video I posted above, I note that my friend got 0.5 mile accuracy, and it was his primary duty in the Navy.

With an artificial horizon you get double the accuracy: you measure twice the angle, so halving the reading also halves the error.

Taking multiple readings per sight session averages out random instrument error, so you can get much better data than any single reading of the instrument.
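
Both effects are easy to see numerically. With an artificial horizon the sextant measures the angle between the body and its reflection, which is twice the altitude, so the altitude is half the reading and any fixed reading error is halved with it. Averaging n independent sights then shrinks random error roughly by a factor of √n. The readings below are made-up numbers, and this minimal sketch assumes the sights are taken close enough together that the altitude barely changes; in practice a body’s altitude drifts during a session, so real reductions fit altitude against time rather than taking a plain mean. Index error and refraction corrections are left out.

```python
import statistics


def altitude_from_artificial_horizon(readings_deg):
    """Sextant readings (body-to-reflection angle = 2 x altitude) -> (mean altitude, standard error).

    Assumes the altitude is effectively constant across the readings.
    """
    altitudes = [r / 2.0 for r in readings_deg]       # halving also halves reading error
    mean = statistics.mean(altitudes)
    # Standard error of the mean: sample standard deviation / sqrt(n)
    sem = statistics.stdev(altitudes) / len(altitudes) ** 0.5
    return mean, sem


# Hypothetical session of five sights, in degrees
readings = [84.512, 84.498, 84.505, 84.520, 84.490]
h, err = altitude_from_artificial_horizon(readings)
print(f"Ho ~ {h:.4f} deg +/- {err * 60:.2f} arcmin")
```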

Taking multiple sight sessions (there is a nice table in Burch’s book for determining the best amount of time to wait between sessions) and solving analytically (not on paper/graphically) also improves accuracy.

Being on land and not moving makes all of this much easier as well, and eliminates errors in dead reckoning estimations etc.

The science of correcting for atmospheric refraction, along with all the other necessary corrections, is well, well established and IS the process of sight reduction. Refraction is also minimized by taking sights as far overhead as possible (to an extent). Computer software is also more accurate than tables; I use StarPilot for that.
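
To see why sights high in the sky suffer less from refraction, here is a sketch using Bennett’s well-known approximation for refraction at standard temperature and pressure, R = cot(h + 7.31/(h + 4.4)) arcminutes, with the apparent altitude h in degrees. It is an illustration only; navigation software and almanac tables apply their own, more complete corrections (including non-standard temperature and pressure).

```python
import math


def bennett_refraction_arcmin(apparent_alt_deg: float) -> float:
    """Approximate atmospheric refraction in arcminutes at standard conditions.

    Bennett's formula: R = cot(h + 7.31 / (h + 4.4)), with h and the angle in degrees.
    The true altitude is roughly the apparent altitude minus R.
    """
    h = apparent_alt_deg
    return 1.0 / math.tan(math.radians(h + 7.31 / (h + 4.4)))


for alt in (5, 15, 30, 60, 85):
    print(f"{alt:2d} deg: {bennett_refraction_arcmin(alt):5.2f} arcmin")
```

Refraction falls from tens of arcminutes near the horizon to a fraction of an arcminute near the zenith, which is why higher sights are preferred.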

We have an 8″ Dob that has been amazing. There is an argument that you don’t really need guidance and star tracking and all that, but… in my super light-polluted area it often takes me 20+ frustrating minutes to find “the thing” as I star hop halfway across the sky. Something cheap and easy that just tells me I’m pointed in the right direction would be great.

For reference, I’m Bortle 7.5 or 8, meaning even bright constellations often don’t have all their stars visible to the naked eye.

I feel your pain. I also have an 8″ Dob and live 5 miles from Disneyland. Just finding a bright star to start hopping from can be a challenge. I mounted a small green laser on my scope. I find the starting star with 10×50 binoculars and move the scope to align the beam with it. You’ve got to watch out for aircraft, though.

I may try mounting a laser coaxial with the finder scope, just to get me roughly in the right direction. Good idea, and cheap. Using Stellarium on my phone has been a godsend compared to a paper star chart, because I can limit the visible magnitude to match what I see, and when I switch to the main instrument I can invert the image too. Still, I toy with working some extra shifts and getting a Unistellar. But where’s the fun in that, haha. It’s fun to be a little grouchy about tech; seeing through nice, pure analog optics without even the ability to take a photo, just knowing you saw it and maybe sketched it in the journal, has a calming appeal.

Would a phone with an app like Sky Map mounted on the tube work as a rough finder? It uses the GPS, accelerometer and compass; I don’t remember how many objects it knows, but you can enter one and it will guide you with an arrow on screen.

I have done that, mostly. The metal tube messes with the compass, tho, so I have to get some distance from the scope and visually align it with the pole.

The SkyMap app is good enough to find some targets, nonetheless.

Aw, shucks; I didn’t think there would be Dob tubes made of steel. Back at the U we had one, and I think it was fiberglass; I’ve also heard of people using hard cardboard concrete forms.

Short of motorizing the whole thing, maybe the simplest solution would be a couple of incremental optical encoders to keep track of azimuth and elevation. Point at one known object, enter its RA and DEC into a small program (along with latitude, longitude, and time), and it will guide you to any other coordinates.
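
The “small program” described above could look something like this minimal sketch: compute the current altitude and azimuth of the reference object and of the target from RA/Dec, latitude, longitude, and UTC time, then report the difference to dial in on the encoders. The function names are hypothetical; the sidereal-time expression is the standard low-precision GMST formula, and the sketch assumes a level mount while ignoring precession, nutation, and refraction, so expect errors up to a fraction of a degree.

```python
import math
from datetime import datetime, timezone


def julian_date(t: datetime) -> float:
    """Julian Date of a timezone-aware datetime."""
    return t.timestamp() / 86400.0 + 2440587.5  # JD of the Unix epoch


def local_sidereal_deg(t: datetime, lon_deg: float) -> float:
    """Low-precision local mean sidereal time in degrees (east longitude positive)."""
    d = julian_date(t) - 2451545.0                  # days since J2000.0
    gmst = 280.46061837 + 360.98564736629 * d
    return (gmst + lon_deg) % 360.0


def radec_to_altaz(ra_deg, dec_deg, lat_deg, lon_deg, t):
    """Altitude and azimuth in degrees (azimuth from north through east)."""
    ha = math.radians(local_sidereal_deg(t, lon_deg) - ra_deg)   # hour angle
    lat, dec = math.radians(lat_deg), math.radians(dec_deg)
    alt = math.asin(math.sin(lat) * math.sin(dec)
                    + math.cos(lat) * math.cos(dec) * math.cos(ha))
    east = -math.cos(dec) * math.sin(ha)
    north = math.sin(dec) * math.cos(lat) - math.cos(dec) * math.sin(lat) * math.cos(ha)
    return math.degrees(alt), math.degrees(math.atan2(east, north)) % 360.0


def guide(ref_radec, target_radec, lat_deg, lon_deg, t):
    """Move (d_alt, d_az) in degrees from the reference object to the target."""
    ref_alt, ref_az = radec_to_altaz(*ref_radec, lat_deg, lon_deg, t)
    tgt_alt, tgt_az = radec_to_altaz(*target_radec, lat_deg, lon_deg, t)
    d_az = (tgt_az - ref_az + 180.0) % 360.0 - 180.0   # take the shorter way round
    return tgt_alt - ref_alt, d_az


# Hypothetical session: aligned on Vega, want Altair (approximate J2000 RA/Dec in degrees)
now = datetime(2023, 8, 15, 4, 0, tzinfo=timezone.utc)
d_alt, d_az = guide((279.23, 38.78), (297.70, 8.87), 34.0, -118.0, now)
print(f"move {d_alt:+.1f} deg in altitude, {d_az:+.1f} deg in azimuth")
```

With the encoder counts zeroed on the reference object, these deltas convert directly into target counts on each axis.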

This is pretty cool – thanks for highlighting it!

I believe that most modern “star tracker”-based attitude determination systems on satellites use this same method: finding the best-fitting pointing of the field of view, and the rotation about that FOV axis, against a map of the sky to estimate the spacecraft’s orientation with respect to the distant stars.

Unless there’s some independent positional data available, either from ground tracking or onboard GPS, it can’t easily be used for navigation (determining position, or finding the bearing to move from one location to another) the way that time and a star field can be used to estimate position on the ground (i.e., constrained to a known surface in space).
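
One classic, textbook way to turn two star sightings into attitude is the TRIAD method: build an orthonormal frame from the two stars’ unit vectors as measured in the tracker (body) frame, build another from the same stars’ catalogue directions in the inertial frame, and combine them into a rotation matrix. This is offered as an illustration of the idea, not as what any particular star tracker runs; trackers that see many stars typically fit all of them at once (Wahba’s problem). The result is orientation only, which matches the point above: it says nothing about position.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def triad_frame(v1, v2):
    """Orthonormal triad: first axis along v1, second normal to the v1/v2 plane."""
    t1 = unit(v1)
    t2 = unit(cross(v1, v2))
    t3 = cross(t1, t2)
    return (t1, t2, t3)


def triad(body1, body2, ref1, ref2):
    """Attitude matrix A (row-major 3x3) such that body = A @ ref.

    body1/body2: star unit vectors measured by the tracker (star 1 trusted more).
    ref1/ref2:   the same stars' catalogue unit vectors in the inertial frame.
    """
    b = triad_frame(body1, body2)
    r = triad_frame(ref1, ref2)
    # A = sum over k of b_k r_k^T
    return [[sum(b[k][i] * r[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


# Hypothetical catalogue directions and matching tracker measurements
ref1, ref2 = unit((1.0, 0.2, 0.3)), unit((0.1, 1.0, -0.4))
body1, body2 = unit((0.766, 0.673, 0.3)), unit((-0.413, 0.916, -0.4))
A = triad(body1, body2, ref1, ref2)
# The tracker boresight (0, 0, 1) in the body frame maps to inertial A^T e3 = third row of A
print("boresight (inertial):", [round(x, 3) for x in A[2]])
```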

The Celestron StarSense series of telescopes, including the 8- and 10-inch Dobs, already provides this with a special dock and phone app.

Hear that ladies and gentlemen? Throw out your existing equipment, somebody solved this problem and we don’t need anything else.

StarSense is great. I have an IntelliScope “push to” telescope that needs some work, and after seeing StarSense in action I would rather have it than Orion’s IntelliScope system with its keypad and Hall-effect/encoder sensors.

I saw one user on the Cloudy Nights forums (I guess astronomers only spend their time on forums when it is cloudy at night) suggest buying the cheapest Celestron scope with StarSense (~$150 new) and either giving away or selling the scope while keeping the StarSense part. This gets you the phone mount plus a license to download and run StarSense on your phone.

It adds about $200-$250 to the price, money I elected to use to buy a slightly better telescope that came with better eyepieces and a laser collimator, with a bit left over for a broadband and narrowband filter set. It’s a trade-off: it takes me longer to find stuff, but when I get there I can actually see it. Nothing is more disappointing than spending forever getting there and then not being able to see jack!

I can’t find the actual algorithm it uses to solve the image in the docs.

Anybody know?

I was very impressed by the algorithms showcased on astrometry.net years ago. I’m wondering if this is the same, or a new take on it.

The method Astrometry.net uses/used is published at https://arxiv.org/abs/0910.2233

It illustrates an amazing use of hashes for super-quick lookups into a complex dataset.

The pre-computed hash doesn’t care about the image scale (pixels per degree) or orientation (which way is north). Put in the hashed value of the relative positions of 4 stars, and the hash lookup returns, with very high probability, the actual stars, scale, and orientation. It’s very neat.
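
The invariant hash is easy to sketch. According to the paper linked above, the two most widely separated stars of a four-star “quad”, A and B, define a local frame with A at (0, 0) and B at (1, 1), and the positions of the other two stars, C and D, in that frame form the hash code. Treating positions as complex numbers turns that frame change into a single similarity transform, which cancels translation, scale, and rotation. This simplified sketch skips the symmetry-breaking rules the paper uses to make each code unique, as well as the kd-tree lookup into the index.

```python
from itertools import combinations


def quad_hash(stars):
    """Geometric hash (xC, yC, xD, yD) of four distinct (x, y) star positions."""
    pts = [complex(x, y) for x, y in stars]
    # A and B: the most widely separated pair
    i, j = max(combinations(range(4), 2),
               key=lambda ij: abs(pts[ij[0]] - pts[ij[1]]))
    a, b = pts[i], pts[j]
    c, d = (pts[k] for k in range(4) if k not in (i, j))

    def frame(z):
        # Similarity transform sending A -> 0 and B -> 1 + 1j
        return (z - a) / (b - a) * (1 + 1j)

    zc, zd = frame(c), frame(d)
    return (zc.real, zc.imag, zd.real, zd.imag)


# Hypothetical pixel positions of four detected stars
print(quad_hash([(0, 0), (1, 1), (0.5, 0.2), (0.3, 0.8)]))
```

Because the code depends only on the stars’ relative geometry, the same quad photographed at any scale or rotation lands on the same (or a very close) hash value, which is what makes a direct lookup possible.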

The specific algorithm used by this one is called Tetra3 (https://github.com/esa/tetra3), and it is available as a standalone Python module. Astrometry.net, mentioned in another comment, is quite a bit better at solving but significantly more complex to set up, whereas setting up Tetra3 is a simple copy command. Another publicly available one is ASTAP, written in Pascal. Their algorithms are quite similar: they use a rotation-invariant hash to group stars together, then simply search a database for close matches.
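
Tetra3 reaches invariance a little differently. As I understand its approach, each four-star pattern is summarized by its six pairwise angular separations, sorted and divided by the largest one; the remaining five ratios do not depend on how the field is rotated, and normalizing by the largest edge removes dependence on the overall scale. Below is a sketch in that spirit, with hypothetical star vectors and a hypothetical bin count; the real module’s hashing, tolerances, and match verification differ and are not reproduced here.

```python
import math
from itertools import combinations


def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def angle_between(u, v):
    """Angular separation of two unit vectors (radians), stable for small angles."""
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    dot = sum(a * b for a, b in zip(u, v))
    return math.atan2(math.sqrt(sum(c * c for c in cross)), dot)


def edge_ratio_key(vectors, bins=50):
    """Describe a 4-star pattern by its 5 sorted edge ratios plus a quantized lookup key."""
    edges = sorted(angle_between(u, v) for u, v in combinations(vectors, 2))
    ratios = [e / edges[-1] for e in edges[:-1]]                # divide by the largest edge
    key = tuple(min(int(r * bins), bins - 1) for r in ratios)   # hypothetical binning
    return ratios, key


# Four hypothetical star directions within a small field near the +z axis
stars = [unit(v) for v in [(0.01, 0.0, 1.0), (0.0, 0.02, 1.0),
                           (-0.015, 0.005, 1.0), (0.005, -0.01, 1.0)]]
ratios, key = edge_ratio_key(stars)
print([round(r, 3) for r in ratios], key)
```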

There are detailed papers on Tetra3 and Astrometry.net, and ASTAP has an explanation on its website: https://www.hnsky.org/astap_astrometric_solving.htm

Ah. Excellent. Thanks.

Good thing you were never at one of John’s sidewalk astronomy parties with your Dob. He probably would’ve ripped it off your scope and thrown it to the ground… seriously! He was a bit of a stickler about not using technology; I know from firsthand experience.

There is a new program called OpenLiveStacker for Android phones that can take images with an ASI camera and solve them using the ASTAP solver. Current discussion:

https://www.cloudynights.com/topic/872895-openlivestacker-4th-beta-android-stability-fixes/

So if you attach an ASI camera with a simple camera lens to your Dobsonian mount and connect it to your Android phone, you should be able to get the celestial position within maybe 6 or 8 seconds.

OpenLiveStacker is in beta. I proposed looping the image capture and solving to make it more useful for celestial navigation. See https://github.com/artyom-beilis/OpenLiveStacker/issues/8

The practical problem is that it will still take 6-8 seconds before you get the celestial position the camera is pointing at. In comparison, encoders on the AZ and ALT axes give you immediate feedback.