In VR, a blink can be a window of opportunity to improve the user’s experience. We’ll explain how in a moment, but blinks are tough to capitalize on because they are unpredictable and don’t last very long. That’s why researchers spent time figuring out how to induce eye blinks on demand in VR (video), and the details are available in a full PDF report. Turns out there are some novel, VR-based ways to reliably induce blinks. If an application can induce blinks, it becomes easier to use them to fudge details in helpful ways.

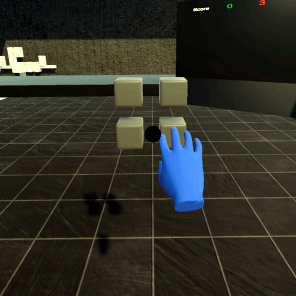

Humans experience a form of change blindness during blinks, and this can be used to sneak small changes into a scene in useful ways. Two examples are hand redirection (HR) and redirected walking (RDW). Both are ways to subtly break the implicit one-to-one mapping of physical and virtual motions. Redirected walking can nudge a user to stay inside a physical boundary without realizing it, leading the user to feel the area is larger than it actually is. Hand redirection can be used to improve haptics and ergonomics. For example, VR experiences that use physical controls (like a steering wheel in a driving simulator, or maybe a starship simulator project like this one) rely on physical and virtual controls overlapping each other perfectly. Hand redirection can relax that requirement by covering up small mismatches in a way that is imperceptible to the user.
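To make the idea concrete, here is a minimal sketch of blink-gated redirection, not the researchers' implementation. It assumes the headset reports a per-frame `eyes_closed` flag, and the names `BlinkRedirector`, `max_step_deg`, and `desired_offset_deg` are hypothetical. The 2-degree per-blink budget is purely illustrative, not a measured detection threshold.

```python
from dataclasses import dataclass


@dataclass
class BlinkRedirector:
    """Holds a yaw offset between physical and virtual heading and
    changes it only on the frame where a blink begins."""

    max_step_deg: float = 2.0   # illustrative per-blink budget (assumption)
    offset_deg: float = 0.0     # current virtual-minus-physical yaw offset
    _was_closed: bool = False   # eye state seen on the previous frame

    def update(self, eyes_closed: bool, desired_offset_deg: float) -> float:
        """Call once per frame; returns the yaw offset to apply to the scene."""
        blink_started = eyes_closed and not self._was_closed
        self._was_closed = eyes_closed
        if blink_started:
            # Step toward the desired offset, clamped to the per-blink budget.
            error = desired_offset_deg - self.offset_deg
            step = max(-self.max_step_deg, min(self.max_step_deg, error))
            self.offset_deg += step
        return self.offset_deg
```

The offset changes at most once per blink, so a long blink does not let the scene drift further than the budget allows. The same gating could be applied to a hand-position offset for hand redirection.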

There are several known ways to induce a blink reflex, but one novel method is particularly well suited to VR: triggering the menace reflex by simulating a fast-approaching object. In VR, a small shadow appears in the field of view and rapidly seems to approach one’s eyes. This very brief event is hardly noticeable, yet reliably triggers a blink. There are other approaches as well, such as flashes, sudden noises, or simulating the gradual blurring of vision, but to be useful a method must be both unobtrusive and reliable.
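The "fast-approaching object" effect comes down to how quickly the object's visual angle grows. As a rough sketch (the function names and all parameter values here are assumptions for illustration, not figures from the report), the angle subtended by a disc of radius r at distance d is 2·atan(r/d), and it can be sampled once per rendered frame:

```python
import math


def angular_size_deg(radius_m: float, distance_m: float) -> float:
    """Visual angle subtended by a disc of the given radius at the given distance."""
    return math.degrees(2.0 * math.atan(radius_m / distance_m))


def looming_profile(radius_m, start_distance_m, speed_m_s, frame_rate_hz,
                    min_distance_m=0.05):
    """Per-frame visual angles for an object approaching at constant speed.

    Stops before the object would come closer than min_distance_m.
    """
    if speed_m_s <= 0 or frame_rate_hz <= 0:
        raise ValueError("speed and frame rate must be positive")
    angles = []
    frame = 0
    while True:
        distance = start_distance_m - speed_m_s * frame / frame_rate_hz
        if distance < min_distance_m:
            break
        angles.append(angular_size_deg(radius_m, distance))
        frame += 1
    return angles
```

Because the angle depends on 1/d, most of the growth happens in the last few frames, which is why such a stimulus can be very short.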

We’ve already seen saccadic movement of the eyes used to implement redirected walking, but it turns out that leveraging eye blinks allows for even larger adjustments and changes to go unnoticed by the user. Who knew blinks could be so useful to exploit?

gaze tracking is quite a powerful technology!

people have various sensitivities to small perturbations in spatial interfaces.

some TV/monitors (especially OLED) will do “pixel shifting” where the entire screen is shifted up/down/left/right a pixel or few in order to reduce fixed pattern burn in. for some content, the sudden shift can be noticed easily, others, less! if the screen could just wait until the viewer blinks of their own accord, maybe it could be less distracting.

one way of detecting low frequency PWM driven lights is to do saccade back and forth across the source. some car tail lights will have a perceivable Persistence Of Vision trail. it can also happen with DLP projectors with color wheels that don’t double time the sequence of filtering light!

there’s a powerpoint pdf somewhere from valve’s “left 4 dead” video game that discusses the “director” which will create zombies only in places the player is not currently looking. spooky! that neat document also goes a bit over other biofeedback integrations with interactive software.

a television show called “bang goes the theory” shows a Saccadic masking demo, quite an interesting magic trick! Season 1 Episode 9 perhaps it was.

yay vision!

“This very brief event is hardly noticeable, yet reliably triggers a blink.”

Er? The video literally noted that the approaching object trigger was the *most* noticeable.

Don’t Blink! Doctor Who

I found the “blink” by inserting a few black frames around 00:53 quite annoying, although I do concede those dark frames were so distracting I did not notice the other changes in the scene. (Even after reviewing and knowing where they are it seems to half elude me, so at least it partially works and it’s a somewhat interesting topic to make use of “change blindness” in such clever ways). But still, I am leaning towards the other direction. Don’t try to induce blinks, but just measure them. Simple cameras are so cheap these days that adding them to a VR set would be a nearly negligible cost. And you can also use them for other things, such as measuring in which direction the eyes are actually looking.
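As a rough illustration of the "just measure them" approach: assuming an eye camera pipeline that outputs an eye-openness value per frame (0.0 fully closed, 1.0 fully open), blinks could be picked out with two thresholds. The function name, the signal, and the threshold values are all hypothetical.

```python
def detect_blinks(openness, close_below=0.2, open_above=0.4):
    """Return (start_index, end_index) pairs for each completed blink.

    Two thresholds (hysteresis) keep noise near a single cutoff from
    registering as several separate blinks.
    """
    blinks = []
    start = None
    for i, value in enumerate(openness):
        if start is None and value < close_below:
            start = i                   # eye just closed
        elif start is not None and value > open_above:
            blinks.append((start, i))   # eye reopened at sample i
            start = None
    return blinks
```

A blink that is still in progress when the samples end is not reported. An application would more likely react at the `start` index, while the eyes are still closed.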

Another thing I’m curious about, how did this evolve in the first place? If a Lion with wide open mouth suddenly jumps you, it does not go away when you close your eyes…

If a lion suddenly jumps you, you aren’t going to forget it in the blink it might cause. But you are probably going to miss if you are a couple degrees off from the orientation you thought when you blinked with no motion that registered with your vestibular system, and you’re more likely to still have use of your eyes than if you hadn’t blinked. You’re just going to be doing less comparison of predicted model and reality, so you can, y’know, be acting to deal with the lion where it _is_ rather than where you thought it was, and you’re less likely to notice the physically unlikely thing that changed _while_ your eyes were blinking.

Science is catching up to Douglas Adams.

This is a precursor to the SEP Field.

>Another thing I’m curious about, how did this evolve in the first place?

My guess is it’s a side-effect of your brain smoothing over small differences due to, eg, walking while blinking. If your brain really noticed changes like that, or was fully aware of what you see while your gaze shifts from point to point, it would swamp the details you actually need to see. And real lions rarely teleport.

Already solved:

https://hackaday.com/2011/01/16/electrodes-turn-your-eyelids-into-3d-shutter-glasses/. :D