Ever looked at Wolfram Alpha and the development of Wolfram Language and thought that perhaps Stephen Wolfram was a bit ahead of his time? Well, maybe the times have finally caught up because Wolfram plus ChatGPT looks like an amazing combo. That link goes to a long blog post from Stephen Wolfram that showcases exactly how and why the two make such a wonderful match, with loads of examples. (If you’d prefer a video discussion, one is embedded below the page break.)

OpenAI’s ChatGPT is a large language model (LLM) neural network, or more conventionally, an AI system capable of conversing in natural language. Thanks to a recently announced plugin system, ChatGPT can now interact with remote APIs and therefore use external resources.

This is meaningful because LLMs are very good at processing natural language and generating plausible-sounding output, but whether or not the output is factually correct can be another matter. It’s not so much that ChatGPT is especially prone to confabulation, it’s more that the nature of an LLM neural network makes it difficult to ask “why exactly did you come up with your answer, and not something else?” In addition, asking ChatGPT to do things like perform nontrivial calculations is a bit of a square peg and round hole situation.

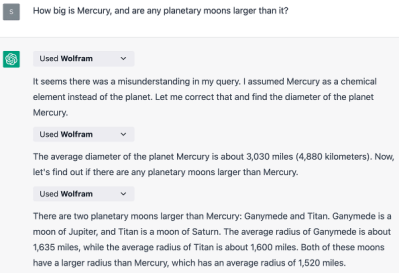

So how does the Wolfram plugin change that? When asked to produce data or perform computations, ChatGPT can now hand the task off to Wolfram Alpha instead of attempting to generate the answer by itself. Both sides play to their strengths in this arrangement. First, ChatGPT interprets the user’s question and formulates it as a query, which is then sent to Wolfram Alpha for computation. ChatGPT then structures its response based on what it got back. In short, ChatGPT can now ask for help to get data or perform a computation, and it can show the receipts when it does.
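To make that hand-off concrete, here is a minimal sketch of the three steps (interpret, delegate, respond with receipts). Everything in it is an assumption for illustration: `formulate_query`, `compute_engine`, and `answer` are hypothetical names, the "language model" step is a trivial string cleanup, and the "compute engine" is a tiny local arithmetic evaluator standing in for Wolfram Alpha. It does not use the real plugin or any Wolfram API.

```python
import ast
import operator

# Hypothetical stand-in for an external computation service. It is NOT the
# Wolfram Alpha API; it only evaluates basic arithmetic deterministically.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def compute_engine(query: str):
    """Evaluate an arithmetic expression by walking its syntax tree (no eval)."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {query!r}")

    return walk(ast.parse(query, mode="eval"))


def formulate_query(question: str) -> str:
    """Hypothetical stand-in for the language model turning a question into a query."""
    text = question.strip().rstrip("?").strip()
    prefix = "what is"
    if text.lower().startswith(prefix):
        text = text[len(prefix):].strip()
    return text


def answer(question: str) -> dict:
    """Delegate the computation, then build a reply that keeps the receipts."""
    query = formulate_query(question)   # step 1: interpret the question
    result = compute_engine(query)      # step 2: hand off the computation
    return {                            # step 3: respond and show the work
        "answer": f"{query} = {result}",
        "query_sent": query,
        "result_received": result,
    }


if __name__ == "__main__":
    print(answer("What is 12345 * 6789?"))
```

The point of the design is that the number in the reply never comes from text generation: it is computed by the delegated step, and the query that produced it is returned alongside the answer so it can be checked.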

We’ve looked at Wolfram Alpha’s abilities before, especially the educational value of its ability to show every step of its work. Stephen also makes a great case for what an effective human-AI workflow based on Wolfram Language could look like. At this writing, access to plugins for ChatGPT has a waiting list but if you’ve had a chance to check it out, let us know in the comments!

“why exactly did you come up with your answer, and not something else?”

It’s not just LLMs: humans frequently have similar trouble explaining exactly why they chose something. We often make intuitive decisions first, and then make up a plausible-sounding reason afterwards. If humans were better at bottom-up reasoning, we wouldn’t disagree so much on things like climate change. Or at least, if we had such disagreements, we should be able to quickly compare our reasoning and then one person would realize their mistake and correct it.

Some things come down not to a lack of reasoning but to a lack of sacrifice. For example, no more building on flood plains or in high-fire areas.

https://www.nytimes.com/2023/03/24/podcasts/the-daily/should-the-government-pay-for-your-bad-climate-decisions.html?showTranscript=1

Ostracus,

Forgive me if I misunderstood your reply, but can you help me get a better sense of how it is on-topic?

I had the same reaction to his comment.

Simple: a decline of “bottom-up reasoning” isn’t the only reason for people’s behavior on climate change. Sometimes it can be anything from a loss of familiarity caused by moving to a perceived decline in quality of life from the same. The podcast above also notes perverse incentives from government that reward the status quo. The part common to both the podcast and the main article, even though as far as I’m aware no one has tried it, is using AI to better articulate and manage RISK for both government and individuals as it relates to climate change.

Feature not bug.

Remember when BofA got caught with a line of code that held deposits for days on low balance accounts, in hope of collecting extra bounced payment fees? With a neural net based system there is no such line of code, just training data that is carefully curated to produce the same result.

AI goes through periodic bursts of irrational exuberance. Anybody got any ideas on who to short when reality sets in? Nvidia?

I’m not impressed by a word salad generator trained by the entire net. Even Joe Biden can spout word salad.

Can word salad write running code? Because with the Wolfram plugin, that’s what ChatGPT is doing for me. In 20 minutes without writing a line of code myself, only giving directions, it can read from a website, dissect a spreadsheet, chart extracted elements, calculate with a couple of columns of results, form a fitted statistical distribution from its data, simulate with that as its marginal distribution, accumulate simulated forward paths, give me confidence intervals on the resulting projections from the sample, and at the end cheerfully write up Wolfram Language code to reproduce the whole operation end to end, at will, on any number of future examples. The killer thing here isn’t making poems; it’s understanding human verbal directions well enough to translate them into running code. Don’t underestimate what is happening here.

It’s regurgitating code it found on Stackoverflow or Github, after matching the problem description.

Bet you had to pick the result that worked from an offered list. Just as when you look up code for yourself.

None of the problems you claim it solved are at all novel. Bob could do those in Excel (scrape some data and beat on it with stats).

Most problems that most people “solve” aren’t really novel. Most aren’t even incremental.

You’re a bit wrong in that it doesn’t just regurgitate a Stackoverflow solution. You’re also wrong in that it can’t be used for novel problems as long as you guide it along. Because it’s rare that every part of a problem is novel. I’ve heard from numerous senior software engineers that it makes a much better collaborator than a junior or even sometimes another senior engineer would be.

Bard AI can be instructed to use Wolfram Alpha when answering your questions. No need for a plugin.

No one disagrees that climate change is a thing (it’s been happening ever since the time of the dinosaurs), or more accurately that pollution is bad. What’s disagreed about is that a bogeyman is being made up and used to upend how society functions to appease various sociopaths’ power fantasies.

I eagerly await the day that my AI can unironically (and perhaps sarcastically) reply, “my source is I made it the f*** up!”

The nice thing about what he’s doing is kind of like combining a human with a calculator or a spreadsheet. The advantages of both, against the disadvantages.

Maybe don’t use the adjective “killer” ?

Mm definitely not going to ease the concerns of those terrified by the thought of these algos going full Terminator!

@Donald Papp said: “Wolfram Alpha With ChatGPT Looks Like A Killer Combo”

Well that depends; who or what are you trying to kill?

The Radio Star?

No, wait, wrong song…

Did that word “trigger” you?

Shots fired!

What would be cooler is to see Alpaca combined with GeoGebra, NaSC, Kalker, or Insect…

GPT with Mizar will be great: https://en.wikipedia.org/wiki/Mizar_system

I asked ChatGPT the Mercury question and it answered that Io, Europa, Ganymede and Callisto were all larger.

I told it Io was smaller and it apologized and agreed that with a diameter of 3,630 km, Io is smaller than Mercury with a diameter of 4,880 km.

Then I told it Io was larger, and it apologized and agreed that with a diameter of 3,640 km, Io is larger than Mercury with a diameter of 4,880 km.

So basically it will tell you something and then agree with whatever you say. I still don’t understand how I keep reading articles about these people who are outsourcing “80% of their job” to ChatGPT. What on earth do they do for work??

President of the United States.

LOL!

Well some see it like it should be seen. As a tool, not a replacement for themselves.

https://www.vox.com/technology/23673018/generative-ai-chatgpt-bing-bard-work-jobs

“Facts don’t matter, only feelings matter.”

You have obviously been asleep for the last few years :)

LLMs are like pure liberal arts majors with only the right side of their brain. The article was specifically about the integration of Wolfram Alpha, which covers up this weakness.

Consider the ability to persuade the chatbot: Teaching with a Chatbot: Persuasion, Lying, and Self-Reflection – Minding The Campus

https://www.mindingthecampus.org/2023/04/13/teaching-with-a-chatbot-persuasion-lying-and-self-reflection/

I have ChatGPT Plus plugins. With Wolfram Alpha plugin, ChatGPT can take about 75% of a civil engineer’s job. Flawless calculations with work shown.

Have also played around with generating Python code for FreeCAD. I’m less impressed with ChatGPT’s *current* drafting abilities. However, once it has its eyes… it will start taking jobs. Not outright. But by empowering some engineers to multiply their productive output, leading to a decline in demand for civil engineers… and along with that, a decrease in the market rate of productive output.

Knowledge work will be first to go. Say goodbye to doctors, engineers, programmers, and lawyers first. Let’s not kid ourselves. Manual labor will be the last to be taken over by AI-assisted robots.

Buckle up while we head to a future where Idiocracy is not a parody, but a documentary.

Now leave me alone. I’m watching “Ow my ballz”.

75% of a civil engineer’s job is ‘cookbook’. The remaining 25% is the hard part. Yes, I know engineers like to rip on each other (Easy Engineer, Glorified ditch digger, etc.). We SHOULD rip on humanities majors, but we’re too busy trying to get into their pants in college.

Happens to all engineering disciplines as they become less ‘making it up as we go along’ and more ‘applying the standard solution method’. Software engineering will be similar eventually, 2000 years or so.

You’re not wrong about productivity though. When do you think ChatGPT will get its PE/SE stamp? Never… that’s my point.

If a chatbot ever becomes a competent lawyer, society will be destroyed. Beyond a very low level, lawyers cause (or are) social cancer.

I don’t consider ChatGPT an AI at all. It’s just statistics and lies.

Check out Wolfram’s video about ChatGPT and how you can make WolframAlpha do the same thing. He explains the inner workings quite well; even an idiot like me can understand most of it.