Some professional coders are absolutely adamant that learning to program in assembly language in these modern times is simply a waste of time, and this post is not for them. This is for the rest of us, who still think there is value in knowing what is going on at a low level, and that a deeper appreciation of the machine can be developed that way. [Philippe Gaultier] was certainly in this latter camp and figured the best way to learn was to work on a substantial project.

Now, there are some valid reasons to write directly in assembler. For example, an optimising compiler would get in the way of hand-crafting the unusual code sequences used in software exploits. Creating code optimised for speed and size is unlikely to be among those reasons, because doing a better job than a modern compiler would be a considerable challenge. Some folks would follow the tried and trusted route and work towards getting “hello world!” output to the console or a serial port, but not [Philippe]. This project aimed to get a full-custom GUI application running as a client of the X11 server atop Linux, but the theory should apply to any *nix OS.

The first part of the process was to create a valid ELF executable that Linux would run. With nasm doing the assembly and the standard linker doing the linking, only a few x86-64 instructions are needed to create a tiny executable that just exits cleanly. Next, we learn how to manipulate the stack in order to set up a non-trivial system call that sends some text to STDOUT.
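To make the ELF step concrete, here is a minimal sketch that builds a tiny x86-64 Linux executable byte by byte in Python, rather than with nasm and ld as in the original write-up. It calls write (system call 1) on STDOUT and then exit (system call 60). Several choices here are assumptions for illustration only: the load address 0x400000, the hypothetical function name `build_hello_elf`, and keeping the message in the code segment instead of building it on the stack.

```python
import struct

BASE = 0x400000                # assumed load address (conventional for static x86-64 executables)
EHDR_SIZE, PHDR_SIZE = 64, 56  # sizes of Elf64_Ehdr and Elf64_Phdr
CODE_LEN = 31                  # length of the machine code below


def build_hello_elf(msg: bytes = b"hello, world\n") -> bytes:
    """Return a complete ELF64 executable that writes msg to STDOUT, then exits with status 0."""
    entry = BASE + EHDR_SIZE + PHDR_SIZE   # code starts right after the two headers
    msg_addr = entry + CODE_LEN            # message bytes follow the code
    code = (
        b"\xb8" + struct.pack("<I", 1)           # mov eax, 1    ; SYS_write
        + b"\xbf" + struct.pack("<I", 1)         # mov edi, 1    ; fd 1 = STDOUT
        + b"\xbe" + struct.pack("<I", msg_addr)  # mov esi, msg  ; buffer address
        + b"\xba" + struct.pack("<I", len(msg))  # mov edx, len  ; byte count
        + b"\x0f\x05"                            # syscall
        + b"\xb8" + struct.pack("<I", 60)        # mov eax, 60   ; SYS_exit
        + b"\x31\xff"                            # xor edi, edi  ; exit status 0
        + b"\x0f\x05"                            # syscall
    )
    assert len(code) == CODE_LEN
    total = EHDR_SIZE + PHDR_SIZE + len(code) + len(msg)

    # e_ident: magic, ELFCLASS64, little-endian, version 1, System V ABI, zero padding
    ident = b"\x7fELF" + bytes([2, 1, 1, 0]) + bytes(8)
    ehdr = struct.pack(
        "<16sHHIQQQIHHHHHH",
        ident,
        2, 62, 1,                 # e_type ET_EXEC, e_machine EM_X86_64, e_version
        entry, EHDR_SIZE, 0, 0,   # e_entry, e_phoff, e_shoff (none), e_flags
        EHDR_SIZE, PHDR_SIZE, 1,  # e_ehsize, e_phentsize, e_phnum
        0, 0, 0,                  # e_shentsize, e_shnum, e_shstrndx (no sections)
    )
    # One PT_LOAD segment mapping the whole file read+execute at BASE
    phdr = struct.pack(
        "<IIQQQQQQ",
        1, 5,                     # p_type PT_LOAD, p_flags PF_R | PF_X
        0, BASE, BASE,            # p_offset, p_vaddr, p_paddr
        total, total, 0x1000,     # p_filesz, p_memsz, p_align
    )
    return ehdr + phdr + code + msg
```

With the default message, the file comes to 164 bytes. Write the returned bytes to a file, mark it executable with `chmod +x`, and run it on x86-64 Linux; it should print the message and exit with status 0.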

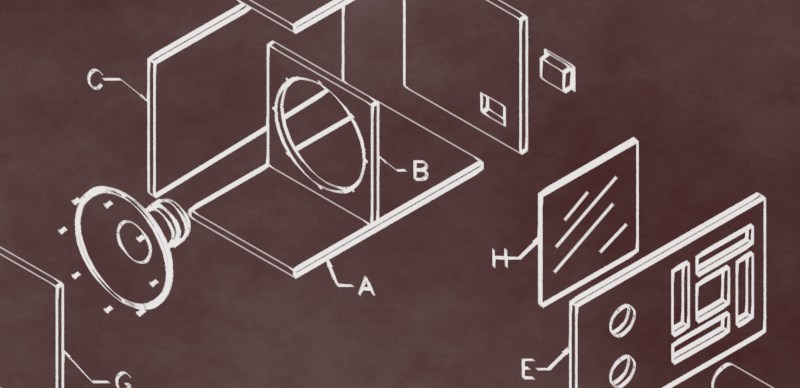

To perform any GUI actions, we must remember that X11 is a network-orientated system, in which our executable is a client connected to the server via a socket. In the simple case, we create a Unix domain socket and connect it to the local X server's socket path. That is just a matter of populating the sockaddr_un data structure on the stack and calling the connect() system call.
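Here is a minimal Python sketch of that step. It assumes Linux, where AF_UNIX is 1 and `sun_path` is 108 bytes, and it assumes the conventional `/tmp/.X11-unix/X0` socket path for display :0. `pack_sockaddr_un` mirrors the bytes an assembly version would place on the stack, and `connect_to_x` shows the equivalent high-level call. All function names are hypothetical.

```python
import socket
import struct

AF_UNIX = 1          # value of AF_UNIX on Linux
SUN_PATH_LEN = 108   # size of sun_path in Linux's struct sockaddr_un


def x11_socket_path(display: int = 0) -> str:
    """Conventional location of the local X server's socket for display :N."""
    return f"/tmp/.X11-unix/X{display}"


def pack_sockaddr_un(path: str) -> bytes:
    """Lay out a struct sockaddr_un as raw bytes, as the assembly builds it on the stack."""
    raw = path.encode()
    if len(raw) >= SUN_PATH_LEN:
        raise ValueError("path too long for sun_path")
    # sa_family_t (2 bytes, little-endian as on x86-64) then a NUL-padded 108-byte path
    return struct.pack(f"<H{SUN_PATH_LEN}s", AF_UNIX, raw)


def connect_to_x(display: int = 0) -> socket.socket:
    """High-level equivalent: Python builds the same structure internally."""
    sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    sock.connect(x11_socket_path(display))
    return sock
```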

Now that the connection is made, we can follow the usual X11 route of creating client IDs and then allocating resources using them. Next, a font is opened, a graphics context using that font is created, and then the window itself. Finally, after mapping the window, something will be visible to draw into with subsequent commands. X11 is a low-level GUI system, so there are quite a few steps to create even the simplest drawable object, but this also makes it quite simple to understand and thus well suited to such a project.
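Some of the byte-level messages behind those steps can be sketched in Python. The sketch assumes little-endian byte order (`'l'`), protocol version 11.0, and the request layouts from the X11 protocol specification. The helper names are hypothetical. `IdAllocator` is a simplified scheme that ignores running out of IDs. Only MapWindow is shown, not the full OpenFont/CreateGC/CreateWindow sequence.

```python
import struct


def pad4(n: int) -> int:
    """Number of padding bytes needed to round n up to a multiple of 4."""
    return (4 - n % 4) % 4


def setup_request(auth_name: bytes = b"", auth_data: bytes = b"") -> bytes:
    """X11 connection setup request: byte order 'l', protocol 11.0, optional auth."""
    header = struct.pack("<BxHHHHxx", ord("l"), 11, 0, len(auth_name), len(auth_data))
    return (header
            + auth_name + bytes(pad4(len(auth_name)))
            + auth_data + bytes(pad4(len(auth_data))))


class IdAllocator:
    """Hand out client resource IDs from the base/mask sent in the server's setup reply."""

    def __init__(self, base: int, mask: int):
        self.base, self.mask, self.counter = base, mask, 0

    def next_id(self) -> int:
        self.counter += 1
        # Only bits inside the mask may vary; they are ORed onto the base
        return self.base | (self.counter & self.mask)


def map_window_request(window_id: int) -> bytes:
    """MapWindow: opcode 8, one unused byte, length 2 (in 4-byte units), window ID."""
    return struct.pack("<BxHI", 8, 2, window_id)
```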

We applaud [Philippe] for the fabulous documentation of this learning hack and can’t wait to see what’s next in store!

Not too long ago, we covered Snowdrop OS, which is written entirely in X86 assembly, and we also found out a thing or two about some oddball X86 instructions. We’ve also done our own Linux assembly primer.

I disagree with the comment about speed and modern compilers. We coded in assembler and could beat the compiler even at high optimization levels. An example is a routine with multiple exit points. The compiler would put a single exit point at the end of the code and have jmp instructions to it. The problem was that the exit code would then be in a different cache line from the most-used flow through the code. Keeping that exit with the most-used code gave a performance boost. Keeping the performance-critical part of the code in a single cache line is something a compiler could not do, because it does not know which code is performance-critical.

Modern compilers support profile guided optimization, which should typically cover the case you mentioned.

Also, stuff like WPO/LTO is able to propagate values across function borders, which helps the compiler to determine the hottest code path statically.

Are there code fragments where assembly beats compiler-generated code? Yes. But in most cases, as the article stated, it is a “considerable challenge”.

I can’t really give a whole dissertation on optimizing “assembly” here; it just gets waaaaay too long way too fast, and no one would read it anyhow. The short answer is that ISAs don’t match processor architectures, and haven’t for quite some time. There are other things compilers should be able to understand and optimize at a basic level. Examples are superscalar architectures, and the availability of extended instruction sets that can be used for “regular” processing alongside the “regular” instruction set, in parallel, assuming no data dependencies. (And the compiler should be able to find data dependencies far more easily than we can.) But they don’t. You’d have to ask a compiler guy why this is. I’m not going to go find examples, but there was an SSE-optimized matrix multiply with good comments online. It explained a lot about available load units, independent data, superscalar issuing of SSE instructions, etc. It’s not the be-all and end-all of optimization, and it’s dated. However, it will give you a good idea of what optimized code looks like. Honestly, that algorithm probably doesn’t benefit much from more recent advances, so it may still be as optimal as it can be. Anyhow, you’ll notice a stark difference between it and what the compiler generates, even in profile-guided mode. Again, it comes from understanding processor architecture vs. ISA.

i was thinking the same thing. the cases where you are going to outperform a compiler are really limited in scope but it’s totally possible.

what’s hard is sustaining that kind of effort across a large number of lines of code. in order to make it writable (let alone readable or maintainable), you will have to make some simplifying assumptions that will make your asm code much more constrained than the compiler’s, and your code will be slower. in environments where everything is written in ASM, it is an odd trade-off. they tend to be much more aware of some resources (some ASM programmers hate malloc and hate stacks, for example), so they can wring some speed out just by bringing that different mentality. but overall, if you’re writing tens of thousands of lines of it, the code winds up being so clumsy that it is much slower than compiled code. that’s certainly been my experience when i am forced to write a big pile of ASM as well.

but on any given inner loop or critical section, i think most people could beat most compilers if they kept at it long enough. if not just because the compiler generally creates it once and then lets go of it, while you can iterate towards ‘optimality’. there are exotic things like profile-guided optimization but it’s pretty much a red herring for most of us.

Coding like this has a high cost. I’m convinced that while you can beat the compiler in some cases, on an average current program the task is just too large for you to optimize everything, and the compiler will just do a better job… optimizing a specific code fragment is good when needed, but just using the compiler for all the rest is better and cheaper…

I would agree that a “professional coder” can have a career without needing to know assembly. But knowing assembly brings a different level of understanding that adds depth to the code one produces. I’d argue it is a level beyond just “professional coder”.

that’s pretty low ;)

BIOS and device driver writers.

It’s easy to get by without writing assembly. But eventually you’ll run into a problem that requires reading assembly to solve. (Though you can hope somebody else will solve it for you.)

Assembly language is how the CPU is coded, and building your code with a knowledge of assembly language is vital for a professional coder.

A CPU is not coded in assembly. A CPU is coded in opcodes, i.e. machine instructions. Opcodes are voltage values that are handled digitally and are usually represented as binary or hex values. Assembly is a programming language that uses mnemonics to represent those opcodes, but it must still be assembled, which translates the mnemonics into the actual opcodes. This process allows for emulated instructions (pseudo-instructions), which some CPUs have. An emulated instruction doesn’t have its own opcode. It exists to make the assembly easier to read and is instead translated into an opcode shared with another, similar instruction, so mnemonics and opcodes do not have a 1-to-1 relationship. And, as a professional coder who does know assembly and has worked in it professionally, I would argue that it is not vital. You can have a long and healthy career as a programmer without knowing assembly. It really depends on what type of software you work on. Knowing the details of exactly how a computer works can make you a better programmer and can allow you to write more optimized code, but it is not vital for all programmers.
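To illustrate the point that mnemonics and opcodes are not one-to-one, here is a toy assembler fragment in Python. The comment names no ISA, so RISC-V is an assumed example. In RISC-V, `nop` and `mv` are pseudo-instructions that assemble to the same encoding as the real `addi` instruction. Only a handful of registers are mapped, and the function names are hypothetical.

```python
OP_IMM = 0x13  # RISC-V opcode for register-immediate ALU ops (funct3 000 = addi)

REGS = {"zero": 0, "ra": 1, "sp": 2, "a0": 10, "a1": 11}


def encode_addi(rd: int, rs1: int, imm: int) -> int:
    """I-type layout: imm[11:0] | rs1 | funct3=000 | rd | opcode."""
    return ((imm & 0xFFF) << 20) | (rs1 << 15) | (0 << 12) | (rd << 7) | OP_IMM


def assemble(mnemonic: str, *ops: str) -> int:
    if mnemonic == "addi":   # a real instruction with its own encoding
        return encode_addi(REGS[ops[0]], REGS[ops[1]], int(ops[2]))
    if mnemonic == "nop":    # pseudo-instruction: addi zero, zero, 0
        return encode_addi(0, 0, 0)
    if mnemonic == "mv":     # pseudo-instruction: addi rd, rs, 0
        return encode_addi(REGS[ops[0]], REGS[ops[1]], 0)
    raise ValueError(f"unsupported mnemonic {mnemonic}")
```

For example, `mv a0, a1` and `addi a0, a1, 0` both produce the word `0x00058513`.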

“not vital”…. It all depends on the type of programmer you are. If you’re working in VB on M$ products, for example on business-type apps, probably not. For embedded and real-time programmers, it is usually a good thing to know about the processor you are working on….

“Opcodes are voltage values that are handled digitally and are usually represented in binary or hex values.” is not how many modern processors work. In many modern processors, what one would call machine language is translated by the processor into microcode and then further processed, dispatched, pipelined, executed, …, not necessarily in this particular order. Even the voltage values statement is archaic.

My first jobs involved coding for very small hardware environments based on Z80 and 6502 processors. Learning hexadecimal math and processor instruction opcodes was pretty much mandatory for debugging code generated by a cross assembler.

In the 21st century, it is possible to get a job writing general purpose programs in Visual Studio or another high level integrated development environment, treating the target system as a box with no idea of what is going on inside. Such a programmer can probably be productive and do a decent job.

Having worked directly with hardware and symbolic assemblers has influenced the way I write high-level language programs, anticipating what a compiler will generate. I look at it as similar to learning an additional language in high school besides one’s native tongue. It likely isn’t necessary to know more than one’s native tongue for most jobs, but that additional study makes one a better communicator through a better foundation in grammar, structure, general linguistics, etc. Those skills probably make one more productive and more apt to advance to a higher career level.

this project makes my skin crawl. interfacing with X11 is such an exercise in idiomatic function call code. it’s the sort of thing compilers excel at. if i took on this task, i’d learn assembly by writing a dumb compiler instead :)

Yes, writing parts of X11 in assembly might make sense, but writing a mere GUI client in it makes far less sense.

In the end, it sounds like a hobby project, so kudos to the programmer for picking an esoteric project and actually completing it.

The best reason to learn x86 assembly is so you can learn to hate the architecture. Then you can move on to something else (anything else really), but ARM is a good example of something nicer.

A person absolutely should learn assembly language, and preferably for many different architectures. You really can’t say you know and understand computers without a grasp on what goes on at that level.

So back to coding in Haskell and Kotlin for the time being …

just don’t learn assembly and then you can use whatever architecture is handy at your form factor and budget :)

I agree with “Best way to learn is a substantial project”. Back in college in the 80s, we learned VAX assembly as an exercise, but that was it. When I started ‘work’ work, we used assembly for x86 and 68XXX drivers. A couple of years ago, I started digging into ARM assembly and took an old text game and re-created it in ARM64 assembly. Best way to ‘learn’. Assembly is ‘fun’ (in my mind). It may not be ‘productive’ for real-world work, because it is not cost-effective and is also not portable like C or Python. Nonetheless, there is nothing like getting your fingers dirty, so to speak, writing assembly code. About 15 years ago, when I was a supervisor and hiring people, it was amazing to see how ‘empty’-headed some of the graduates were when it came to assembly. Examples were converting hex to binary and octal, hex to decimal, or adding hex numbers. In my business you needed to know those things, say, when looking at a hex data stream (protocol) you were analyzing, or adding a feature to a driver. They could write Java and C++ though…. Way different CS degree than mine (1986).
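The base conversions described above take only a few lines in Python. This is an illustrative sketch. The helper names are hypothetical, and the sample hex dump, with a big-endian field at a chosen offset, is made up for the example.

```python
def show_bases(value: int) -> dict:
    """Render one value in hex, 8-bit binary, octal and decimal."""
    return {
        "hex": f"{value:#x}",
        "bin": f"{value:#010b}",  # width 10 = '0b' prefix + 8 bits
        "oct": f"{value:#o}",
        "dec": str(value),
    }


def field_from_hex_dump(dump: str, offset: int, size: int) -> int:
    """Read a big-endian integer field out of a hex dump such as '01 00 2a ff'."""
    data = bytes.fromhex(dump)
    return int.from_bytes(data[offset:offset + size], "big")
```

For example, adding 0x3F and 0x1C gives 0x5B. That is 91 in decimal, 0o133 in octal and 0b01011011 in binary.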

Things are not built the way they used to be.

Yes, reflow ovens, etc., largely replaced soldering irons.

Isn’t “Roller Coaster Tycoon” written with assembly language?

I think writing in assembly language is good for making a video game that can run on every console generation and computer…

Maybe they should have written “Cyberpunk:2077” in assembly language!

As a videogame developer… This is a really horrible idea ;)

Um… what? That’s… the exact opposite. One of the big reasons why languages were invented was to make code portable across architectures. Assembly is not.

Maybe if they did write Cyberpunk in assembly, the game would’ve actually worked.

You usually don’t write in assembly with hopes to beat a compiler, that’s just silly.

Assembly is very useful for specific cases, especially when it comes to security. For instance, if you want to do things like API hooking via trampolines, you must use assembly. If you want to walk back the stack, assembly again. You want to use a specific MSR? Assembly to the rescue.
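A hook commonly overwrites the start of the target function with an absolute jump to the replacement code. The Python sketch below only builds the bytes of one such x86-64 sequence, `mov rax, imm64; jmp rax`. It leaves out the OS-specific parts: patching memory, saving the overwritten instructions, and jumping back to them (the trampoline proper). The use of rax as a scratch register and the function name are assumptions for illustration.

```python
import struct


def abs_jump_stub(target: int) -> bytes:
    """x86-64 absolute jump: mov rax, imm64 ; jmp rax (12 bytes, clobbers rax)."""
    mov_rax = b"\x48\xb8" + struct.pack("<Q", target)  # REX.W + B8: mov rax, imm64
    jmp_rax = b"\xff\xe0"                               # FF /4, ModRM 0xE0: jmp rax
    return mov_rax + jmp_rax
```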

Another reason for learning assembly is that it helps with understanding the machine’s architecture and how things work. Nowadays, a LOT of developers don’t know how a string is represented in memory. If you ask them to reverse one, they can’t do it, even though it’s trivial. They also don’t know how the stack works, how processes interact with each other, and a plethora of other things.
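For the string example, here is a minimal Python sketch. It models a C-style, NUL-terminated byte string in memory and reverses it in place with two indices, the way one would use two pointers in C or two registers in assembly. It assumes single-byte (ASCII) characters; multi-byte UTF-8 text would need more care. The function names are hypothetical.

```python
def c_strlen(buf: bytearray) -> int:
    """Count bytes up to the NUL terminator, like C's strlen."""
    n = 0
    while buf[n] != 0:
        n += 1
    return n


def reverse_in_place(buf: bytearray) -> None:
    """Swap bytes from both ends toward the middle, leaving the terminator in place."""
    left, right = 0, c_strlen(buf) - 1
    while left < right:
        buf[left], buf[right] = buf[right], buf[left]
        left += 1
        right -= 1
```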

You either write assembly if you need to (as you say) … or for fun. Some of us bit heads enjoy the challenge of writing a project in assembly.

Most people don’t care about the architecture or how a stack works… They care more about getting a light to blink with Python, or Scratch, etc. … Which is perfectly fine. Or, at a higher level, that the computer plays their game or runs Excel. There is a level of understanding for everyone :) .

No software professional (engineer, programmer, developer) would ever call themselves a “coder”. Open to changing that language a bit?

Why not? It’s always been a term applied to us professional programmers … Doesn’t bother me. Too much political correctness already … no need to add more…

To me, computer programming is a means to solving problems AKA accomplishing a task. Just a tool that I learned to use. I never much cared if I was referred to as a coder, software developer, programmer, consultant, engineer, computer guy, genius, idiot, or whatever. I guess I’ve been all of those things.

Though I’m not a fan of labels or titles, I’ve been paid for doing those things/that thing so I’d have to label myself a software professional.

In a similar vein, using an assembler or assembling by hand is also a means to an end.

There are cases where using assembler or machine language is an inane exercise in futility left to hobbyists with copious amounts of time and dedication, as there are cases where doing so is absolutely crucial, and some cases in-between.

Knowing assemblers does not make one a programming deity, nor does complete ignorance of them make one a cretin.

Can knowing assembler make you a better programmer, sure. Is it guaranteed, absolutely not. Is it a waste of time for nearly all programmers, absolutely.

I found the article very biased. While arrogantly complimentary to compilers, it doesn’t give credit to the compiler programmers and designers, often assembly experts themselves, who make compilers perform the amazing optimizations they do automatically.

My personal approach for the areas that matter would be to code most of almost any project in an appropriate (cost, efficiency requirements, portability, …) high level language and, only if warranted, review the assembler of the critical sections for any possible improvements. Of course if it was a low level compiler or assembler (the program) I’d likely end up coding significant sections in assembler (the language) and in machine language.

No software professional should be called a software engineer or architect. Some may be coders, some may be designers, others may be programmers, but calling yourself an engineer or architect is just arrogant, just like calling the janitor a sanitary engineer.

MenuetOS, anyone?

I have heard of MenuetOS, but I’ve been tinkering with BMOS (Bare Metal OS), which according to its author was originally inspired by MenuetOS.