With the invention of the first LED featuring a red color, it seemed only a matter of time before LEDs would appear in other colors. Indeed, green and other colors soon joined the LED revolution, but not blue. Although some dim prototypes existed, none of them were practical enough to be considered for commercialization. As covered in a recent [Veritasium] video, the core of the problem was that a material with the right bandgap and other desirable properties remained elusive. It was in this situation, at the tail end of the 1980s, that a young engineer at Nichia in Japan found himself pursuing a solution to this conundrum.
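To put rough numbers on the bandgap problem: an LED emits photons with an energy close to its semiconductor's bandgap, and photon energy maps to wavelength through λ ≈ hc/E. The sketch below assumes hc ≈ 1239.84 eV·nm and treats emission as sitting exactly at the bandgap, which is a simplification; the three example wavelengths are illustrative picks, not figures from the video.

```python
# Approximate conversion between photon energy (eV) and wavelength (nm).
# Assumption: lambda = h*c / E with h*c ~= 1239.84 eV*nm. Real LEDs emit
# slightly off the bandgap value, so treat the results as ballpark figures.
HC_EV_NM = 1239.84


def wavelength_nm(energy_ev: float) -> float:
    """Wavelength (nm) of a photon with the given energy (eV)."""
    return HC_EV_NM / energy_ev


def energy_ev(wavelength: float) -> float:
    """Photon energy (eV) for a given wavelength in nanometres."""
    return HC_EV_NM / wavelength


# Illustrative wavelengths for red, green and blue emitters.
for name, nm in [("red", 650.0), ("green", 530.0), ("blue", 450.0)]:
    print(f"{name:5s} {nm:.0f} nm needs a bandgap of about {energy_ev(nm):.2f} eV")
```

Blue at 450 nm works out to roughly 2.76 eV versus about 1.91 eV for red, which is why it called for a noticeably wider bandgap than red or green.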

Although Nichia was struggling at the time due to competition in the semiconductor market, its president was not afraid to take a gamble on a promise, which is why this young engineer – [Shuji Nakamura] – got permission to try his wits at the problem. This included a year-long study trip to Florida to learn the ins and outs of a new technology called metalorganic chemical vapor deposition (MOCVD, also known as metalorganic vapor-phase epitaxy). Once back in Japan, he got access to a new MOCVD machine at Nichia, which he quickly set about heavily modifying into what is now the well-known two-flow reactor design, which improves the yield.

![A blue LED held up by its inventor, [Shuji Nakamura].](https://hackaday.com/wp-content/uploads/2024/02/shuji_nakamura_with_blue_led.jpg?w=400)

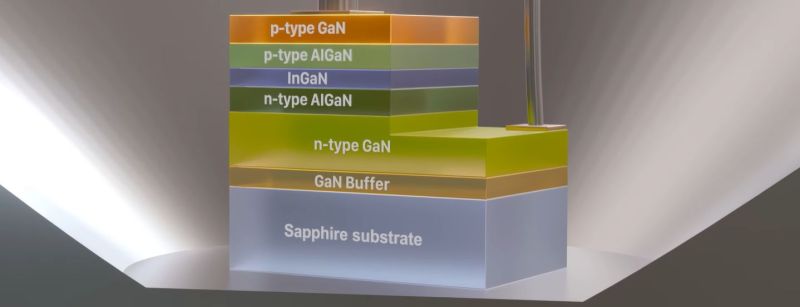

Ultimately, through an annealing process and the use of an indium gallium nitride-based electron well (with an aluminium gallium nitride ‘hill’ as the barrier), he managed to boost the brightness of the LED from an early 42 µW to 1,500 µW by the time it was presented to the public in 1992. Despite this success and the immense boost it gave Nichia’s fortunes, [Shuji] never got acknowledgement from his employer, nor a share of the revenue.

Although [Shuji] is now clearly happily working in the US on semiconductor research and even started his own nuclear fusion undertaking, it remains a bitter note that the inventor of blue LEDs and thus LED lighting had to go through such an ordeal. The recognition from [Veritasium]’s interview and documentary is definitely welcome to somewhat right a historic wrong.

I agree: Dr. Nakamura isn’t recognized enough for his efforts and his ultimate breakthrough despite the forces and odds against him. His Nobel prize was well-earned.

Nichia Corporation, on the other hand, comes across very poorly in the video.

A bonus is that in the comments on the video, [Curious Marc] posted that at the time he was working in one of the labs racing to develop the GaN blue LED, and was amazed by the performance of one of Dr. Nakamura’s prototypes they tested, which far outperformed their own efforts.

Inventing is a gamble, and inventors love it.

Doesn’t mean it was strategically correct.

Think of the poor family and the lottery ticket.

“Doesn’t mean it was strategically correct.”

In the semiconductor world, if you want to be anything more than a bottom-of-the-barrel second source for other companies’ successful parts, invention and its risks must always be the strategically correct move.

Nichia wasn’t a “poor family and a lottery ticket”, they were a failing company that wasn’t innovating. There was no other real strategy to dig themselves out other than to innovate.

The company was still doing research, just not on blue LEDs.

Your reasoning depends on him having achieved the miraculous, which makes it just a “lottery ticket”.

Worse still, Nakamura was gambling with SOMEONE ELSE’S MONEY.

A poor family might think they have no hope and might as well spend their last £1 on a scratch card. Not a sensible decision unless you like gambles.

No, they weren’t. The change of management came with an almost total cut to R&D, like at many modern companies. Why? Because they see innovation as something that investors don’t want. Nakamura had a mandate from the person who hired him and followed through on it despite insufficient funding.

Re: Nichia Corporation

I understand owning all the IP your employee creates from a corporate standpoint, and the fact you’re covering risk for the potential reward.

But I do agree, Nichia looks bad from this. In my opinion, if an employee makes a significant contribution to driving a revenue stream, you compensate them for that above and beyond their salary. That’s just being a good employer. He should have been given an executive position with share options, but that’s just me.

I doubt that there’s much correlation between being a good engineer or scientist and being a good manager.

Zero, really. But a solid raise, appointment to a position that reflected his contribution, and a team would have been more than enough for him: all the things he has now.

The world is rife with examples of company leaders who lacked the vision to support the visionaries they had on their staff. There are, of course, even more examples of companies who lacked the insight to capitalize on the product leads they started with. Having worked for Motorola for 30 years, I saw numerous examples of that first hand once Bob Galvin retired and left the company in the hands of lesser minds.

As a fellow ex-Motorolan from that era, I can strongly agree. They lost their focus and spent money chasing fields which were not as profitable as previous ones.

From my visit to the Motorola Museum in Schaumburg, IL, the takeaway for me was obvious. Motorola got into a new technology, found a way to commercialize it, and made a ton of money. They plowed that back into the cycle. Once the field became a battle of manufacturing volume, they got out and moved on. This worked up through cell phones, where they just *stopped*.

LMR (land mobile radio) had already stopped, but it was still lucrative because of the military/government contracts–though it wasn’t the big cash cow it had been and it tied up a significant amount of the company’s resources to keep going–and it prevented growth in other fields.

It just stagnated to a stop and we all know how that ended.

More OT, I followed the development of the blue LED from the beginning and I’m well aware of the role of Nakamura-san and I am very much willing to give him the respect he deserves for his efforts. Blue LEDs have probably been the most impactful technology in a few decades. The impact it’s had on lighting–bringing power efficiency–is gigantic. White LEDs are possible because of his work. They’re not only *possible*, but they’re better than existing white light sources.

Same kind of cycles happen here on this side of the pond, previously wildly successful organisations fall apart because management get stuck monitoring profit/productivity and market share while failing to realise that letting creative engineers be creative is what helped make them great in the first place.

Inventors in the UK have an image of being men (nutjobs) in sheds (and some are, Trevor Baylis for example), but we have some of the very best research and development facilities, and some of the best engineers in the world start their careers here before being tempted offshore by companies and governments willing to employ and fund them.

Ditto with government and financial establishments: the UK lost out on so many technological innovations because the inventors couldn’t get the funding and support to bring their work to market, so it got bought out by companies from other countries.

You gotta gamble to innovate.

I need to think about this more, but I suspect that any company that lasts more than 10 years does so because the risk-averse people have taken over control of the company. Startups innovate and mostly fail, huge companies largely subsist on buying innovation rather than funding it because of their risk aversion, and I suspect the transition from willing to fail, to risk averse, is because of a change in who is running the company and how their vision of the future changes.

Almost like investors shouldn’t be management, because quarterly profits are a conflict of interest for the long term success of any organisation.

The world is also rife with companies failing as they pursue pipe dreams.

Invention is pursuing a pipe dream and hoping you get there before you run out of money.

I worked for two companies that illustrate both sides of the coin. Collins Radio went belly up because Art Collins spent all of his money chasing computers (where they were woefully behind the curve) instead of focusing on his core businesses. Motorola, on the other hand, invented cell phones and was the industry leader until the conversion to digital. The guy running that division (I can picture him but don’t remember his name) insisted upon milking analog instead of listening to those around him. It eventually got him fired, but by then it was too late.

Sure, like everything in life moderation seems to be the way.

Companies tend to get into the mindset that everyone is replaceable and talent is easily found. Because of that, they don’t compensate people fairly enough to keep them from walking out the door, often with knowledge and expertise that is not easily replaced.

It’s like an NFL team that strikes it right and drafts a Hall of Fame-caliber player, but then decides to let them walk because “well, lightning struck once.” It’s a little easier to see these gifted people in athletics because of the stat tracking, but the same thing very much exists in business: some people are exceptionally talented.

> The world is rife with examples of company leaders who lacked the vision to support the visionaries they had on their staff. There are, of course, even more examples of companies who lacked the insight to capitalize on the product leads they started with.

Apple in the ’90s is such a good example of both of these. They had thousands of engineers working on various products that ran the gamut from “pipe dream” to “surefire win”, and yet leaders from middle management all the way up to the executives were simply unable to tell them apart, and on the ones they did try to pursue, they failed hard on execution. The book Apple (https://www.amazon.com/Apple-Jim-Carlton/dp/0887309658) from 1998 is dated now, but still very good if you’re interested in that era.

Steve Jobs was a flawed human in many ways (aren’t we all), but one thing he excelled at was identifying a product he believed in and pushing it as far as he could, even to a fault.

One thing I absolutely loved about this story is that he’s clearly brilliant, able to think of clever workarounds and advance the fundamental science involved, but he was also able to take a step back and realize the solution to the well problem was “just stuff more in there and hope”.

A lot of very smart and creative people have trouble with that stepping back to see the simple or brute forced solution.

I recently watched a video on Dr Nakamura. He’s a brilliant man, and there’s no doubt he earned his Nobel. A true bulldog of a scientist, who wouldn’t let go of an idea, and used all his skills, professional and mechanical, to make it real. We can all learn from his persistence!

Dude became a laureate when I was an undergrad at his university. I recall stories about how he developed it, which obviously went on to make billions of dollars of profit, but as was (is?) common, he didn’t personally profit, the IP being the property of his employer. Then a lot of legal battles. The wiki page does an OK job of describing it now, but at the time on campus, before the internet as we know it, the rumor mill was insane and it was impossible to get reliable news. Good times.

Does anybody know the main reason why UV-C LED efficiency remains within single-digit percentages? The latest 250–280 nm UV-C LED from Nichia eats up 8 W for a meager 200 mW of radiant output… is it the inherent non-transparency of the AlGaN crystals or something else?
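For scale, the figures in that question correspond to a wall-plug efficiency (radiant output divided by electrical input) of about 2.5%. A sketch of the arithmetic, assuming the 8 W is the electrical input to the LED package and the 200 mW is its total radiant output:

```python
# Wall-plug efficiency: optical (radiant) output power divided by electrical input.
# Assumption: 8 W is the electrical input to the LED package and 200 mW its total
# radiant output, as quoted in the comment; neither figure is verified here.
def wall_plug_efficiency(optical_w: float, electrical_w: float) -> float:
    """Return the fraction of electrical input power emitted as light."""
    if electrical_w <= 0:
        raise ValueError("electrical input power must be positive")
    return optical_w / electrical_w


eff = wall_plug_efficiency(optical_w=0.200, electrical_w=8.0)
print(f"efficiency: {eff:.1%}")  # 0.200 / 8.0 = 0.025, printed as 2.5%
# Power not emitted as UV light; it ends up mostly as heat in the package.
print(f"not emitted as light: {8.0 - 0.200:.1f} W")
```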

I’ve been wishing someone would come up with a true blue LED, and then the video mentions 450 nm. It seems like all I see in product specifications is 560 nm, which to me appears turquoise, not blue.

A quick Google search shows https://www.jaycar.com.au/blue-5mm-led-2500mcd-round-clear/p/ZD0180 which lists it at 469 nm. So clearly blue LEDs with the right wavelength do exist.

DigiKey shows over 1,000 LEDs, over 800 of which are in the 428–476 nm range, with intensities from 3 mcd up to 15,000 mcd. Your wish was granted quite a while ago…

So much effort for a blue LED.

And still, for me blue LED indicators on devices look much worse than any other color for some reason.

I avoid buying any device with blue LED indicators.

Blue LEDs are “only” the base layer for all the phosphor-based LEDs, like the white ones…

I kinda hate those white LEDs. Yes, they are a simple, cheap and efficient way to light a room, but they still feel unnatural due to their spectrum being very different from “proper” black body radiation (sunlight, incandescent lighting). Luckily not as bad as neon light and there are higher quality LEDs, but they are hard to get and expensive.

You lose efficiency but high CRI LEDs do exist. They work by having a greater variety of phosphorus with overlapping emissions.

Yes, try Yuji high CRI https://store.yujiintl.com/collections/high-cri-led-technology

*phosphors

All white LEDs have a blue LED at their base. The “phosphors” make the difference. There are very good “sunlike” white LEDs that are near-indistinguishable from the sun coming in through the window. It’s just that the cheap “blue peak” phosphors are way cheaper and a bit more energy efficient. Look for “high CRI” (90 or above) LEDs.

Another effect at play is the fact that lumen output is a measure of perceived brightness, so manufacturers can boast higher lumen outputs by concentrating the energy on green-yellow and blue. A low-CRI bulb can therefore appear brighter and boast higher “efficiency”, which gives it an edge in the energy ratings game.
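The effect is easy to see numerically: lumens are radiant power weighted by the eye's photopic sensitivity curve V(λ) and scaled by 683 lm/W at its peak. A rough sketch, assuming a single-Gaussian approximation of V(λ) in place of the tabulated CIE curve, and two hypothetical test spectra carrying the same 1 W of light:

```python
import math


# Assumption: single-Gaussian approximation of the CIE photopic curve,
# V(lambda) ~= 1.019 * exp(-285.4 * (lambda_um - 0.559)**2), lambda in micrometres.
# It peaks slightly above 1 (an artefact of the fit) and differs from the CIE table in the tails.
def photopic_v(nm: float) -> float:
    um = nm / 1000.0
    return 1.019 * math.exp(-285.4 * (um - 0.559) ** 2)


def lumens(spectrum: dict[float, float]) -> float:
    """Luminous flux of a sampled spectrum given as {wavelength_nm: radiant watts}."""
    return 683.0 * sum(watts * photopic_v(nm) for nm, watts in spectrum.items())


def gaussian_spectrum(center_nm: float, width_nm: float, total_w: float,
                      step_nm: float = 5.0) -> dict[float, float]:
    """Hypothetical test spectrum: a Gaussian band over 380-780 nm, normalised to total_w."""
    count = int((780.0 - 380.0) / step_nm) + 1
    nms = [380.0 + i * step_nm for i in range(count)]
    weights = [math.exp(-0.5 * ((nm - center_nm) / width_nm) ** 2) for nm in nms]
    scale = total_w / sum(weights)
    return {nm: w * scale for nm, w in zip(nms, weights)}


# Same 1 W of radiant power, distributed differently.
peaky = gaussian_spectrum(center_nm=560.0, width_nm=30.0, total_w=1.0)   # piled up near the eye's peak
broad = gaussian_spectrum(center_nm=580.0, width_nm=120.0, total_w=1.0)  # spread across the spectrum

print(f"peaky: {lumens(peaky):.0f} lm, broad: {lumens(broad):.0f} lm")
```

The peaky spectrum scores considerably more lumens for the same radiant watt, which is how a spectrum that concentrates energy near the eye's peak sensitivity can post a better lm/W figure than a broader, better-rendering one.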

Although all common white LEDs are blue LEDs with phosphors, not all LED bulbs are that technology. A few are available with one type of LED for each primary color, assuming you’re not a tetrachromat.

Plain RGB LEDs have a terrible CRI rating, since the diode itself is a fairly narrow-band emitter. It’s not a continuous smooth spectrum, which is what the CRI measures. The only way to get that is by either having many, many slightly different diodes to span the spectrum (which is not done for cost reasons) or using phosphors to smooth it out.

The common decent white LED has blue and red diodes, and a phosphor to span the gap in between. The crap LEDs omit the red diodes.

The LEDs that use a separate red LED are doing it to score better without needing a better phosphor; the actually good ones can have a high enough red component without that. Unless you mean wide-spectrum bulbs, like the stuff with a bit of IR and UV?

97 CRI LED lamps already exist these days, which is extremely close to the 98 of a halogen incandescent.

Halogens were 100 unless you did something weird with them.

There are LEDs that get to 99 with the extensive use of phosphors and violet LEDs to push the blue spike just to the edge of the visible spectrum. That way almost all the visible light comes from the phosphors and there’s no strong blue spike.

It would be nice if regular consumer LED products were 95 CRI, but those are so much more expensive that the common household light still falls somewhere between 75 and 85, which is slightly worse than the energy-saving spiral tube lamps.

@Dude, the filament is a black body, but the light is tinted by the coating on the glass and/or the reflectors/lampshades/etc.

Also, it’s very easy to get high CRI bulbs; it’s just that people don’t care enough for the other options to stop being sold. In the U.S., Philips has a couple of relevant and commonly available options. There are 8-watt, 800-lumen bulbs at 95 CRI for $4, or you can get 4-watt bulbs of the same brightness with 90 CRI for about 8 dollars. I believe the latter are not made to be fully dimmable, unlike the former, but the efficiency at that CRI is pretty nice.
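The efficiency trade-off between those two options is easiest to compare as luminous efficacy (lumens per electrical watt). A sketch using the bulb figures from the comment; the 3 hours of daily use is a hypothetical number chosen only to show the yearly energy difference:

```python
# Luminous efficacy (lm/W) and yearly energy use for the two bulbs quoted above.
# HOURS_PER_DAY is a hypothetical usage figure, not from the source.
HOURS_PER_DAY = 3.0


def efficacy_lm_per_w(lumens: float, watts: float) -> float:
    """Lumens produced per watt of electrical input."""
    return lumens / watts


def yearly_kwh(watts: float, hours_per_day: float = HOURS_PER_DAY) -> float:
    """Energy used over 365 days at the given daily usage, in kWh."""
    return watts * hours_per_day * 365 / 1000.0


bulbs = {"95 CRI, 8 W": (800.0, 8.0), "90 CRI, 4 W": (800.0, 4.0)}
for label, (lm, w) in bulbs.items():
    print(f"{label}: {efficacy_lm_per_w(lm, w):.0f} lm/W, {yearly_kwh(w):.2f} kWh/year")
```

Same light output at half the input power means roughly 100 versus 200 lm/W; the price of that is five CRI points.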

And uhh, the CFLs we had here were much worse in color than even the early LEDs, back when one LED cost $40 and one CFL cost under half that. Their nominal CRI may have been okay, but the spectrum was still somewhat uneven and tinted.

> but the light is tinted by the coating

Just being tinted doesn’t necessarily change the CRI rating. It’s a measure of whether there are gaps in the spectrum by measuring the light at different evenly spaced color values. Certain coatings will do that, but others apply smoothly over the spectrum, so they merely change the color temperature.

In fact, having specially tinted glass that suppresses the spikes of the spectrum can even improve CRI at the cost of efficiency.

Sure, it’s possible to make a coating that’s not a detriment. It’s not what I’d expect when they were more likely looking to manage heat and make good use of the light. Reflecting back some deep red might improve efficiency very slightly. But hot tungsten really is a perfectly reasonable black body in this spectrum; you’re not making it an even better black body with a coating. You might make it more pleasant if you intentionally deviate towards the pink side of the spectrum, but effectively the instant you put any of these lights in a real room with lampshades, reflectors, coatings, furnishings, etc., none of them result in a scientifically ideal black body spectrum. And the ones using a hot wire are still farther from daylight than an LED of not quite as high CRI but a higher color temperature. And they can be less pleasant than an LED or mix of LEDs that is pinker than a true black body.

New high-end LED bulbs from Philips and Cree have excellent CRI and are under $5 each. It’s the crappy store brand ones that are the problem.

The problem is rather that there’s not that much of a visual difference between the crappy store brand and a high-end LED, and most consumers don’t notice the difference because they don’t have an immediate point of comparison. That’s why the store brand keeps winning and the better offerings remain niche products that aren’t available in regular stores.

To notice the difference, you need two bulbs with the same color temperature and light output, and some printed or painted graphics, and switch between the two. If you turn one light on and wait three minutes, your eye balances for the color discrepancies and it looks “normal” – the colors are just slightly muted with the lower CRI light.

One day I was watching TV and noticed that the image had a different color balance when seen with my left eye vs. my right eye. I got worried that I had some eye disease, but then I noticed that I had a halogen floor lamp shining on the left side of my face and an LED ceiling light towards the right (both specified as 2700K), and the true color of the ambient light on either side of my face was different.

I wonder how much the masking tape/duct tape sales benefited from the blue LED :)

^^ This!

I was literally just looking at the freaking blue power led(!) of my old crappy Samsung “SM SA450” (the one with the firmware bug which bricked it after a certain runtime(?)) and was pleased to see the tiny piece of duck-tape still sticking nicely (pretty sure it’s not the duct tape?).

Same with some HDMI KVM switch. What kind of honks design a device intended to be used in proximity to screens with such bright LEDs?!

I bought a wake-up light once; it must have been the end of the ’90s, because the manufacturer had the unwise idea that it would be great to use blue LED displays (exclamation mark).

And it had two settings for brightness: 1) ‘Eye searing’, and 2) ‘Sleep depriving’.

I did not want to open it up because of the warranty, and I also wanted to keep the option to see the time when I wanted to. So, I created a ‘dimmer’ from duct tape. It had two ‘settings’ for the display: non-visible and visible. :P

Later I did take some time to modify it with an LDR. But the masking tape worked great as a ‘dimmer’.

I also have a 3.5″ hard disk enclosure with a blue LED that will burn your eyes if you accidentally look straight into it. I could use it as a flashlight.

I’m not wholeheartedly happy with blue LEDs. :)

I am told…that the eye has difficulty getting focus on blue light sources. This may be the reason for your (and my) problem with them.

When I was developing products, the arguments over LEDs (color, number, placement and blink pattern) with Marketing were by far the longest and most detailed. Far, far lengthier than any arguments over product function.

Chromatic aberration. It’s caused by the fact that the eye has a simple lens that physically cannot focus red and blue on the same focal plane simultaneously.

When there’s plenty of light, the pupil constricts and the eye becomes more like a pinhole camera which can focus both colors. When there’s very little light, the red cones in your eye become inactive, so the eye focuses on blue. With artificial light, you have the middle case where the red cones are active but the pupil is large because of the lack of brightness, so you’ll have trouble focusing. This is also why the fad of using low wattage “daylight” bulbs indoors is counterproductive – it makes you see worse. With lower color temperature bulbs, you can see more sharply even with less light.

While chromatic aberration is what you call it when optics focus wavelengths differently, in this case scattering has to be considered. There are various kinds of scattering, including that which colors the sky blue, but generally shorter wavelengths are scattered more by any kind of scattering. A monochromatic light would still scatter more if it was blue than if it was red. I’ve used various monochromatic sources from ~380nm to ~850nm, and have observed exactly that – short wavelength sources make it harder to get good contrast, with a point source sometimes able to make my entire field of vision glow a bit, while technically for fine detail / maximum resolution you are putting yourself at a disadvantage with a long wavelength source. Specifics are complicated by the spatial arrangement of cones and rods. The best general light is a broadband source of good brightness, as you might expect.
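The wavelength dependence is steepest for Rayleigh scattering (scatterers much smaller than the wavelength, as in the sky), where scattered intensity scales as 1/λ⁴. A quick sketch of that ratio for a few pairs within the range mentioned above; scattering inside the eye also involves larger structures with a weaker wavelength dependence, so this illustrates the trend rather than modelling the eye:

```python
# Relative Rayleigh scattering strength, which scales as wavelength**-4.
# Assumption: scatterers much smaller than the wavelength; larger scatterers
# (Mie regime) depend on wavelength far less steeply.
def rayleigh_ratio(short_nm: float, long_nm: float) -> float:
    """How many times more strongly short_nm light is scattered than long_nm light."""
    return (long_nm / short_nm) ** 4


# Blue vs red, violet laser vs red, and the extremes of the 380-850 nm range.
for short, long in [(450.0, 650.0), (405.0, 650.0), (380.0, 850.0)]:
    print(f"{short:.0f} nm vs {long:.0f} nm: {rayleigh_ratio(short, long):.1f}x")
```

Under this idealized model, 450 nm blue is scattered a bit over four times as strongly as 650 nm red.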

Other factors may also be involved in this example, but if you’ve ever aimed a common red laser and then a violet laser at the same distant spot in a dark room, you’ll have seen that the violet laser’s spot looks much more fuzzy from a distance, even if it turns out to be the same size once you walk closer.

The cones never “become inactive”, they actually become the most sensitive in dim conditions – but the rods can be even more sensitive when fully adjusted, so that the rods dominate in dark enough light. Also, most people don’t get into dim enough light to rely primarily on their rods for vision – some estimates go up to 30 minutes of waiting without any interruptions of brighter light. The first adaptation is widening pupils, then your cones adapt some, and finally your rods recover. By the way, rods are not truly blind to red, they’re just significantly less sensitive to it than other colors. They can still see and be inactivated by even a deep red or near infrared light, it just takes more red than any other color to produce the same effect on them. People catch themselves out by using too-bright red lights thinking it won’t do any harm.

http://hyperphysics.phy-astr.gsu.edu/hbase/vision/bright.html may be worth mentioning as something I generally agree with.

I don’t design mass-market stuff but the following has served me well….

Green for power “OK”

Red for “error”

Yellow for setting, user-adjustable stuff.

Not too many LEDs visible to the user (if there’s a lot of info to report, consider a screen, 7-seg display, streaming data to the user’s computer etc)

LOTS of SMD LEDs on the PCB for debugging. Green ones for every power rail, yellow ones for anything that changes while the product is running: switches, interlocks, and data that is slow enough to see, e.g. RS-232.

Knowing your power rails are alive/dead and knowing where there is communication across the system will save a lot of fooling around with a multimeter.

Spawned a whole anti-led industry… I have half a sheet left of these: LED Light Blocking Stickers, https://a.co/d/aV2t0NC

Finally I have a face to attach to the horrible blue power indicator LEDs

It’s so odd that lately every article is about a video I watched a couple of days earlier. Are you guys checking my YouTube history? 🤣

I’ve worked in the lighting industry since the late ’90s and was an early adopter of LEDs as a light source.

As easy as it is to poop on Nichia for profiting off Nakamura’s blue LED work, consistent quality white light could not exist without all the innovations around the phosphor mix that is doped over the LED die.

The early days of LED lighting were filled with truly horrible LEDs that produced a rainbow of color variation over their distribution, grossly overestimated lumen output, significant degradation from heat, mechanical failures at the bond wire, wide variations in forward voltage (Vf), and optical distributions all over the map. It took a while for the manufacturers to figure out how to manufacture quality LEDs at scale with color binning, Vf binning, etc., but it wasn’t until consistent color was widely available that LED lighting could become mainstream.
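Binning is simple in principle: measure each part and sort it into a bucket so that parts on one reel behave alike. A minimal sketch of forward-voltage binning; the bin edges, bin names, part IDs and measured values are all hypothetical, and real manufacturers bin on several parameters at once (flux, chromaticity, Vf) using their own datasheet bin codes:

```python
from bisect import bisect_right
from typing import Optional

# Hypothetical Vf bin edges in volts; bin i covers [BIN_EDGES[i], BIN_EDGES[i + 1]).
BIN_EDGES = [2.8, 2.9, 3.0, 3.1, 3.2, 3.3]
BIN_NAMES = ["A", "B", "C", "D", "E"]


def vf_bin(vf: float) -> Optional[str]:
    """Return the bin name for a measured forward voltage, or None if out of range."""
    if vf < BIN_EDGES[0] or vf >= BIN_EDGES[-1]:
        return None
    return BIN_NAMES[bisect_right(BIN_EDGES, vf) - 1]


def bin_parts(measurements: dict[str, float]) -> dict[str, list[str]]:
    """Group part IDs by Vf bin; out-of-range parts go into 'reject'."""
    bins: dict[str, list[str]] = {}
    for part_id, vf in measurements.items():
        key = vf_bin(vf) or "reject"
        bins.setdefault(key, []).append(part_id)
    return bins


# Hypothetical measurements for four parts.
measured = {"led01": 2.95, "led02": 3.12, "led03": 2.91, "led04": 3.41}
print(bin_parts(measured))
```

Parts sharing a bin can then be driven in parallel strings with far less current mismatch than a random mix.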

Nichia invested heavily in securing the raw materials to make the phosphor mixes – buying mines, etc – and is even selling their phosphor to other manufacturers so they can make white LEDs. I say Nichia’s work with the phosphors is as important as the development of the blue LED itself.

While I appreciate the contributions this man made to materials science, physics, and electronics, we were made to suffer that period in history where EVERYTHING had a blue LED on it, even if it didn’t need lighting or an LED on it at all.

Thanks, Marketing!

What about the yellow LEDs? I think I remember them from my childhood in the 70s.

Green + Red = Yellow

Also, now that I know details about Nichia and that Dr. Nakamura works for CREE, I will steer my business towards CREE

No mention anywhere that ‘White’ LEDs are really blue LEDs with a phosphor that emits white. But we are moving to LASER-excited phosphor. Well not in USA, we can’t even have steering headlights (although to be fair the Audi/VW version was always broken and presented a hazard)

>Well not in USA, we can’t even have steering headlights

rong

Tucker did it…

I can remember working in the ’90s and getting a chance to put a blue indicator LED into our product (I believe the LED was made under his patent). It was a big deal back then (still using wheat bulbs for white indicators), we all knew about it reading in the trades (and knew it was only the beginning of a new era with the holy grail of a full color LED finally realizable), but no one we knew had seen such a beast in person.

I’ll never forget the wow factor:

it was dim and meh, very underwhelming.

It’s hard for people now to understand what a big event blue LEDs really were, but even still I’ll always remember what a let down that first one was in person.

Now we play with brilliant individually addressable full color RGBW LEDs without batting an eye, full color led Jumbotrons, LED video walls normal in stores, the LV Sphere. What great times to live in.

Did you just blatantly rip off content from a Veritasium video?

Did you just blatantly ignore that this is a post pointing people TO the Veritasium video?

Basically, employers operate from the standpoint of “make me rich, not you.” Indeed, corporations operate under the model that they pay us so they own us. What we need is a new model: employee ownership. I have worked for many companies that strip their employees of any funding for innovation and then demand innovation. Well, sorry, but you can’t make everything billable, not invest in R&D, and still expect results. The billionaires tell us that there is no free ride, yet that’s what they want from us.