Although it’s generally accepted that synthesized voices which mimic real people’s voices (so-called ‘deepfakes’) can be pretty convincing, what does our brain really think of these mimicry attempts? To answer this question, researchers at the University of Zurich put a number of volunteers into fMRI scanners, allowing them to observe how their brains reacted to real and synthesized voices. The perhaps somewhat surprising finding is that the human brain shows differences in two brain regions depending on whether it’s hearing a real or a fake voice, meaning that on some level we are aware that we are listening to a deepfake.

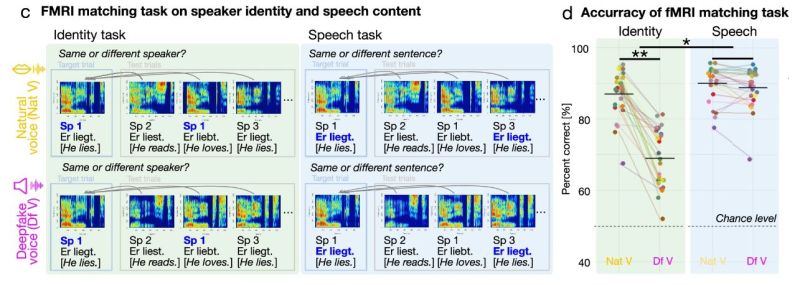

The detailed findings by [Claudia Roswandowitz] and colleagues are published in Communications Biology. For the study, 25 volunteers were asked to judge whether each voice sample they heard was natural or synthesized, and to match it to the identity of the supposed speaker. The natural voices came from four male (German) speakers, whose voices were also used to train the synthesis model. Not only did identity-matching performance crater with the synthesized voices, but the fMRI scans also showed very different brain activity depending on whether the voice was natural or synthesized.

One of these regions was the auditory cortex, which clearly indicates that there were acoustic differences between the natural and fake voices. The other was the nucleus accumbens (NAcc). This part of the basal forebrain is involved in processing motivation, reward and reinforcement learning, and plays a key role in social, maternal and addictive behavior. Overall, the deepfake voices are characterized by acoustic imperfections and do not elicit the same sense of recognition (and thus reward sensation) as natural voices do.

Until deepfake voices get much better, it would appear that we are safe, for now.

OpenAI lawyers will be frantically taking notes.

Slightly off topic, but I have noticed that Snapchat face filters become easily visible if I’ve vaped a certain variety of mimosa tenuiflora extract. Brains are pretty clever!

I wonder if humans can get better at this with some training. You can turn it into a game.

That’s actually a really good idea. A deepfake matching game.

The solution to closing the gap is apparently to put artificiality into everything natural that shapes our day-to-day experience: keep the bit rate low for telephony, video conferencing and recording, and put “AI” denoising into software and hardware, turned on by default.

Maybe it’s just me still using headphones instead of smartphone speakers, but I feel that this is where we’re headed.

“He aspires to make robots as one of the greatest achievements of his technical mind, and some specialists assure us that the robot will hardly be distinguished from living men. This achievement will not seem so astonishing when man himself is hardly distinguishable from a robot.”

-Erich Fromm

Back in the day there was a period when digital and analog TV transmissions co-existed. One morning they switched the analog network off but kept transmitting the signal, now being generated from the digital stream at the transmitter. I remember I was watching the morning news and suddenly the picture went blurry, and it stayed that way until the very end of analog transmissions.

They deliberately degraded the analog image so the digital channels would look better in comparison. Both had the same high-bandwidth, uncompressed digital source stream, so the analog channels would have looked sharp, pretty much like what DTV technology was advertised as, while the actual DTV channels looked like junk because of the high compression ratio needed to multiplex 5-10 channels into the bandwidth of one analog channel. So they made the analog signal look artificially worse, so that when people bought new set-top boxes and switched them on, they would see an improvement in picture quality instead of a downgrade.

Maybe someone has coined a term for this kind of behavior, where every efficiency measure is abused to reduce quality below the original.

Analog TV channels had the quality they had because there was no point in making them deliberately worse. They would still take up the same bandwidth, so it was better to make the best use of the bandwidth with the best picture you could achieve.

Digital TV channels gain their efficiency by throwing away unneeded data, but you can also throw away data that you do need, making the quality and error tolerance worse, to fit in more TV channels with more advertisements.

Would you rather your only channels were the top 3 or 4 news stations, Ancient Aliens, 1-800-buy-junk, and, if you’re really lucky, the Weather Channel and/or PBS? Because the only over-the-air channels that are any good anymore are the secondary channels that digital allows you to include alongside the main channel.

As for quality, I don’t think I had complaints in either direction, switching between analog cable and digital DVDs near the end of the life of my last tube TV. So I’m not sure I would have seen the same difference as you, although maybe your local station did the switchover in a lazy way? I will say that stuff made for 720p and beyond looked pretty bad in the low-res version, but I’m not 100% sure whether it was worse on analog or digital when downscaled.

What’s the difference?

All the channels that had any sort of specialized content went under, because guess what: trying to provide niche topics won’t get you many viewers and advertisers won’t pay you. Now it’s literally the same content on all channels: just reality TV and re-runs of old cartoons, or “How I met your Mother” over and over and over…

You could put all of that in 3-5 channels and you’d still get repetition and empty afternoon slots filled with TV shopping.

>I’m not sure I would have seen the same difference as you

I was watching TV with a capture card on my computer, but yes, I did notice the difference on a Sony tube TV as well. At first, when they digitized the production equipment and started using HD cameras and buying HD content at the beginning of the switch-over, the image quality went up with the better source material. Then it went down as they switched the analog network off and started converting to analog at the transmitter.

Dude: Not what we see here in the USA.

The large increase in the number of channels has led to many niche channels.

IIRC I’ve got three Mexican Bible-thumper channels and an Eastern European one available (and deleted).

But aside from ‘chump’ channels there aren’t many niches. Religious and shop-at-home channels own the secondary channels.

The difference is that if you’re an SD secondary channel, you don’t need to make as much money to stay on the air, so there’s more chance of something worthwhile than if you had to support the entire transmitter on one channel’s income. They might still be dominated by other stuff, but it’s better than nothing.

All this hullabaloo about deepfakes, and it’s still far better to just find somebody who can do good impressions and a decent video editor, both of which have been around forever btw and haven’t ended the world as we know it. I think it’s way more likely that this is getting blown up so that anything embarrassing to certain people in the future can just be blamed on “deepfakes.”

>Of the 30 participants, we included 25 in the final analyses (18 females; mean age 25.36 y, range=19–38 y).

Speech was in German.

They used 2018-era AI to generate speech.

I mean, that’s great that they tried it, but it’s not representative of today.

These younglings use TikTok a lot, and synthetic voices are used in half of the videos (my own guesstimate).

So, can you really say that the conclusion is still correct?

Is there a way to listen to the recordings they used? I’d be curious whether they’re representative of current-level audio deepfakes.

The recordings can be found here:

https://osf.io/89m2s/

Look under “acoustics” then “sounds.” They are stored in two folders: “natural” and “deepfake.” The file names in each folder are the same.

The voices don’t sound the same to me. The “deepfake” doesn’t sound like the “natural” it is supposed to duplicate.

Something I often think about is the story of an early public demo of Edison’s phonograph, where audience members looked behind the curtain because they were so convinced it was a real voice. But modern ears are so attuned to audio production that a wax cylinder is not only unconvincing; it’s literally hard to listen to.

Similarly, when you get a new TV with “motion smoothing” turned on by default, for younger viewers, everything looks like local news and it’s unwatchably distracting, while folks who grew up with B&W TV literally can’t see any difference (hence the term “parents mode”, because only boomers leave this turned on).

In that light, the fact that pretty much everyone can currently tell there’s something off with AI content does not bode well for its long-term prospects. Sure, it’ll improve — but history suggests that human sensitivity to it will improve faster. And getting to the beta stage has already consumed tens of billions of dollars, and most of the world’s accessible content, without any obvious path to profit. If perfect deepfakes were going to happen, we should probably have seen them by now.

>Similarly, when you get a new TV with “motion smoothing” turned on by default, for younger viewers, everything looks like local news and it’s unwatchably distracting, while folks who grew up with B&W TV literally can’t see any difference (hence the term “parents mode”, because only boomers leave this turned on).

I see what you mean to say, but some of Gen X and Y grew up with B&W portable TVs, too, not only boomers!

It may be hard to believe in these days of nasty smartphones, but it’s not that long ago that kids had a little B&W TV and watched free-to-air terrestrial TV.

Be it a battery-operated camping TV/radio combo or a little 14″ portable with an RF jack and telescopic antenna.

This was still the case in the 80s and early 90s, not the 1960s.

Some kids used them as a cheap monitor for their C64 home computer, too.

Anyway, just saying. Little portable TVs with color LCDs were still in their infancy in the 90s.

Just like portable game consoles (Atari Lynx, Sega Game Gear/Nomad, etc.).

It wasn’t until the mid-2000s that cell phone cameras got VGA (!) resolution (640×480).

YouTube continued to be limited to 240p up until ~2010.

It’s really not *that* long ago. :)

When people say ‘Boomers’ they mean older Gen X’ers now too. Kids these days XD

I’m 46. And I did mean literal boomers, though obviously I was speaking in broad strokes anyway. And I didn’t mean that anyone who had seen a B&W CRT (as I have) can’t see motion smoothing. Perhaps I should have said “people who grew up before MTV”, because I think the distinction is whether or not you’re culturally sensitized to different kinds of video and their connotations.

I’m sure people of my parents’ generation can physically /see/ the difference. When they watched Doctor Who in the seventies, they could tell that the indoor scenes were shot on video and the outdoor scenes were 16mm, but they wouldn’t be conscious of it, because it wasn’t part of the language of TV back then. But by the 90s, you had shows like The Day Today or Mr Show where they would deliberately use local-news Betacam footage for effect, and if you weren’t attuned to it you wouldn’t get the joke.

There are probably regional variations, too, which may be why Asian TV manufacturers don’t see the problem.

But the point is, humans learn to distinguish /extremely/ subtle signals when it matters to us, and we haven’t even begun to turn our powers against AI content yet. If it can’t fool us today, it’s never going to fool us once we’re used to seeing it.

>but they wouldn’t be conscious of it, because it wasn’t part of the language of TV back then

The contrasting point was movie theater material that was all on film at a steady 24 frames per second, which created a kind of consistent pacing and rhythm to the action that spelled “big budget Hollywood movie”, or the “proper stuff”. This translated well to movies on TV, only slightly sped up to 25 fps to align with the 50 Hz refresh rate of PAL. No feature-length movie was shot on video unless it was some very low budget direct-to-VHS release.

Switching frame rates between video shot at 50 fps on a studio camera and the grainy images of cheap 16 mm film just came across as “cheap production”. It was your standard local A/V club fare – and it was very noticeable and people were conscious of it. People just kept ignoring it because they liked the show, and it actually turned into a point of nostalgia.
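For a sense of how “slight” that speed-up is, here is a small sketch of the arithmetic. It assumes each 24 fps film frame is simply shown as one 25 fps PAL frame (what is usually called PAL speed-up); the function names are made up for illustration.

```python
# Sketch of PAL speed-up arithmetic, assuming one film frame per PAL frame.
FILM_FPS = 24  # cinema film frame rate
PAL_FPS = 25   # PAL: 50 Hz interlaced fields make up 25 full frames per second


def pal_speedup_factor(film_fps: float = FILM_FPS, tv_fps: float = PAL_FPS) -> float:
    """Ratio by which playback runs faster when film frames map 1:1 to TV frames."""
    return tv_fps / film_fps


def pal_runtime_minutes(film_minutes: float) -> float:
    """Runtime on PAL TV for a film with the given theatrical runtime."""
    return film_minutes / pal_speedup_factor()


factor = pal_speedup_factor()
print(f"speed-up: {(factor - 1) * 100:.2f}%")                         # speed-up: 4.17%
print(f"120 min film -> {pal_runtime_minutes(120):.1f} min on PAL")   # 120 min film -> 115.2 min on PAL
```

In other words, a two-hour movie loses a bit under five minutes of runtime, which most viewers never notice.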

More recent British TV production has actually turned this low-brow production style into a fetish. The technology got better, so they started emphasizing the point by using deliberately crappy props and sets where you can see that everything is just traffic cones and tinsel, and generally making a joke of the production.

>while folks who grew up with B&W TV literally can’t see any difference

It’s not about that. You get used to motion smoothing really quickly. Binge-watch one TV series and it looks normal. Older people just don’t give a s**t about artifacts like that. These are the same people who would watch 4:3 content stretched on 16:9 televisions instead of pillarboxed, because setting the TV up correctly for the content was too difficult to comprehend. They just assumed this is how it works now and got used to it.

If you deepfake Gandhi’s voice yelling “kill the babies”, my brain will surely react distinctly.

AI voice training has accelerated quite a bit since 2018. Until recently (probably due to a takedown), there used to be someone who made YouTube Shorts with an AI voice built from samples of audiobooks narrated by a reader I recognized. I was surprised to hear it. The accuracy was amazing. You could even hear the voice pausing to take a “breath”. The owner of the channel admitted this was AI, not the very talented audiobook reader. If the sample is long enough, the tech to make a reasonable copy is here.