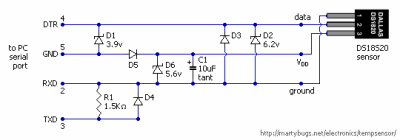

If you’re designing a universal port, you will be expected to provide power. This was a lesson learned in the times of LPT and COM ports, where factory-made peripherals and DIY boards alike had to pull peculiar tricks to get a few milliamps, often tapping data lines. Do it wrong, and a port will burn up – in the best case, it’ll be your port; in the worst case, the ports of a number of your customers.

Having a dedicated power rail on your connector simply solves this problem. We might’ve never gotten DB-11 and DB-27, but we did eventually get USB, with one of its four pins dedicated to a 5 V power rail. I vividly remember seeing my first USB port, on the side of a Thinkpad 390E that my dad bought in the 2000s – I was eight years old at the time. It was merely USB 1.0, and yet, while I never got to properly make use of that port, it definitely marked the beginning of my USB adventures.

About six years later, I was sitting at my desk, trying to build a USB docking station for my EEE PC, as I was hoping, with tons of peripherals inside. Shorting out the USB port due to faulty connections or too many devices connected at once was a regular occurrence; thankfully, the laptop persevered as much as I did. Trying to do some research, one thing I kept stumbling upon was the 500 mA limit. That didn’t really help, since none of the devices I used even attempted to indicate their power consumption on the package – you would get a USB hub saying “100 mA” or a mouse saying “500 mA” with nary an elaboration.

Fifteen more years have passed, and I am here, having gone through hundreds of laptop schematics, investigated and learned from design decisions, harvested laptops for both parts and even ICs on their motherboards, and designed and built laptop mods. Nowadays, I’m even designing my own laptop motherboards! If you ever read about the 500 mA limit and thought of it as a constraint for your project, worry not – it’s not as cut and dried as the specification might have you believe.

Who Really Sets The Current Limit?

The specification originally stated that you aren’t supposed to consume more than 500 mA from a USB port. At some points, you’re not even supposed to consume more than 100 mA! It talked about unit loads, current consumption rates, and a good few other restrictions you would want to apply to a power rail. Naturally, that meant enforcement of some kind, and you would see this limit enforced – occasionally.

On the host side, current limiting had to be resettable, of course, and, at the time, that meant either PTC fuses or digital current limiting – both with their flaws, and a notable price increase – per port. Some bothered (mostly, laptops), but many didn’t, either ganging groups of ports together onto a single limited 5 V rail, or just expecting the board’s entire 5 V regulator to take the fall.

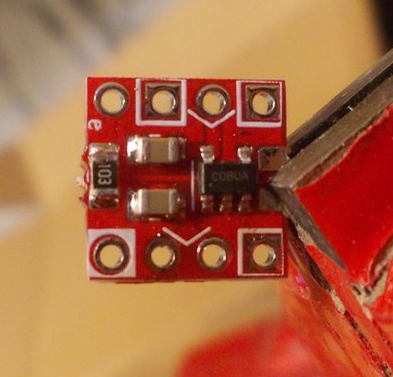

Even today, hackers skimp on current limiting, as much as it can be useful for malfunctioning tech we all so often hack on. Here’s a tip from a budding motherboard designer: buy a good few dozen SY6280s, they’re 10 cents apiece, and here’s a tiny breakout PCB for them, too. They’re good for up to 2 A, and you get an EN pin for free. Plus, it works at 3.3 V, 5 V, and anything in between, say, a single LiIon cell’s output. Naturally, other suggestions in comments are appreciated – SY6280 isn’t stocked by Western suppliers much, so you’ll want LCSC or Aliexpress.

Another side of the equation – devices. Remember the USB cup warmer turned hotplate that required 30 paralleled USB ports to cook food? It diligently used these to stay under 500 mA. Mass-manufactured devices, sadly, didn’t.

Portable HDDs wanted just a little more than 2.5 W to spin up, 3G modem USB sticks wanted a 2 A peak when connecting to a network, phones wanted more than 500 mA to charge, and coffee warmers, well, you don’t want to sell a 2.5 W coffee warmer when your competitor boasts 7.5 W. This led to Y-cables, but it also led to hosts effectively not being compatible with users’ devices, and customer dissatisfaction. And who wants complaints when a fix is simple?

It was also the complexity. Let’s say you’re designing a USB hub with four ports. At its core, there’s a USB hub IC. Do you add current consumption measurement and switching on your outputs to make sure you don’t drain too much from the input? Will your users like having their devices randomly shut down, something that cheaper hubs won’t have a problem with? Will you be limiting yourself to way below what the upstream port can actually offer? Most importantly, do users care enough to buy an overly compliant hub, as opposed to one that costs way less and works just as well save for some edge cases?
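To make the hub designer's dilemma concrete, here is a minimal sketch of the bookkeeping a "compliant" bus-powered hub would have to do. The 100 mA figure for the hub's own draw and the function names are assumptions made up for illustration, and the "switch off the hungriest port first" policy is just one possible choice, not something the specification prescribes.

```python
# Sketch: does a four-port bus-powered hub fit into its upstream budget?
UPSTREAM_BUDGET_MA = 500   # nominal USB 2.0 limit discussed in the article
HUB_OWN_DRAW_MA = 100      # assumed draw of the hub IC itself (illustrative)


def remaining_budget_ma(port_draws_ma, upstream_ma=UPSTREAM_BUDGET_MA,
                        hub_ma=HUB_OWN_DRAW_MA):
    """Return the mA left over upstream; a negative value means over budget."""
    return upstream_ma - hub_ma - sum(port_draws_ma)


def ports_to_shed(port_draws_ma, upstream_ma=UPSTREAM_BUDGET_MA,
                  hub_ma=HUB_OWN_DRAW_MA):
    """Greedy policy: switch off the hungriest ports until the hub fits."""
    draws = list(port_draws_ma)
    shed = []
    # Visit ports from the largest measured draw to the smallest.
    order = sorted(range(len(draws)), key=lambda i: draws[i], reverse=True)
    for i in order:
        if remaining_budget_ma(draws, upstream_ma, hub_ma) >= 0:
            break
        shed.append(i)
        draws[i] = 0  # this port is now switched off
    return shed


if __name__ == "__main__":
    print(remaining_budget_ma([100, 100, 100, 100]))  # four low-power devices
    print(ports_to_shed([500, 100, 0, 0]))            # one hungry HDD
```

With four 100 mA devices the budget is exactly used up; plug in a single 500 mA drive and the hub has to cut it off, which is precisely the "devices randomly shut down" experience that cheaper hubs avoid by not checking at all.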

Stretching The Limit

500 mA current monitoring might have been the case originally, but there was no real need to keep it in, and whatever safety 500 mA provided came with bothersome implementation and maintenance. The USB standard didn’t expect the 2.5 W requirement to budge, so it initially had no provisions for increasing it, apart from “self-powering”, aka having your device grab power from somewhere other than the USB port. As a result, both devices and manufacturers pushed the upper boundary to something more reasonable, without an agreed-upon mechanism on how to do it.

USB ports, purely mechanically, could very well handle more than 0.5 A all throughout, and soon, having an allowance of 1 A or even 1.5 A became the norm. Manufacturers would have some current limits of their own in mind, but 500 mA was long gone – and forget about the 100 mA figure. Perhaps the only place where you could commonly encounter 500 mA was step-ups inside mobile phones, simply because there’s neither much space on a motherboard nor a lot of power budget to spend.

Smartphone manufacturers were in a bind – how do you distinguish a port able to provide 500 mA from a port able to provide 1000 mA, or even 2 A outright? That’s how D+/D- shenanigans on phone chargers came to be – that, and manufacturers’ greed. For Android, you were expected to short data lines with a 200 Ohm resistor, for Apple, you had to put 2.2 V or 2.7 V on the data pins, and if you tried hard enough, you could sometimes use three resistors to do both at once.

Bringing The Standard In Line

The USB standard group tried to catch up with the USB BC (Battery Charging) standard, and adopted the Android scheme. Their idea was – if you wanted to do a 1.5 A-capable charger, you would short D+ and D-, and a device could test for a short to check whether it may consume this much. Of course, many devices never checked, but it was a nice mode for smartphones specifically.

When you’re making a device with a LiIon that aims to consume over an amp and be produced in quantities of hundreds of thousands, safety and charger compatibility are pretty crucial. A less common but nifty charging mode from the BC standard, CDP (Charging Downstream Port), would even allow you to do USB2 *and* 1.5 A. Support for it was added to some laptops using special ICs or chipset-level detection – you might have had a yellow port on your laptop, dedicated for charging a smartphone and able to put your phone’s port detection logic at ease.
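As a rough illustration of how a device might tell these BC port types apart, here is a toy model of the two-step detection as I understand it: drive one data line with a small voltage and watch whether the other line follows. The voltage values are nominal assumptions, `port_response` is a made-up stand-in for real hardware, and a real implementation would also need timing and data contact detection, so treat this as a sketch rather than a reference.

```python
from enum import Enum


class PortType(Enum):
    SDP = "standard downstream port"   # plain USB 2.0 data port
    CDP = "charging downstream port"   # data plus up to 1.5 A
    DCP = "dedicated charging port"    # D+ shorted to D-, no data


V_SRC = 0.6        # volts the device drives onto one data line (assumed nominal)
V_DAT_REF = 0.325  # comparator threshold (assumed mid-range value)


def port_response(port, driven_line):
    """Toy model: voltage seen on the *other* data line for a given port."""
    if port is PortType.DCP:
        return V_SRC   # lines are shorted, so the other line simply follows
    if port is PortType.CDP and driven_line == "D+":
        return V_SRC   # a CDP answers on D- when it sees D+ being driven
    return 0.0         # SDP, or CDP when D- is driven: the line stays low


def detect(port):
    # Primary detection: drive D+, watch D-. No response means a plain port.
    if port_response(port, "D+") < V_DAT_REF:
        return PortType.SDP
    # Secondary detection: drive D-, watch D+. Only a short passes it through.
    if port_response(port, "D-") > V_DAT_REF:
        return PortType.DCP
    return PortType.CDP


# Current a device may draw once it knows the port type.
CURRENT_LIMIT_MA = {PortType.SDP: 500, PortType.CDP: 1500, PortType.DCP: 1500}

if __name__ == "__main__":
    for p in PortType:
        print(p.name, "->", detect(p).name, CURRENT_LIMIT_MA[detect(p)], "mA")
```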

Further on, USB3 took the chance to raise the 500 mA limit to 900 mA. The idea was simple – if you’re connected over USB2, you may consume 500 mA, but if you’re a USB3 device, you may take 900 mA, an increased power budget that is indeed useful for higher-speed USB3 devices more likely to try and do a lot of computation at once. In practice, I’ve never seen any laptop implement the USB2 vs USB3 current limit checking part; however, as more and more devices adopted USB3, it did certainly raise the bar on what you could be guaranteed to expect from any port.
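The 100 mA, 500 mA and 900 mA figures all fall out of the "unit load" bookkeeping mentioned earlier. A small sketch, assuming the commonly quoted values of a 100 mA unit load with up to five loads for USB2 and a 150 mA unit load with up to six loads for USB3, with a single unit load allowed before the host has configured the device (the function and table names are mine):

```python
# Unit-load arithmetic behind the 100 / 500 / 900 mA figures.
UNIT_LOAD_MA = {"usb2": 100, "usb3": 150}   # size of one unit load
MAX_UNIT_LOADS = {"usb2": 5, "usb3": 6}     # loads allowed once configured


def allowed_current_ma(bus, configured):
    """Current a bus-powered device may draw, per the unit-load rules."""
    loads = MAX_UNIT_LOADS[bus] if configured else 1
    return loads * UNIT_LOAD_MA[bus]


if __name__ == "__main__":
    for bus in ("usb2", "usb3"):
        for configured in (False, True):
            print(bus, "configured" if configured else "unconfigured",
                  allowed_current_ma(bus, configured), "mA")
```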

As we’ve all seen, external standards decided to increase the power limit by increasing voltage instead. By playing with analog levels on D+ and D- pins in a certain way, the Quick Charge (QC) standard lets you get 9 V, 12 V, 15 V or even 20 V out of a port; sadly, without an ability to signal the current limit. These standards have mostly been limited to phones, thankfully.

USB-C-lean Slate

USB-C PD (Power Delivery) has completely, utterly demolished this complexity, as you might notice if you’ve followed my USB-C series. That’s because a device can check the port’s current capability with an ADC connected to each of the two CC pins on the USB-C connector. Three current levels are defined – 3 A, 1.5 A and “Default” (500 mA for USB2 devices and 900 mA for USB3). Your phone likely signals the Default level, your charger signals 3 A, and your laptop either signals 3 A or 1.5 A. Want to get higher voltages? You can do pretty simple digital communications to get that.

Want to consume 3 A from a port? Check the CC lines with an ADC, use something like a WUSB3801, or just do the same “check the PSU label” thing. Want to consume less than 500 mA? Don’t even need to bother checking the CCs, if you’ve got 5 V, it will work. And because 5 V / 3 A is a defined option in the standard, myriad laptops will effortlessly give you 15 W of power from a single port.
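For the ADC route, the check boils down to comparing the CC pin voltage against three windows. The sketch below assumes a sink with the usual 5.1 kΩ pull-down and uses voltage windows as I recall them from the Type-C specification, so verify them against the spec before relying on them. The function names are made up, and "Default" is mapped to the conservative USB2 figure of 0.5 A.

```python
# Sketch: classify the source's current advertisement from CC pin voltages.
# Windows are (min volts, max volts, advertised amps); values are assumptions
# to be checked against the USB Type-C specification.
VRD_WINDOWS = [
    (0.25, 0.61, 0.5),   # "Default" USB power (taken here as the USB2 figure)
    (0.70, 1.16, 1.5),   # 1.5 A advertisement
    (1.31, 2.04, 3.0),   # 3 A advertisement
]
VBUS_VOLTS = 5.0


def classify_cc(volts):
    """Return advertised amps for one CC pin, or None if outside all windows."""
    for low, high, amps in VRD_WINDOWS:
        if low <= volts <= high:
            return amps
    return None  # unconnected pin, VCONN, or a reading between windows


def advertised_current_a(cc1_volts, cc2_volts):
    """Only one CC pin is wired through the cable; use whichever one reads."""
    for volts in (cc1_volts, cc2_volts):
        amps = classify_cc(volts)
        if amps is not None:
            return amps
    return None


if __name__ == "__main__":
    amps = advertised_current_a(1.7, 0.0)   # example ADC readings in volts
    print(amps, "A ->", VBUS_VOLTS * amps, "W")
```

Readings that land between windows are treated as unknown here; a real design would likely debounce the measurement and fall back to the Default level.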

On USB-C ports, BC can still be supported for backwards compatibility, but it doesn’t make as much sense to support it anymore. Proprietary smartphone charger standards, raising VBUS on their own, are completely outlawed in USB-C. As device designers have been provided with an easy mechanism to consume a good amount of power, compliance has become significantly more likely than before – not that a few manufacturers aren’t trying to make their proprietary schemes, but they are a minority.

“Having a dedicated power rail on your connector simply solves this problem. We might’ve never gotten DB-11 and DB-27,”

We *did* actually get mixed power/data D-subs: they’re called “mixed contact D subminiature” with configurations like “3W3” and “7W2” where the first number is the total number of contacts and the second (after the W) being the number of ‘large’ contacts (nominally power, but there are actually coax inserts as well). The power contacts are *absurd* amp scale (like, tens of amps).

Bit surprising they’re not more commonly known outside of a few industries since they’re nice connectors: the shell sizes are standard D-subs so you can make a custom cable with a nice backshell for an affordable amount, but they don’t have staggered insertion or anything so they’re not nearly as good as later designs. But if you’re not intending them to be hot-pluggable or anything it’s a good option.

https://adamconn.com/product/high-voltage-connectors-solder-cup-7w2-for-upeu-huawei

40 amp. Voltage not given, but “High voltage”. If that were 48V you could hook a tea kettle to it.

Oh, they’re way higher voltage than that. I’ve never used it for anything over ~300V but they’re proofed to 1 kV.

The NorComp & Harting ones I’ve used have been rated for absolute max 1kV for the high current contacts (20-40A). The HV ones can be had with up to 5kV rated coaxial contacts, but at a much lower current. They tend to be used for signal & RF where you want the RF and a bunch of control signals in one package.

The RF inserts are great, and basically the only ones you can buy that are multi vendor: Norcomp, Harting, Molex FCT, and several other companies (although they tend to be not stocked). Plus you can just buy the cable portion preassembled from Pasternack for a reasonable price.

There are custom (single source) multigang RF connectors (e.g. from Samtec). They all suck. Badly.

The craziest thing is I could never find a standard name or anything for it, so I just had to buy some and try them.

And on a side note – don’t forget Firewire. It came with 8 to 33 V DC, 1500 mA, max. 48 W – enough to typically power audio / video devices, harddisks etc. of that era. And it was way faster than USB of the same time.

oh yeah, Firewire was nice! sadly, if I remember correctly, killed by Sony due to not opening it up in the same way USB was?

But Sony called it i.Link, I think ?

FireWire was an open standard. iLink was based on FireWire with proprietary Sony extensions. USB2 is what killed FireWire. USB 2 could do 480 Mbps. Original FireWire was limited to 400 Mbps.

What killed FireWire is that the market it targeted was very weird. It was faster than USB but slower than internal busses (e.g. Fibre Channel/SATA/SAS) and even slower than GbE.

The devices that started out using FireWire (video transfer) quickly outstripped its capabilities.

FireWire also had more smarts in the devices versus usb which used the smarts in the host (cpu) which made FireWire devices more expensive.

Yeah, this gets mentioned often, but I think it’s a red herring: for FireWire’s use cases (medium bandwidth moderate distance data transfer), both the peers already *had* brains anyway. You’re not often generating gigabit/s of data from a dumb device. But USB’s original speeds were tiny, and in those cases you absolutely wanted the complicated parts in the host.

But if you look at the actual design of FireWire, it just wasn’t a good option to move to higher speeds. Data strobe encoding just isn’t an efficient way to transmit data high speed, since you need 2 signals to transmit 1 bit, so 50% encoding overhead. Obviously FireWire moved away from this in the early 2000s but that introduced the “alpha/beta/bilingual” confusion, as opposed to USB and Ethernet which just negotiated to higher speeds.

That being said, as I mentioned above I don’t think anything would’ve saved it: it was always just a very weird market.

Not at all correct. Firewire was from Apple, and Sony (as they like to do) bastardized it by coming out with a bullshit version with a different connector that lacked power pins.

Sony has a long history of undermining industry standards. Another example is S/PDIF, which bastardized AES/EBU digital standards just enough to mess up compatibility in some cases.

After making a mess of Firewire, Sony attempted the same thing with its spiritual successor: Thunderbolt. Sony came out with a laptop that finally incorporated Thunderbolt, but they crammed it into a regular USB-A connector instead of the mini-DisplayPort connector everyone else was using.

And let’s not forget their pathetic attempt to keep shoving MemoryStick down customers’ throats long after everyone else standardized on SD.

Go back even farther to analog days. Sony’s Betamax video tape system was superior to VHS. Sony tried, I once heard, to limit what content could be distributed on the tapes, excluding the ‘adult’ variety. Betamax lost the consumer market and was relegated to pro studio use.

Superior by what standard? VHS had longer run times, and that’s why VHS won. Both formats added picture-quality enhancements over time, VHS usually improving first.

There was also the U-matic format, which I think was most likely in studios.

It wasn’t superior – Sony didn’t have any magic sauce technology – they simply made a different compromise between video bandwidth and tape run time, and refused to budge on that choice until VHS took over the market. Then they had to backpedal on that choice and both turned out pretty much exactly the same in terms of picture quality.

What killed Betamax was the fact that JVC licensed the VHS technology to other manufacturers at reasonable prices, who then went on to make cheaper players and tapes, while Sony did as Sony does and milked the cow to death. Sony didn’t let other manufacturers make cheaper players in fear of “diluting the brand”, which meant that nobody bought Betamax players because they were so expensive.

I wished USB never had existed. It’s such an unprofessional thing, turned PCs into toys..

I remember how I’ve seen an USB “rocket launcher” desktop gadget that uses USB merely for power.

That was over 20 years ago. Back then I felt this was such an affront to any respectable PC user.

The concept reminded me of these ugly Game Boy Color lamps that would leech power from the GB Link Port.

Even back then, I felt uncomfortable about tapping power from a computer port. That’s not it’s purpose!

Now we’re in the 2020s and even the silly EU requires USB to be the standard phone charger plug.

Such a bad decision, I think. USB was never meant to be a power plug. That’s what the barrel connector is for.

Really, USB is very silly. The plugs, the protocol, the bad naming schemes..

I wished it hadn’t existed. At least not in this form. The plugs are fragile, have no locking mechanism etc.

Very unprofessional. Even the cheap RJ45 western connector is better here, even if it’s a far cry from Centronics with its metal clamps.

would you have been happier about a barrel jack “rocket launcher”?

Good question! I have to think about this! :D

That would not have been a computer peripheral but just a regular toy.

Parallel port! You could launch 8 rockets at once!

Well, USB may not be “professional” as you say, but it solved many problems and brought many people and designs together. Just think about the power of having a single cable (ok, USB has at least four of them, I know) to rule them all.

I understand. It was also the zeitgeist, maybe. Back then, plug’n’Play (aka plug&pray) was a huge thing at the time. Windows 95 really had bragged with auto-detection features.

It’s still sad that consumer tech has overrun PC industry.

Let’s imagine a PC, a workstation or server with an HDMI port, rather than VGA, DVI or Display Port.

HDMI is like the modern day equivalent to the clunky SCART connector found on European TVs.

It’s an end user, a consumer’s technology. It just doesn’t belong there (on a PC).

Just like a black, glossy flat screen doesn’t belong in the server cabinet.

Such things have different use cases and requirements, simply.

USB isn’t being opto-coupled, for example. It can’t handle shorts. Has no surge protection.

Real ports like RS-232 can handle shorts and different voltages (+-12v usually, some -+24v).

The 16550A FiFo has protective diodes, also. It can be safely tinkered with. It doesn’t die easily.

Many industrial devices have pull-up transistors in the serial interface or an opto-coupler, at least.

USB has neither. It directly talks to another micro-controller inside the device.

Then there’s RFI/TVI. USB causes a lot of electromagnetic noise. It’s also very sensitive so same thing.

Someone who has ever used a transceiver in close proximity of an USB device can tell a story.

Sometimes USB devices vanish and reappear if someone pushes the PTT (transmit key on microphone).

Really, I think USB is a cheap sunshine technology. Firewire wasn’t perfect by any means, but a bit more mature here.

It was more like an Ethernet connection, with shielded cables and stuff.

optocoupling on USB doesn’t make sense to me? I’m also not sure modern USB can’t handle shorts but I’m not going to demonstrate 😂

It was more of a thing when houses had poor wiring with things like outlets “grounded” to the neutral instead of a real separate ground, so you could easily have significant voltage differences between appliances depending on which outlet you plug them in.

USB devices were originally designed for computer peripherals that work off of isolating transformers only (simple wall-warts), or are plugged into the computer only, so no ground loops can form. However with cheap switching mode power supplies, the ground isolation isn’t so great and you can run into issues.

Why wouldn’t it make sense? MIDI has optocoupling, and USB should totally have. It’s quite common to fry USB ports, believe me, I’m proof of that.

“MIDI has optocoupling, and USB should totally have.”

You design to the typical use case, and extend to the niche cases. MIDI’s *typical* use case benefits from optocoupling because it’s dealing with cases where even tiny ground differentials can matter.

USB’s typical use case doesn’t benefit from isolation. Yes, you can fry USB ports due to improper grounding, but not in the typical use case – if you’re in a situation where the grounding is questionable, you can (and should) buy USB isolators. You could argue that self-powered devices/hubs should’ve been required to have USB isolation, but requiring it for all devices just adds cost to devices where it’s not needed.

Interesting thought experiment is whether or not you could have required ground fault detection during negotiation, but no matter what you’re going to have an upper limit on fault protection.

>Yes, you can fry USB ports

It’s not even about that. USB devices stop working over slight glitches in ground levels, so you have to keep re-plugging your devices. I’ve seen this with USB data loggers where some device that’s supposed to keep running for months just stops every n-days because the USB hub that is actually powering the device is connected to a different outlet than the PC that is recording the data.

“It’s not even about that. USB devices stop working over slight glitches in ground levels, so you have to keep re-plugging your devices.”

That’s why I said it would’ve been an interesting idea if the spec had required common-mode fault detection or something. Maybe at the host, since the brains are there and then the host could inform the user that the target device may require USB isolation to operate correctly or something.

Requiring optoisolation for a spec like USB is just overkill – you can’t solve every problem for everyone. For bus-powered devices that are *likely* to encounter common-mode shifts, they should be the ones optoisolating, since they need it.

It’s also worth noting that many USB devices are, in general, horribly designed anyway: years ago we tried to find USB hubs that could be chained to support a total of 64 total USB drives. I literally have a drawer full of at least 10 different vendors USB hubs, and *all* of them violate some of the hub IC’s design rules, varying from routing over ground planes to not even routing the USB signals as differential pairs at all.

But the problem is that in the *typical* use case – just plug a single hub into a port – all of those hubs worked. That’s the downside to cheap + high performance: you lose on reliability.

You lost me when you dissed the Scart port. Who wouldn’t want a full RGB interface on their consumer CRT for full, arcade-like video glory?

SCART was kinda the USB-C of the 90’s. Multiple video standards (composite, S-Video, component, RGB) in a single port, but you never knew which device supported what.

I appreciate the critique of USB, you point out some good stuff.

The thing is that there is no worldwide dictator waving a scepter who decides what standards manufacturers of devices and peripherals use. As is usually the case with absolute authority, that would be a good thing if they were benevolent and wise, but otherwise we have to deal with what the market settles on. We could be (and have been) in a much worse position than now.

I personally do not miss the absolute mess of power and data cabling standards of years past. The newer USB standards have brought incredible versatility to a single port. The market wants a single, powerful data/power port, and I’m glad we have something as useful and robust as usb-c, instead of 15 competing options with varying merits.

Is it perfect? No, as you point out. But it really does get the job done, up to and including power and data sufficient for modern eGPUs. I have not had a problem with modern USB, and if the issues you list really mattered to a manufacturer they would roll a new connector to meet the specific needs for their device. Problem is they would lose market share to competitors who offer the universal connection option. I certainly wouldn’t consider buying a laptop, phone, or other computer/peripheral that decided to use a non-usb data or power connection.

If you get a time machine up and are able to change the course of events we might get something better, but I’m happy where we are at – imperfect as it may be, USB is finally becoming a true universal standard.

Problem with having a dictator go back in time and change things for the “better”… is that usually they’re better for the dictator, not so much for other people.

USB has to be many things to many people, and there are bound to be countless people who critically depend on things you personally hate.

> Then there’s RFI/TVI. USB causes a lot of electromagnetic noise. It’s also very sensitive so same thing.

Argh. Still having nightmares about aviation-grade USB fueled cameras and various sensors.

You make some good points.

And there is one way to judge how good something is – how successful is it? An athlete who wins the world title three years in a row is good at their sport. More people have purchased the Ford F-150 than any other vehicle, so objectively it is a good design – it’s the one people choose.

How successful has USB been? Do people use it?

I meant to say – I personally may not be a fan of that particular athlete. But I have to acknowledge that they are good, if they’ve won three world titles.

Since you are here, of all places, what makes you think success relates to design quality? That’s just a utopian fantasy.

What’s successful, is successful, and that can (and does) easily crush better designs.

Corn Syrup has also been incredibly successful in the US. As has recreational fentanyl. Both are objectively very bad.

People choosing something can be related to quality, but just as often it’s having the right marketing campaign, misinformation or good old-fashioned lack of understanding.

Remember Monster Cables? Very, very successful at getting people to buy their insanely overpriced cables that provided little to no real benefit over more reasonable ones.

I prefer Mexican (real sugar) sodas and traditional heroin, thank you very much.

Your complaint is about multiple different things. I’m glad that the average Joe can’t fry their PC accidentally when building it just by plugging in the PSU anymore, what to speak of configuring any input or output devices.

Actually maybe you’re right? Who needs $5000 video cards and AAA games? Most of that stuff is overrated. I’m pretty sure I still would have got into this same stuff. I dunno about professionalism, you lost me there, but I don’t think I would miss Nvidia and all that’s come with that.

The sheer stupidity of a rectangular, featureless connector is mind-boggling. And if that weren’t enough, then came the parade of mini- and micro-bullshit versions. These clowns learned nothing from the SCSI connector fiasco.

And now we still have devices coming out with USB-A plugs as their only power connector (looking at you, Sonicare toothbrush). So not only do you have to carry a goddamned USB adapter around, but it’s for a deprecated port.

You can always solder a different adapter on if you prefer a 5v barrel or something else. I have way more USB power options than anything else, so I’m more likely to convert things TO usb, but maybe you got a lot of mains to dc barrel convertors in your kit

heh the connector is a legitimate complaint. i more or less solved it by putting a little dab of nail polish on one side of each plug, and on the same side of each jack. it’s the “up” side on most cellphones so i don’t have to mark them.

The top or front side is the one with the USB logo. You can feel it with your fingers, don’t even have to see it. It takes an especially clueless engineer to mount the port the wrong way.

And it’s not like the non-rectangular connectors (DP, HDMI) aren’t just rectangular enough that to see which way the port is on the monitor you’re plugging it into, you have to pull your whole setup away from the wall…

Regarding DE-9 and the rest of the D-sub series… They’re horrible for anything that gets hot-plugged (the shell on the plug can fit inside the shell on the socket, which means the shell shorts pins when misaligned), the pins are unprotected, and their ubiquity meant that you could never be sure what signals and voltages are on any random connector. Could be standard serial (at which voltage?), could be hundreds of volts balanced DC power on some random scientific equipment.

“and their ubiquity meant that you could never be sure what signals and voltages are on any random connector.”

That’s because it’s a connector, not a protocol. It’s like saying “10 pin headers are terrible, you never know what signals are on any random connector.” Well, yeah. I shouldn’t have to write “NOT A SERIAL PORT” on every D-sub connector connection I have, I just need to write “TO DEVICE X.”

That’s the difference with USB: it’s a spec, and when I abuse and use USB connectors (because they’re fantastic cables for the price) I *do* have to write “NOT A USB PORT” on them because I *expect* people to think they’re USB devices.

Barrel connectors could be any voltage. We had desk phones that used a 24 volt adapter that was same size of some other devices people had. A number of devices got fried with 24 volts.

The Japanese standard is the bigger the diameter, the higher the voltage. So if you see a 5.5mm connector with a yellow tip, that’s 12v..

Or someone just picked the yellow tip because it’s prettier than black.

Until 96Boards decided to specify the use of the EIAJ-3 connector (meant for 6.3-10.5V) to supply 8-18V…

And center-positive (most) or center-negative (some).

As much as I dislike designers and marketing, engineers left unchecked produce unusable products which sell for ludicrous prices

you want it to be steam powered too?

Yes, but only if it has an adjustable relief valve.

haha wow. i love usb. i think having a standard connector for low voltage DC is handy. microusb as a charging standard for small devices is a godsend. “That’s not it’s purpose” — no, it’s purpose is literally “universal”. “usb powerbank”. no true data on the whole device and it’s so useful, only $20!

works with apple, pc, and android. can charge a laptop! it’s a steal. it’s amazing.

I’m sad that the Centronics port is mostly gone; having an easily software-accessible parallel port is a great hacker’s feature. Alas, it’s bulky, making it hard to incorporate into laptop and smaller machines.

I’ve never broken a USB connector, but I’ve snapped the plastic tab off several RJ45s.

A locking connector is not always good. When I trip over a cable, I’d far rather have the cable come loose than have the computer or peripheral come flying off my desk.

i feel like when god closed the door on parallel ports he opened the window on usb-to-parallel adapters :)

i don’t do a ton of hardware hacking but the last 6 times i’ve wanted bitbanged i/o, i’ve used: an lpt parallel port TWICE, an stm32 dev board hanging off of usb, a raspberry pi on ethernet, a pic12 listening to an audio jack with a low pass filter on it (decoding ~100bps serial), and a raspberry pico rp2040 on usb.

of those, raspberry pico is my favorite and parallel port is my least favorite. i didn’t find it to have favorable electrical characteristics (i spent a lot of time struggling with noise, i guess due to my ignorance), and it always required doing all of the timing of bit banging in my pc. with an stm32 or rp2040, the actual timing-dependent i/o can happen in the arm core with good real time constraints, instead of my host pc.

“A locking connector is not always good. When I trip over a cable, I’d far rather have the cable come loose”

This is exactly the reason why USB doesn’t have extremely high retention. It *does* have positive retention – what do you think those holes on USB type A plugs are for? Look inside the plug – those spring fingers seat into the holes, and hold it in place. It’s just not a very high retention force, because in USB’s typical use case (temporary connections) you *do not want* strong retention. (All USB types have some retention latches in them).

If you have the *rarer* use case where you want a permanent connection, you handle the positive retention yourself. USB type C has a locking standard, so you can just use that, or you can use any of the various industrial USB connectors out there that offer higher retention.

Every time the design of USB is brought up *someone* complains about the fact that it doesn’t lock, and it drives me nuts. It does have retention mechanisms, they’re intentionally low, and there are alternatives if that’s not what you want.

Complaining about robustness is a separate issue, although I feel the people who long for barrel jacks are absurdly lucky that they somehow magically haven’t worn any of those out – I *absolutely* have.

One of our products has a USB charging port. I was told to make it a MicroUSB. Customers were regularly breaking it, so in production, they started putting JB Weld around and over it to strengthen it. They break it less often now, but they still manage to break it.

I also started out with the thinking that without adding intelligence to negotiate a higher voltage and power, we should be able to get 5V and 500mA. Nope. The common consumer connectors and thin wires made it so I had to limit the current to 250mA to keep the voltage from drooping so low that my microcontroller that was controlling the battery charging (among other things) would go into brown-out reset. I changed the design a bit on Rev.A to prevent the reset, but I still had to hold the current down.

I hate USB though, for several reasons. There are 11 kinds of mutually incompatible connectors. So much for the “Universal” part of “Universal Serial Bus.” I have several USB cords plugged into my PC, and yet the one I need is always one of the other several on my desk. You can’t make up your own connectors and cables on the workbench. Also, one port normally only goes to one device. So much for it being a “Bus.”

You’re writing in 2024. If your device doesn’t have USB-C, hot-glue an adapter to it and make it USB-C.

Yes, it’s not a bus architecture. Use CAN, or I2C, if that’s what you’re after. It’s meant for easy connections, not for cases where the user has to know that the last device needs a terminating resistor added/enabled.

The mutually incompatible connectors exist for a good reason. I get livid when I see someone has decided their particular device has to use USB-A to USB-A cables. Want to talk about ground loops, how about power loops! There’s a reason those suckers are verboten.

And yes, the USB micro-B plug is weak and the micro-B-with-USB3-pins is stupid (given that this is on the peripheral side, making it backwards-compatible with plain micro-B is kinda meh… There’s a reason the device needs high speed). We’ve gotten over it. I’ve yet to break a USB-C connector, while micro-B and especially barrel jacks stopped working routinely.

USB is great for what it is. It sounds like you’re griping about what it’s not, instead of choosing something suitable for your use case.

sigh. USB plugs have latching mechanisms, they’re right in the sides, you can see them. They just don’t have high retention force, on purpose.

There is a locking USB standard as well, you can buy the cables.

Wait, charge your phone using a DB-25 and see how long it takes

Transfer a few gigs of audio or video at a theoretical max of 1MBps

And that’s ECP mode

Standard SPP mode is slower

USB has a limit of 16 feet IIRC. For RS-232, 115.2Kbps is readily achievable on CAT5 UTP cable over 325 feet. If you need more speed, you can go to RS-422 or RS-485 which are differential (balanced). They can go both much farther and much faster than RS-232. RS-422 can do 10Mbps at 40 feet, and RS-485 can do 35Mbps at 33 feet, which is nearly three times the 12Mbps of full-speed USB, and at twice the distance. Both RS-422 and RS-485 can go at least 90kbps at 3/4 mile.

And there’s a reason all of those are being replaced with ethernet even in industrial applications.

For inter-device use, there’s USB-C (dead simple, ubiquitous) and there’s Ethernet (dead simple, slower, longer, just as ubiquitous). I’ll admit CAN has its uses in industrial and automotive, too (simple, deterministic, prioritised, cheap-as-chips).

For intra-device use, there’s I2C, SPI and PCI-E. Or plain logic, single-ended or LVDS.

And if anyone wants to mention HDMI or DP? Just use USB-C. It’s capable.

That’s all the wired standards one needs in the 2020s, and if you can’t manage with those in your device, then either you’re making something so niche that the general public won’t ever see one, or you’re incompetent.

USB is for the general public. The fact that you use hardware meant for the general public in whatever industrial “professional” environment you’re in just proves how good it is.

Using consumer hardware in a professional setting just proves how cheap the management is.

The price difference is a factor of 10, which seems like a lot on the face of it, so the management thinks it’s cheaper to have an intern press reset and unplug some cables every couple weeks than buy the right stuff for the job.

Without usb we wouldn’t have usb drives and would still be carrying floppy disks. Can’t hate usb?

Barrel jacks are so awesome. A few days ago, I plugged in a 48V supply into my 19V Intel NUC frying it. They were both barrel jacks. So much fun.

“Further on, USB3 took the chance to raise the 500 mA limit to 900 mA. The idea was simple – if you’re connected over USB2, you may consume 500 mA, but if you’re a USB3 device, you may take 900 mA, an increased power budget that is indeed useful for higher-speed USB3 devices more likely to try and do a lot of computation at once”

Not just a “higher-speed USB3 device”, but also an ordinary USB 1.1/2.0 device such as an EPROM programmer.

My TL866 runs more stably on a USB3 port, for example.

Anyway, USB3 did solve quite a few issues, I do admit this.

It doesn’t fix the fundamental issues with USB nature, though.

I still think that USB wasn’t completely thought-through back in the mid-90s.

Remember “Windows 95 with USB”? USB 1.0 was a bug fest and merely useful for keyboards/mice.

And even here it sucked. The latency and the concept (polling) were worse than with a DIN or PS/2 port.

It caused unnecessary CPU and memory overhead. The BIOS had to emulate PS/2 ports, even.

And this emulation caused a lot of trouble with Windows 9x.

Because, once the USB controller was detected, the PS/2 emulation was gone.

That’s especially great if Windows was being freshly installed:

Windows 9x asked then for the USB drivers, but both keyboard and mouse were gone.

So the user never could finish the installation. Windows wanted user input.

The only quick workaround was to borrow a PS/2 mouse and keyboard from a friend and continue installation.

Provided that the PC still had these ports. By the late 90s/early 2000s quite a few “legacy free” PCs arrived.

They were solely equipped with, you guessed it, USB ports! Great!

Boy, I can’t put into words how annoying USB used to be.

It was the enemy that took away these old, but reliable ports.

If there’s hell, it surely has USB wall plugs. :D

heh i can’t relate. possibly, i was late to the usb festival because in the 90s i was always broke. but my first memories of usb were just the astonishment at everything just-working (plug and prayers answered) or at least working after you track down the floppy with the driver on it. and i have never considered it one way or the other but just incidentally all of the motherboards i’ve bought have had the legacy PS/2 ports. the only problem i have had is there’s a bunch of marginal devices (i think all aiming towards usb 1.0 / 1.1) that don’t reliably enumerate…as recently as a decade ago i would have to plug / unplug several times until it works.

one thing i decidedly love about usb is that its data operations tend to be inherently safe-ish (and well-abstracted) from a software perspective. you don’t tend to hard-lock the whole system by mis-implementing your DMA buffers like you would with userspace PCI drivers. as a result, linux can expose most USB functionality through /dev/bus/usb/ and you can share that with unprivileged accounts using udevd. it’s frankly awesome how easy it is to access usb from userspace these days. a program that uses libusb to flash the rp2040 pico board is only 700 lines of straightforward C source. an afternoon of work, starting from not even knowing the API.

certainly by the mid 2000s, decently intelligent usb hardware was everywhere and the overhead of having the OS participate in the polling and so on was mostly ameliorated.

i sure was surprised to look in the usb standard just last year though and see all the polling. it works really well in practice though.

can’t say that for ps/2. i’m still not sure of the cause but if you spend all day hot swapping ps/2 keyboards and mice then eventually you’ll fry the 5V line coming off the motherboard.

i actually think this article is kind of inaccurate where it suggests that a lot of motherboards lack adequate short circuit protection. it might be hacks with marginal / linear response to overload or what have you. but usb is clearly very robust to a huge amount of abuse. and, again, driver chips are so ubiquitous now…the generic bus interface ASIC cell that they threw into your southbridge does a good job. i’ve never burned out a usb port.

oh and! probably the most unsung success of usb is cd / dvd drives that last forever! i bought i think 5 cd-rom drives that each failed after at most 2 years, and then i bought an external usb dvd drive and it’s lasted 20 years. turns out the only thing killing them was my case / power supply fan pulling dust through them all day long. external drive win!

I have burned out quite a few USB ports throughout my lifetime! The USB-A standard, iirc, defines that any pin can be shorted to any other pin without consequences; in practice, it’s not impossible to kill a CPU by shorting 5V to one of the data lines, especially something like a Pi Zero – that kills one alright.

Wasn’t there a hackaday post of a USB device that purposely kills USB ports by effectively shorting all the pins, and sending an inductive spike across all the pins?

There’s a real bad USB

If your computer is shitty enough it may kill the entire mobo….

Yeah, but that’s like, hundreds of volts. There’s no interface in existence that can protect someone from a dedicated-enough attack. Even optocoupling has limits.

Well, Pi Zero is a really bad example of designing to a cost. It’s easy to kill one, but they were supposed to be so cheap that you wouldn’t care. Nowadays, the design precautions haven’t changed, but the cost has…

I deliberately said “precautions”, not “quality”. It’s well designed. It’s just designed for a user that will blame themselves, not the Pi Foundation, if they short something.

Well, where’s your better standard?

USB is pretty much a COM port and DB-25 rolled into one port, faster and with a lower rail

And data is sent serially over a differential pair (this also adds immunity to EMI and rail noise)

Get faster speeds but no worrying about driving an 8bit port and having to wait until all bits are stable in state before handshaking

You can say USB is a uart that runs over a differential pair

And requires a sort of chip to act like a modem

The funny thing is USB standard has been around since the Atari 400/800

The Sio port operates like a USB port over a DB-13

You get hardware clk line, 3 hardware handshakes, a rx/tx 5v ttl (rs232 compatible),

5v, 12v, and an additional gpio pin for controlling cassette drive motors

Plug and play compliant, supports daisy chaining and hubs like USB

Even the protocol is similar in design

Atari “Universal Serial Input/Output Bus” is a mouthful

USB just combines the handshakes, clk, and data as multiplex signals over differential pairs

So in a way it’s actually similar to Ethernet

There were several RS-232 hacks which were almost standards, but nobody was around to pick one of the contenders. A couple of flavors of RJ45 socket qualify (at least one of which was compatible with RJ-11 plugs if only TX, RX, and GND were used). And +5V power on pin 1 or pin 9 are both supported by nearly all barcode readers.

“Here’s a tip from a budding motherboard designer: buy a good few dozen SY6280’s, they’re 10 cents apiece, and here’s a tiny breakout PCB for them, too.”

Did you forget a link here? The SY6280 seems relevant to my interests…

ohh my bad, yes, here’s the link too for good measure!

Here you are good man:

https://vi.aliexpress.com/item/1005005848706899.html?spm=a2g0n.productlist.0.0.312f742bBUOdGh

On this site you can find many options for the same component or others. I’m pretty sure that they sell a ready-made board with this chip, for the purposes mentioned by the article’s author, but I don’t know the name.

The shipping takes a good while (from China) but it’s reliable.

OMG, I’ve been wanting to write an article like this for years but never got around to it. I would cringe every time I saw “but you can’t draw more than 500mA.”

A couple decades ago I worked at Gateway on their desktop computer design team. Gateway’s motherboards were customized versions of the Intel retail boards that existed at the time.

They did have overcurrent protection, but it was just a PTC auto-resetting fuse. USB ports were grouped in pairs with a 1 Amp PTC fuse. So the two front panel USB ports were on one fuse, the two stacked with the Ethernet jack were on another fuse, and I believe the 4-stack USBs on the back had one fuse for the top two and one for the bottom two.

I believe all the retail version Intel motherboards sold off the shelf at stores like Best Buy were done this way as well, since that is what Gateway’s boards were based on.

And remember, a 1A fuse does not open at 1A, it passes 1A indefinitely.

The other side of all these PTC fuses was just directly connected to the 5V rail on the motherboard.
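To make the "1A fuse passes 1A indefinitely" point concrete, here is a minimal sketch of what a shared PTC means for a pair of ports. The 1 A hold current comes from the comment above; the 2 A trip current is an assumption (polyfuse trip ratings are commonly around twice the hold rating, but check the datasheet), and `ptc_state` and `port_pair_state` are hypothetical helper names.

```python
HOLD_A = 1.0   # hold current from the comment: passed indefinitely
TRIP_A = 2.0   # ASSUMED trip current, roughly 2x hold for many polyfuses


def ptc_state(total_current_a: float) -> str:
    """Classify a steady load on one PTC fuse."""
    if total_current_a <= HOLD_A:
        return "holds"            # fuse never opens
    if total_current_a < TRIP_A:
        return "indeterminate"    # may or may not trip, depends on temperature and time
    return "trips"                # expected to open eventually


def port_pair_state(port_a_amps: float, port_b_amps: float) -> str:
    """Two ports share one fuse, so only the sum matters."""
    return ptc_state(port_a_amps + port_b_amps)


# One port idle, the other pulling twice the nominal 500 mA: still fine.
print(port_pair_state(0.0, 1.0))
# Both ports at 900 mA: above hold, below the assumed trip point.
print(port_pair_state(0.9, 0.9))
```

The takeaway under these assumptions: nothing in such a design enforces 500 mA per port, only a loose ceiling on the pair.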

I think that paired 1 amp PTC fuse came from a reference design from either a host or a hub, can’t remember which. It was *everywhere*.

Even worse than the fact that PTC fuses have a steady-state draw, that draw is (of course!) temperature dependent, and if you connect the fuse directly to the power plane and heatsink the board… yeah, you can guess where this is going.

The inrush current from the USB port can go over the limit of your computer’s USB port, so a current limiter is preferred on your device. If you happen to have a low-quality computer, with just some fuses on the motherboard for the USB ports, then an unpleasant surprise awaits you.

“The inrush current from the USB port can go over the limit of your computer’s USB port, so a current limiter is preferred on your device.”

Inrush current limiting (soft start) is just a generically useful thing to have. It mostly comes from ceramic caps being so cheap nowadays that people just throw them everywhere – the issue is that their ESR is so low that they can draw amps at startup, and as if the high current draw weren’t enough… what people often forget is that with a long enough cable (or enough inductance), that dI/dt generates a *voltage*, too: and the voltage can be high enough to destroy the input cap!

It’s incredibly easy to blow up a poorly designed RF bias-tee this way.
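A rough way to see the overvoltage effect described above is to treat the cable inductance, the input capacitor and the total series resistance as a series RLC circuit hit by a voltage step. The sketch below uses the standard second-order overshoot formula; the component values are hypothetical, and it assumes an ideal source and a linear capacitor (real ceramics lose capacitance under DC bias, which tends to make things worse).

```python
import math


def hotplug_peak_voltage(v_in: float, l_h: float, c_f: float, r_ohm: float) -> float:
    """Peak capacitor voltage when a series R-L-C is hit by a step of v_in."""
    # Damping ratio of a series RLC circuit.
    zeta = (r_ohm / 2.0) * math.sqrt(c_f / l_h)
    if zeta >= 1.0:
        return v_in  # critically damped or overdamped: no overshoot
    # Fractional overshoot of an underdamped second-order step response.
    overshoot = math.exp(-math.pi * zeta / math.sqrt(1.0 - zeta ** 2))
    return v_in * (1.0 + overshoot)


# Hypothetical values: 5 V supply, 1 uH of cable inductance,
# 10 uF ceramic input cap, 0.1 ohm total series resistance (ESR + wiring).
peak = hotplug_peak_voltage(5.0, 1e-6, 10e-6, 0.1)
print(f"peak voltage on the input cap: {peak:.2f} V")
```

With very little series resistance the peak approaches twice the supply voltage, which is why a 6.3 V rated cap on a 5 V input can be in trouble, and why soft start or a bit of added damping helps.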

It always seems really sketchy to me when people push amps of current through a tiny point contact in a plastic plug that cannot dissipate heat effectively.

The contact resistance must be no more than about 50 milli-Ohms, or you’ll see significant heating and power loss at the connector at 3 Amps – enough to potentially melt the connector. You’ll also see a significant voltage drop at the end of the cable, which is usually made out of hair-thin wires to save on cost anyways.

THAT is why USB peripherals should not draw much current and why such connectors are really not designed for power delivery. At 500 mA the contact and cable losses are negligible, while at 3 Amps you’re really pushing the envelope of what such cables can do.

And don’t forget extensions. AWG20 cable is about 33 mOhms per meter going one way, 66 mOhms both ways, so you’re losing about 0.6 W of power for every meter of your cable running at 3 Amps.
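The numbers in the comments above are plain I²R arithmetic, sketched here using only the figures quoted there (33 mOhm per metre per conductor, a 50 mOhm contact, 500 mA versus 3 A). The function and constant names are just for illustration.

```python
def resistive_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Power dissipated in a resistance: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


AWG20_OHM_PER_M = 0.033   # one conductor, figure from the comment above
CONTACT_OHM = 0.050       # contact resistance ceiling from the comment above

for amps in (0.5, 3.0):
    # Current flows out on VBUS and back on GND, so count the wire twice.
    cable = resistive_loss_w(amps, 2 * AWG20_OHM_PER_M)
    contact = resistive_loss_w(amps, CONTACT_OHM)
    print(f"{amps} A: {cable:.3f} W per metre of cable, {contact:.3f} W per contact")
```

Because the loss scales with the square of the current, going from 500 mA to 3 A multiplies the heating by 36, not by 6.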

>”With contact resistance taken into account, it can be seen that it is difficult to meet requirements at high currents of 2A and 2.4A. As a result, cables only up to about 50cm can be used with 24AWG” … “at 500mA (the original spec), it can be seen that it is possible to meet the stringent USB voltage requirement at every length with 20AWG wire, and 2m with 24AWG (probably by design).”

https://goughlui.com/2014/10/01/usb-cable-resistance-why-your-phonetablet-might-be-charging-slow/
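For a feel of how quickly usable cable length shrinks with current, here is a minimal sketch of the voltage-drop side of the same calculation. The per-metre values are approximate figures for copper wire; the 30 mOhm contact resistance, the count of four contacts and the 0.5 V drop budget are assumptions chosen for illustration, so the results will not match the measurements in the linked post.

```python
# Approximate resistance of one copper conductor, ohms per metre.
OHM_PER_M = {"20AWG": 0.0333, "24AWG": 0.0842}

CONTACT_OHM = 0.030   # ASSUMED resistance per mated contact
CONTACTS = 4          # VBUS and GND, each through a connector at both ends


def loop_resistance(gauge: str, length_m: float) -> float:
    """Round-trip resistance: out on VBUS, back on GND, plus contacts."""
    return 2 * OHM_PER_M[gauge] * length_m + CONTACTS * CONTACT_OHM


def voltage_drop(gauge: str, length_m: float, current_a: float) -> float:
    """Voltage lost between the host port and the device."""
    return current_a * loop_resistance(gauge, length_m)


def max_length_m(gauge: str, current_a: float, budget_v: float) -> float:
    """Longest cable that keeps the drop within budget_v (0 if impossible)."""
    wire_ohm = budget_v / current_a - CONTACTS * CONTACT_OHM
    return max(0.0, wire_ohm / (2 * OHM_PER_M[gauge]))


BUDGET_V = 0.5  # hypothetical allowed drop, pick your own
for gauge in OHM_PER_M:
    for amps in (0.5, 2.0):
        length = max_length_m(gauge, amps, BUDGET_V)
        print(f"{gauge} at {amps} A: up to {length:.2f} m")
```

Note how the contacts alone eat a fixed share of the budget at high current, which is the effect the quoted passage attributes to contact resistance.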

The reason why high current delivery to phones etc. “works” is because they basically ignore the voltage drop. When you’re charging a phone or a tablet, the battery starts from around 3 Volts empty and rises towards 4.3 Volts, so you can afford to lose a couple of volts along the way. The current will diminish towards the end of the charge (CC-CV charging), so the voltage will rise enough for a full charge.

I very much agree!

And then there was PoweredUSB… 😬 https://en.wikipedia.org/wiki/PoweredUSB

This is actually a nice article. Well written and informative. Interesting read.

I have an Asrock motherboard with a kind of unique feature, a “special” USB port with a higher output current rating for phones.

Thanks for the article!

Definitely going to be adopting these kinds of Low-voltage current-limiting load switches. Way more convenient for ensuring one’s 5V device can’t overload a host/supply than the old combo of Diode with PTC. Just one tiny SOT-23-5, a limit setting resistor and done. Cheaper too if you can find SY6280.

A Western alternative to the SY6280 would probably be the TI TPS22950. Fulfills the same role of a Current/Polarity limiting load switch for <5.5V devices and comes in a SOT-23-6 Package. Though they are a bit more expensive at ~70 cents. Not that you need more than one per supply-in

Thanks Foxhood, that TPS22950 is a useful part number.