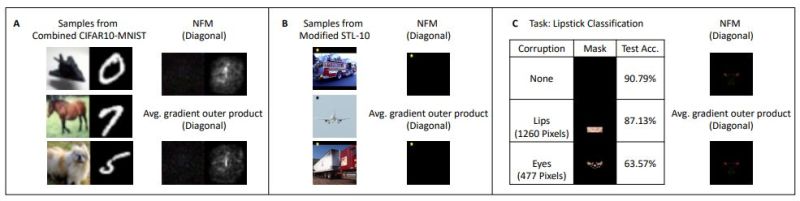

Artificial Neural Networks (ANNs) are commonly used for machine vision purposes, where they are tasked with object recognition. This is accomplished by taking a multi-layer network and using a training data set to configure the weights associated with each ‘neuron’. Due to the complexity of these ANNs for non-trivial data sets, it’s often hard to make head or tails of what the network is actually matching in a given (non-training data) input. In a March 2024 study (preprint) by [A. Radhakrishnan] and colleagues in Science an approach is provided to elucidate and diagnose this mystery somewhat, by using what they call the average gradient outer product (AGOP).

Defined as the uncentered covariance matrix of the ANN’s input-output gradients averaged over the training dataset, this property can provide information on the data set’s features used for predictions. This turns out to be strongly correlated with repetitive information, such as the presence of eyes in recognizing whether lipstick is being worn and star patterns in a car and truck data set rather than anything to do with the (highly variable) vehicles. None of this was perhaps too surprising, but a number of the same researchers used the same AGOP for elucidating the mechanism behind neural collapse (NC) in ANNs.

NC occurs when an ANN gets overtrained (overparametrized). In the preprint paper by [D. Beaglehole] et al. the AGOP is used to provide evidence for the mechanism behind NC during feature learning. Perhaps the biggest take-away from these papers is that while ANNs can be useful, they’re also incredibly complex and poorly understood. The more we learn about their properties, the more appropriately we can use them.

I’ve been really fascinated with understanding how different parts of a neutral network contribute to a network as a whole. In my opinion the easiest way to start to understand the different parts of a network is to just make it way smaller. The thinking here is that once we can shrink and understand a network we (thankfully) can transition out of the realm of computer science and take out our dynamical modeling and systems engineering toolbox.

Trading spatial complexity for temporal complexity is an interesting path i.e. fewer but more complicated nodes:

https://doi.org/10.1016/S0893-6080(05)80125-X

(Currently doing a thesis related to such a topic but a hardware based climax)

One approach I tinkered with many years ago was a MoE style, each agent was trained for a highly specific task with it’s result feeding into another. This allowed for better understanding of what was going on in the overall model. Added benefit that it was also easier to train the individual small components on gaming level GPU memory sizes — downside is there were a lot of models you had to retrain if you made a significant change, so proper system pipeline design became a must (being very selective on what becomes dependent).

There’s been a few other papers recently on trying to develop tools or methods to extract more of the “why” out of the black box, since parameter count has exploded and most older methods (confusion matrix, etc.) are no longer feasible.

I’d recommend checking out the YouTube channel TwoMinutePapers .. great short breakdowns.

With the growing amount of AI generated data on the internet, neural collapse is becoming a real problem. Training AI on datasets that include AI generated data from the previous generations of AI is effectively AI incest.

Yeah, and it’s not an easy thing to fix now that the cat is out of the bag and running wild.

The dataset that existed before the mainstream release of the major LLM’s might be the last “mostly human” dataset. Everything from social media to corporate websites are being polluted.

I will keep my copy of Encarta ’99 just in case!

Sounds like libraries are about to become very profitable thanks to their back catalogues.

While I agree, what you described is model collapse. Neural collapse has to do with the internal representation of ANN classifiers.

Bring back the Confusion Matrix !!!

“Cryptocurrency mining, mostly for Bitcoin, draws up to 2,600 megawatts

from the regional power grid—about the same as the city of Austin.

Another 2,600 megawatts is already approved.”

AI power consumption issue?