For one-off projects, adding a few integrated circuits to a PCB is not too big of a deal. The price of transistors is extremely low thanks to Moore and his laws, so we’re fairly free to throw chips around like peanuts. But for extremely space-constrained projects, huge production runs, or for engineering challenges, every bit of PCB real estate counts. [g3gg0] falls into the latter group, and this project aims to remove the dedicated USB-PD module from a lighting project and instead bit-bang the protocol with the ESP32 already on the board.

The modern USB power delivery (PD) protocol isn’t quite as simple as older USB ports that simply present a 5V source to whatever plugs itself into the port. But with the added complexity we get a lot more capability including different voltages and greater power handling capabilities. The first step with the PD protocol is to communicate with a power source, which requires a 1.2V 600kHz signal. Just generating the signal is challenging enough, but the data encoding for USB requires level changes to encode bits rather than voltage levels directly. With that handled, the program can then move on to encoding packets and sending them out over the bus.
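To make the "level changes encode bits" idea concrete, here is a minimal Python sketch of biphase mark coding (BMC), the line code USB-PD uses on the CC line. It is an illustration, not [g3gg0]'s code: the function name `bmc_encode` and the representation of the signal as a list of half-bit levels are assumptions made for this example.

```python
def bmc_encode(bits, start_level=0):
    """Encode data bits as BMC, returning one line level per half bit.

    Every bit cell begins with a level change. A 1 adds a second
    change in the middle of the cell; a 0 holds the level.
    """
    level = start_level
    half_bits = []
    for bit in bits:
        level ^= 1              # transition at the start of every cell
        half_bits.append(level)
        if bit:
            level ^= 1          # extra mid-cell transition marks a 1
        half_bits.append(level)
    return half_bits


if __name__ == "__main__":
    # An alternating 0/1 pattern, similar in shape to a sync preamble.
    print(bmc_encode([0, 1, 0, 1]))
```

Because the information sits in the transitions, the receiver does not care about the absolute polarity of the line, only about where the edges fall.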

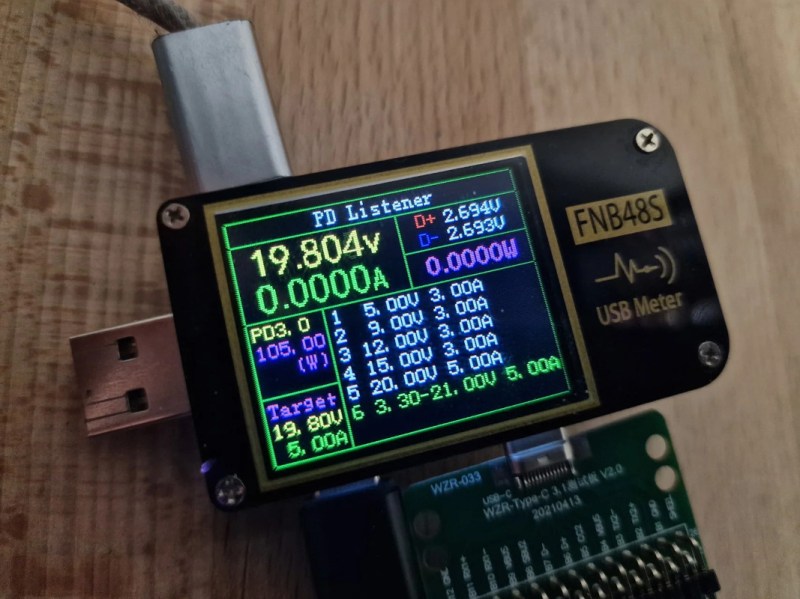

After everything is said and done, [g3gg0] has a piece of software that lets the ESP32 request voltages from a power supply, sniff and log PD communication, and inject commands with vendor defined messages (VDM), all without needing to use something like a CH224K chip which would normally offload the USB-PD tasks. For anyone looking to save PCB space for whatever reason, this could be a valuable starting point. To see some more capabilities of the protocol, check out this USB-PD power supply that can deliver 2 kW.

This comment is intended to start a discussion rather than to criticize anyone, and especially not the project’s author, who, on the contrary, deserves praise for doing so much diligent research, and then blogging about it for our benefit! With that caveat, here goes…

IMHO, bit-banging communication protocols is fraught, especially in the face of jitter introduced when processor-interrupts are enabled. It can take considerable validation efforts to make sure a bit-banged transmitter is actually adhering to the spec under all circumstances. To be fair, bi-phase encoded data is not rocket science, but 600kHz is still kind-of fast to bit-bang reliably. It is often impossible to cycle-count on modern RISC processors, so you have to use timers. (But then, is that really “bit-banging” in the traditional sense?) And can you get into the timer interrupt routine in a deterministic number of clock cycles?

A decade back, when bit-banging low-speed USB protocols was popular, the best that could be said was that these implementations were not future-proofed. They’d work okay until one day they wouldn’t (like when USB3 arrived on the scene).

An embedded software wizard friend once tried to productize a communication protocol he developed in an academic setting. What seemed reliable in the lab was not reliable enough for a product. Fortunately, since it was his private protocol, he had the luxury of slowing it way down. That worked!

What makes you think USB 3 would change anything? It’s backwards compatible and can’t add additional specifications for existing protocols.

This isn’t a hypothetical: a lot of older devices with bit-banged USB implementations – and even pretty sophisticated interrupt-driven software USB implementations – didn’t play well with modern USB3 controllers when they first came out.

This affected (and may still affect) a lot of very popular and well-known devices like the USBtinyISP and some common attiny-based dev boards.

It’s not about the spec, or what’s added: In a lot of USB3 controllers, the implementation of the older protocols lost something significant: forgiveness.

Older controllers didn’t mind as much if the timing was a bit sloppy on a bit-banged USB 1.1 protocol, or if the spec was off in other subtle ways.

I hear what you’re saying, and I really appreciate that top caveat. There needs to be caution here. But these attempts are going to be made. Not just on Hackaday, but the world over.

These good, cautious write-ups are paving the way to make this fraught task easier. The responsible commenters (Tim, looking at you) are helping call out the risks to elevate the level of safety for the hacker that is doing their homework. As these disclosures happen, and potentially successful avenues are charted, we’re paving the way towards successful implementations.

There’s still a ton of manufactured dangers around USB-PD (watching my aunt’s USB-C cable smoke during Christmas, as she plugged in some back-alley AliExpress kid’s tablet). But we have to applaud the folks that are doing the tough work (I gave up on bit-banging after making some funky I2C work, and that protocol has been around for freakin’ ever).

Hi,

No offense as well and I fully understand your point :)

Maybe you were a bit quick and had not read the blog post; the HaD title picked up the term “bitbang”, which did not exactly pinpoint what I did.

I did not exactly bitbang it, although this was my initial question in the blog post:

“why not bitbang PD-protocol with the ESP32 and save precious PCB space? Is this even possible?

Or can we use a peripheral for this task?”

and later:

“or we can use the wonderful RMT peripheral, which gives us a sequence of levels and their duration”

Now we could discuss if “getting high/low durations from a peripheral” is considered bitbanging.

I’d say no, but it’s neither bitbanging nor native, so we end up in a meta-discussion here.

It is still a bit timing critical as I use the CPU to switch IO directions before/after transmission.

(To clarify it for further readers, I added an “Instead of bitbanging” when selecting the peripheral)
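For readers wondering what "a sequence of levels and their duration" buys you on the receive side, here is a rough Python sketch of turning such durations back into bits. It assumes the peripheral hands over one duration per stretch of constant level, in any consistent time unit, and that the half-bit time is known; `bmc_decode` and its parameters are hypothetical names for this illustration, not the blog post's code or the ESP-IDF API.

```python
def bmc_decode(durations, half_bit):
    """Decode BMC from the lengths of consecutive constant-level runs.

    A run of about two half bits is a 0. Two consecutive runs of
    about one half bit each form a 1. Levels themselves are not
    needed, only the spacing between edges.
    """
    threshold = 1.5 * half_bit   # midpoint between short and long runs
    bits = []
    i = 0
    while i < len(durations):
        if durations[i] >= threshold:
            bits.append(0)       # one long run: no mid-cell transition
            i += 1
        else:
            # A short run must be followed by another short run.
            if i + 1 >= len(durations) or durations[i + 1] >= threshold:
                raise ValueError("unpaired short pulse at index %d" % i)
            bits.append(1)
            i += 2
    return bits


if __name__ == "__main__":
    # Run lengths for the bit pattern 0, 1, 0, 1 with half_bit = 1.
    print(bmc_decode([2, 1, 1, 2, 1, 1], half_bit=1))
```

The 1.5x threshold is a simple design choice that tolerates some timing error on either side; a real receiver would also have to deal with the idle line before and after the packet.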

sorry, should have been a reply to Tim McNerney’s comment.

Driving the CC lines with 1.7V or even 3.3V seems a bit brutish to me when 1.2V is specified.

How about using two 74AUP1G125 (or ‘126) to drive the two CC lines instead? That would allow them to be driven properly with a low-impedance CMOS signal of 1.2V high, 0V low or high-impedance when the other side is sending. The inputs of the 74AUP1G series gates can be driven with up to 3.6V, so they have no problem with a 3.3V input signal from the ESP32.

You can get them in SOT363-5 for like 5 cents per piece from LCSC, or even go down to DFN1010 if that should be too large.

You’d need a small 1.2V voltage regulator too of course.

Yeah, as written in the post, it was an option.

In this case, I wanted to build it with minimal BOM – transistor and resistor.

The absolutely bare minimum to not fry things and still be functional.

Regarding the 3.3V on the CC lines: they may be pulled up towards 5V and will be driven at 5V / 200mA when providing VCONN to an eMarker.

So electrically the voltage level must not be a problem for any source involved.

Another option was to use weak outputs creating ~1.6V, which is closer to the 1.2V and far below the end device clamping of 2.9V. That feels safer but turned out to be not as critical.

At most, it is violating the PD protocol :)

Did you implement the collision avoidance strategy of adjusting the CC voltage when it’s OK for the SNK to talk, and setting it back when the SRC is about to talk?

No,

I have implemented two “strategies”

1. Send directly, without waiting, when there was a packet that the UFP is required to answer.

2. Send delayed for all other packets, after checking whether activity is detected (RMT detects pulses).

The latter is not perfect: it is delayed quite a bit, and collisions lead to retransmission (implemented).

So the whole thing is (knowingly) not production-proof.

Good enough for tinkering however.
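As a rough illustration of those two strategies, here is a Python sketch of the control flow only. Everything in it is hypothetical: `transmit`, `line_busy` and `acked` are stand-in callbacks for whatever the hardware layer provides, and the retry and wait limits are arbitrary values chosen for the example, not numbers from the blog post or the PD spec.

```python
def send_packet(transmit, line_busy, acked, is_reply,
                max_retries=3, max_waits=100):
    """Send one packet, retrying if no acknowledgement arrives.

    is_reply=True  -> strategy 1: answer immediately, no waiting.
    is_reply=False -> strategy 2: wait until the line looks idle.
    Returns True once acknowledged, False if all attempts fail.
    """
    for _ in range(1 + max_retries):
        if not is_reply:
            waits = 0
            while line_busy():       # poll for activity on the line
                waits += 1
                if waits > max_waits:
                    return False     # line never went idle, give up
        transmit()
        if acked():                  # no ack is treated as a collision
            return True
    return False


if __name__ == "__main__":
    log = []
    ok = send_packet(lambda: log.append("tx"), lambda: False,
                     lambda: True, is_reply=False)
    print(ok, log)
```

Polling for idle only narrows the collision window rather than closing it, which is why the retransmission path is still needed.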

Does anybody know why they made the USB PD implementation so unfriendly? I mean, they could have just used I2C (or something like it), which is easy to bit bang (which this project is not doing, as it is using the RMT) even on a dumb 8-bit processor.

The BMC protocol is very tolerant of clock speed variations. It actually has a header that lets you set your clock.

It’s also a balanced protocol, for what that’s worth. You can do it with the SPI bus and a few logic blocks.

I’ve done pseudo bit banging with a 600kbps SPI output. I’ve also used CLBs to preprocess the data input into SPI in order to read the input. This is on -MHz to 24MHz MCUs that aren’t fast enough to bit bang anyway. There are several examples about this on the web.
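To sketch how the SPI trick can work: if the SPI clock runs at the half-bit rate, each SPI bit carries one BMC half bit, so an encoded packet is just a byte buffer shifted out on the data pin. The Python below only shows the packing step and is an assumption-laden illustration: `pack_half_bits` is a hypothetical helper, and MSB-first shifting and padding with an idle level are choices made for this example, not details from the comment.

```python
def pack_half_bits(half_bits, idle_level=0):
    """Pack BMC half-bit levels (0/1) into bytes for an SPI TX buffer.

    Assumes the SPI peripheral shifts each byte out MSB first. The
    final byte is padded with idle_level so the line rests afterwards.
    """
    padded = list(half_bits) + [idle_level] * (-len(half_bits) % 8)
    out = bytearray()
    for i in range(0, len(padded), 8):
        byte = 0
        for level in padded[i:i + 8]:
            byte = (byte << 1) | level   # first half bit ends up in the MSB
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    # Half-bit levels for the BMC-encoded bit pattern 0, 1, 0, 1.
    print(pack_half_bits([1, 1, 0, 1, 0, 0, 1, 0]).hex())
```

The attraction of this approach is that the timing comes from the SPI clock rather than from CPU instruction timing, so interrupts cannot add jitter mid-packet.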

Hi, I am trying to get a 12V output from a USB-C plug by using the CH224K, which has the following datasheet: https://www.laskakit.cz/user/related_files/ch224ds1.pdf. I want to use the “6.3. Connect CH224K with a Type-C male port” construction because I don’t want to use the D+ and D- pins of the USB-C port. I only need 12V and want to keep it as clean as possible.

But the datasheet only gives the setup for 20V, and there is no explanation of what to do for 12V in the case that does not use D+ and D-. How should I proceed, or what should I look for?